Pyspark

Note: If you think the blog is good, don’t forget to like and collect it. I will update the content related to artificial intelligence and big data every week. Most of the content is original, Python Java Scala SQL code, CV NLP recommendation system, etc., Spark Flink Kafka, Hbase, Hive, Flume, etc. are all pure dry goods, and the interpretation of various top conference papers makes progress together.

Today continue to share with you about Pyspark_SQL3

#博学谷IT Learning Technical Support

Article directory

foreword

Continue to share Pyspark_SQL3 today.

1. Film analysis case

- Requirement 1: Query the average score of users

- Requirement 2: Query the average score of movies

- Requirement 3: Query the number of movies with a score greater than the average

- Requirement 4: Query the user who scored the most times (>3) in high-scoring movies, and find the average score of this person

- Requirement 5: Query the average score, minimum score, and maximum score of each user

- Requirement 6: Query the top 10 average scores of movies rated more than 100 times

from pyspark import SparkContext, SparkConf

from pyspark.sql import SparkSession

from pyspark.sql.types import *

import pyspark.sql.functions as F

def method01():

# 需求1:查询用户平均分

df.select("userid", "score").groupBy("userid").agg(

F.round(F.avg("score"), 2).alias("u_s_avg")

).orderBy("u_s_avg", ascending=False).show()

def method02():

# 需求2:查询电影平均分

df.select("movieid", "score").groupBy("movieid").agg(

F.round(F.avg("score"), 2).alias("m_s_avg")

).orderBy("m_s_avg", ascending=False).show()

def method03():

# 需求3:查询大于平均分的电影数量

df_avg_score = df.select("score").agg(

F.avg("score").alias("avg_score")

)

df_movie_avg_score = df.select("movieid", "score").groupBy("movieid").agg(

F.avg("score").alias("movie_avg_score")

)

print(df_movie_avg_score.where(df_movie_avg_score["movie_avg_score"] > df_avg_score.first()["avg_score"]).count())

def method04():

# 需求4:查询高分电影中(>3)打分次数最多的用户,并求出此人打的平均分

# 4.1高分电影

df_hight_score_movie = df.groupBy("movieid").agg(

F.avg("score").alias("m_s_avg")

).where("m_s_avg>3").select("movieid")

# 4.2高分电影中打分次数最多的用户

df_hight_count_user = df_hight_score_movie.join(df, "movieid", "inner").groupBy("userid").agg(

F.count("movieid").alias("u_m_count")

).orderBy("u_m_count", ascending=False).limit(1)

# 4.3此用户的平均分

df.where(df["userid"] == df_hight_count_user.first()["userid"]) \

.select("userid", "score").groupBy("userid").agg(

F.avg("score").alias("hight_user_avg_score")

).show()

def method05():

# 需求5:查询每个用户的平均打分,最低打分,最高打分

df.select("userid", "score").groupBy("userid").agg(

F.avg("score").alias("u_avg_score")

).show()

df.select("userid", "score").groupBy("userid").agg(

F.max("score").alias("u_avg_score")

).show()

df.select("userid", "score").groupBy("userid").agg(

F.min("score").alias("u_avg_score")

).show()

def method06():

# 需求6:查询被评分超过100次的电影的平均分排名前10

df.groupBy("movieid").agg(

F.count("movieid").alias("m_count"),

F.avg("score").alias("m_avg_score")

).where("m_count>100").orderBy("m_avg_score", ascending=False).limit(10).show()

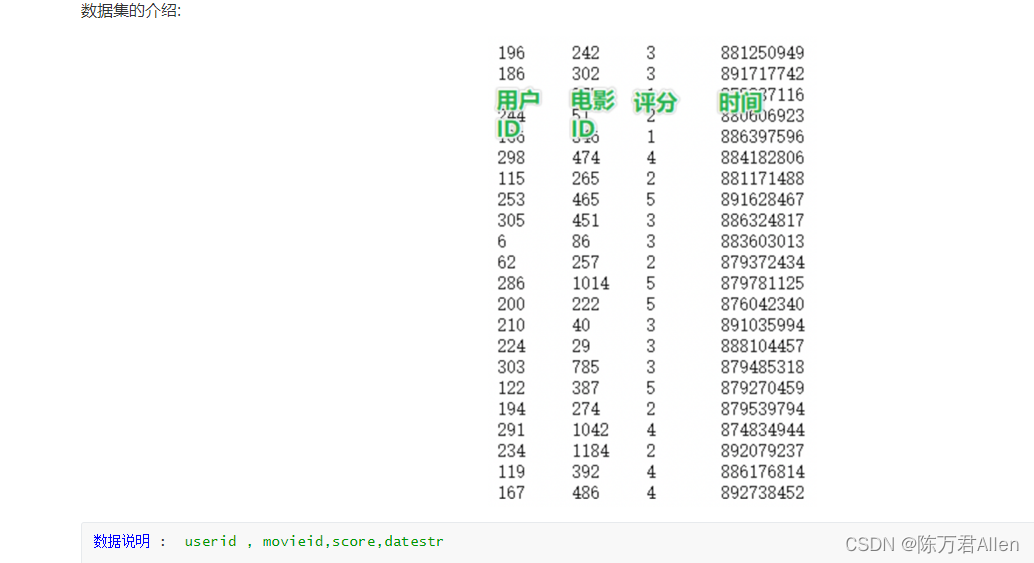

if __name__ == '__main__':

print("move example")

spark = SparkSession.builder.appName("move example").master("local[*]").getOrCreate()

schema = StructType().add("userid", StringType()).add("movieid", StringType()) \

.add("score", IntegerType()).add("datestr", StringType())

df = spark.read \

.format("csv") \

.option("sep", "\t") \

.schema(schema=schema) \

.load("file:///export/data/workspace/ky06_pyspark/_03_SparkSql/data/u.data")

method01()

method02()

method03()

method04()

method05()

method06()

spark.stop()

Summarize

Today I mainly share with you a comprehensive case of Pyspark_SQL.