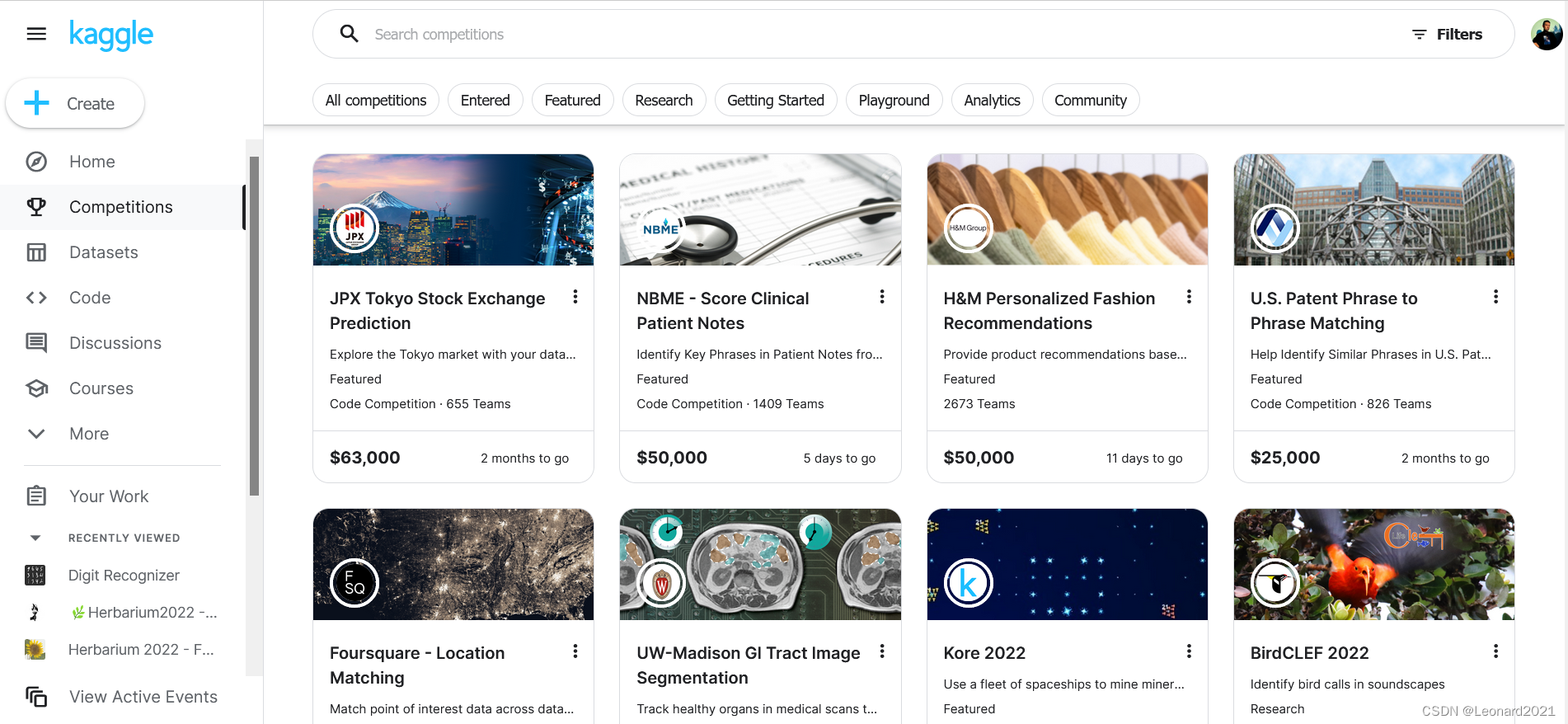

1. Introduction to Kaggle

Kaggle is a well-known website in the AI field, both in China and abroad; Alibaba's Tianchi platform in China is modeled on it. It hosts a rich collection of datasets, including computer vision (CV) datasets for image recognition, object detection, semantic segmentation, and similar tasks, datasets such as Boston house price prediction, and speech recognition datasets. Competitions are held from time to time, and any registered member can take part. Besides free datasets, Kaggle provides a weekly quota of free GPU and TPU time (30 hours of GPU and 20 hours of TPU per week). Our code can run in the Notebook environment that Kaggle provides, where you can study and reference code shared by others and share your own. Overall, it is a very good community for AI learners, with a strong open-source atmosphere. Taking part in competitions and discussions builds relevant experience, and the free GPU and TPU time lowers the barrier to learning.

Kaggle website address: Kaggle: Your Machine Learning and Data Science Community

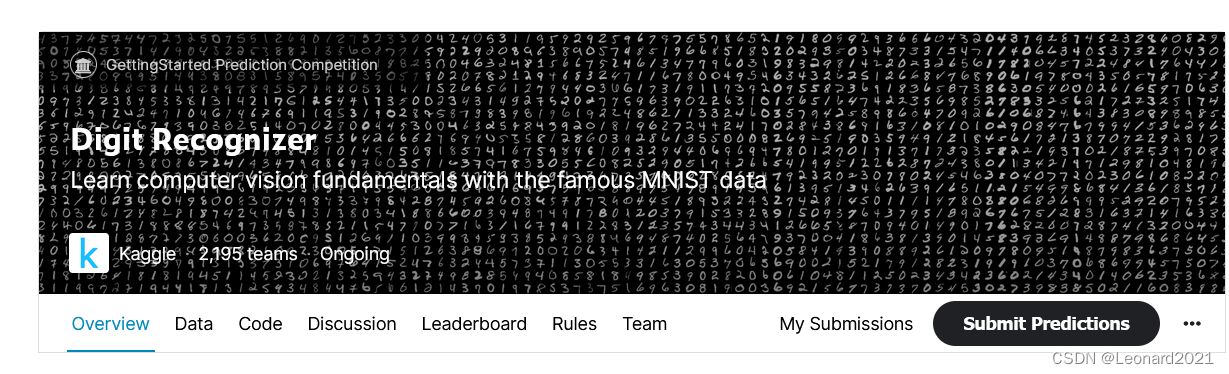

2. Digit Recognizer Competition

This is an entry-level competition in image recognition; you can think of it as the "Hello World" of computer vision. The MNIST handwritten digit dataset dates back to the last century and may be older than you. Models trained on it at the time were applied to recognizing postal codes and the digits on bank checks, and some of those models are still in use today.

Introduction to the dataset:

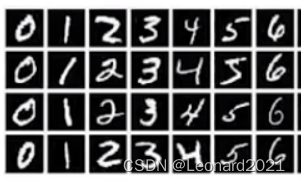

Collected by Yann LeCun, the MNIST dataset is a large database of handwritten digits. It is commonly used to train image processing systems and is widely used for training and testing in machine learning. The dataset is small and its images are monochrome, so it is relatively simple and well suited for deep learning beginners to practice building, training, and predicting with models. The images in MNIST combine two databases from NIST (National Institute of Standards and Technology): Special Database 1 and Special Database 3. The dataset consists of handwritten digits from 250 people, half of them high school students and half U.S. Census Bureau employees.

The MNIST dataset has 60,000 training examples and 10,000 test examples. Each image is 28×28 pixels and is a grayscale image with a bit depth of 8 (pixel values range from 0 to 255). It looks like this:
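As a minimal sketch of that layout, the snippet below turns one flat row of 784 pixel values into a 28×28 image and scales it to the [0, 1] range. The row here is random stand-in data, not a real MNIST digit, and the names `flat_row`, `image`, `scaled`, and `normalized` are chosen only for illustration. The mean and standard deviation are the same values that appear in the `Normalize` transform of the training code.

```python
import numpy as np

# Hypothetical stand-in for the pixel columns of one CSV row:
# 784 values in the 0-255 range (random here, not real MNIST data).
rng = np.random.default_rng(0)
flat_row = rng.integers(0, 256, size=784).astype(np.uint8)

# Row-major reshape: the first 28 values become the top row of the image.
image = flat_row.reshape(28, 28)

# Scale 8-bit pixels to [0, 1], then standardize with the MNIST statistics
# used by the Normalize transform in the training code.
MNIST_MEAN, MNIST_STD = 0.1307, 0.3081
scaled = image.astype(np.float32) / 255.0
normalized = (scaled - MNIST_MEAN) / MNIST_STD

print(image.shape, image.dtype)
print(float(scaled.min()), float(scaled.max()))
```

With real data, `flat_row` would be the non-label columns of one row of the competition's CSV file.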

3. Actual code

I ran my code directly in a Kaggle Notebook.

1. Training:

import os
import math
import ssl

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn.model_selection import train_test_split

import torch
import torch.nn as nn
import torch.optim as optim
from torch.autograd import Variable
from torch.nn import functional as F
from torch.utils.data import DataLoader, Dataset
from torchvision import datasets, models, transforms

# Skip HTTPS certificate verification (relevant e.g. when downloading pretrained weights)
ssl._create_default_https_context = ssl._create_unverified_context

# Read the competition CSV files; every column, including the label, is loaded as float32
dataframe_train_valid = pd.read_csv(os.path.join('../input/digit-recognizer/', 'train.csv'), dtype=np.float32)
dataframe_test = pd.read_csv(os.path.join('../input/digit-recognizer/', 'test.csv'), dtype=np.float32)

class mnist_data(Dataset):
    """Serves 28x28 digit images from a DataFrame, with labels for the train/valid splits."""

    def __init__(self, type, dataframe, transform):
        if type == 'train' or type == 'valid':
            labels = dataframe.label.values
            features = dataframe.loc[:, dataframe.columns != "label"].values
            # Split into training and validation sets (fixed seed, so both splits are consistent)
            features_train, features_valid, labels_train, labels_valid = \
                train_test_split(features, labels, test_size=0.2, random_state=0)
            if type == 'train':
                self.X = features_train.reshape((-1, 28, 28))
                self.y = labels_train
            elif type == 'valid':
                self.X = features_valid.reshape((-1, 28, 28))
                self.y = labels_valid
        if type == 'test':
            self.X = dataframe.values.reshape((-1, 28, 28))
            self.y = None
        # ToTensor only rescales uint8 input to [0, 1], the range the Normalize statistics assume
        self.X = self.X.astype(np.uint8)
        self.transform = transform

    def __getitem__(self, index):
        # Note the order: (label, image) for train/valid, image only for test
        if self.y is not None:
            return self.y[index], self.transform(self.X[index])
        else:
            return self.transform(self.X[index])

    def __len__(self):
        return self.X.shape[0]

batch_size = 256

# The same preprocessing is applied to all three splits
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize(mean=(0.1307,), std=(0.3081,))
])

train_dataset = mnist_data('train', dataframe_train_valid, transform=transform)
valid_dataset = mnist_data('valid', dataframe_train_valid, transform=transform)
test_dataset = mnist_data('test', dataframe_test, transform=transform)

# Shuffle only the training data; the test order must stay fixed so predictions line up with ImageId
train_dataloader = DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
valid_dataloader = DataLoader(dataset=valid_dataset, batch_size=batch_size, shuffle=False)
test_dataloader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)

# ResNet18 with randomly initialized weights (pretrained weights are not loaded)
model = models.resnet18()  # pretrained=True
num_ftrs = model.fc.in_features
# Replace the classifier head: 10 outputs, one per digit
model.fc = nn.Linear(num_ftrs, 10)
# Replace the first convolution so the network accepts single-channel (grayscale) input
model.conv1 = nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3, bias=False)

# Choose the optimizer
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
#optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9, weight_decay=1e-2)
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer=optimizer, T_max=1000)  # , eta_min=1e-6
'''
# Alternative: adjust the learning rate when a monitored value (e.g. the loss) plateaus
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer,
                                                       patience=5,
                                                       verbose=1,
                                                       factor=0.5,
                                                       min_lr=1e-5)'''

# Choose the loss function
criterion = nn.CrossEntropyLoss()

# Train on the GPU if one is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model.to(device)
criterion.to(device)

count = 0
loss_list = []
iteration_list = []
accuracy_list = []
best_accuracy = 0

model.train()
for epoch in range(1000):
    for labels, images in train_dataloader:
        images = images.float().to(device)
        labels = labels.long().to(device)

        optimizer.zero_grad()
        outputs = model(images)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        count = count + 1
        if count % 50 == 0:
            # Check the loss and the model's accuracy on the validation set
            model.eval()
            correct = 0
            total = 0
            with torch.no_grad():
                for valid_labels, valid_images in valid_dataloader:
                    valid_images = valid_images.float().to(device)
                    valid_labels = valid_labels.long().to(device)
                    outputs = model(valid_images)
                    predicted = torch.max(outputs, 1)[1]
                    total += len(valid_labels)
                    correct += (predicted == valid_labels).sum().item()
            accuracy = 100 * correct / float(total)
            model.train()

            loss_list.append(loss.item())
            iteration_list.append(count)
            accuracy_list.append(accuracy)
            print('Epoch:{} Iteration: {} Loss: {} Accuracy: {} %'.format(epoch, count, loss.item(), accuracy))

            # Save the weights only when validation accuracy improves
            if accuracy > best_accuracy:
                best_accuracy = accuracy
                torch.save(model.state_dict(), './mymodel.pt')

    # Step the schedule once per epoch (assumed placement; T_max=1000 matches the 1000 epochs)
    scheduler.step()

2. Model evaluation and submission file generation:

from torchvision import datasets, models, transforms
import torch.nn as nn

# Rebuild the same architecture, then load the saved weights
model = models.resnet18()  # pretrained=True
num_ftrs = model.fc.in_features
model.fc = nn.Linear(num_ftrs, 10)  # number of classes
model.conv1 = nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3, bias=False)
model.load_state_dict(torch.load('./mymodel.pt', map_location=device))
model.to(device)
model.eval()

prediction = []
with torch.no_grad():
    for images in test_dataloader:
        images = images.float().to(device)
        outputs = model(images)
        predicted = torch.max(outputs, 1)[1]
        prediction.append(predicted.cpu())

# Concatenate the per-batch predictions into one 1-D array
p = torch.cat(prediction).numpy()
print(p.shape)

submission = pd.DataFrame({
    "ImageId": np.arange(len(p)) + 1,
    "Label": p.tolist()
})
submission.to_csv('./sample_submission_leonard2021.csv', index=False)
print(submission)

After completing the training and evaluation, you can refresh the Output interface, download the submission file to your computer and upload it to the competition interface to get your score and ranking.

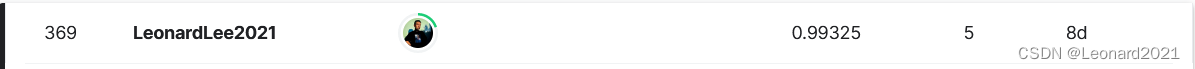
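The submission file written by the code above has two columns, `ImageId` (counting from 1) and `Label`. As a rough illustration of that layout using only the standard library, here is a small sketch; the helper name `build_submission` and the three predictions are made up for the example.

```python
import csv
import io


def build_submission(predictions):
    """Return CSV text with a 1-based ImageId column and a Label column."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["ImageId", "Label"])
    for image_id, label in enumerate(predictions, start=1):
        writer.writerow([image_id, int(label)])
    return buffer.getvalue()


# Hypothetical predictions for three test images.
csv_text = build_submission([2, 0, 9])
print(csv_text)
```

Because `ImageId` is derived from position alone, the predictions have to be in the same order as the rows of the test file, which is why the test data should not be shuffled.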

I used a ResNet18 network that I modified myself: the input layer takes single-channel images and the fully connected layer outputs 10 classes. Training uses the Adam optimizer (initial learning rate 0.01) with the cosine annealing learning rate schedule, CosineAnnealingLR.
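To get a feel for what cosine annealing does to the learning rate, the sketch below evaluates the closed-form schedule in plain Python. It assumes the settings from the training code (initial rate 0.01, `T_max=1000`, minimum rate 0) and one scheduler step per epoch; the function name `cosine_annealing_lr` is only illustrative and is not part of PyTorch.

```python
import math


def cosine_annealing_lr(step, base_lr=0.01, t_max=1000, eta_min=0.0):
    """Closed-form cosine annealing: decays base_lr to eta_min over t_max steps."""
    cosine = math.cos(math.pi * step / t_max)
    return eta_min + (base_lr - eta_min) * (1 + cosine) / 2


# Learning rate at a few points along the schedule.
for step in (0, 250, 500, 750, 1000):
    print(step, round(cosine_annealing_lr(step), 6))
```

The rate starts at the initial value, falls slowly at first, drops fastest around the midpoint, and flattens out again as it approaches the minimum.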

After this simple training run, my submission scored 0.99325 in the competition, ranking in the top 17%, which is quite satisfactory. You can also improve the model and tune the parameters yourself, or try freezing some of the network's parameters, to achieve better results.
