1. All code

# Uses the TensorFlow 1.x API
from tensorflow.examples.tutorials.mnist import input_data
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt

# Raw string, so the backslashes in the Windows path are taken literally
mnist = input_data.read_data_sets(r'E:\img\mnist', one_hot=True)
n_batch = mnist.train.num_examples  # number of training images
print(n_batch)

with tf.name_scope("input"):
    x = tf.placeholder(tf.float32, [None, 784], name='x-input')
    y = tf.placeholder(tf.float32, [None, 10], name='y-input')

W = tf.Variable(tf.zeros([784, 10]))
b = tf.Variable(tf.zeros([10]))
logits = tf.matmul(x, W) + b
prediction = tf.nn.softmax(logits)

# softmax_cross_entropy_with_logits_v2 applies softmax itself, so it gets the raw logits
loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y, logits=logits))
train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)

c_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
accuracy = tf.reduce_mean(tf.cast(c_prediction, tf.float32))

init = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init)
    # Each iteration trains on one mini-batch of 100 images, then evaluates on the test set
    for epoch in range(300):
        batch_x, batch_y = mnist.train.next_batch(100)
        sess.run(train_step, feed_dict={x: batch_x, y: batch_y})
        acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
        print("Iter {}, Testing Acc : {}".format(epoch, acc))

2. Prepare the data

from tensorflow.examples.tutorials.mnist import input_data
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt

# Raw string, so the backslashes in the Windows path are taken literally
mnist = input_data.read_data_sets(r'E:\img\mnist', one_hot=True)
n_batch = mnist.train.num_examples  # number of training images
print(n_batch)

r'''Output:
Extracting E:\img\mnist\train-images-idx3-ubyte.gz
Extracting E:\img\mnist\train-labels-idx1-ubyte.gz
Extracting E:\img\mnist\t10k-images-idx3-ubyte.gz
Extracting E:\img\mnist\t10k-labels-idx1-ubyte.gz
55000
'''

3. Define variables

Input variables (images and labels)

x = tf.placeholder(tf.float32, [None, 784])  # input images
y = tf.placeholder(tf.float32, [None, 10])   # target labels

tf.placeholder declares an input slot whose value is supplied at run time through feed_dict. Each image in the MNIST dataset is a single-channel grayscale image, so it flattens to 28*28*1 = 784 values, and there are 10 digit classes, 0 to 9. The two lines above declare the inputs: each row of x is a vector of length 784 holding one image, and each row of y is a one-hot vector of length 10 marking which digit (0-9) the image shows. The None in each shape leaves the number of rows open, so a batch of any size can be fed.
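To make the two shapes concrete, here is a minimal sketch in plain Python (no TensorFlow) of how one image and one label could be turned into a row of x and a row of y. The helper names flatten and one_hot are made up for this illustration; the MNIST loader already does this when one_hot=True is passed.

```python
def flatten(image):
    """Flatten a 2-D grid (a list of rows) into one flat list, row by row."""
    return [pixel for row in image for pixel in row]


def one_hot(label, num_classes=10):
    """Return a list of zeros with a single 1.0 at position `label`."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


# A blank 28x28 "image" becomes a vector of 28 * 28 = 784 values.
image = [[0.0] * 28 for _ in range(28)]
x_row = flatten(image)
y_row = one_hot(3)  # the label for the digit 3

print(len(x_row))  # 784
print(y_row)       # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```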

Detailed explanation of placeholder parameters

Variables

W = tf.Variable(tf.zeros([784, 10]))
b = tf.Variable(tf.zeros([10]))

These lines define two variables whose initial values are generated by tf.zeros(): W is a 784*10 matrix and b is a vector of length 10.
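The shapes of W and b are chosen so that x·W + b maps one 784-value image to 10 scores, one per digit. The plain-Python sketch below checks this for a single row; the helper affine is a made-up name, and the input values are arbitrary.

```python
def affine(x, W, b):
    """Compute x·W + b for one input row x (length n), W (n rows, k columns), b (length k)."""
    n, k = len(W), len(b)
    return [sum(x[i] * W[i][j] for i in range(n)) + b[j] for j in range(k)]


W = [[0.0] * 10 for _ in range(784)]  # 784 x 10, all zeros, like tf.zeros([784, 10])
b = [0.0] * 10                        # length 10, like tf.zeros([10])
x_row = [0.5] * 784                   # one flattened image with arbitrary pixel values

scores = affine(x_row, W, b)
print(len(scores))  # 10
```

Because both variables start at zero, every score is zero before training, so the untrained model treats all ten digits as equally likely.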

Predicted value and loss

predictionon = tf.nn.softmax(tf.matmul(x,W)+b)

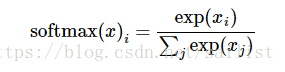

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels = y,logits = prediction))tf.nn.softmaxis a classifier, the formula is

to get a vector with a sum of 1 from a bunch of vectors, and in mnist training, it will return a 1*10 vector (with a sum of 1)
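As an illustration, softmax can be written in a few lines of plain Python: each score z_i becomes exp(z_i) divided by the sum of exp(z_j) over all scores. This is only a sketch of the standard definition, not TensorFlow's implementation; subtracting the maximum first is a common trick to avoid overflow and does not change the result.

```python
import math


def softmax(scores):
    """Map a list of scores to non-negative values that sum to 1."""
    m = max(scores)                             # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print(probs)       # the largest score gets the largest probability
print(sum(probs))  # 1.0, up to floating-point rounding
```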

tf.nn.softmax_cross_entropy_with_logits_v2 computes the cross entropy between the labels and the predicted distribution, a measure of how far the prediction is from the true label. It applies softmax internally, which is why it expects unnormalized scores as its logits argument. It returns a vector with one non-negative value per example in the batch, whereas loss has to be a single number, so the vector must be reduced to a scalar by wrapping it in another function.

tf.reduce_mean averages its argument over all elements; tf.reduce_max, tf.reduce_sum and so on work in the same way.
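The following plain-Python sketch shows the two steps on a tiny made-up batch: a per-example cross entropy computed from raw scores, followed by the mean that turns the vector into one loss value. The function names are hypothetical and the numbers are arbitrary; the formula is the standard -sum(label_i * log(softmax(scores)_i)).

```python
import math


def log_softmax(scores):
    """Logarithm of softmax, computed in a numerically stable way."""
    m = max(scores)
    log_total = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_total for s in scores]


def cross_entropy_with_logits(labels, scores):
    """Cross entropy of one example: -sum(label_i * log(softmax(scores)_i))."""
    return -sum(l * lp for l, lp in zip(labels, log_softmax(scores)))


# Two examples, three classes. The first is classified correctly, the second is not.
batch_scores = [[2.0, 1.0, 0.1], [0.5, 2.5, 0.0]]
batch_labels = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

per_example = [cross_entropy_with_logits(y, z)
               for y, z in zip(batch_labels, batch_scores)]
loss = sum(per_example) / len(per_example)  # what tf.reduce_mean does to the vector

print(per_example)  # one non-negative value per example; the wrong prediction costs more
print(loss)
```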

Prediction Results and Accuracy

c_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
accuracy = tf.reduce_mean(tf.cast(c_prediction, tf.float32))

tf.argmax(..., 1) returns the index of the largest entry in each row, which is the digit that row stands for. tf.equal tests for element-wise equality: the code above compares y (the real result) with prediction (the predicted result) and returns a series of bool values such as [False, True, False, ...].

tf.cast converts the values of its first argument to the type given as its second argument (as long as the conversion is possible). Here it turns the bool values into floats (1 and 0). Averaging them with tf.reduce_mean() then gives the fraction of correct predictions, which is the accuracy.
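The same chain of argmax, equal, cast and mean can be traced by hand in plain Python. The labels and predictions below are made-up values for four examples with three classes, and argmax is a small helper written for this sketch.

```python
def argmax(values):
    """Index of the largest entry in a list."""
    return max(range(len(values)), key=values.__getitem__)


labels = [[0, 0, 1], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
predictions = [[0.1, 0.2, 0.7], [0.3, 0.6, 0.1], [0.2, 0.5, 0.3], [0.1, 0.1, 0.8]]

correct = [argmax(y) == argmax(p) for y, p in zip(labels, predictions)]  # like tf.equal
as_float = [float(c) for c in correct]                                   # like tf.cast
accuracy = sum(as_float) / len(as_float)                                 # like tf.reduce_mean

print(correct)   # [True, False, True, True]
print(accuracy)  # 0.75
```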

4. Initialization and training

init = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init)
    # Each iteration trains on one mini-batch of 100 images, then evaluates on the test set
    for epoch in range(300):
        batch_x, batch_y = mnist.train.next_batch(100)
        sess.run(train_step, feed_dict={x: batch_x, y: batch_y})
        acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})
        print("Iter {}, Testing Acc : {}".format(epoch, acc))

The code before this point only defines things: it produces a collection of static elements (variables and operations) that do nothing by themselves. Before the variables can be used, they have to be initialized. tf.global_variables_initializer() creates an operation that initializes all variables. This initializer is itself static, and the initialization only happens when it is executed with a run() call.

tf.Session() opens a session. The graph can only be executed while a session is open, through the session's run() method.

sess.run(init) is called first to perform the initialization. Once all variables are initialized, other elements can be run. When an element is run, every element it depends on is run as well.
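The idea that running one element pulls in everything it depends on, and nothing else, can be mimicked with a toy graph in plain Python. This is only a rough sketch: Node and run are hypothetical names invented here, and a real TensorFlow session does far more (caching, device placement, variable state).

```python
class Node:
    """A hypothetical graph node: a function plus the nodes it takes as inputs."""

    def __init__(self, name, fn=None, inputs=()):
        self.name = name
        self.fn = fn                  # None marks a placeholder
        self.inputs = tuple(inputs)


def run(target, feed_dict, evaluated):
    """Evaluate `target`, recursively evaluating only the nodes it depends on."""
    if target in feed_dict:           # placeholder: value supplied by the caller
        return feed_dict[target]
    if target.fn is None:
        raise ValueError("no value fed for placeholder " + target.name)
    args = [run(node, feed_dict, evaluated) for node in target.inputs]
    evaluated.append(target.name)     # record which operations actually ran
    return target.fn(*args)


x = Node("x")
y = Node("y")
doubled = Node("doubled", lambda a: a * 2, [x])
total = Node("total", lambda a, b: a + b, [doubled, y])
unrelated = Node("unrelated", lambda a: a - 1, [y])

evaluated = []
result = run(total, {x: 3, y: 4}, evaluated)
print(result)     # 10
print(evaluated)  # ['doubled', 'total']
```

Running total evaluates doubled on the way, while unrelated is never touched, just as running train_step evaluates loss but not accuracy.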

# loss, x, y, W, b, ... that train_step depends on all take part in the computation
sess.run(train_step, feed_dict={x: batch_x, y: batch_y})

# c_prediction, y and prediction that accuracy depends on take part in the computation
acc = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels})

Working through a concrete case is my preferred way to learn how a module is used, but this approach can stay confined to the points the case happens to touch and is not comprehensive. Once a certain level of understanding is reached, deeper study may be needed.
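As one step in that direction, the sketch below spells out in plain Python what a single gradient-descent update could look like for this kind of softmax model. It is an illustration on made-up toy data (2 features, 2 classes), not what tf.train.GradientDescentOptimizer does internally; all function names are hypothetical. It relies on the standard result that the gradient of the softmax cross entropy with respect to the scores is prediction minus label.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, W, b):
    """Predicted class probabilities for one input row."""
    scores = [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]
    return softmax(scores)


def mean_loss(xs, ys, W, b):
    """Cross entropy averaged over the batch."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = forward(x, W, b)
        total -= sum(yk * math.log(pk) for yk, pk in zip(y, p))
    return total / len(xs)


def sgd_step(xs, ys, W, b, lr):
    """One in-place gradient-descent update using the batch-averaged gradient."""
    n = len(xs)
    grad_W = [[0.0] * len(b) for _ in W]
    grad_b = [0.0] * len(b)
    for x, y in zip(xs, ys):
        p = forward(x, W, b)
        for j in range(len(b)):
            err = (p[j] - y[j]) / n          # gradient with respect to score j
            grad_b[j] += err
            for i in range(len(x)):
                grad_W[i][j] += x[i] * err
    for i in range(len(W)):
        for j in range(len(b)):
            W[i][j] -= lr * grad_W[i][j]
    for j in range(len(b)):
        b[j] -= lr * grad_b[j]


# Toy data: class 0 has a large first feature, class 1 a large second feature.
xs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
ys = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
W = [[0.0, 0.0], [0.0, 0.0]]  # zero initialization, as in the tutorial
b = [0.0, 0.0]

loss_before = mean_loss(xs, ys, W, b)
for _ in range(300):
    sgd_step(xs, ys, W, b, lr=0.2)
loss_after = mean_loss(xs, ys, W, b)

print(loss_before, loss_after)  # the loss goes down as the weights are updated
```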