Preface

Last week, a colleague wrote some test code for ConcurrentHashMap and said that memory overflowed after putting 32 elements into the map. I took a quick look at his code and the JVM parameters he was running with, found the behavior very strange, and decided to experiment with it myself. Here is the code first:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class MapTest {
    public static void main(String[] args) {
        // freeMemory() reports free space in the currently committed heap; printed in MB
        System.out.println("Before allocate map, free memory is " + Runtime.getRuntime().freeMemory() / (1024 * 1024) + "M");
        Map<String, String> map = new ConcurrentHashMap<String, String>(2000000000);
        System.out.println("After allocate map, free memory is " + Runtime.getRuntime().freeMemory() / (1024 * 1024) + "M");
        int i = 0;
        try {
            while (i < 1000000) {
                System.out.println("Before put the " + (i + 1) + " element, free memory is " + Runtime.getRuntime().freeMemory() / (1024 * 1024) + "M");
                map.put(String.valueOf(i), String.valueOf(i));
                System.out.println("After put the " + (i + 1) + " element, free memory is " + Runtime.getRuntime().freeMemory() / (1024 * 1024) + "M");
                i++;
            }
        } catch (Exception e) {
            e.printStackTrace();
        } catch (Throwable t) {
            // OutOfMemoryError is an Error, not an Exception, so it lands here
            t.printStackTrace();
        } finally {
            System.out.println("map size is " + map.size());
        }
    }
}
```

Running it with the JVM options -Xms512m -Xmx512m produced the following output:

```
Before allocate map, free memory is 120M
After allocate map, free memory is 121M
Before put the 1 element, free memory is 121M
After put the 1 element, free memory is 121M
Before put the 2 element, free memory is 121M
After put the 2 element, free memory is 122M
Before put the 3 element, free memory is 122M
After put the 3 element, free memory is 122M
Before put the 4 element, free memory is 122M
After put the 4 element, free memory is 122M
Before put the 5 element, free memory is 122M
After put the 5 element, free memory is 114M
Before put the 6 element, free memory is 114M
java.lang.OutOfMemoryError: Java heap space
map size is 5
	at java.util.concurrent.ConcurrentHashMap.ensureSegment(Unknown Source)
	at java.util.concurrent.ConcurrentHashMap.put(Unknown Source)
	at com.j.u.c.tj.MapTest.main(MapTest.java:17)
```

The first version of the code had no log output, and the result seemed very strange at the time: why would putting just one element into the map cause an OutOfMemoryError?

So I added the logging shown above and found that more than 200 MB of space was already occupied once the map had been created. Then, when I put an element into it, more than 200 MB was still free before the put, yet the put threw an OutOfMemoryError. That made it even stranger: it is understandable that initializing the map takes up some space, but why would putting one small element into it cause an OutOfMemoryError?

Troubleshooting process

1. The first step was to change Map<String, String> map = new ConcurrentHashMap<String, String>(2000000000) in the code above to Map<String, String> map = new ConcurrentHashMap<String, String>(); and this time it ran fine. (I had not found the root cause yet, but it already suggested two things to watch for when using ConcurrentHashMap: 1. avoid specifying an initial capacity unless it is needed; 2. if one is needed, choose a reasonable value.)

2. In the second step, I ran it with the JVM options -Xms1024m -Xmx1024m. The same problem still occurred.

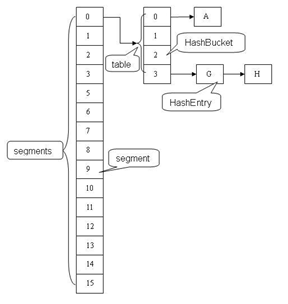

3. The third step was to analyze the source code of ConcurrentHashMap (the JDK 7 version, which is where the ensureSegment frame in the stack trace comes from). First, its structure: the map is made up of multiple segments, and each segment has its own lock, which is how ConcurrentHashMap guarantees thread safety (not the focus of this article). Each Segment holds a HashEntry array, and this HashEntry array is where the problem lies.
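To make "multiple segments" concrete, the sketch below reproduces how JDK 7 picks a segment for a key: the segment count is the smallest power of two not below concurrencyLevel (16 by default), and the top bits of the key's spread hash select the segment. It mirrors the segmentShift/segmentMask arithmetic in the JDK source, but the class name SegmentIndexDemo and the sample hash values are hypothetical; real hashes come from the map's private hash(key) method, which is not reproduced here.

```java
public class SegmentIndexDemo {
    // Same arithmetic as JDK 7: ssize is the smallest power of two >= concurrencyLevel,
    // and the top sshift bits of the (already spread) hash select the segment.
    static int segmentIndex(int hash, int concurrencyLevel) {
        int sshift = 0;
        int ssize = 1;
        while (ssize < concurrencyLevel) {
            ++sshift;
            ssize <<= 1;
        }
        int segmentShift = 32 - sshift;
        int segmentMask = ssize - 1;
        return (hash >>> segmentShift) & segmentMask;
    }

    public static void main(String[] args) {
        // Hypothetical hash values; with 16 segments only the top 4 bits matter.
        System.out.println(segmentIndex(0x00000000, 16)); // 0
        System.out.println(segmentIndex(0x30000000, 16)); // 3
        System.out.println(segmentIndex(0xF0000001, 16)); // 15
    }
}
```

Because each segment is chosen by the high bits alone, keys whose hashes differ in those bits end up in different segments, and each segment owns a separate HashEntry array.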

4. In the fourth step, I looked at the constructor of ConcurrentHashMap. It initializes the HashEntry array of Segment[0] right away, and the length of that array is cap; in this test cap is 67108864.

How cap is calculated (you can step through the constructor in a debugger to confirm):

1) initialCapacity is 2,000,000,000. MAXIMUM_CAPACITY, the largest capacity ConcurrentHashMap supports, is 1<<30, i.e. 2^30 = 1,073,741,824. Since initialCapacity is greater than MAXIMUM_CAPACITY, it is clamped to 1,073,741,824.

2) With the default concurrencyLevel of 16, ssize (the number of segments) is 16, so c = initialCapacity / ssize = 1,073,741,824 / 16 = 67,108,864.

3) cap is the smallest power of two that is greater than or equal to c (starting from MIN_SEGMENT_TABLE_CAPACITY). Since c = 2^26 is already a power of two, cap = 67,108,864.

```java
public ConcurrentHashMap(int initialCapacity, float loadFactor, int concurrencyLevel) {
    if (!(loadFactor > 0) || initialCapacity < 0 || concurrencyLevel <= 0)
        throw new IllegalArgumentException();
    if (concurrencyLevel > MAX_SEGMENTS)
        concurrencyLevel = MAX_SEGMENTS;
    // Find power-of-two sizes best matching arguments
    int sshift = 0;
    int ssize = 1;
    while (ssize < concurrencyLevel) {
        ++sshift;
        ssize <<= 1;
    }
    this.segmentShift = 32 - sshift;
    this.segmentMask = ssize - 1;
    if (initialCapacity > MAXIMUM_CAPACITY)
        initialCapacity = MAXIMUM_CAPACITY;
    int c = initialCapacity / ssize;
    if (c * ssize < initialCapacity)
        ++c;
    int cap = MIN_SEGMENT_TABLE_CAPACITY;
    while (cap < c)
        cap <<= 1;
    // create segments and segments[0]
    Segment<K,V> s0 =
        new Segment<K,V>(loadFactor, (int)(cap * loadFactor),
                         (HashEntry<K,V>[])new HashEntry[cap]);
    Segment<K,V>[] ss = (Segment<K,V>[])new Segment[ssize];
    UNSAFE.putOrderedObject(ss, SBASE, s0); // ordered write of segments[0]
    this.segments = ss;
}
```
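The three steps of the cap calculation can be checked with a small standalone program that copies the constructor's arithmetic. It is a sketch, not JDK code: the constants mirror JDK 7's values (MAXIMUM_CAPACITY = 1 << 30, MIN_SEGMENT_TABLE_CAPACITY = 2, MAX_SEGMENTS = 1 << 16), and the class and method names are made up for illustration.

```java
public class SegmentCapacityDemo {
    // Constants with the same values as JDK 7's ConcurrentHashMap.
    static final int MAXIMUM_CAPACITY = 1 << 30;
    static final int MIN_SEGMENT_TABLE_CAPACITY = 2;
    static final int MAX_SEGMENTS = 1 << 16;

    // Length of each segment's HashEntry[] table, following the constructor's arithmetic.
    static int perSegmentCapacity(int initialCapacity, int concurrencyLevel) {
        if (concurrencyLevel > MAX_SEGMENTS) concurrencyLevel = MAX_SEGMENTS;
        int ssize = 1;                                  // number of segments
        while (ssize < concurrencyLevel) ssize <<= 1;
        if (initialCapacity > MAXIMUM_CAPACITY) initialCapacity = MAXIMUM_CAPACITY;
        int c = initialCapacity / ssize;
        if (c * ssize < initialCapacity) ++c;           // round the division up
        int cap = MIN_SEGMENT_TABLE_CAPACITY;
        while (cap < c) cap <<= 1;                      // next power of two >= c
        return cap;
    }

    public static void main(String[] args) {
        System.out.println(perSegmentCapacity(2_000_000_000, 16)); // 67108864
        System.out.println(perSegmentCapacity(16, 16));            // 2
        System.out.println(perSegmentCapacity(1_000_000, 16));     // 65536
    }
}
```

The second call uses the no-argument constructor's defaults (initialCapacity 16, concurrencyLevel 16), which explains why step 1 ran fine. The last call uses the 1,000,000 elements the test loop tries to insert: sized that way, each segment's table would have 65,536 slots instead of 67,108,864.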

5. In the fifth step, I looked at the put method of ConcurrentHashMap. When an element is put:

1) It calculates the hash of the key and checks whether the segment the key belongs to has been initialized; if it has, the put proceeds directly on that segment.

2) If the segment has not been initialized, ensureSegment() is called. It creates a new HashEntry array whose length is the same as that of the HashEntry array of Segment[0], the segment created in the constructor, and builds the new segment around it.

```java
public V put(K key, V value) {
    Segment<K,V> s;
    if (value == null)
        throw new NullPointerException();
    int hash = hash(key);
    int j = (hash >>> segmentShift) & segmentMask;
    if ((s = (Segment<K,V>)UNSAFE.getObject          // nonvolatile; recheck
         (segments, (j << SSHIFT) + SBASE)) == null) //  in ensureSegment
        s = ensureSegment(j);
    return s.put(key, hash, value, false);
}

private Segment<K,V> ensureSegment(int k) {
    final Segment<K,V>[] ss = this.segments;
    long u = (k << SSHIFT) + SBASE; // raw offset
    Segment<K,V> seg;
    if ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u)) == null) {
        Segment<K,V> proto = ss[0]; // use segment 0 as prototype
        int cap = proto.table.length;
        float lf = proto.loadFactor;
        int threshold = (int)(cap * lf);
        HashEntry<K,V>[] tab = (HashEntry<K,V>[])new HashEntry[cap];
        if ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u)) == null) { // recheck
            Segment<K,V> s = new Segment<K,V>(lf, threshold, tab);
            while ((seg = (Segment<K,V>)UNSAFE.getObjectVolatile(ss, u)) == null) {
                if (UNSAFE.compareAndSwapObject(ss, u, null, seg = s))
                    break;
            }
        }
    }
    return seg;
}
```
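To see the lazy-allocation pattern of ensureSegment() without Unsafe, here is a simplified, hypothetical model that uses AtomicReferenceArray in place of the raw UNSAFE calls, with plain Object[] arrays standing in for HashEntry[] tables. It is not the JDK implementation; what it shows is that creating any segment copies the full table length of segment 0, no matter how few entries will go into it.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical model of JDK 7's lazy segment creation (not JDK code):
// segment 0 is allocated eagerly; other segments copy its table length on first use.
public class LazySegmentsDemo {
    static final class LazySegments {
        private final AtomicReferenceArray<Object[]> segments;

        LazySegments(int segmentCount, int cap) {
            segments = new AtomicReferenceArray<>(segmentCount);
            segments.set(0, new Object[cap]); // like segments[0] in the constructor
        }

        Object[] ensureSegment(int k) {
            Object[] seg = segments.get(k);
            if (seg == null) {
                int cap = segments.get(0).length; // segment 0 is the prototype
                Object[] tab = new Object[cap];   // full-size table, even for one entry
                // Only one thread wins the CAS; a loser reads the winner's table.
                if (segments.compareAndSet(k, null, tab)) {
                    seg = tab;
                } else {
                    seg = segments.get(k);
                }
            }
            return seg;
        }

        // Total number of table slots allocated across all created segments.
        long allocatedSlots() {
            long total = 0;
            for (int i = 0; i < segments.length(); i++) {
                Object[] seg = segments.get(i);
                if (seg != null) total += seg.length;
            }
            return total;
        }
    }

    public static void main(String[] args) {
        LazySegments table = new LazySegments(16, 4);
        System.out.println("after construction: " + table.allocatedSlots()); // 4
        table.ensureSegment(3);
        table.ensureSegment(7);
        table.ensureSegment(3); // already created, no new allocation
        System.out.println("after touching segments 3 and 7: " + table.allocatedSlots()); // 12
    }
}
```

With a prototype length of 4, touching two more segments brings the total to 12 slots. With the 67,108,864-slot prototype from this test, every new segment costs another 67,108,864 slots.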

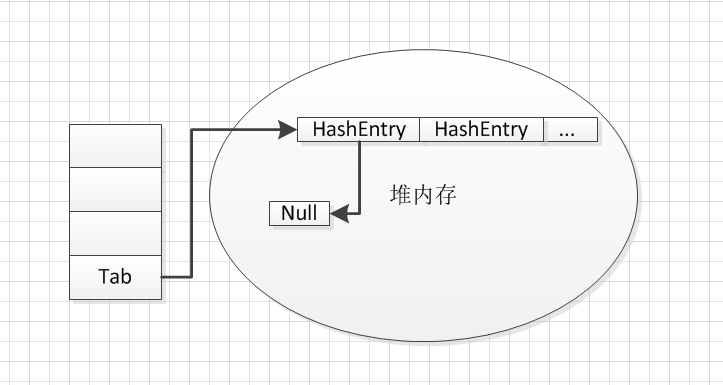

6. At this point the cause can be pinned down: putting an element into a segment that has not been created yet allocates a new HashEntry array of length 67108864, and that array occupies 67108864*4 bytes = 256M, which matches the test results above. Many readers may wonder why an array that holds nothing takes up so much space. Look at how the array is initialized: new HashEntry[cap] allocates, in heap memory, an array of 67108864 HashEntry references, and each reference occupies 4 bytes even though every element is null. (The 4-byte figure assumes compressed references or a 32-bit JVM; uncompressed 64-bit references take 8 bytes each, which doubles the total.)
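The arithmetic in step 6 can be reproduced in a few lines. This sketch counts only the reference slots and ignores the small array header; the 4-byte figure assumes compressed references (or a 32-bit JVM), and the 8-byte figure shows the uncompressed 64-bit case. TableFootprint is a hypothetical name.

```java
public class TableFootprint {
    static final long MIB = 1024L * 1024L;

    // Bytes used by the reference slots of one table, excluding the array header.
    // refBytes is 4 with compressed references (or on a 32-bit JVM), 8 without.
    static long tableBytes(long length, int refBytes) {
        return length * refBytes;
    }

    public static void main(String[] args) {
        long cap = 67_108_864L; // per-segment table length computed in step 4
        int segments = 16;      // default concurrencyLevel
        System.out.println("one table, 4-byte refs: " + tableBytes(cap, 4) / MIB + " MiB"); // 256
        System.out.println("one table, 8-byte refs: " + tableBytes(cap, 8) / MIB + " MiB"); // 512
        System.out.println("all " + segments + " tables, 4-byte refs: "
                + segments * tableBytes(cap, 4) / MIB + " MiB"); // 4096
    }
}
```

At 4 bytes per reference, populating all 16 segments would need about 4 GiB for the tables alone, far beyond the -Xmx512m heap used in the test; even a second 256 MiB table does not fit next to the one already held by Segment[0].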

Summary

1. When creating a ConcurrentHashMap, specify reasonable initialization parameters. (In a small test of my own, specifying initialization parameters did not produce any noticeable performance improvement.)