HBase is a distributed, scalable big-data store: a column-oriented storage system built on HDFS.

principle

Features: Column Store

- Features:

- Large : A table can have hundreds of millions of rows and hundreds of columns (each Region of HBase has very few columns).

- Column-oriented : Storage and permission control are organized by column family, and each column family can be retrieved independently.

- Sparse : For empty (NULL) columns, no storage space is occupied. Therefore, tables can be designed to be very sparse.

- No schema : each row has a primary key that can be sorted and as many columns as you want, columns can be dynamically added as needed, and different rows in the same table can have distinct columns.

- Data multi-version : Each cell can hold multiple versions of its data. By default the version number is assigned automatically and is the timestamp at which the cell was inserted.

- Single data type : All data in HBase is stored as untyped byte arrays (in practice often treated as strings).
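The features above (sparse, schema-free, multi-version, untyped) can be illustrated with a small in-memory model. This is only a sketch under stated assumptions: `SparseTable` and its methods are hypothetical names invented for illustration and are not part of the HBase API.

```python
import time
from collections import defaultdict


class SparseTable:
    """Toy model: row key -> column ("family:qualifier") -> {timestamp: value}."""

    def __init__(self):
        # Only cells that were actually written occupy space (sparse storage).
        self.rows = defaultdict(lambda: defaultdict(dict))

    def put(self, row, column, value, ts=None):
        # By default the version number is the insertion timestamp (milliseconds).
        if ts is None:
            ts = int(time.time() * 1000)
        self.rows[row][column][ts] = value

    def get(self, row, column, ts=None):
        """Return the newest version, or the newest one not later than ts."""
        versions = self.rows.get(row, {}).get(column, {})
        candidates = [t for t in versions if ts is None or t <= ts]
        return versions[max(candidates)] if candidates else None

    def columns(self, row):
        """Columns present in this row; different rows may have different columns."""
        return sorted(self.rows.get(row, {}))


table = SparseTable()
table.put(b"user1", "info:name", b"Ann", ts=1)
table.put(b"user1", "info:name", b"Anna", ts=2)          # second version of the same cell
table.put(b"user2", "info:email", b"bob@example.com", ts=1)  # a column user1 does not have
```

Values are plain `bytes` here, mirroring the point that cells carry no type information.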

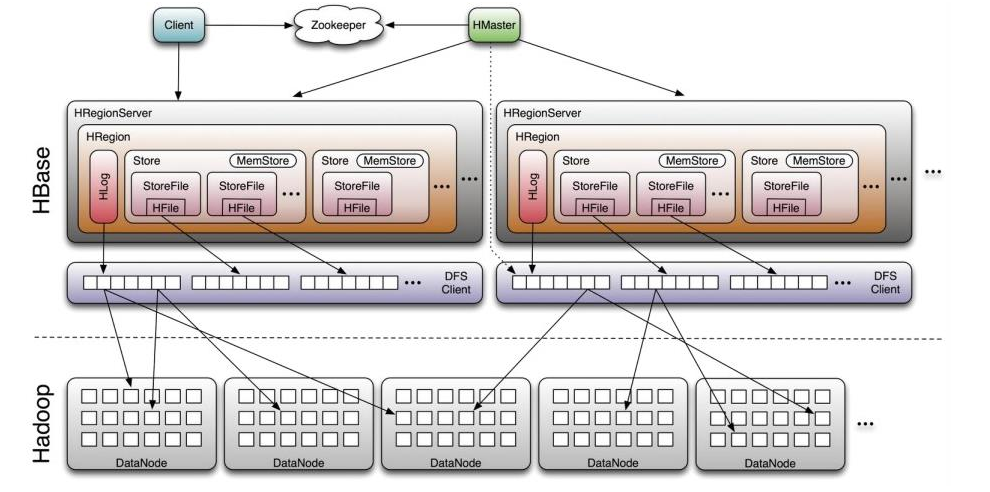

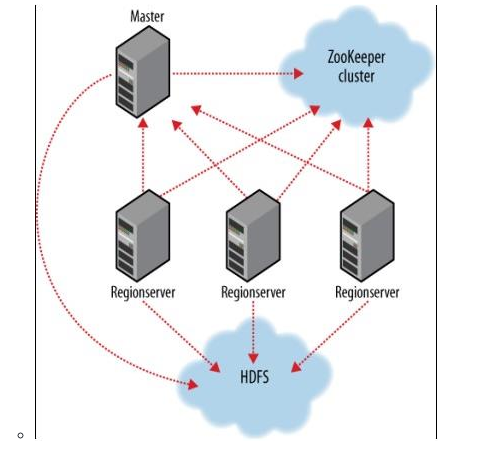

Overall architecture

composition

- client : Contains the interface to access HBase, and maintains the cache to speed up access to HBase.

- ZK : The master and the Region Servers register with ZooKeeper when they start (service discovery).

- Guarantees that the cluster has exactly one active master at any time.

- Stores the addressing entry point for all Regions.

- Monitors Region Servers going online and offline in real time and notifies the master.

- Stores the HBase schema and table metadata.

- HMaster:

- Manages users' create, delete, and alter operations on tables, and assigns Regions to Region Servers.

- Balances load across Region Servers.

- Detects failed Region Servers and reassigns the Regions they hosted.

- Garbage-collects obsolete files on HDFS.

- Handles schema update requests.

- Assigns the new Regions after a Region split.

- Region Server:

- Maintains the Regions the master assigns to it and handles I/O requests for those Regions.

- Splits Regions that grow too large at runtime.

- The client first obtains the Region Server's address through ZooKeeper and the catalog tables (the master is not on the read/write path), then reads and writes directly against that Region Server.

- HRegion:

- A table is divided into multiple Regions by row. The Region is the smallest unit of distributed storage and load balancing in HBase: different Regions can live on different Region Servers, but a single Region is never spread across multiple servers.

- Because a table is partitioned by row, every row belongs to exactly one Region. The client addresses a cell by row, column, and timestamp on the Region Server hosting that Region; rows that live in different Regions are fetched from their respective Region Servers and assembled on the client.

- Each region is identified by the following information:

- key : <table name, startRowKey, creation time>

- The endRowKey of the Region is recorded in the catalog tables (-ROOT- and .META.).

- Store:

- Each Region consists of one or more stores.

- HBase keeps the data of each column family in its own Store, so a Region has as many Stores as the table has column families.

- MemStore:

- Holds the KeyValues of recently written data in memory.

- When the MemStore reaches a size threshold (64 MB by default), a background thread takes a snapshot of the MemStore and flushes it to a StoreFile.

- StoreFile : Once the in-memory data of the MemStore has been written to a file, that file is a StoreFile. Underneath, a StoreFile is stored in HFile format.

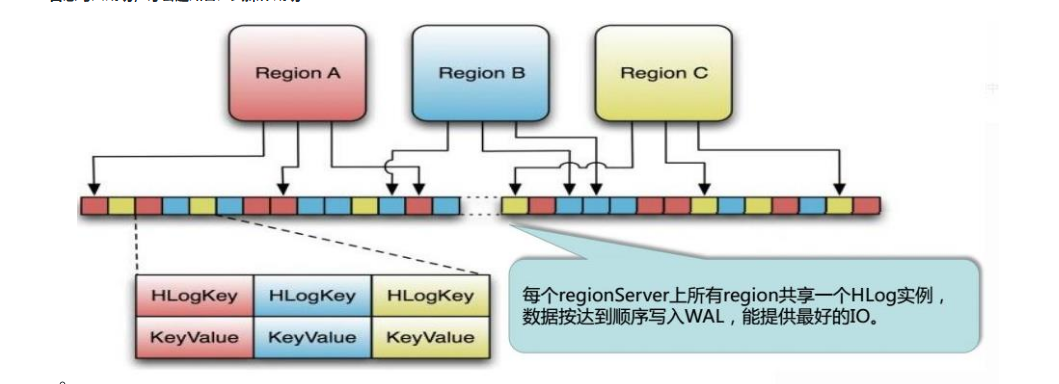
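The MemStore-to-StoreFile flush described above can be sketched as follows. This is a simplified model, not HBase code: the `Store` class is hypothetical, sizes are counted as key plus value bytes, and the flush runs synchronously instead of on a background thread.

```python
class Store:
    """Toy Store: one in-memory MemStore plus a list of immutable StoreFiles."""

    def __init__(self, flush_threshold_bytes=64 * 1024 * 1024):
        self.flush_threshold_bytes = flush_threshold_bytes
        self.memstore = {}        # key -> value, held in memory
        self.memstore_size = 0    # approximate size in bytes
        self.store_files = []     # each flush appends one sorted, immutable file

    def put(self, key, value):
        old = self.memstore.get(key)
        if old is not None:
            self.memstore_size -= len(key) + len(old)
        self.memstore[key] = value
        self.memstore_size += len(key) + len(value)
        if self.memstore_size >= self.flush_threshold_bytes:
            self.flush()

    def flush(self):
        if not self.memstore:
            return
        # Take a snapshot and swap in an empty MemStore so writes can continue.
        snapshot, self.memstore, self.memstore_size = self.memstore, {}, 0
        # The StoreFile is written in sorted key order and never modified again.
        self.store_files.append(tuple(sorted(snapshot.items())))


store = Store(flush_threshold_bytes=10)   # tiny threshold so the demo flushes
store.put(b"b", b"5678")                  # 5 bytes, below the threshold
store.put(b"a", b"1234")                  # reaches 10 bytes, triggers a flush
```

After the second `put`, the MemStore is empty and `store.store_files` holds one file with the two entries sorted by key.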

- HLog (WAL, write-ahead log) : Used for disaster recovery. The HLog records every data change, so if a Region Server goes down its data can be recovered from the log. This is similar to the AOF in Redis.

- The HLog file is an ordinary Hadoop Sequence File.

- The key of the Sequence File is an HLogKey object, which records where the written data belongs: besides the table and Region names, it includes a sequence number and a timestamp. The timestamp is the write time; the sequence number starts at 0, or at the last sequence number persisted to the file system. The value of the Sequence File is an HBase KeyValue object, corresponding to the KeyValue in an HFile.

- LogFlusher : Calls sync() to flush buffered log data through the SequenceFileLogWriter implementation.

- Log Roller:

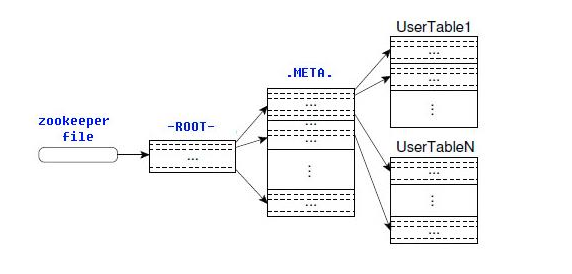
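To make the role of the HLog concrete, here is a minimal write-ahead-log sketch. It assumes a plain Python list in place of the Hadoop Sequence File, and the names `WriteAheadLog` and `RegionServerSketch` are hypothetical. Each log key mimics the HLogKey fields listed above (table, Region, sequence number, timestamp).

```python
class WriteAheadLog:
    """Append-only log; a list stands in for the Sequence File."""

    def __init__(self):
        self.entries = []
        self.next_seq = 0   # sequence numbers start at 0

    def append(self, table, region, row, value, timestamp):
        key = (table, region, self.next_seq, timestamp)   # HLogKey-like tuple
        self.entries.append((key, (row, value)))
        self.next_seq += 1
        return key[2]


class RegionServerSketch:
    def __init__(self, wal):
        self.wal = wal
        self.memstore = {}

    def put(self, table, region, row, value, timestamp):
        # Log first; only then apply the change in memory and acknowledge.
        self.wal.append(table, region, row, value, timestamp)
        self.memstore[row] = value
        return True

    def crash(self):
        self.memstore = {}   # in-memory data is lost

    def recover(self):
        # Replay the log in sequence-number order to rebuild the MemStore.
        for _key, (row, value) in sorted(self.wal.entries, key=lambda e: e[0][2]):
            self.memstore[row] = value


wal = WriteAheadLog()
server = RegionServerSketch(wal)
server.put("t1", "region-a", b"row1", b"v1", timestamp=100)
server.put("t1", "region-a", b"row1", b"v2", timestamp=101)
server.crash()
lost = dict(server.memstore)
server.recover()
```

Because the log is written before the acknowledgement, every acknowledged write can be replayed after a crash.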

HRegion positioning and split

- -ROOT- table and .META. table (both tables are themselves stored in Regions)

- Region metadata is stored in the .META. table. As the number of Regions grows, the .META. table grows too and is itself split into multiple Regions. To locate each Region of the .META. table, the metadata of those .META. Regions is stored in the -ROOT- table, and the location of the -ROOT- table is recorded in ZooKeeper.

- The -ROOT- table is never split; it has exactly one Region, which guarantees that any Region can be located in at most three hops.

- The client caches the location information it looks up, and the cache does not expire on its own. In the worst case, when every cached entry is stale, locating the correct Region takes 6 network round trips: three to discover that the cached entries are invalid and three to fetch the current location information.

- Lookup logic:

- Get the location of the -ROOT- table from the znode /hbase/root-region-server in ZooKeeper. The -ROOT- table has only one Region.

- Use the -ROOT- table to find which **.META.** Region holds the entry for the target Region.

- Use the **.META. table** to find the location of the requested user-table Region.

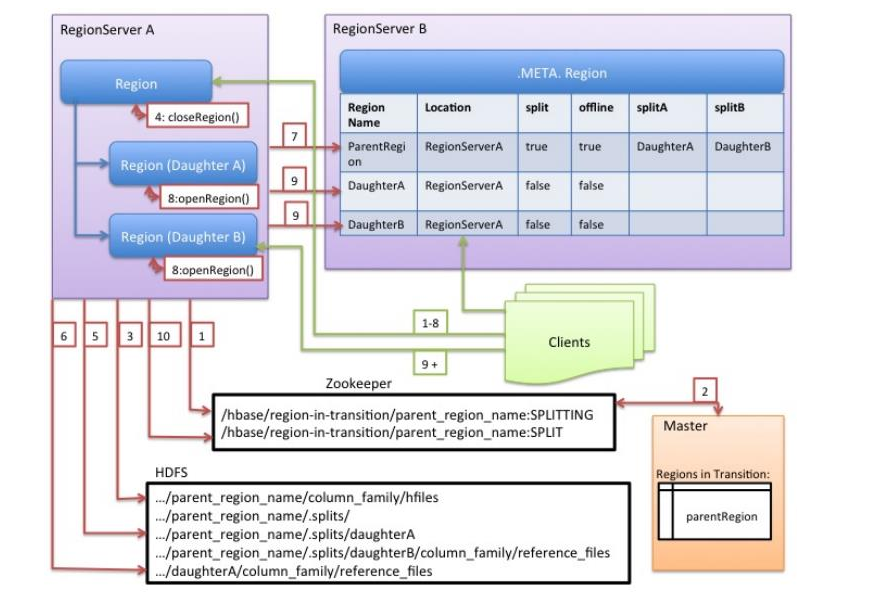
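The three-hop lookup and the client-side location cache can be sketched as below. This is a simplified model under several assumptions: `Catalog` and `Client` are hypothetical names, ZooKeeper is a plain dict, catalog keys are bare start row keys (real catalog keys also carry the table name), and the Region Server names `rs1` and `rs2` are made up.

```python
import bisect


class Catalog:
    """Entries sorted by start row key; stands in for -ROOT- or one .META. Region."""

    def __init__(self, entries):
        self.entries = sorted(entries, key=lambda e: e[0])
        self.start_keys = [start for start, _ in self.entries]

    def lookup(self, row_key):
        # Pick the last entry whose start key is <= row_key.
        i = bisect.bisect_right(self.start_keys, row_key) - 1
        return self.entries[max(i, 0)]


class Client:
    def __init__(self, zookeeper):
        self.zookeeper = zookeeper
        self.cache = []   # cached (start_key, end_key, server) tuples
        self.hops = 0     # network round trips spent on lookups

    def locate(self, row_key):
        for start, end, server in self.cache:
            if start <= row_key and (end is None or row_key < end):
                return server                        # cache hit: no network hop
        root = self.zookeeper["root"]                # hop 1: ZooKeeper -> -ROOT-
        _, meta_region = root.lookup(row_key)        # hop 2: -ROOT- -> .META. Region
        _, region = meta_region.lookup(row_key)      # hop 3: .META. -> user Region
        self.hops += 3
        self.cache.append(region)
        return region[2]


# One .META. Region describing two user Regions: ["", "m") on rs1, ["m", end) on rs2.
meta = Catalog([(b"", (b"", b"m", "rs1")), (b"m", (b"m", None, "rs2"))])
root = Catalog([(b"", meta)])
client = Client({"root": root})
first = client.locate(b"apple")     # three hops, result cached
second = client.locate(b"banana")   # same Region, served from the cache
third = client.locate(b"zebra")     # different Region, three more hops
```

The sketch leaves out cache invalidation, which in the description above accounts for the extra three round trips in the worst case.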

Compact && Split

logical storage

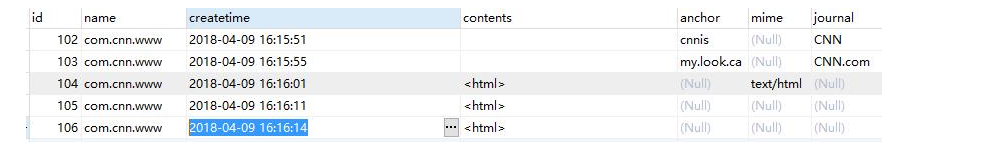

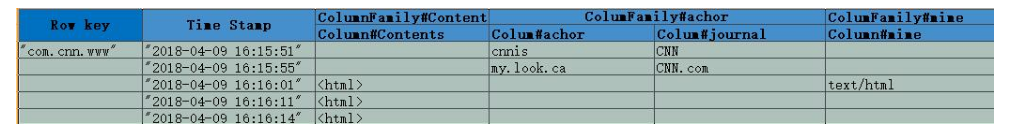

- Traditional relational databases record log data:

- HBase column-oriented storage: old data is never overwritten; new versions are only appended.

- Logical structure:

- Row key : A byte array (maximum length 64 KB) that serves as the primary key of each record. Rows can be accessed in three ways:

- Access by a single Row key

- Range scan over a span of Row keys

- Full table scan

- The Row key can be any string (up to 64 KB; in practice usually 10~100 bytes). Internally HBase stores the Row key as a byte array.

- Column Family : A column family, with a name, containing one or more related columns

- Each Column Family is stored in its own files on HDFS, and null values are not stored.

- Column : A column belongs to a column family and is named familyName:columnName. Columns can be added dynamically for each record.

- A storage unit determined by Row and Column is called a Cell. Each Cell holds multiple versions of the same data, indexed by timestamp, which is a 64-bit integer.

- Version Number : Of type long. The default value is the system timestamp, and it can also be set by the user.

- Value (Cell) : Byte array.

- A Cell is the unit uniquely identified by {row key, column(=<family>+<label>), version} . Cell values are untyped and stored as raw bytes.
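Row-key access as described in the logical structure can be sketched with a sorted list of byte-string keys. HBase orders rows by the byte order of their keys; `SortedRows` is a hypothetical class used only for illustration.

```python
import bisect


class SortedRows:
    """Rows kept in byte order of their row keys."""

    def __init__(self):
        self.keys = []   # sorted row keys
        self.data = {}   # row key -> row content

    def put(self, row_key, value):
        if row_key not in self.data:
            bisect.insort(self.keys, row_key)
        self.data[row_key] = value

    def get(self, row_key):
        """Access by a single row key."""
        return self.data.get(row_key)

    def scan(self, start=b"", stop=None):
        """Yield rows with start <= key < stop; no bounds means a full table scan."""
        i = bisect.bisect_left(self.keys, start)
        for key in self.keys[i:]:
            if stop is not None and key >= stop:
                break
            yield key, self.data[key]


rows = SortedRows()
for k in (b"row10", b"row2", b"row1"):
    rows.put(k, b"value-of-" + k)

in_range = [k for k, _ in rows.scan(b"row1", b"row2")]
everything = [k for k, _ in rows.scan()]
```

Note that `b"row10"` sorts before `b"row2"` because comparison is byte by byte, which is why numeric parts of row keys are often zero-padded in practice.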

physical storage

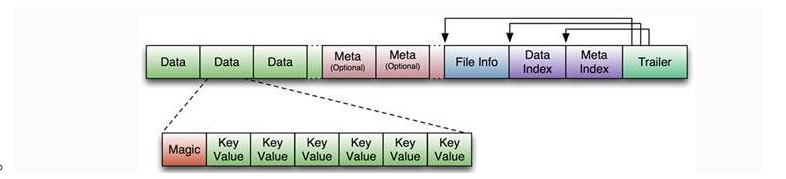

HFile : The basic file format of HBase; all HBase data is ultimately stored in HFiles. If StoreFile is the smallest logical unit of HBase data storage, then HFile plays a role similar to a Linux file system: it maps that smallest logical unit onto physical disk storage.

- Trailer : Holds pointers to the starting offsets of the other blocks.

- File Info : Records meta information about the file.

- Data Block : The basic unit of HBase I/O. To improve efficiency, the HRegionServer has an LRU-based block cache.

- The size of each Data Block can be set per table at creation time (64 KB by default). Large blocks favor sequential scans; small blocks favor random reads.

- Apart from the Magic at the beginning, each Data Block is a sequence of KeyValue pairs laid end to end. The Magic is a marker value used to detect data corruption.

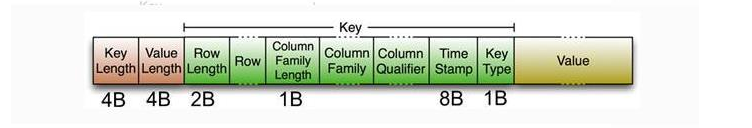

- Each KeyValue starts with two fixed-length numbers giving the length of the key and the length of the value.

- key : [Length of RowKey]-[RowKey]-[Length of ColumnFamily]-[ColumnFamily]-[Qualifier]-[TimeStamp]-[Key Type] . The Key Type is Put or Delete.
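The KeyValue layout above can be sketched with Python's `struct` module. The field order follows the text; the field widths (4-byte key and value lengths, 2-byte row length, 1-byte family length, 8-byte timestamp, 1-byte key type) and the type codes 4 for Put and 8 for Delete are assumptions added for this example, and the function names are hypothetical.

```python
import struct

KEY_TYPE_PUT = 4      # assumed type code
KEY_TYPE_DELETE = 8   # assumed type code


def encode_key_value(row, family, qualifier, timestamp, key_type, value):
    """Serialize one KeyValue: two lengths, then the key, then the value."""
    key = (
        struct.pack(">H", len(row)) + row           # row length + row key
        + struct.pack(">B", len(family)) + family   # family length + family
        + qualifier                                 # qualifier (length is implied)
        + struct.pack(">qB", timestamp, key_type)   # timestamp + key type
    )
    return struct.pack(">ii", len(key), len(value)) + key + value


def decode_key_value(buf):
    """Inverse of encode_key_value for a buffer holding exactly one KeyValue."""
    key_len, value_len = struct.unpack_from(">ii", buf, 0)
    pos = 8
    key_end = pos + key_len
    (row_len,) = struct.unpack_from(">H", buf, pos)
    pos += 2
    row = buf[pos:pos + row_len]
    pos += row_len
    (family_len,) = struct.unpack_from(">B", buf, pos)
    pos += 1
    family = buf[pos:pos + family_len]
    pos += family_len
    # The qualifier fills the space before the 9 trailing bytes (timestamp + type).
    qualifier = buf[pos:key_end - 9]
    timestamp, key_type = struct.unpack_from(">qB", buf, key_end - 9)
    value = buf[key_end:key_end + value_len]
    return row, family, qualifier, timestamp, key_type, value


encoded = encode_key_value(b"r1", b"cf", b"q", 1700000000000, KEY_TYPE_PUT, b"v")
decoded = decode_key_value(encoded)
```

The qualifier needs no length field of its own because the key length and the fixed-size trailer determine where it ends.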

HLog File : The storage format of HBase's WAL (write-ahead log), which is physically a Hadoop Sequence File. It logs the content of insert and delete operations; the client is told that an operation succeeded only after the log entry has been written successfully.

- The key of the Sequence File is an HLogKey object. HLogKey records where the written data belongs: besides the table and Region names, it includes a sequence number and a timestamp.

- The sequence number starts at 0, or at the most recent sequence number stored in the file system.

- The timestamp is the write time.

read and write

- write:

- The Client is directed through ZooKeeper:

- RowKey exists : The Client looks up the RowKey in the -ROOT- and .META. tables to find the Region Server responsible for it, then sends a PUT request to that Region Server. The put is first placed in the client-side WriteBuffer; for batched writes, the buffer is submitted automatically once it is full.

- RowKey does not exist : The data is loaded directly into the corresponding Region through the HMaster.

- The data in the RegionServer is written to the MemStore of the Region until the MemStore reaches the preset threshold.

- The data in the MemStore is flushed into a StoreFile

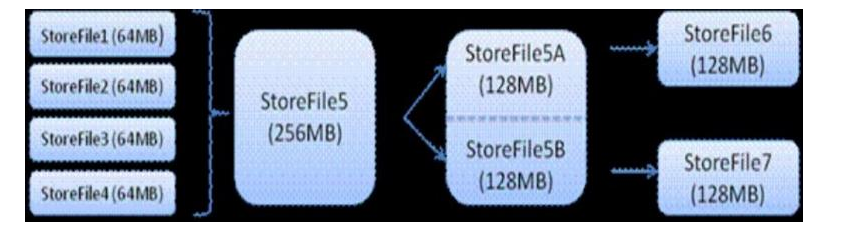

- As StoreFiles accumulate and their number reaches a threshold, a Compact operation is triggered. It merges multiple StoreFiles into one and, at the same time, merges versions and removes deleted data.

- Through repeated Compact operations, StoreFiles grow larger and larger.

- When the size of a StoreFile exceeds a threshold, a Split operation is triggered and the current Region is split into two new Regions. The parent Region goes offline, and the HMaster assigns the two new Regions to Region Servers, so the load of the original Region is spread across two Regions.
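The Compact and Split steps can be sketched as two small functions. This is a rough model under stated assumptions: a StoreFile is a list of `(row, column, timestamp, kind, value)` tuples, a delete marker hides itself and all older versions of the same cell, size is measured in number of cells, and the split point is the middle row key. The function names are hypothetical.

```python
def compact(store_files, max_versions=1):
    """Merge StoreFiles into one, dropping deleted cells and excess versions."""
    cells = [cell for store_file in store_files for cell in store_file]
    # Order by (row, column), newest timestamp first within each cell.
    cells.sort(key=lambda c: (c[0], c[1], -c[2]))
    merged, current, kept, deleted = [], None, 0, False
    for row, column, ts, kind, value in cells:
        if (row, column) != current:
            current, kept, deleted = (row, column), 0, False
        if deleted:
            continue              # older than a delete marker: drop
        if kind == "delete":
            deleted = True        # the marker itself is dropped as well
            continue
        if kept < max_versions:
            merged.append((row, column, ts, kind, value))
            kept += 1
    return merged


def split_region(cells, split_threshold):
    """Split into two halves at the middle row key once the threshold is exceeded."""
    rows = sorted({c[0] for c in cells})
    if len(cells) <= split_threshold or len(rows) < 2:
        return [cells]
    mid = rows[len(rows) // 2]
    return [[c for c in cells if c[0] < mid], [c for c in cells if c[0] >= mid]]


file1 = [(b"a", "cf:x", 1, "put", b"old"), (b"b", "cf:x", 1, "put", b"keep")]
file2 = [
    (b"a", "cf:x", 2, "put", b"new"),
    (b"c", "cf:x", 3, "delete", None),
    (b"c", "cf:x", 2, "put", b"gone"),
]
merged = compact([file1, file2])
daughters = split_region(merged, split_threshold=1)
```

In the demo, the older version of row `a` and the deleted row `c` disappear during compaction, and the remaining two rows end up in separate daughter Regions.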

- Read:

- The Client accesses ZooKeeper to find the **-ROOT- table and .META. table** information.

- It queries the **.META. table** for the Region that stores the target data and finds the corresponding Region Server.

- It fetches the requested data from that Region Server.

- The memory of a Region Server is divided into MemStore and BlockCache. MemStore mainly serves writes and BlockCache mainly serves reads. A read request first checks the MemStore; if the data is not there it checks the BlockCache; if it is still not found it reads from the StoreFile and puts the result into the BlockCache.
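The read order described above (MemStore, then BlockCache, then StoreFile) can be sketched as follows. This is a simplification: the `BlockCache` class here caches individual values in LRU order, whereas a real block cache holds whole data blocks, and StoreFiles are modeled as plain dicts. All names are hypothetical.

```python
from collections import OrderedDict


class BlockCache:
    """Small LRU cache: the least recently used entry is evicted first."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()

    def get(self, key):
        if key not in self.blocks:
            return None
        self.blocks.move_to_end(key)         # mark as most recently used
        return self.blocks[key]

    def put(self, key, value):
        self.blocks[key] = value
        self.blocks.move_to_end(key)
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used


def read(key, memstore, block_cache, store_files):
    """Return (value, where it was found)."""
    if key in memstore:
        return memstore[key], "memstore"
    cached = block_cache.get(key)
    if cached is not None:
        return cached, "blockcache"
    for store_file in reversed(store_files):   # newest StoreFile first
        if key in store_file:
            block_cache.put(key, store_file[key])
            return store_file[key], "storefile"
    return None, "miss"


memstore = {b"k1": b"fresh"}
store_files = [{b"k2": b"old"}, {b"k2": b"new", b"k3": b"x"}]
cache = BlockCache(capacity=1)

r1 = read(b"k1", memstore, cache, store_files)
r2 = read(b"k2", memstore, cache, store_files)
r3 = read(b"k2", memstore, cache, store_files)
r4 = read(b"k3", memstore, cache, store_files)   # evicts k2 from the 1-entry cache
r5 = read(b"k2", memstore, cache, store_files)
```

The second read of `k2` is served from the cache, and after `k3` evicts it the next read goes back to the StoreFile.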