Reprint address: http://www.raychase.net/3788

Reprinted by: "The Nag of the Four Fires"

Performance analysis and tuning of Spark is an interesting subject, so here is another article on it. The main topic is shuffle, although a few other coding tricks come up along the way.

I previously wrote an article comparing performance optimization across several scenarios: portals, web services, and Spark jobs. Optimizing Spark has some particular characteristics. Real-time response is generally not a concern, because the data we process with Spark usually feeds asynchronous results. The data volume is generally large, otherwise there would be no need to run such a heavyweight tool on a cluster. We all know there is no silver bullet, but each optimization scenario has its own "big boss", and once that boss is caught and dealt with, a large share of the problems goes away. For a portal it is static page generation, and for a web service it is high concurrency (these two may not hold in general; they are just the experience I took from the projects I worked on). For Spark, the big boss is shuffle.

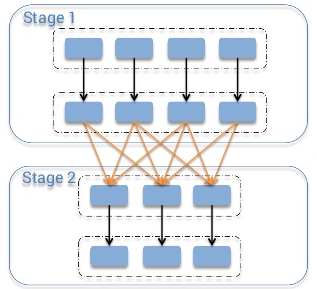

First, it is necessary to clarify what shuffle is. Shuffle is the transition from the map stage to the reduce stage: when the output of the map tasks becomes the input of the reduce tasks, there is no one-to-one correspondence between nodes. The output of slave A, which did the map work, may be sent to reduce nodes A, B, C, D ... X, Y, Z. As the literal meaning of "shuffle" suggests, the map output is shuffled and then routed to different reduce nodes by some routing algorithm (for example, a hash of the key). (The picture above is from "Spark Architecture: Shuffle".)

Shuffle is the big boss of a Spark job because Spark's own computation is usually done in memory. Take an RDD of pairs (String, Seq), where the key is a string and the value is a Seq. If you only apply one-to-one map operations to the values, for example (1) compute the length of the Seq and (2) append that length to the Seq as a new element, both steps can be completed locally and in memory. No data has to be fetched from other nodes, so execution is very fast. If a large RDD is shuffled, however, the network transfer and the serialization and deserialization of the data add heavy network, disk I/O and CPU overhead. The performance penalty is huge.
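The two local steps described above can be sketched as follows. This is a minimal illustration that assumes the values are `Seq[Int]`; the object and method names are hypothetical and not from the original article.

```scala
import org.apache.spark.rdd.RDD

object LocalMapSketch {
  // Appends each sequence's own length to it. The key is untouched, so every
  // partition is processed where it already lives and no shuffle is needed.
  def appendLength(rdd: RDD[(String, Seq[Int])]): RDD[(String, Seq[Int])] =
    rdd.mapValues(seq => seq :+ seq.length)
}
```

In a spark-shell session this could be called as `LocalMapSketch.appendLength(sc.parallelize(Seq(("a", Seq(7, 8)))))`.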

To reduce the overhead of shuffle, there are two main ideas:

- Reduce the number of shuffles, try not to change the key, and complete the data processing locally;

- Reduce the data size of shuffle.

First deduplicate, then merge

Suppose there are two large RDDs, A and B, each containing many duplicates, and we want to merge them and remove the duplicates:

```scala
A.union(B).distinct()
```

This is correct, but if you deduplicate each RDD first, then merge, then deduplicate again, the shuffle overhead can be greatly reduced (note that Spark's union behaves like "union all" in Oracle: it does not deduplicate):

```scala
A.distinct().union(B.distinct()).distinct()
```

It looks more complicated, but when I solved this problem, the second method cut the running time from 3 hours to 20 minutes.

If an intermediate RDD is used multiple times, you can explicitly call cache() or persist() to tell Spark to keep it. Even if you do not, Spark still keeps some intermediate shuffle data (the following is from the official guide):

Spark also automatically persists some intermediate data in shuffle operations (e.g. reduceByKey), even without users calling persist. This is done to avoid recomputing the entire input if a node fails during the shuffle. We still recommend users call persist on the resulting RDD if they plan to reuse it.
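An explicit persist call might look like the sketch below. It assumes an RDD of words that is reused by two actions; `PersistSketch` and `stats` are hypothetical names, and the choice of `MEMORY_AND_DISK` is only one reasonable option.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.storage.StorageLevel

object PersistSketch {
  // Returns (number of distinct words, total occurrences), both computed
  // from one shared intermediate RDD that is stored after its first use.
  def stats(words: RDD[String]): (Long, Long) = {
    val counts = words.map(w => (w, 1L)).reduceByKey(_ + _)
    // cache() is shorthand for persist(StorageLevel.MEMORY_ONLY);
    // MEMORY_AND_DISK spills partitions that do not fit in memory.
    counts.persist(StorageLevel.MEMORY_AND_DISK)
    val distinctWords = counts.count()                 // first action: computes and stores
    val total = counts.map(_._2).fold(0L)(_ + _)       // second action: reuses stored data
    counts.unpersist()
    (distinctWords, total)
  }
}
```

Without the persist call, the second action would recompute `counts` from its lineage, although Spark could still reuse the shuffle files mentioned in the quote.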

A large amount of data is not necessarily slow. Because Spark operates on data in memory, even a complex map operation can run quickly. Once a shuffle is involved, though, network transfer and serialization come into play, and the job may become very slow.

Operations such as filter serve a similar purpose: "downsize" the large RDD first, then do the other operations.
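As a hedged illustration of "downsize first", the sketch below filters by key before an aggregation that shuffles. The names `FilterFirstSketch`, `totals` and `keep` are hypothetical; filtering after `reduceByKey` would give the same result here, but every discarded record would have taken part in the shuffle first.

```scala
import org.apache.spark.rdd.RDD

object FilterFirstSketch {
  // Sums values per key, but only for the keys in `keep`.
  // The filter runs before reduceByKey, so records for other keys
  // are dropped locally and never reach the shuffle.
  def totals(rdd: RDD[(String, Int)], keep: Set[String]): RDD[(String, Int)] =
    rdd
      .filter { case (key, _) => keep.contains(key) }
      .reduceByKey(_ + _)
}
```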

mapValues is better than map

When a map does not change the key, use mapValues instead. mapValues keeps the RDD's partitioner, so Spark knows the data is still partitioned by key and later key-based operations do not have to shuffle it again:

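```scala
// Lowercase names bind new variables; capitalized names in a Scala pattern
// would be compared against existing values instead.
A.map { case (a, ((b, c), (d, e))) => (a, (b, c, e)) }
```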

Change it to:

```scala
A.mapValues { case ((b, c), (d, e)) => (b, c, e) }
```
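The difference can be observed through the RDD's `partitioner` field. The sketch below is a minimal check, assuming an RDD that has been explicitly hash-partitioned; `PartitionerSketch` and `survives` are hypothetical names.

```scala
import org.apache.spark.HashPartitioner
import org.apache.spark.rdd.RDD

object PartitionerSketch {
  // Returns (partitioner kept after mapValues, partitioner kept after map).
  def survives(rdd: RDD[(String, Int)]): (Boolean, Boolean) = {
    val partitioned = rdd.partitionBy(new HashPartitioner(4))
    // mapValues cannot change keys, so Spark keeps the partitioner.
    val viaMapValues = partitioned.mapValues(_ + 1)
    // map may change keys, so Spark drops the partitioner even if the keys stay the same.
    val viaMap = partitioned.map { case (k, v) => (k, v + 1) }
    (viaMapValues.partitioner.isDefined, viaMap.partitioner.isDefined)
  }
}
```

A key-based operation such as `reduceByKey` on `viaMapValues` can reuse the existing partitioning, while the same operation on `viaMap` has to shuffle.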

Use broadcast + filter instead of join

This optimization is a powerful tool for one specific scenario: joining a large RDD A with a small RDD B. For example, suppose there are two RDDs:

- The structure of A is (name, age, sex); it represents everyone in the country.

- The structure of B is (age, title); it represents the mapping "age -> title". For example, age 60 has the title "Sixty Years" and age 70 has the title "Old Years". This RDD is obviously very small, because human ages fall between 0 and 200, and some ages have no title at all.

Now I want to find the people who have a title among everyone in the country. If this is written directly as:

```scala
A.map { case (name, age, sex) => (age, (name, sex)) }
  .join(B)
  .map { case (age, ((name, sex), title)) => (name, age, sex) }
```

You can imagine that the huge A is broken up and redistributed across the nodes during execution. Worse, another map is needed afterwards to restore the original (name, age, sex) structure, and because that map changes the key, the result no longer carries a partitioner, so any later key-based operation has to shuffle the data again. That is crazy. But if you write it like this:

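```scala
// collectAsMap builds an age -> title map on the master; broadcast ships it to every node once.
val b = sc.broadcast(B.collectAsMap)
// b.value is the broadcast map; keep only the people whose age has a title.
A.filter { case (name, age, sex) => b.value.contains(age) }
```

There is not a single shuffle: A stays where it is and simply waits for the whole of B to be distributed to it.

In addition, Spark SQL has BroadcastHashJoin built in, which likewise broadcasts the small side of the join.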
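In Spark SQL the same idea can be requested with the `broadcast` hint from `org.apache.spark.sql.functions`. The sketch below assumes the Spark 2.x or later DataFrame API and two DataFrames whose column names mirror A and B; `BroadcastJoinSketch` and `titled` are hypothetical names.

```scala
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.functions.broadcast

object BroadcastJoinSketch {
  // people: columns (name, age, sex); titles: columns (age, title).
  // broadcast() hints that `titles` is small enough to copy to every
  // executor, so the large `people` side does not need to be shuffled.
  def titled(people: DataFrame, titles: DataFrame): DataFrame =
    people.join(broadcast(titles), "age").select("name", "age", "sex")
}
```

Spark SQL may also choose a broadcast join on its own when it estimates one side to be smaller than `spark.sql.autoBroadcastJoinThreshold`.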

Uneven shuffle

I ran into this problem at work: some data had to be converted into a huge RDD A. Its structure is (countryId, product), where the key is the country id and the value is the product details. During the shuffle, the hash algorithm picks the node according to the key, but the distribution of countryId was extremely uneven, with most products in the United States (countryId=1). In Ganglia we saw that the CPU of one slave was particularly high: all the computation was concentrated on that node.

Once the cause is found, the problem is easy to solve. Either avoid this shuffle, or improve the key so that the shuffle distributes evenly (for example, use the tuple of countryId and product name as the key, or even generate a random string).
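The effect of the key choice can be checked without running a job. The sketch below assumes Spark's `HashPartitioner`, which most pair operations use by default, and counts how many keys land in each partition; `SkewSketch` and `partitionSizes` are hypothetical names.

```scala
import org.apache.spark.HashPartitioner

object SkewSketch {
  // Maps partition index -> number of keys that hash into it.
  def partitionSizes[K](keys: Seq[K], numPartitions: Int): Map[Int, Int] = {
    val partitioner = new HashPartitioner(numPartitions)
    keys
      .groupBy(k => partitioner.getPartition(k))
      .map { case (index, ks) => (index, ks.size) }
  }
}
```

With `Seq.fill(100)(1)` as the keys (every product in countryId=1), all records fall into a single partition. With composite keys such as `(1, "product-1")`, `(1, "product-2")` and so on, the same records spread over several partitions.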

Identify which operations must be done on the master

If you want to print something to stdout:

```scala
A.foreach(println)
```

The intent is to print the contents of the RDD one by one, but nothing appears in the master's stdout, because this step runs on the slaves. Call collect first, and the contents are loaded into the master's memory and printed there:

```scala
A.collect.foreach(println)
```
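Since collect loads the entire RDD into the master's memory, a large RDD may not fit. One hedged alternative, when a sample is enough, is `take`, which brings back only the first `n` elements; `DriverPrintSketch` and `preview` are hypothetical names.

```scala
import org.apache.spark.rdd.RDD

object DriverPrintSketch {
  // Returns at most `n` elements to the master instead of the whole RDD.
  def preview[T](rdd: RDD[T], n: Int): Array[T] = rdd.take(n)
}
```

For example, `DriverPrintSketch.preview(A, 20).foreach(println)` prints twenty elements on the master.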

As another example, nested RDD operations usually have to be rewritten, because only the master can interpret and execute RDD operations; a slave can only process the tasks assigned to it. For example:

```scala
A.map { case (keyA, valueA) => doSomething(B.lookup(keyA).head, valueA) }
```

You can use join instead:

```scala
A.join(B).map { case (key, (valueA, valueB)) => doSomething(valueB, valueA) }
```

Replace groupByKey with reduceByKey

This one is a classic. reduceByKey performs the reduce operation on the local node first, that is, it reduces the amount of data as much as possible before the shuffle. groupByKey does not: it shuffles everything without any pre-processing. In some scenarios the two are not interchangeable, because reduceByKey requires the output value to have the same type as the input values, whereas groupByKey has no such requirement.
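For a case where the two are interchangeable, such as summing values per key, the contrast looks like the sketch below. `SumByKeySketch` and its method names are hypothetical.

```scala
import org.apache.spark.rdd.RDD

object SumByKeySketch {
  // Shuffles every (key, value) record, then sums on the reduce side.
  def withGroupByKey(rdd: RDD[(String, Int)]): RDD[(String, Int)] =
    rdd.groupByKey().mapValues(_.sum)

  // Sums within each partition first, so each partition sends
  // at most one record per key into the shuffle.
  def withReduceByKey(rdd: RDD[(String, Int)]): RDD[(String, Int)] =
    rdd.reduceByKey(_ + _)
}
```

Both methods return the same sums; only the amount of shuffled data differs.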

There are other similar xxxByKey operations that are usually better than groupByKey, such as foldByKey and aggregateByKey.
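aggregateByKey is useful when the result type differs from the value type, which reduceByKey does not allow. The sketch below computes a per-key average as a (sum, count) accumulator; `AverageByKeySketch` and `averages` are hypothetical names.

```scala
import org.apache.spark.rdd.RDD

object AverageByKeySketch {
  // Average value per key, built from a (sum, count) accumulator.
  def averages(rdd: RDD[(String, Int)]): RDD[(String, Double)] =
    rdd
      .aggregateByKey((0L, 0L))(
        // Fold one value into the partition-local accumulator.
        { case ((sum, n), v) => (sum + v, n + 1) },
        // Merge accumulators coming from different partitions.
        { case ((s1, n1), (s2, n2)) => (s1 + s2, n1 + n2) }
      )
      .mapValues { case (sum, n) => sum.toDouble / n }
}
```

As with reduceByKey, the first function runs before the shuffle, so only one small accumulator per key leaves each partition.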

Similarly, reduce can be replaced with treeReduce. It is mainly useful when a single reduce is expensive, and the scale of each reduce step can be controlled through the depth parameter of treeReduce.
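A minimal sketch of treeReduce is shown below, with a simple sum standing in for an expensive merge. `TreeReduceSketch` and `total` are hypothetical names.

```scala
import org.apache.spark.rdd.RDD

object TreeReduceSketch {
  // Combines partition results in several levels on the executors
  // instead of sending every partial result straight to the master.
  // `depth` is the suggested number of levels in the tree.
  def total(rdd: RDD[Long], depth: Int): Long =
    rdd.treeReduce(_ + _, depth)
}
```

The result is the same as with `rdd.reduce(_ + _)`; with many partitions, a larger depth means fewer partial results are merged at each step.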