Recycling recyclable waste is of great significance to the sustainable development of the national economy. At present, garbage must be sorted by hand before it can be recycled. An automatic classification method that raises recycling efficiency would bring both significant social benefits and large economic benefits. To improve the accuracy of recyclable household waste identification, researchers have applied image processing, machine learning, and related methods to automatically identify common household waste such as glass bottles, waste paper, cartons, and cans. Image processing is first used to extract features from garbage images; the resulting feature vectors are then classified with methods such as deep learning networks, support vector machines, K-nearest neighbor classifiers, and principal component analysis (PCA) [3].

Classifying and reusing recyclable domestic waste is an important route to a virtuous cycle in the national economy, and an efficient machine-vision classification algorithm is the key to intelligent garbage classification. This article proposes a transfer learning method based on a ResNet18 model pretrained on the ImageNet image dataset to classify and identify recyclable domestic waste. The existing recyclable domestic waste images are preprocessed by rotation, translation, and scaling, and manually divided into five categories: cardboard, waste paper, plastic, glass, and metal. In each category, 70% of the samples are randomly selected as the training set and the remaining 30% are used as the test set. Under the MATLAB deep learning framework, the pretrained ResNet18 model is fine-tuned on the training set to form a new ResNet18 classification model. Experimental results on the test set show that the new model reaches a classification accuracy of 93.67% and that the training speed of the model is improved.

1. Data set

The image dataset used in this study is based on the garbage image dataset created by Gary Thung and Mindy Yang. That dataset consists of 1,989 images in 5 categories: glass, paper, plastic, metal, and cardboard. All images are resized to 512×384. Because the number of images is small, 2,485 images of the same types were extracted from the waste-pictures dataset on the Kaggle website, giving a final experimental dataset of 4,474 images. A sample image from each category is shown in the figure. These images capture the state of domestic waste at the time of recycling, such as deformed bottles and wrinkled paper. Each class contains about 500-900 images, the lighting and pose differ from photo to photo, and image augmentation is applied to every image. The augmentation techniques are random rotation, random brightness adjustment, random translation, random scaling, and random shearing. These transformations were chosen to account for the different orientations of recycled material and to maximize the size of the dataset.
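The augmentation steps listed above could be set up roughly as follows. This is a minimal sketch, not the configuration used in the study: the value ranges, the folder name `garbage_images`, and the helper `brighten` are all illustrative assumptions. To my knowledge `imageDataAugmenter` has no brightness option, so brightness is handled here by a small hand-written function.

```matlab
% Geometric augmentations. All ranges are assumed values for illustration.
augmenter = imageDataAugmenter( ...
    'RandRotation',     [-20 20], ...   % rotation in degrees
    'RandXTranslation', [-30 30], ...   % horizontal shift in pixels
    'RandYTranslation', [-30 30], ...   % vertical shift in pixels
    'RandScale',        [0.8 1.2], ...  % uniform scale factor
    'RandXShear',       [-10 10], ...   % horizontal shear in degrees
    'RandYShear',       [-10 10]);      % vertical shear in degrees

% Random brightness (hypothetical helper): scale intensities by a factor
% drawn from [0.8, 1.2]; the uint8 conversion saturates at 255.
brighten = @(img) uint8(double(img) * (0.8 + 0.4 * rand));

% Apply the augmenter on the fly if the (hypothetical) image folder exists.
dataFolder = 'garbage_images';          % assumed: one subfolder per class
if exist(dataFolder, 'dir')
    imds = imageDatastore(dataFolder, ...
        'IncludeSubfolders', true, 'LabelSource', 'foldernames');
    augImds = augmentedImageDatastore([224 224], imds, ...
        'DataAugmentation', augmenter);
end
```

With `augmentedImageDatastore`, the transformations are drawn afresh for every mini-batch, so the augmented images never need to be written to disk.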

2. Experimental training

Model training and testing are done under the deep learning framework of MATLAB 2019a. Hardware environment: Intel CPU, 32 GB memory; Nvidia RTX 2070 GPU with 8 GB video memory. Software environment: CUDA Toolkit 9.0, cuDNN v7.0; MATLAB Deep Learning Toolbox; 64-bit Windows 10 operating system. Both training and testing are GPU-accelerated. Transfer learning training is governed mainly by three parameters: Epoch, Batch Size, and Learning Rate.

- Epoch: One Epoch is a complete pass of all training data through the network, including the forward calculation and backpropagation. As the number of Epochs increases, so does the number of weight update iterations in the neural network.

- Batch Size: A Batch is the portion of the data sent to the network in each training step, and Batch Size is the number of training image samples in each Batch. The Batch Size should be set carefully to balance memory efficiency against memory capacity and to optimize the performance and speed of the network model.

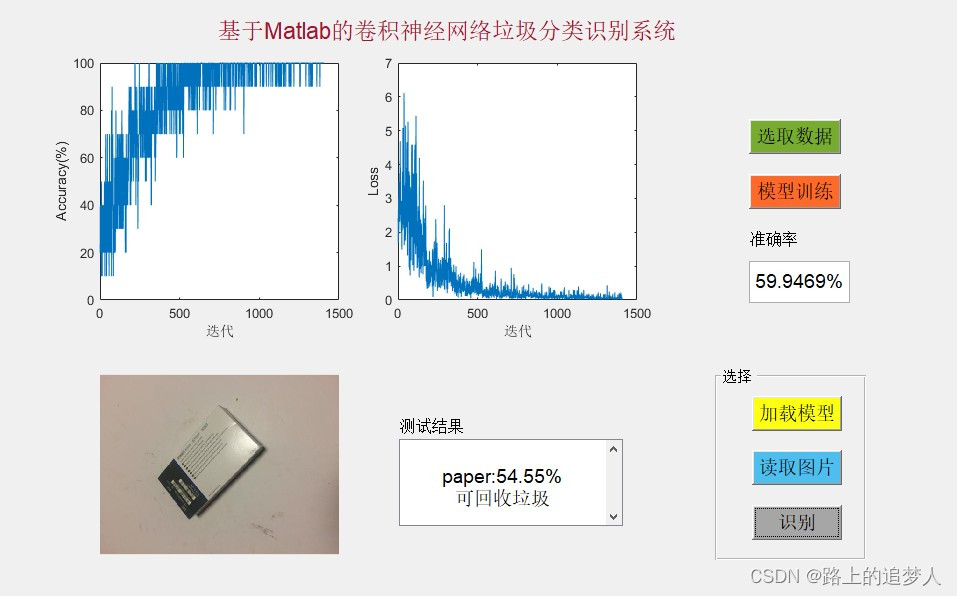

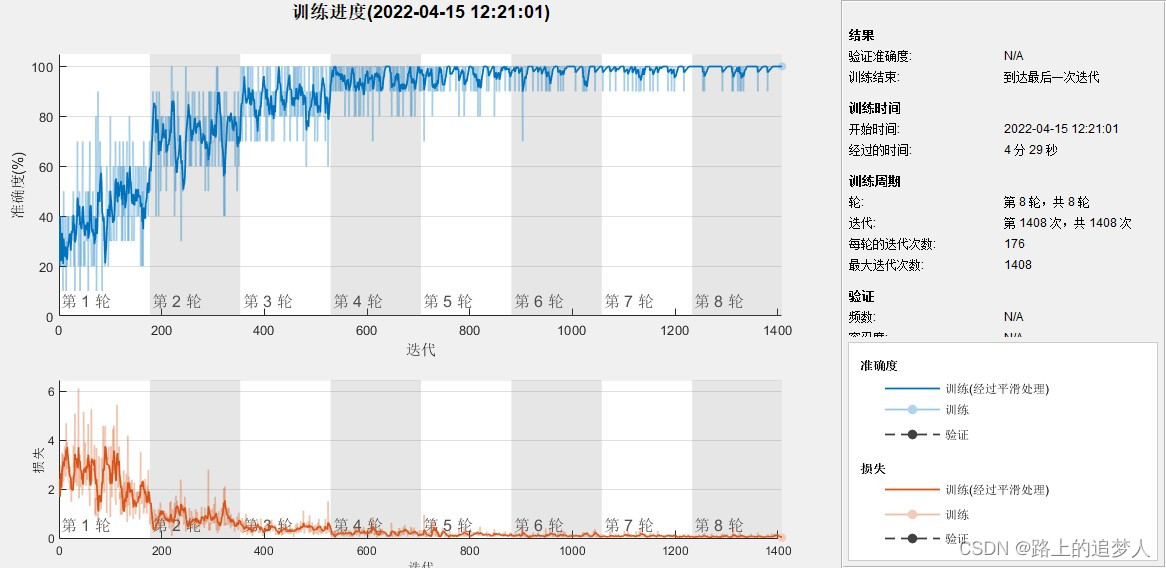

- Learning Rate: An important parameter in deep learning that controls the size of each weight update and therefore strongly affects the recognition accuracy achieved on the training samples. An appropriate Learning Rate lets the recognition accuracy reach an ideal value in a reasonable time.

In each category of the recyclable household garbage dataset, 70% of the images are randomly assigned to the training set and the other 30% to the test set. Each of the three parameters above takes three different values, giving 27 experiments in total. To evaluate each parameter combination comprehensively, accuracy and training time are normalized into an accuracy score and a time score, and the two scores are combined in a weighted average, with weights 0.6 and 0.4 respectively, to give a comprehensive score. According to this score, transfer learning works best when (Epoch, Batch Size, Learning Rate) is (5, 32, 10⁻³) or (10, 32, 10⁻³). To account for possible random error in the training process, the experiment was repeated 4 times for each of these two combinations, giving the experimental data in Table 2. The accuracy of the two resulting transfer models is relatively close, but the (5, 32, 10⁻³) combination trains in much less time than the (10, 32, 10⁻³) combination. Figure 4 and Figure 5 show the training curves for the two combinations. When Batch Size and Learning Rate are set to appropriate values, increasing the number of Epochs can improve accuracy to a certain extent.
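The 27-experiment grid and the comprehensive score can be sketched as below. Several things here are assumptions rather than details from the study: the three candidate values per parameter, the use of min-max normalization, the inversion of the time score so that faster runs score higher, and the random placeholder measurements that stand in for real training results.

```matlab
% Hypothetical candidate values; the text only says each parameter takes three.
epochs     = [5 10 15];
batchSizes = [16 32 64];
learnRates = [1e-2 1e-3 1e-4];

% All 3 x 3 x 3 = 27 combinations, one row per experiment.
[E, B, L] = ndgrid(epochs, batchSizes, learnRates);
combos = [E(:) B(:) L(:)];

% Placeholder measurements standing in for real training runs.
rng(0);
acc  = 0.80 + 0.15 * rand(27, 1);   % test accuracy
secs = 60 + 600 * rand(27, 1);      % training time in seconds

% Min-max normalization (assumed); time is inverted so faster is better.
accScore  = (acc - min(acc)) / (max(acc) - min(acc));
timeScore = (max(secs) - secs) / (max(secs) - min(secs));

% Weighted average with the 0.6 / 0.4 weights given in the text.
score = 0.6 * accScore + 0.4 * timeScore;
[~, best] = max(score);
fprintf('Best: Epoch=%d, BatchSize=%d, LearnRate=%g\n', combos(best, :));
```

Replacing `acc` and `secs` with measured values from the actual runs would give a ranking comparable to the one described above, provided the same normalization is used.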

3. Experimental code

function varargout = main(varargin)

% MAIN MATLAB code for main.fig

% MAIN, by itself, creates a new MAIN or raises the existing

% singleton*.

%

% H = MAIN returns the handle to a new MAIN or the handle to

% the existing singleton*.

%

% MAIN('CALLBACK',hObject,eventData,handles,...) calls the local

% function named CALLBACK in MAIN.M with the given input arguments.

%

% MAIN('Property','Value',...) creates a new MAIN or raises the

% existing singleton*. Starting from the left, property value pairs are

% applied to the GUI before main_OpeningFcn gets called. An

% unrecognized property name or invalid value makes property application

% stop. All inputs are passed to main_OpeningFcn via varargin.

%

% *See GUI Options on GUIDE's Tools menu. Choose "GUI allows only one

% instance to run (singleton)".

%

% See also: GUIDE, GUIDATA, GUIHANDLES

% Edit the above text to modify the response to help main

% Last Modified by GUIDE v2.5 14-Apr-2022 22:27:41

% Begin initialization code - DO NOT EDIT

gui_Singleton = 1;

gui_State = struct('gui_Name', mfilename, ...

'gui_Singleton', gui_Singleton, ...

'gui_OpeningFcn', @main_OpeningFcn, ...

'gui_OutputFcn', @main_OutputFcn, ...

'gui_LayoutFcn', [] , ...

'gui_Callback', []);

if nargin && ischar(varargin{1})

gui_State.gui_Callback = str2func(varargin{1});

end

if nargout

[varargout{1:nargout}] = gui_mainfcn(gui_State, varargin{:});

else

gui_mainfcn(gui_State, varargin{:});

end

% End initialization code - DO NOT EDIT

% --- Executes just before main is made visible.

function main_OpeningFcn(hObject, eventdata, handles, varargin)

% This function has no output args, see OutputFcn.

% hObject handle to figure

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

% varargin command line arguments to main (see VARARGIN)

% Choose default command line output for main

handles.output = hObject;

% Update handles structure

guidata(hObject, handles);

% Reset each axes, draw a box around it, and hide its tick marks

cla(handles.axes1,'reset');

box(handles.axes1,'on');

set(handles.axes1,'xtick',[],'ytick',[]);

cla(handles.axes2,'reset');

box(handles.axes2,'on');

set(handles.axes2,'xtick',[],'ytick',[]);

cla(handles.axes3,'reset');

box(handles.axes3,'on');

set(handles.axes3,'xtick',[],'ytick',[]);

set(handles.edit1,'string','');

set(handles.edit2,'string','');

% UIWAIT makes main wait for user response (see UIRESUME)

% uiwait(handles.figure1);

% --- Outputs from this function are returned to the command line.

function varargout = main_OutputFcn(hObject, eventdata, handles)

% varargout cell array for returning output args (see VARARGOUT);

% hObject handle to figure

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

% Get default command line output from handles structure

varargout{1} = handles.output;

% --- Executes on button press in pushbutton1.

function pushbutton1_Callback(hObject, eventdata, handles)

% hObject handle to pushbutton1 (see GCBO)

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

global filepath

filepath = uigetdir(pwd,'Please select a folder'); % filepath is the selected folder path (0 if the dialog is cancelled)

% --- Executes on button press in pushbutton2.

function pushbutton2_Callback(hObject, eventdata, handles)

% hObject handle to pushbutton2 (see GCBO)

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

global filepath

if isempty(filepath)||isequal(filepath,0)||~exist(filepath,'dir')

warndlg('The folder does not exist. Please select it again.','warning');

return;

end

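%% Dataset %%

% Each file is labeled with the name of the folder that contains it

datas=imageDatastore(filepath,'IncludeSubfolders',true,'LabelSource','foldernames');

% datas.Labels: labels

% datas.Files: path + file name

% the number of outputs must equal the number of classes

softmaxLayer % classification layers

classificationLayer];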

%% Training options %%

% Option configuration

% Add the following only when a validation set is used

%'ValidationData',valImg,...

%'ValidationFrequency',6,...

options=trainingOptions('sgdm',...

'MiniBatchSize',10,... % 10

'MaxEpochs',8,...

'Shuffle','every-epoch',...

'InitialLearnRate',1e-4,...

'Verbose',true,... % print training metrics in the Command Window

'Plots','training-progress');

% ,TrainingAccuracy,TrainingLoss

[net_cnn,info]=trainNetwork(trainImg,layers,options);

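% Plot the training accuracy and loss curves in the GUI axes

plot(handles.axes1,info.TrainingAccuracy);

xlabel(handles.axes1,'Iteration');

ylabel(handles.axes1,'Accuracy(%)');

plot(handles.axes2,info.TrainingLoss);

xlabel(handles.axes2,'Iteration');

ylabel(handles.axes2,'Loss');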

%% Save the model %%

% File name, followed by the trained network variable to save

save net_cnn net_cnn;

res=num2str(accuracy*100);

res=strcat(res,'%');

set(handles.edit1, 'String',res);

% --- Executes on button press in pushbutton3.

function pushbutton3_Callback(hObject, eventdata, handles)

% hObject handle to pushbutton3 (see GCBO)

% eventdata reserved - to be defined in a future version of MATLAB

% handles structure with handles and user data (see GUIDATA)

global str;

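[filename,pathname]=uigetfile({'*.mat'});

% uigetfile returns 0 when the dialog is cancelled, so check before building the path

if isequal(filename,0)||isequal(pathname,0)

warndlg('The model does not exist. Please try again.','warning');

return;

end

str=fullfile(pathname,filename);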
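The training callback in the listing uses `trainImg`, `layers`, and `accuracy` without showing where they come from. The sketch below shows one way they might be produced for the ResNet18 transfer learning described in Section 2. It is an assumption-laden illustration, not the author's code: the function name `trainGarbageResNet18`, the new layer names, and the variable names are hypothetical; the names `fc1000` and `ClassificationLayer_predictions` are the ones I believe MATLAB's `resnet18` uses for its final layers; and the options follow the (5, 32, 10⁻³) combination reported in the text.

```matlab
function [netTransfer, accuracy] = trainGarbageResNet18(dataFolder)
% dataFolder: folder with one subfolder of images per class (assumed layout).

datas = imageDatastore(dataFolder, ...
    'IncludeSubfolders', true, 'LabelSource', 'foldernames');

% Random 70/30 split within each class, as described in the text.
[trainImg, testImg] = splitEachLabel(datas, 0.7, 'randomized');
numClasses = numel(categories(trainImg.Labels));

% ImageNet-pretrained ResNet-18 (needs the ResNet-18 support package).
net = resnet18;
lgraph = layerGraph(net);

% Swap the 1000-class head for one sized to the garbage classes.
lgraph = replaceLayer(lgraph, 'fc1000', ...
    fullyConnectedLayer(numClasses, 'Name', 'fc_garbage'));
lgraph = replaceLayer(lgraph, 'ClassificationLayer_predictions', ...
    classificationLayer('Name', 'garbage_output'));

% Resize images on the fly to the network input size.
inputSize = net.Layers(1).InputSize;
augTrain = augmentedImageDatastore(inputSize(1:2), trainImg);
augTest  = augmentedImageDatastore(inputSize(1:2), testImg);

options = trainingOptions('sgdm', ...
    'MiniBatchSize', 32, ...
    'MaxEpochs', 5, ...
    'InitialLearnRate', 1e-3, ...
    'Shuffle', 'every-epoch', ...
    'Verbose', true);

netTransfer = trainNetwork(augTrain, lgraph, options);

% Fraction of test images whose predicted label matches the true label.
predicted = classify(netTransfer, augTest);
accuracy = mean(predicted == testImg.Labels);
end
```

Called as `[net, acc] = trainGarbageResNet18(filepath)`, the returned `acc` is a fraction between 0 and 1, which matches how the listing multiplies `accuracy` by 100 before displaying it.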

References

[1] Ning Kai, Zhang Dongbo. Garbage detection and classification of intelligent sweeping robot based on visual perception [J].

[2] Liu Yaxuan, Pan Wanbin. Long-term garbage classification method based on self-training[J].

In the future, the recyclable garbage image dataset should be further enriched, and a classification and recognition model for mixed garbage images should be established to further improve the recognition accuracy of the recyclable garbage image model.