HDFS

1. Basic Concepts

HDFS (Hadoop Distributed File System) is the distributed file system of the Hadoop ecosystem. It stores files as blocks spread across the machines of a cluster.

2. Features

- High fault tolerance: Each block is stored as multiple replicas, so losing some replicas does not make the data unavailable.

- High throughput: HDFS is designed for high-throughput access rather than low-latency access.

- Large file support: HDFS is best suited to large files, typically gigabytes to terabytes in size.

- Simple consistency model: A write-once-read-many access model. Data is written sequentially, and existing data cannot be modified in place (random writes are not supported).

- High reliability: HDFS is designed to run on clusters of inexpensive commodity servers and to tolerate their failures.
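The write-once-read-many model from the list above can be illustrated with a toy in-memory class. This is only a sketch of the access pattern, not the HDFS client API: `WriteOnceFile` and its method names are hypothetical, and the sketch leaves out the fact that real HDFS can reopen a closed file for append.

```python
class WriteOnceFile:
    """Toy model of a write-once-read-many file (hypothetical, not an HDFS API)."""

    def __init__(self) -> None:
        self._data = bytearray()
        self._closed = False

    def append(self, chunk: bytes) -> None:
        # Writing is only possible at the end of the file, and only before close().
        if self._closed:
            raise PermissionError("file is closed for writing")
        self._data.extend(chunk)

    def close(self) -> None:
        # After close() the content is treated as immutable in this sketch.
        self._closed = True

    def write_at(self, offset: int, chunk: bytes) -> None:
        # In-place modification is always rejected.
        raise PermissionError("random writes are not supported")

    def read(self, offset: int = 0, length: int = -1) -> bytes:
        # Reads can be repeated any number of times, from any offset.
        end = len(self._data) if length < 0 else offset + length
        return bytes(self._data[offset:end])


f = WriteOnceFile()
f.append(b"abc")
f.append(b"def")
f.close()
print(f.read())      # whole file
print(f.read(2, 3))  # three bytes starting at offset 2
```

The design point is that a writer only ever extends the file, so readers never have to coordinate with in-place updates.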

3. Design Principles

HDFS Architecture:

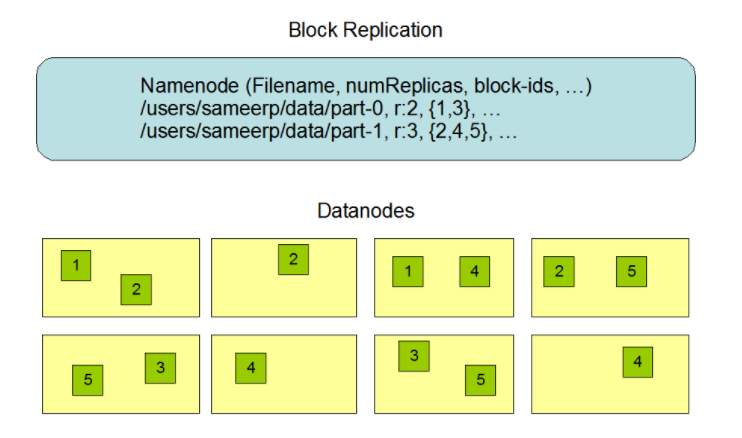

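NameNode: the master role. It manages the file system namespace, the mapping of files to data blocks, the replication policy, and so on. There is a single active NameNode per namespace.

DataNode: the slave role. It stores the actual data blocks (not the metadata, which lives on the NameNode) and serves read and write requests. There can be many DataNodes.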

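For higher availability, a Standby NameNode can be configured as a hot standby. The Secondary NameNode, despite its name, is not a hot standby: it periodically merges the EditLog into the FsImage (checkpointing).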
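The division of labor between the two roles can be sketched with a small in-memory model. This is an illustration under simplifying assumptions, not Hadoop code: the classes, the `read_file` helper, and the block IDs are all hypothetical. The NameNode object holds only metadata (which blocks make up a file, and where they live), while the DataNode objects hold the bytes.

```python
from dataclasses import dataclass, field


@dataclass
class DataNode:
    """Holds block payloads only; knows nothing about file names."""
    name: str
    blocks: dict = field(default_factory=dict)  # block_id -> bytes

    def store(self, block_id: str, payload: bytes) -> None:
        self.blocks[block_id] = payload

    def fetch(self, block_id: str) -> bytes:
        return self.blocks[block_id]


@dataclass
class NameNode:
    """Holds metadata only: the namespace and the block locations."""
    namespace: dict = field(default_factory=dict)  # path -> [block_id, ...]
    locations: dict = field(default_factory=dict)  # block_id -> [datanode name, ...]

    def add_block(self, path: str, block_id: str, holders: list) -> None:
        self.namespace.setdefault(path, []).append(block_id)
        self.locations[block_id] = list(holders)

    def lookup(self, path: str) -> list:
        # Returns the ordered blocks of a file together with their holders.
        return [(b, self.locations[b]) for b in self.namespace[path]]


def read_file(namenode: NameNode, datanodes: dict, path: str) -> bytes:
    # Step 1: ask the NameNode for metadata. Step 2: fetch bytes from DataNodes.
    out = b""
    for block_id, holders in namenode.lookup(path):
        out += datanodes[holders[0]].fetch(block_id)
    return out


datanodes = {"dn1": DataNode("dn1"), "dn2": DataNode("dn2")}
namenode = NameNode()
datanodes["dn1"].store("blk_1", b"hello ")
datanodes["dn2"].store("blk_2", b"world")
namenode.add_block("/demo.txt", "blk_1", ["dn1"])
namenode.add_block("/demo.txt", "blk_2", ["dn2"])
print(read_file(namenode, datanodes, "/demo.txt"))
```

Note that file contents never pass through the `NameNode` object in this sketch; it only answers the question of where the blocks are.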

The high availability of data in HDFS rests on its replication mechanism: each data block has multiple replicas, which are stored on different DataNodes and spread across different racks. By default, the block size is 128 MB and the replication factor is 3. Reads follow the principle of proximity: a client preferentially reads from the nearest replica, preferring the nearest DataNode, then the nearest rack, then the nearest data center.
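The arithmetic behind the defaults and the proximity rule can be sketched as follows. The block size and replication factor come from the paragraph above; everything else is an assumption made for illustration: the `/datacenter/rack/node` path format, the helper names, and the distance measure (hops from each node up to their closest common ancestor), which is a simplified stand-in for the network-topology logic in Hadoop.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # default block size: 128 MB
REPLICATION = 3                 # default replication factor


def block_count(file_size: int) -> int:
    # Ceiling division: the last block may be only partially filled.
    return -(-file_size // BLOCK_SIZE)


def raw_storage(file_size: int) -> int:
    # Every byte is stored once per replica; a partial last block
    # only occupies its actual size.
    return file_size * REPLICATION


def distance(a: str, b: str) -> int:
    # Hops from each node up to the closest common ancestor in the tree.
    pa, pb = a.strip("/").split("/"), b.strip("/").split("/")
    common = 0
    for x, y in zip(pa, pb):
        if x != y:
            break
        common += 1
    return (len(pa) - common) + (len(pb) - common)


def nearest_replica(reader: str, replicas: list) -> str:
    # Pick the replica with the smallest distance to the reader.
    return min(replicas, key=lambda r: distance(reader, r))


one_gib = 1024 * 1024 * 1024
print(block_count(one_gib))             # 8 blocks of 128 MB
print(raw_storage(one_gib) // one_gib)  # 3 GiB of raw storage

reader = "/d1/r1/n1"
replicas = ["/d2/r3/n4", "/d1/r2/n3", "/d1/r1/n2"]
print(nearest_replica(reader, replicas))  # /d1/r1/n2 (same rack)
```

With this measure, the same node has distance 0, a node on the same rack 2, a node on another rack in the same data center 4, and a node in another data center 6.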

The high reliability of HDFS rests on:

- Heartbeat mechanism: Each DataNode periodically (every 3 s by default) sends a heartbeat message to the NameNode. If no heartbeat arrives within the timeout period, the NameNode considers the DataNode dead and stops routing requests to it. The NameNode then re-replicates the blocks that node held onto other DataNodes to restore the replication factor.

- Data verification: Because a file is split into blocks and reassembled on read, data integrity must be verified. A checksum is computed when the data is written and recomputed when it is read; if the two values match, the data is intact.

- Disk failure: Data must be recoverable after a disk failure. The FsImage (a checkpoint of the namespace) and the EditLog (the changes recorded since that checkpoint) allow the NameNode's metadata to be recovered.

- Snapshot support
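The heartbeat and data-verification mechanisms in the list above can be sketched in a few lines. This is a simplified model, not NameNode or DataNode code. The assumptions: the dead-node timeout uses the commonly documented default formula (twice a 5-minute recheck interval plus ten heartbeat intervals), the 512-byte chunk size is taken as the per-checksum unit, and `zlib.crc32` stands in for whatever checksum algorithm a real cluster is configured with. All function names are hypothetical.

```python
import zlib

HEARTBEAT_INTERVAL_S = 3   # from the text: one heartbeat every 3 s
RECHECK_INTERVAL_S = 300   # assumed default recheck interval (5 min)
# Assumed default timeout formula: 2 * recheck + 10 * heartbeat = 630 s.
DEAD_TIMEOUT_S = 2 * RECHECK_INTERVAL_S + 10 * HEARTBEAT_INTERVAL_S

BYTES_PER_CHECKSUM = 512   # assumed chunk size covered by one checksum


def dead_nodes(last_heartbeat: dict, now: float) -> list:
    # A DataNode is considered dead once its last heartbeat is older
    # than the timeout.
    return sorted(n for n, t in last_heartbeat.items() if now - t > DEAD_TIMEOUT_S)


def checksums(data: bytes) -> list:
    # One CRC per fixed-size chunk, computed when the data is written.
    return [zlib.crc32(data[i:i + BYTES_PER_CHECKSUM])
            for i in range(0, len(data), BYTES_PER_CHECKSUM)]


def corrupt_chunks(data: bytes, expected: list) -> list:
    # Recompute on read and report the chunk indices that do not match.
    actual = checksums(data)
    n = max(len(actual), len(expected))
    return [i for i in range(n)
            if i >= len(actual) or i >= len(expected) or actual[i] != expected[i]]


print(DEAD_TIMEOUT_S)                                # 630
print(dead_nodes({"dn1": 0, "dn2": 600}, now=700))   # ['dn1']

original = bytes(range(256)) * 4                     # 1024 bytes, two chunks
stored = checksums(original)
damaged = bytearray(original)
damaged[600] ^= 0xFF                                 # flip bits in the second chunk
print(corrupt_chunks(original, stored))              # []
print(corrupt_chunks(bytes(damaged), stored))        # [1]
```

Checksumming per chunk rather than per file means that a single damaged chunk can be pinpointed, so the reader can fall back to another replica of that block.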