Author: Lao Xia Source: Hang Seng LIGHT Cloud Community

01 Understanding message queues in one article

Overview

What is a message queue? As the name MQ (Message Queue) suggests, it has two parts: a message is the information exchanged between systems, and a queue is a first-in, first-out data structure. Java provides many queue implementations.

Interested readers can take a look at the source code.

Why do you need a message queue?

There are many examples on the Internet, so I won't invent a story of my own. I'll simply borrow the example given by Mr. Li Key, a technical lead:

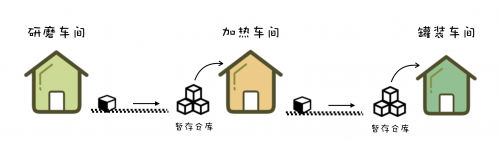

Xiaoyuan is the owner of a chocolate workshop. Producing delicious chocolate takes three steps: first, the cocoa beans are ground into cocoa powder; then the cocoa powder is heated and sugar is added to make chocolate syrup; finally, the chocolate syrup is poured into a mold and sprinkled with chopped nuts, and once it cools it is finished chocolate.

In the beginning, each time a barrel of cocoa powder was ground, the worker would carry the barrel to the workers who made the chocolate syrup and then come back to grind the next barrel. Xiaoyuan soon realized that the workers did not need to transport the semi-finished products themselves, so he added a conveyor belt between each pair of steps. A worker now only has to place the finished barrel on the belt before starting on the next barrel of cocoa powder. The conveyor belts solve the problem of "communication" between upstream and downstream steps.

Putting the conveyor belts into service did improve production efficiency, but it also brought a new problem: the steps do not all run at the same speed. In the chocolate syrup workshop, when a barrel of cocoa powder arrives, the workers may still be processing the previous batch and have no time to receive it. Workers in different steps must coordinate when to place semi-finished products on the belt. If the upstream and downstream processing speeds differ, the upstream and downstream workers have to wait for each other so that nothing arrives on the belt with no one there to receive it.

To solve this problem, Xiaoyuan added a warehouse at the downstream end of each conveyor belt for temporarily storing semi-finished products. Upstream workers no longer have to wait for downstream workers to be free and can put finished semi-finished products on the belt at any time. Goods that cannot be received right away are held in the warehouse, and downstream workers can come and pick them up whenever they are ready. The warehouse attached to the conveyor belt acts as a "cache" in the "communication" process.

The conveyor belt solves the transportation problem of semi-finished products, and the warehouse can temporarily store some semi-finished products, which solves the problem of inconsistent production speed between upstream and downstream.
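The story can be sketched in a few lines of Python. This is only an illustration, assuming an in-process `queue.Queue` stands in for the conveyor belt plus warehouse; the function name `run_workshop`, the barrel labels, and the sleep time are all made up for the example.

```python
import queue
import threading
import time

def run_workshop(num_barrels=5, warehouse_size=3):
    """Simulate a fast upstream worker and a slower downstream worker."""
    # The bounded queue plays the role of the conveyor belt plus warehouse.
    belt = queue.Queue(maxsize=warehouse_size)
    received = []

    def grinder():
        for i in range(num_barrels):
            # put() blocks only when the warehouse is already full.
            belt.put(f"cocoa-powder-{i}")
        belt.put(None)  # sentinel: no more barrels today

    def syrup_maker():
        while True:
            barrel = belt.get()
            if barrel is None:
                break
            time.sleep(0.01)  # downstream is slower than upstream
            received.append(barrel)

    workers = [threading.Thread(target=grinder),
               threading.Thread(target=syrup_maker)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return received

barrels = run_workshop()
```

The upstream worker never waits for the downstream worker unless the warehouse is full, and the barrels still come out in first-in, first-out order.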

What problems can message queues solve?

1 Decoupling

One of the functions of a message queue is to decouple applications from one another. Here is an example that illustrates the role and necessity of decoupling.

Let's take a certain e-commerce platform as an example. When I place an order to buy a product:

a. the payment system initiates the payment process;

b. Alipay risk control audits the legitimacy of the order;

c. an SMS notification of the Alipay payment is sent;

d. the platform updates its statistics;

e. and so on.

If the order service calls each of these systems directly through its interface, then as the business develops the downstream systems keep growing and changing, and each of them may need only a subset of the order data. The team responsible for the order service has to spend a great deal of energy keeping up, constantly modifying and jointly debugging the interfaces between the order system and these downstream systems. Any change to a downstream interface requires the order module to be released again, which is almost unacceptable for a core e-commerce service.

After a message queue is introduced, the order service publishes a message to a topic named Order whenever an order changes, and every downstream system subscribes to the Order topic, so each one receives a complete, real-time copy of the order data.

No matter how downstream systems are added or removed, or how their requirements change, the order service does not need to change. This decouples the order service from the downstream services.
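The publish/subscribe idea above can be sketched with a tiny in-memory broker. This is a simplified illustration, not a real message queue: delivery here is synchronous and nothing is persisted, and the class `TopicBroker`, the handlers, and the order fields are all assumptions made up for the example.

```python
from collections import defaultdict

class TopicBroker:
    """A toy in-process broker: one list of subscriber callbacks per topic."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        # The publisher does not know who is listening or how many there are.
        for handler in list(self._subscribers[topic]):
            handler(message)

broker = TopicBroker()
payment_log, risk_log, stats = [], [], {"orders": 0}

def update_stats(order):
    stats["orders"] += 1

# Each downstream system subscribes on its own and takes only what it needs.
broker.subscribe("Order", lambda order: payment_log.append(order["id"]))
broker.subscribe("Order", lambda order: risk_log.append((order["id"], order["amount"])))
broker.subscribe("Order", update_stats)

# The order service only publishes; it never calls a downstream interface.
broker.publish("Order", {"id": 1001, "amount": 25.0, "status": "created"})
```

Adding a fourth downstream system means one more `subscribe` call on the downstream side; the publishing code stays untouched.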

2 Asynchronous processing

We will stay with the e-commerce system and design a seckill (flash sale) system as the example. The core problem of a seckill system is how to use limited server resources to process a massive number of requests as quickly as possible. After all, it is a "seckill", so it must be fast! Assume the seckill consists of the following steps (a real one would have more):

1. Risk control;

2. Lock inventory;

3. Generate an order;

4. Send SMS;

5. Update statistics;

6. ...

If everything is handled through synchronous interface calls, all five steps above have to be called one after another before the seckill result can be returned.

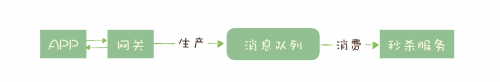

Let's try to optimize the seckill flow. Once a request has passed risk control and the inventory has been locked, we can already tell the user that the seckill succeeded. The remaining steps do not have to be completed while the current seckill request is being handled. We can therefore hand them to a message queue for asynchronous processing, which returns the result sooner, shortens the user's wait, and improves the overall performance of the system.
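A minimal sketch of this split, assuming an in-process `queue.Queue` stands in for the message queue and a background thread stands in for the consumer. The function names, the risk-control rule, and the inventory data are hypothetical placeholders.

```python
import queue
import threading

follow_up_queue = queue.Queue()   # stands in for the message queue
completed_steps = []              # records what the background consumer did
inventory = {"sku-1": 1}          # hypothetical stock: one item left
inventory_lock = threading.Lock()

def passes_risk_control(request):
    # Placeholder rule, purely illustrative.
    return request["user_id"] > 0

def lock_inventory(sku):
    with inventory_lock:
        if inventory.get(sku, 0) > 0:
            inventory[sku] -= 1
            return True
        return False

def handle_seckill(request):
    """Synchronous part: only the steps the user must wait for."""
    if not passes_risk_control(request):
        return "rejected"
    if not lock_inventory(request["sku"]):
        return "sold out"
    follow_up_queue.put(request)  # the remaining steps happen later
    return "success"

def follow_up_consumer():
    """Asynchronous part: order generation, SMS, statistics."""
    while True:
        request = follow_up_queue.get()
        for step in ("generate_order", "send_sms", "update_statistics"):
            completed_steps.append((step, request["user_id"]))
        follow_up_queue.task_done()

threading.Thread(target=follow_up_consumer, daemon=True).start()

first = handle_seckill({"user_id": 7, "sku": "sku-1"})   # stock available
second = handle_seckill({"user_id": 8, "sku": "sku-1"})  # stock exhausted
follow_up_queue.join()  # only so the example can inspect the results
```

`handle_seckill` returns as soon as the request is queued; the final `join()` exists only so the example can check what the consumer did.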

3 Flow Control

Continuing with the seckill system above: we have implemented asynchronous processing, but how do we keep a flood of requests from overwhelming the system?

A robust system needs to be able to protect itself. Even under a flood of requests, it should keep processing as many as its capacity allows, reject the ones it cannot handle, and keep the service running normally. Rejecting requests outright is not very friendly, though, so we need to redesign the architecture to be robust and protect the normal operation of the back end.

The general idea: use message queues to isolate gateways and back-end services to achieve flow control and protect back-end services.

With a message queue added, the seckill request flow becomes:

1. After receiving the request, the gateway puts the request into the request message queue;

2. The back-end service takes the APP request from the request message queue, completes the rest of the seckill processing, and returns the result.

As shown in the figure, when a large number of requests arrive at the gateway, the gateway does not forward them directly to the seckill system. Instead, the requests pile up in the message queue, and the back-end service consumes them at whatever rate it can sustain. Requests that have already timed out can simply be discarded, and the APP can treat any request that gets no response within the timeout as a seckill failure.
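The queue-based flow above can be sketched as follows. This is a simplified model under stated assumptions: the class `RequestQueue` is hypothetical, time is passed in explicitly as `now` so the behaviour is easy to follow, and `capacity` stands for however many requests the back end can handle in one round.

```python
from collections import deque

class RequestQueue:
    """Buffers gateway requests so the back end can consume at its own pace."""

    def __init__(self, timeout_seconds):
        self.timeout_seconds = timeout_seconds
        self._pending = deque()

    def enqueue(self, request_id, now):
        # Gateway side: accept the request and remember when it arrived.
        self._pending.append((request_id, now))

    def drain(self, now, capacity):
        """Back-end side: process up to `capacity` requests, dropping stale ones."""
        processed, discarded = [], []
        while self._pending and len(processed) < capacity:
            request_id, enqueued_at = self._pending.popleft()
            if now - enqueued_at > self.timeout_seconds:
                discarded.append(request_id)  # the APP has already given up
            else:
                processed.append(request_id)
        return processed, discarded

requests = RequestQueue(timeout_seconds=2.0)
for request_id in range(5):
    requests.enqueue(request_id, now=0.0)      # a burst of five requests

round_one = requests.drain(now=1.0, capacity=2)
requests.enqueue(5, now=2.5)                   # a later request
round_two = requests.drain(now=3.0, capacity=2)
```

In the first round the back end handles only two requests; by the second round the rest of the burst has timed out and is dropped, while the newer request is still served.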

Although this design achieves "peak shaving and valley filling", it also lengthens the call chain, which increases both the response time and the complexity of the system. Is there a better solution? Answer: the token bucket.

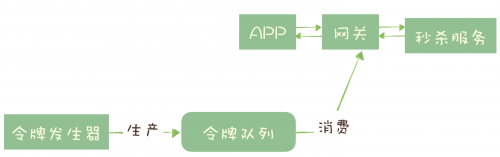

The principle behind token-bucket flow control is this: only a fixed number of tokens are put into the token bucket per unit of time, and the service must take a token out of the bucket before it processes a request. If there is no token in the bucket, the request is rejected. This ensures that the number of requests processed per unit of time never exceeds the number of tokens issued, which is what controls the flow.

The implementation is also very simple and does not disturb the original call chain: the gateway only needs an extra step that acquires a token when it handles an APP request. The token bucket itself can be built from a fixed-capacity message queue plus a "token generator". The token generator produces tokens at a constant rate, based on the estimated processing capacity, and puts them into the token queue (if the queue is full, the token is discarded). When the gateway receives a request it tries to consume a token from the token queue; if it gets one, it goes on to call the back-end seckill service, and if it does not, it returns a seckill failure immediately.

These are two commonly used designs for flow control with message queues. Choose between them according to their respective advantages, disadvantages, and applicable scenarios.
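The token bucket described above might be sketched like this, assuming a bounded `queue.Queue` as the fixed-capacity token queue. The names `TokenBucket`, `start_token_generator`, and `gateway_handle` are made up for the example, and the back end is just a placeholder function.

```python
import queue
import threading

class TokenBucket:
    """A fixed-capacity queue of tokens."""

    def __init__(self, capacity):
        self._tokens = queue.Queue(maxsize=capacity)

    def add_token(self):
        try:
            self._tokens.put_nowait(object())
            return True
        except queue.Full:
            return False  # bucket is full: the new token is discarded

    def try_acquire(self):
        try:
            self._tokens.get_nowait()
            return True
        except queue.Empty:
            return False  # no token available right now

def start_token_generator(bucket, tokens_per_second, stop_event):
    """Adds one token every 1/tokens_per_second seconds until stop_event is set."""
    interval = 1.0 / tokens_per_second

    def run():
        # Event.wait returns False on timeout, so the loop ticks once per interval.
        while not stop_event.wait(interval):
            bucket.add_token()

    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread

def gateway_handle(bucket, request, backend):
    """Gateway logic: call the back end only if a token was obtained."""
    if not bucket.try_acquire():
        return "seckill failed"
    return backend(request)

# Deterministic walk-through: fill the bucket by hand instead of by timer.
bucket = TokenBucket(capacity=2)
added = [bucket.add_token() for _ in range(3)]
results = [gateway_handle(bucket, n, lambda request: "ok") for n in range(3)]
```

In a running gateway `start_token_generator` would refill the bucket in the background; the walk-through adds tokens by hand so that the outcome does not depend on timing.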

02 How to choose a message queue

1 The veteran message queue: RabbitMQ

RabbitMQ, commonly called "Rabbit MQ", has the characteristics its name suggests: like a rabbit, it is lightweight and fast. It is written in Erlang, a language originally designed for reliable communication between systems in the telecommunications industry, and it supports the AMQP, XMPP, SMTP, and STOMP protocols. It is billed as the most widely used message queue in the world. Whether its market share is really number one is impossible to verify, but it is definitely "one of the most popular message middleware products". RabbitMQ supports very flexible routing configuration. Unlike other message queues, it places an Exchange module between the producer and the queue.

The Exchange module distributes the messages sent by producers to the appropriate queues according to the configured routing rules. The routing rules are very flexible, and you can even implement your own. Many usage patterns can be built on top of the Exchange. If this is exactly the feature you need, RabbitMQ is a good choice.
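To make the routing idea concrete, here is a toy in-memory model of an exchange that routes on an exact routing-key match, similar in spirit to what RabbitMQ calls a direct exchange. It is not the RabbitMQ client API; the class `DirectExchange`, the queue names, and the routing keys are assumptions made up for the example.

```python
from collections import defaultdict, deque

class DirectExchange:
    """Routes each message to every queue bound with a matching routing key."""

    def __init__(self):
        self._bindings = defaultdict(list)

    def bind(self, target_queue, routing_key):
        self._bindings[routing_key].append(target_queue)

    def publish(self, routing_key, message):
        targets = self._bindings.get(routing_key, [])
        for target_queue in targets:
            target_queue.append(message)
        return len(targets)  # number of queues that received the message

sms_queue, stats_queue = deque(), deque()

exchange = DirectExchange()
exchange.bind(sms_queue, "order.paid")
exchange.bind(stats_queue, "order.paid")
exchange.bind(stats_queue, "order.created")

# The producer talks only to the exchange, never to a queue directly.
delivered = [
    exchange.publish("order.created", "order-1"),
    exchange.publish("order.paid", "order-2"),
    exchange.publish("order.cancelled", "order-3"),  # no binding: goes nowhere
]
```

Changing which queues receive which messages is a matter of changing bindings; the producer code does not change.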

RabbitMQ probably has client support for more programming languages than any other message queue, so if you are developing in a niche language, RabbitMQ is probably your best choice, because you have no other choice!

Several problems with RabbitMQ

1) In RabbitMQ's design philosophy, a message queue is a pipeline, so a backlog of messages is an abnormal situation that should be avoided. When a large number of messages pile up, RabbitMQ's performance drops sharply.

2) RabbitMQ probably has the lowest performance of the queues introduced here. According to official test data combined with our day-to-day experience, and depending on the hardware configuration, it can process roughly tens of thousands to over a hundred thousand messages per second. Unless you have extremely high performance requirements for your message queue, RabbitMQ's performance is sufficient for most scenarios.

3) RabbitMQ is written in Erlang. I have not come across anyone around me who uses it, so the language is rather niche. What is more troublesome is that its learning curve is said to be very steep, so it is not easy to learn. Most popular programming languages, such as Java, C/C++, Python, and JavaScript, differ a great deal in syntax and features but share the same basic structure. Once you are proficient in one of them, the others are easy to pick up; even if you cannot master one in a short time, you can at least reach the level of "competent use". Erlang does not fit this pattern. So if you want to extend RabbitMQ or do secondary development on it, consider the question of long-term maintenance carefully.

2 The domestic rocket: RocketMQ

RocketMQ is a message queue product that Alibaba open-sourced in 2012 and later donated to the Apache Software Foundation. It officially graduated in 2017 and became an Apache top-level project. Alibaba itself uses RocketMQ as the message queue behind its business. It has been through several "Double Eleven" shopping festivals, so its performance, stability, and reliability can be trusted. As an excellent domestic message queue, it has been adopted by more and more Chinese companies in recent years.

RocketMQ is like a good student with excellent academic performance. It has good performance, stability and reliability. It has almost all the functions and features that a modern message queue should have, and it is still growing.

RocketMQ has a very active Chinese community, so most questions you run into can be answered in Chinese, and RocketMQ is developed in Java! Most of its contributors are Chinese, so the source code is relatively easy to read, which also makes extension and secondary development relatively easy.

RocketMQ's performance is an order of magnitude higher than RabbitMQ's, at roughly hundreds of thousands of messages per second. One disadvantage is that, as a domestic product, it is not very popular internationally, and it is less well integrated and less compatible with the surrounding ecosystem.

3 Kafka

To introduce Kafka, let me first quote Baidu Encyclopedia:

Kafka is an open source stream processing platform developed by the Apache Software Foundation and written in Scala and Java. Kafka is a high-throughput distributed publish-subscribe messaging system that can handle all the activity stream data of users on a website. Such activity (web browsing, searching, and other user actions) is a key ingredient of many social features on the modern web. Because of throughput requirements, this data is usually handled through log processing and log aggregation. That is a viable solution for log data and offline analysis systems like Hadoop, but it is limiting when real-time processing is required. The purpose of Kafka is to unify online and offline message processing through Hadoop's parallel loading mechanism, and to provide real-time messages through clusters.

Because Kafka was originally designed to process massive volumes of logs, it sacrificed other things to achieve the ultimate performance: it did not guarantee message reliability, could lose messages, did not support clustering, and had rudimentary features. At that time Kafka could not be called a qualified message queue. As Kafka developed, however, these design shortcomings were all fixed, and it now meets the needs of most scenarios in terms of data reliability, stability, and functionality.

Kafka's compatibility with the surrounding ecosystem is among the best. In big data and stream computing especially, almost all related open source software gives priority to supporting Kafka.

Kafka is developed in Scala and Java, and its design makes heavy use of batching and asynchronous processing, which lets Kafka achieve very high performance. Its performance, especially for asynchronous sending and receiving, is the best of the three, but it is in the same order of magnitude as RocketMQ, at roughly hundreds of thousands of messages per second.

Because of this asynchronous, batch-oriented design, Kafka's response latency when sending and receiving messages is relatively high: Kafka does not send a message immediately, but waits a while to accumulate a batch and then sends it. Kafka is therefore not well suited to business scenarios with strict real-time requirements.
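The trade-off between batching and latency can be illustrated with a toy sender. This is not Kafka's client code; the class `BatchingSender` and its parameters `batch_size` and `linger_seconds` are hypothetical, and time is passed in explicitly as `now` so the behaviour is easy to trace.

```python
class BatchingSender:
    """Buffers messages and sends them in batches instead of one by one."""

    def __init__(self, batch_size, linger_seconds, transport):
        self.batch_size = batch_size
        self.linger_seconds = linger_seconds
        self.transport = transport          # callable that receives a list
        self._buffer = []
        self._first_buffered_at = None

    def send(self, message, now):
        if not self._buffer:
            self._first_buffered_at = now   # the wait starts with the first message
        self._buffer.append(message)
        self.poll(now)

    def poll(self, now):
        """Flush when the batch is full or the oldest message has waited long enough."""
        if not self._buffer:
            return
        full = len(self._buffer) >= self.batch_size
        waited = now - self._first_buffered_at >= self.linger_seconds
        if full or waited:
            self.transport(list(self._buffer))
            self._buffer.clear()

sent_batches = []
sender = BatchingSender(batch_size=3, linger_seconds=0.5, transport=sent_batches.append)

sender.send("a", now=0.0)
sender.send("b", now=0.1)
sender.send("c", now=0.2)   # batch is full: a, b, c go out together
sender.send("d", now=0.3)   # buffered, nothing sent yet
pending_after_d = len(sent_batches)
sender.poll(now=0.9)        # "d" has waited 0.6 s, so it is flushed alone
```

One network call now carries several messages, which is good for throughput, but message "d" sat in the buffer for 0.6 seconds before leaving, which is the latency cost the text describes.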

Summary

If your business scenario does not demand much from the message queue in features or performance and you just need one that works out of the box and is easy to maintain, then RabbitMQ is the recommended choice (it has to be).

If your business scenario requires low latency and financial-grade stability, RocketMQ is recommended.

Kafka is especially suitable for processing massive volumes of logs and monitoring data, and for big data and stream computing products.