1. Sqoop installation

(1) Download the CDH build of Sqoop (this guide uses sqoop-1.4.6-cdh5.15.1)

(2) Unpack the archive and configure the environment

Set the environment variables:

```shell
export SQOOP_HOME=/home/sqoop-1.4.6-cdh5.15.1
export PATH=$PATH:$SQOOP_HOME/bin
```

In the conf/ directory of the Sqoop installation:

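```shell
# Create the sqoop-env.sh file
cp sqoop-env-template.sh sqoop-env.sh
```

Then add the following environment variables to sqoop-env.sh:

1. The Hadoop home directory (named HADOOP_COMMON_HOME and HADOOP_MAPRED_HOME in the template)
2. HIVE_HOME
3. HBASE_HOME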
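You can also fill in sqoop-env.sh with a script. The following is a minimal bash sketch. It assumes you pass the three home directories as arguments. The function name write_sqoop_env is hypothetical. The sketch appends the exports to the copied file, so run it once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the Hadoop, Hive and HBase homes to sqoop-env.sh.
# Arguments: Sqoop conf dir, Hadoop home, Hive home, HBase home.
write_sqoop_env() {
  local conf_dir="$1" hadoop_home="$2" hive_home="$3" hbase_home="$4"
  local env_file="$conf_dir/sqoop-env.sh"

  # Start from the template if sqoop-env.sh has not been created yet
  if [ ! -f "$env_file" ] && [ -f "$conf_dir/sqoop-env-template.sh" ]; then
    cp "$conf_dir/sqoop-env-template.sh" "$env_file"
  fi

  # The template calls the Hadoop home HADOOP_COMMON_HOME / HADOOP_MAPRED_HOME
  cat >> "$env_file" <<EOF
export HADOOP_COMMON_HOME=$hadoop_home
export HADOOP_MAPRED_HOME=$hadoop_home
export HIVE_HOME=$hive_home
export HBASE_HOME=$hbase_home
EOF
}
```

For example: write_sqoop_env "$SQOOP_HOME/conf" /home/hadoop /home/hive /home/hbase. The three paths are placeholders; replace them with your own installation directories.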

(3) Test the installation

```shell
sqoop help
```

Then test a database connection:

```shell
sqoop list-databases --connect jdbc:mysql://IP_ADDRESS/DATABASE --username DB_USER --password DB_PASSWORD
```
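Sqoop loads JDBC drivers from $SQOOP_HOME/lib, so the MySQL connector jar must be in that directory before the connection test can work. The java-json.jar needed for error (3) below also goes there.

The following is a minimal bash sketch of a pre-flight check. The function name check_sqoop_lib is hypothetical. The jar name patterns in the usage line are assumptions about how your jars are named.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether each jar pattern matches a file in $sqoop_home/lib.
# Arguments: Sqoop home, then one or more glob patterns. Returns 1 if any is missing.
check_sqoop_lib() {
  local sqoop_home="$1" pattern missing=0
  local -a matches
  shift
  for pattern in "$@"; do
    # Unquoted $pattern is glob-expanded; with no match the literal pattern remains
    matches=( "$sqoop_home"/lib/$pattern )
    if [ -e "${matches[0]}" ]; then
      echo "ok:      $pattern"
    else
      echo "missing: $pattern"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: check_sqoop_lib "$SQOOP_HOME" 'mysql-connector-java-*.jar' 'java-json*.jar'.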

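2. Use Sqoop to import MySQL data into HDFS

```shell
sqoop import --connect jdbc:mysql://IP_ADDRESS/DATABASE --username DB_USER --password DB_PASSWORD --table TABLE_NAME --driver com.mysql.jdbc.Driver
```

On success, Sqoop writes the table to a directory named after it under the user's HDFS home directory (for example /user/root/employees).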
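The same import can be wrapped in a small function. The following is a minimal bash sketch; the name import_mysql_to_hdfs and the SQOOP override variable are hypothetical. The options differ from the command above in three ways:

- -P prompts for the password instead of taking it on the command line. Sqoop itself warns that --password is insecure.
- --target-dir makes the HDFS output location explicit.
- --num-mappers 1 avoids the need for a split column on tables without a primary key, at the cost of parallelism.

```shell
#!/usr/bin/env bash
# Hypothetical helper: import one MySQL table into a chosen HDFS directory.
# Arguments: host, database, user, table, HDFS target dir.
# SQOOP is this sketch's own override (e.g. SQOOP=echo prints instead of running).
import_mysql_to_hdfs() {
  local host="$1" db="$2" user="$3" table="$4" target_dir="$5"
  "${SQOOP:-sqoop}" import \
    --connect "jdbc:mysql://${host}/${db}" \
    --username "$user" \
    -P \
    --table "$table" \
    --driver com.mysql.jdbc.Driver \
    --target-dir "$target_dir" \
    --num-mappers 1
}
```

For example: import_mysql_to_hdfs 192.168.99.16 test root employees /user/root/employees.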

Errors encountered:

(1) Error: java.lang.ClassNotFoundException: Class QueryResult not found


Go to the temporary folder where Sqoop compiled the generated code, and copy the generated jar package into the lib directory of the Sqoop installation:

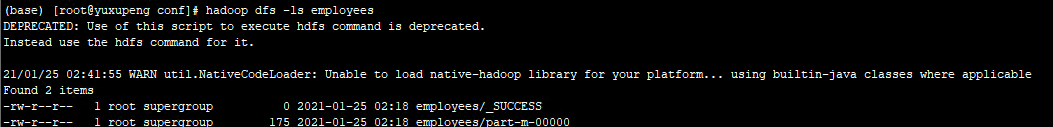

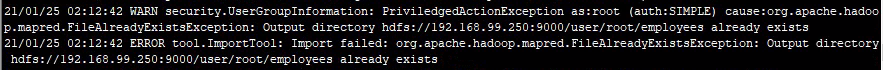
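Sqoop prints the path of the generated jar in its log, in a line beginning "Writing jar file:". By default the path is under /tmp/sqoop-USER/compile/, with one subdirectory per job.

The following is a minimal bash sketch that copies the newest generated jar. It assumes that directory layout and paths without newlines. The function name copy_generated_jar is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the most recently generated Sqoop jar into a lib directory.
# Arguments: compile root (assumed /tmp/sqoop-$USER/compile), target lib dir.
copy_generated_jar() {
  local compile_root="$1" lib_dir="$2" jar
  # Newest jar across the per-job subdirectories (ls -t sorts by modification time)
  jar=$(ls -t "$compile_root"/*/*.jar 2>/dev/null | head -n 1)
  if [ -z "$jar" ]; then
    echo "no generated jar found under $compile_root" >&2
    return 1
  fi
  cp "$jar" "$lib_dir/" && echo "copied $jar"
}
```

For example: copy_generated_jar "/tmp/sqoop-$(whoami)/compile" "$SQOOP_HOME/lib".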

(2) Error: org.apache.hadoop.mapred.FileAlreadyExistsException: Output directory hdfs://192.168.99.250:9000/user/root/employees already exists

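The output directory from an earlier run still exists. Delete it in HDFS, then rerun the import:

```shell
hdfs dfs -rm -r FOLDER_PATH
```

The older form, hadoop dfs -rmr FOLDER_PATH, still works but is deprecated.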
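You can guard the delete so that it only runs when the directory exists. The following is a minimal bash sketch using hdfs dfs -test -d and hdfs dfs -rm -r. The function name clear_hdfs_dir and the HDFS override variable are hypothetical.

Sqoop 1.4.6 also provides an import option, --delete-target-dir, which removes the target directory before importing. Using it avoids this error on reruns.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove an HDFS directory if it exists.
# HDFS is this sketch's own override (point it at a stub for a dry run).
clear_hdfs_dir() {
  local dir="$1"
  if "${HDFS:-hdfs}" dfs -test -d "$dir"; then
    "${HDFS:-hdfs}" dfs -rm -r "$dir"
  else
    echo "nothing to delete: $dir"
  fi
}
```

For example: clear_hdfs_dir /user/root/employees.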

(3) The following error is caused by a missing java-json.jar. To fix it, place java-json.jar in the lib directory of the Sqoop installation.

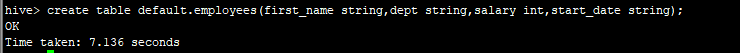

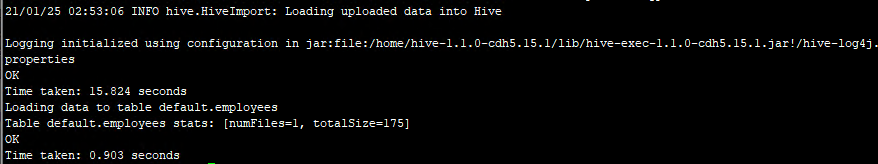

3. Use Sqoop to import MySQL data into Hive

```shell
sqoop import --connect jdbc:mysql://192.168.99.16/test --username root --password root --table employees --hive-import --hive-table employees --driver com.mysql.jdbc.Driver
```

This creates a new table in Hive, and the import succeeds.

Check the imported rows by querying the table in Hive.
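You can wrap the Hive import and the follow-up query together. The following is a minimal bash sketch. It assumes the same connection settings as the command above, uses -P instead of --password, and runs one query non-interactively with hive -e. The function name import_mysql_to_hive and the SQOOP and HIVE override variables are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: import a MySQL table into Hive, then print a few rows.
# Arguments: host, database, user, MySQL table, Hive table.
# SQOOP and HIVE are this sketch's own overrides for dry runs.
import_mysql_to_hive() {
  local host="$1" db="$2" user="$3" table="$4" hive_table="$5"
  "${SQOOP:-sqoop}" import \
    --connect "jdbc:mysql://${host}/${db}" \
    --username "$user" -P \
    --table "$table" \
    --driver com.mysql.jdbc.Driver \
    --hive-import \
    --hive-table "$hive_table" \
    --num-mappers 1 || return
  # Spot-check the result in Hive
  "${HIVE:-hive}" -e "SELECT * FROM ${hive_table} LIMIT 10"
}
```

For example: import_mysql_to_hive 192.168.99.16 test root employees employees.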

(1) Error: ERROR hive.HiveConfig: Could not load org.apache.hadoop.hive.conf.HiveConf. Make sure HIVE_CONF_DIR is set correctly.

Add the following line to the end of /etc/profile:

```shell
export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/*
```

Then reload the configuration:

```shell
source /etc/profile
```
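If you append the line by hand twice, the profile ends up with a duplicate entry. The following is a small bash sketch that appends the line only when it is missing. The function name add_hive_classpath is hypothetical. The profile path is a parameter, so you can try it on a scratch file first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the HADOOP_CLASSPATH line unless it is already present.
# Argument: profile file (defaults to /etc/profile, which needs root to edit).
add_hive_classpath() {
  local profile="${1:-/etc/profile}"
  # Single quotes keep $HADOOP_CLASSPATH and $HIVE_HOME unexpanded in the file
  local line='export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/*'
  if ! grep -qxF -- "$line" "$profile" 2>/dev/null; then
    printf '%s\n' "$line" >> "$profile"
  fi
}
```

For example: add_hive_classpath && source /etc/profile.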

4. Use Sqoop to export HDFS data to MySQL

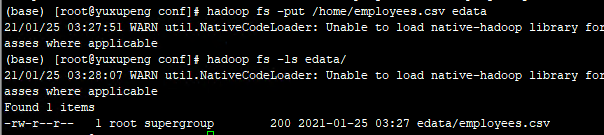

(1) Upload the local data to HDFS

(2) Run the export command:

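```shell
sqoop export --connect jdbc:mysql://192.168.99.16/test --username root --password root --table employees_s --export-dir edata --columns first_name,salary,dept --driver com.mysql.jdbc.Driver --input-fields-terminated-by '\001' --input-null-string '\\N' --input-null-non-string '\\N'
```

Hive-style data writes NULL as \N, and Sqoop expects this value escaped as '\\N'.

In this export, the errors had two causes: the exported fields did not match the MySQL table, and the encodings differed. The fix is to make the fields consistent with the table and to change the encoding to UTF-8.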
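You can script the upload and the export together. The following is a minimal bash sketch. It assumes the data file already uses \001 as its field separator and that the MySQL table is UTF-8. The function name export_to_mysql and the HDFS and SQOOP override variables are hypothetical.

useUnicode and characterEncoding are MySQL Connector/J connection properties that make the driver use UTF-8. The connection string is quoted because & is special to the shell.

```shell
#!/usr/bin/env bash
# Hypothetical helper: upload a local file to HDFS, then export that directory to MySQL.
# Arguments: local file, HDFS export dir, host, database, user, table, column list.
# HDFS and SQOOP are this sketch's own overrides for dry runs.
export_to_mysql() {
  local local_file="$1" export_dir="$2" host="$3" db="$4" user="$5" table="$6" columns="$7"
  "${HDFS:-hdfs}" dfs -mkdir -p "$export_dir" || return
  "${HDFS:-hdfs}" dfs -put -f "$local_file" "$export_dir/" || return
  # Connector/J properties so the driver talks UTF-8 (assumed to match the table)
  "${SQOOP:-sqoop}" export \
    --connect "jdbc:mysql://${host}/${db}?useUnicode=true&characterEncoding=utf-8" \
    --username "$user" -P \
    --table "$table" \
    --export-dir "$export_dir" \
    --columns "$columns" \
    --driver com.mysql.jdbc.Driver \
    --input-fields-terminated-by '\001' \
    --input-null-string '\\N' \
    --input-null-non-string '\\N'
}
```

For example: export_to_mysql edata.txt edata 192.168.99.16 test root employees_s first_name,salary,dept. The local file name edata.txt is a placeholder.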

5. Use Sqoop to export Hive data to MySQL

(1) Find the HDFS path where the Hive table is stored

(2) Export

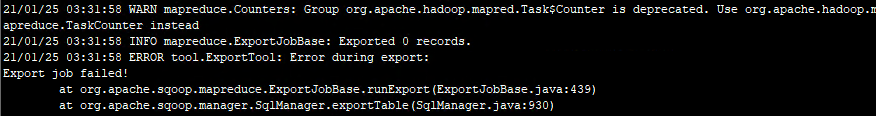

```shell
sqoop export --connect jdbc:mysql://192.168.99.16/test --username root --password root --table employees_s --export-dir /user/hive/warehouse/employees --driver com.mysql.jdbc.Driver --input-fields-terminated-by '\001' --input-null-string '\\N' --input-null-non-string '\\N'
```

Pay attention to the field types. Each column of the MySQL table must be able to hold the values of the corresponding Hive column.
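You can do step (1) from the shell. DESCRIBE FORMATTED prints a Location: line containing the table's HDFS path, which is the directory that --export-dir points at.

The following is a minimal bash sketch that extracts that line with awk. It assumes the Hive CLI's usual layout, where the path is the second whitespace-separated field. The function name hive_table_location and the HIVE override variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the HDFS location of a Hive table.
# HIVE is this sketch's own override (point it at a stub for a dry run).
hive_table_location() {
  local table="$1"
  # -S suppresses Hive's informational output; awk keeps only the Location: field
  "${HIVE:-hive}" -S -e "DESCRIBE FORMATTED ${table}" | awk '$1 == "Location:" { print $2 }'
}
```

For example: hive_table_location employees.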

6. Use Oracle as the Hive metastore database

Edit the hive-site.xml file as follows:

```xml
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:oracle:thin:@IP_ADDRESS:PORT:SID</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>oracle.jdbc.OracleDriver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>username</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>password</value>
  </property>
</configuration>
```

Then restart the Hive metastore service:

```shell
hive --service metastore &
```

7. Hive connects to HBase as the underlying database