Table of Contents

org.apache.lucene.analysis.Analyzer

TokenStreamComponents createComponents(String fieldName)

org.apache.lucene.analysis.TokenStream

Two types of subclasses of TokenStream

TokenStream inherits AttributeSource

AttributeSource usage rule description

Simple implementation of our own Analyzer, requirement description

The tokenizer provided by Lucene

StandardAnalyzer English word segmentation in Lucene core module

Lucene's Chinese word segmentation device SmartChineseAnalyzer

The tokenizer provided in the Lucene-Analyzers-common package

IKAnalyzer is an open source, lightweight Chinese word segmenter with many applications

The integration is required because the API of the createComponents method of Analyzer has changed.

Please compare IKAnalyzer and SmartChineseAnalyzer

Extend IKAnalyzer's stop words

Expand IKAnalyzer's dictionary

IKAnalyzer.cfg.xml file example

Lucene tokenizer API

org.apache.lucene.analysis.Analyzer

The Analyzer is the core API of the tokenizer component. It is responsible for constructing the TokenStream (word segmentation processor) that actually performs word segmentation on the text. Calling either of the following two methods returns the TokenStream for the input text.

```java
public final TokenStream tokenStream(final String fieldName,
                                     final Reader reader) {
  // Reuse the components cached for this field, or create them on first use.
  TokenStreamComponents components = reuseStrategy.getReusableComponents(this, fieldName);
  final Reader r = initReader(fieldName, reader);
  if (components == null) {
    components = createComponents(fieldName);
    reuseStrategy.setReusableComponents(this, fieldName, components);
  }
  // Hand the (possibly CharFilter-wrapped) Reader to the source Tokenizer.
  components.setReader(r);
  return components.getTokenStream();
}

public final TokenStream tokenStream(final String fieldName, final String text) {
  TokenStreamComponents components = reuseStrategy.getReusableComponents(this, fieldName);
  // Wrap the String in a Reader, reusing the cached ReusableStringReader when possible.
  @SuppressWarnings("resource") final ReusableStringReader strReader =
      (components == null || components.reusableStringReader == null) ?
      new ReusableStringReader() : components.reusableStringReader;
  strReader.setValue(text);
  final Reader r = initReader(fieldName, strReader);
  if (components == null) {
    components = createComponents(fieldName);
    reuseStrategy.setReusableComponents(this, fieldName, components);
  }
  components.setReader(r);
  components.reusableStringReader = strReader;
  return components.getTokenStream();
}
```

Question 1: Where does the TokenStream come from?

Question 2: Which object receives the character stream Reader passed into the method?

Question 3: What are components, and how are they obtained?

Question 4: What does the createComponents(fieldName) method do?

Question 5: Can Analyzer be instantiated directly?

Question 6: Why is it designed this way?

Question 7: Which subclasses implement Analyzer?

Question 8: To implement your own Analyzer, which method must you implement?

TokenStreamComponents createComponents(String fieldName)

It is the only abstract method, and the only extension point, in Analyzer. You build your own Analyzer by providing an implementation of this method.

Parameter: fieldName. If different fields need different TokenStream components, decide based on this parameter; otherwise it can be ignored.

Return value: a TokenStreamComponents object. Inside createComponents we create the TokenStream components we want and return them.

TokenStreamComponents

TokenStream component holder: this class wraps the TokenStream that is handed out for external use. It provides access to two fields: source (the component that reads the input) and sink (the TokenStream that external callers consume).

Question 1: How many constructors does this class have? How do they differ, and what does that tell you?

Question 2: What are the types of the source and sink fields? How are these two types related?

Question 3: This class contains no code that creates the source and sink objects; they are passed in through the constructor. So, in the Analyzer.createComponents method, what must be created before we can construct a TokenStreamComponents object?

Question 4: Which object receives the input stream passed to Analyzer.tokenStream()? Which object is the TokenStream that is returned? Must the sink TokenStream wrap a source object?

If so, must this wrapping also be done in the Analyzer.createComponents method?
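A minimal sketch of createComponents, assuming Lucene 7.x with lucene-analyzers-common on the classpath (for WhitespaceTokenizer; in 7.x LowerCaseFilter lives in lucene-core's org.apache.lucene.analysis package). The class name WhitespaceLowerCaseAnalyzer and the terms helper are hypothetical. It shows the order the questions above point to: the source Tokenizer is created first, then wrapped to form the sink, and both are passed to the TokenStreamComponents constructor.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.LowerCaseFilter;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.core.WhitespaceTokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

// Hypothetical example Analyzer: whitespace splitting followed by lowercasing.
class WhitespaceLowerCaseAnalyzer extends Analyzer {

    @Override
    protected TokenStreamComponents createComponents(String fieldName) {
        // fieldName is ignored: every field gets the same chain.
        // 1. Create the source first. It has no Reader yet; tokenStream() sets one later.
        Tokenizer source = new WhitespaceTokenizer();
        // 2. Wrap the source to obtain the sink that callers will consume.
        TokenStream sink = new LowerCaseFilter(source);
        // 3. Hand both to TokenStreamComponents; the Analyzer may cache it for reuse.
        return new TokenStreamComponents(source, sink);
    }

    // Hypothetical helper: returns the term text of every token.
    static List<String> terms(Analyzer analyzer, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream("content", text)) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }
}
```

Calling terms twice on the same analyzer instance exercises the reuse path of tokenStream(): the second call finds the cached components and only swaps in a new Reader.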

org.apache.lucene.analysis.TokenStream

The word segmentation processor is responsible for segmenting and processing the input text.

Question 1: Following on from the previous page, does TokenStream have a corresponding method for receiving the input?

Question 2: TokenStream is an abstract class. What are its methods, and which of them are abstract? What is notable about its constructors?

Question 3: What are the two kinds of concrete subclasses of TokenStream? How do they differ?

Question 4: Which class does TokenStream inherit from? What is it for?

Concept: Token Attribute, the attributes of a token (token information), such as the term text it contains, its position, and so on.

Two types of subclasses of TokenStream

Tokenizer: a TokenStream whose input is a Reader character stream; it splits tokens out of that stream.

TokenFilter: a token filter whose input is another TokenStream; it applies a specific kind of processing to the tokens flowing out of the preceding TokenStream.

Question 1: Read the source code and Javadoc of the Tokenizer class. How is this class used? What is the only thing you need to do to implement your own Tokenizer?

Question 2: What are the subclasses of Tokenizer?

Question 3: Read the source code and Javadoc of the TokenFilter class. How do you implement your own TokenFilter?

Question 4: What are the subclasses of TokenFilter?

Question 5: Is TokenFilter a typical use of the decorator pattern? If we need to apply several kinds of processing to tokens, we only need to wrap filters layer by layer in the order the processing should happen, with each layer performing one specific task. Different processing needs then only require a different wrapping order and number of layers.
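To see the decorator pattern at work, the sketch below wraps the same WhitespaceTokenizer with LowerCaseFilter and StopFilter in two different orders. It assumes Lucene 7.x package locations (LowerCaseFilter, StopFilter and CharArraySet in org.apache.lucene.analysis, WhitespaceTokenizer in lucene-analyzers-common); the class FilterOrderDemo and its methods are hypothetical. The stop set is case-sensitive and contains only "the".

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

import org.apache.lucene.analysis.CharArraySet;
import org.apache.lucene.analysis.LowerCaseFilter;
import org.apache.lucene.analysis.StopFilter;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.core.WhitespaceTokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

class FilterOrderDemo {

    // Case-sensitive stop set (ignoreCase = false) holding only lowercase "the".
    private static final CharArraySet STOPS = new CharArraySet(Arrays.asList("the"), false);

    // The innermost layer: a Tokenizer reading the given text.
    static Tokenizer tokenizer(String text) {
        Tokenizer tok = new WhitespaceTokenizer();
        tok.setReader(new StringReader(text));
        return tok;
    }

    // Layers: tokenizer -> lowercase -> stop.
    static List<String> lowercaseThenStop(String text) throws IOException {
        return drain(new StopFilter(new LowerCaseFilter(tokenizer(text)), STOPS));
    }

    // Layers: tokenizer -> stop -> lowercase.
    static List<String> stopThenLowercase(String text) throws IOException {
        return drain(new LowerCaseFilter(new StopFilter(tokenizer(text), STOPS)));
    }

    // Consumes the outermost layer; reset/end/close are passed down to the Tokenizer.
    static List<String> drain(TokenStream ts) throws IOException {
        List<String> out = new ArrayList<>();
        CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
        try {
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        } finally {
            ts.close();
        }
        return out;
    }
}
```

For "The quick the", lowercaseThenStop yields [quick], while stopThenLowercase yields [the, quick]: the stop filter only sees the capitalized "The" after it has already run, so the same layers in a different order give a different result.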

TokenStream inherits AttributeSource

Question 1: In TokenStream and its two kinds of subclasses, do we see where token information is stored, such as the token's word and the position of that word?

Concept: Token Attribute, the attributes of a token (token information), such as the token's word, the position of the word, and so on. These attributes are produced by the processing of the various Tokenizers and TokenFilters, and different Tokenizer/TokenFilter combinations produce different token attributes. The set changes dynamically: you do not know in advance how many attributes there will be or what they are. So how is token information stored? The answer is AttributeSource, Attribute, AttributeImpl and AttributeFactory:

- 1. AttributeSource stores Attribute objects and provides the corresponding methods for adding and retrieving them.

- 2. An Attribute is an interface and an AttributeImpl is its implementation; one attribute object can store one or more pieces of attribute information.

- 3. AttributeFactory is a factory responsible for creating attribute objects. By default, TokenStream uses a factory built with AttributeFactory.getStaticImplementation. We do not need to supply one; we only need to follow its rules.
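A small sketch of the storage and retrieval methods, assuming Lucene 7.x; the class AttributeSourceDemo is hypothetical. Note that a plain new AttributeSource() uses AttributeFactory.DEFAULT_ATTRIBUTE_FACTORY rather than the TokenStream default described above, so CharTermAttribute resolves to CharTermAttributeImpl here.

```java
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;
import org.apache.lucene.util.AttributeSource;

class AttributeSourceDemo {

    // Adding the same attribute interface twice returns the one stored instance.
    static boolean addReturnsSameInstance() {
        AttributeSource source = new AttributeSource();
        CharTermAttribute first = source.addAttribute(CharTermAttribute.class);
        CharTermAttribute second = source.addAttribute(CharTermAttribute.class);
        return first == second;
    }

    // Writes through the reference from addAttribute, reads back through getAttribute.
    static String storeAndRetrieve(String word) {
        AttributeSource source = new AttributeSource();
        source.addAttribute(CharTermAttribute.class).append(word);
        return source.getAttribute(CharTermAttribute.class).toString();
    }

    // The factory maps the interface to an implementation class; report its simple name.
    static String implementationName() {
        AttributeSource source = new AttributeSource();
        return source.addAttribute(CharTermAttribute.class).getClass().getSimpleName();
    }

    // hasAttribute reports only attributes that have actually been added.
    static boolean hasOffsetBeforeAdding() {
        return new AttributeSource().hasAttribute(OffsetAttribute.class);
    }
}
```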

AttributeSource usage rule description

- 1. To store token attributes in a TokenStream implementation, add an attribute object to the AttributeSource with one of its two add methods:

  - <T extends Attribute> T addAttribute(Class<T> attClass): pass the interface class of the attribute you need (an interface extending Attribute), and it returns an instance of the corresponding implementation class. Getting from an interface to an instance is why an AttributeFactory is needed.
  - void addAttributeImpl(AttributeImpl att): add an implementation instance you created yourself.

- 2. Each added Attribute implementation class has only one instance in an AttributeSource. During tokenization, every token reuses this instance to store its attribute information. Calling the add method again returns the instance already stored.

- 3. To read a token's attribute information, hold a reference to the attribute instance, obtained through the addAttribute or getAttribute method, and call its instance methods to get and set values.

- 4. When we use our own Attribute in a TokenStream with the default factory, how does the add method know which implementation class to use? It follows these rules:

  - (1) The custom attribute interface MyAttribute extends Attribute.
  - (2) The custom attribute implementation class extends AttributeImpl and implements the custom interface MyAttribute.
  - (3) The implementation class provides a public no-argument constructor.
  - (4) So that the default factory can find the implementation class from the custom interface, the implementation class must be named after the interface plus Impl (MyAttributeImpl).

  Check whether the Attribute implementations provided in Lucene follow this pattern.
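The four rules can be checked with a custom attribute. This is a sketch assuming Lucene 7.x, where AttributeImpl subclasses implement clear(), copyTo() and reflectWith(); the names MyWordAttribute, MyWordAttributeImpl and CustomAttributeDemo are hypothetical. Both types are nested in the same class, so their binary names (CustomAttributeDemo$MyWordAttribute and CustomAttributeDemo$MyWordAttributeImpl) still follow the interface-name-plus-Impl rule.

```java
import org.apache.lucene.util.Attribute;
import org.apache.lucene.util.AttributeImpl;
import org.apache.lucene.util.AttributeReflector;
import org.apache.lucene.util.AttributeSource;

class CustomAttributeDemo {

    // Rule (1): the custom attribute interface extends Attribute.
    public interface MyWordAttribute extends Attribute {
        void setWord(String word);
        String getWord();
    }

    // Rules (2)-(4): extends AttributeImpl, implements MyWordAttribute,
    // has a public no-arg constructor, and is named <interface name> + "Impl".
    public static final class MyWordAttributeImpl extends AttributeImpl implements MyWordAttribute {
        private String word;

        public MyWordAttributeImpl() {}

        @Override public void setWord(String word) { this.word = word; }
        @Override public String getWord() { return word; }

        // Called by clearAttributes() before each new token.
        @Override public void clear() { word = null; }

        // Used when attribute state is copied between sources.
        @Override public void copyTo(AttributeImpl target) {
            ((MyWordAttribute) target).setWord(word);
        }

        // Exposes the value to reflection-based tooling such as reflectAsString().
        @Override public void reflectWith(AttributeReflector reflector) {
            reflector.reflect(MyWordAttribute.class, "word", word);
        }
    }

    // The default factory locates MyWordAttributeImpl from the interface name alone.
    static String implementationName() {
        return new AttributeSource().addAttribute(MyWordAttribute.class).getClass().getSimpleName();
    }

    // clearAttributes() resets the stored value through clear().
    static String wordAfterClear(String word) {
        AttributeSource source = new AttributeSource();
        MyWordAttribute att = source.addAttribute(MyWordAttribute.class);
        att.setWord(word);
        source.clearAttributes();
        return att.getWord();
    }

    // reflectAsString(false) prints key=value pairs produced by reflectWith().
    static String reflect(String word) {
        AttributeSource source = new AttributeSource();
        source.addAttribute(MyWordAttribute.class).setWord(word);
        return source.reflectAsString(false);
    }
}
```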

Steps to use a TokenStream

In an application we do not use the tokenizer directly; we only need to create the Analyzer we want and hand it to the indexing and search engines. When choosing a tokenizer, however, we need to test its effect, so we need to know how to use the TokenStream it produces.

Steps for usage:

- 1. Get the attribute objects you want from the TokenStream (token information is stored in attribute objects).

- 2. Call reset() on the TokenStream. This is required because TokenStream instances are reused.

- 3. Call incrementToken() on the TokenStream in a loop, advancing one token at a time, until it returns false.

- 4. Inside the loop, read the attribute values you want for each token.

- 5. Call end() on the TokenStream to perform end-of-stream processing.

- 6. Call close() on the TokenStream to release the resources it holds.
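The six steps map directly onto code. A sketch assuming Lucene 7.x; TokenStreamSteps.analyze is a hypothetical helper that works with any Analyzer and formats each token as term[start,end).

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;

class TokenStreamSteps {

    static List<String> analyze(Analyzer analyzer, String text) throws IOException {
        List<String> out = new ArrayList<>();
        TokenStream ts = analyzer.tokenStream("content", text);
        // Step 1: get the attribute objects that will hold each token's information.
        CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
        OffsetAttribute offset = ts.addAttribute(OffsetAttribute.class);
        try {
            // Step 2: reset before consuming; the stream may be a reused instance.
            ts.reset();
            // Step 3: advance token by token until incrementToken() returns false.
            while (ts.incrementToken()) {
                // Step 4: read the attribute values of the current token.
                out.add(term.toString() + "[" + offset.startOffset() + "," + offset.endOffset() + ")");
            }
            // Step 5: end-of-stream processing (for example, setting the final offset).
            ts.end();
        } finally {
            // Step 6: release resources so the Analyzer can hand out this stream again.
            ts.close();
        }
        return out;
    }
}
```

With a WhitespaceAnalyzer, analyze(analyzer, "Hello Lucene") returns [Hello[0,5), Lucene[6,12)]. A second call on the same Analyzer works only because step 6 closed the previous stream; if close() is skipped, the next tokenStream() call fails with an IllegalStateException.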

Thinking: TokenStream follows the decorator pattern. How do reset(), incrementToken(), end() and close() propagate through the chain? Check the source code of TokenStream, Tokenizer and TokenFilter.

Simple implementation of our own Analyzer, requirement description

Tokenizer: split English text into words on whitespace. The attribute information to record is the word.

TokenFilter: the processing to perform is converting each word to lowercase.

Note: when segmenting, the Tokenizer reads one character at a time from the character stream and checks whether it is whitespace to decide where a word ends.

Thinking: Tokenizer is an AttributeSource object, and so is TokenFilter. Both of our implementation classes call the addAttribute method. Why is there still only one attribute object? Check the source code to find the answer.
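One possible implementation of this requirement, assuming Lucene 7.x. All class names are hypothetical, and the built-in CharTermAttribute stands in for the word attribute (a custom attribute such as the MyWordAttribute pattern above could be used instead). The Tokenizer reads the Reader one character at a time, as the note describes.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenFilter;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

class MyAnalyzerDemo {

    // Tokenizer: reads one character at a time and splits on whitespace.
    static final class MyWhitespaceTokenizer extends Tokenizer {
        private final CharTermAttribute termAtt = addAttribute(CharTermAttribute.class);
        private final StringBuilder word = new StringBuilder();

        @Override
        public boolean incrementToken() throws IOException {
            clearAttributes();
            word.setLength(0);
            int c;
            while ((c = input.read()) != -1) {
                if (Character.isWhitespace(c)) {
                    if (word.length() > 0) {
                        break;    // whitespace ends the current word
                    }
                    continue;     // skip leading or repeated whitespace
                }
                word.append((char) c);
            }
            if (word.length() == 0) {
                return false;     // the character stream is exhausted
            }
            termAtt.setEmpty().append(word);
            return true;
        }
    }

    // TokenFilter: lowercases the term produced by the wrapped stream.
    static final class MyLowerCaseFilter extends TokenFilter {
        private final CharTermAttribute termAtt = addAttribute(CharTermAttribute.class);

        MyLowerCaseFilter(TokenStream in) {
            super(in);
        }

        @Override
        public boolean incrementToken() throws IOException {
            if (!input.incrementToken()) {
                return false;
            }
            char[] buffer = termAtt.buffer();
            for (int i = 0; i < termAtt.length(); i++) {
                buffer[i] = Character.toLowerCase(buffer[i]);
            }
            return true;
        }
    }

    static final class MyAnalyzer extends Analyzer {
        @Override
        protected TokenStreamComponents createComponents(String fieldName) {
            Tokenizer source = new MyWhitespaceTokenizer();
            return new TokenStreamComponents(source, new MyLowerCaseFilter(source));
        }
    }

    static List<String> terms(String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (Analyzer analyzer = new MyAnalyzer();
             TokenStream ts = analyzer.tokenStream("content", text)) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }

    // True if the filter and the tokenizer hold the very same CharTermAttribute instance.
    static boolean sharesTermAttribute() {
        Tokenizer source = new MyWhitespaceTokenizer();
        TokenFilter filter = new MyLowerCaseFilter(source);
        return source.getAttribute(CharTermAttribute.class) == filter.getAttribute(CharTermAttribute.class);
    }
}
```

terms("Hello  Lucene\tWORLD") gives [hello, lucene, world], and sharesTermAttribute() returns true, which confirms the single attribute object the question asks about; the constructors of TokenStream and AttributeSource explain why.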

Summary

Having studied the API and source code, can you identify some of the author's design ideas?

- 1. How does the design separate what varies from what stays fixed, for example in Analyzer and TokenStream?

- 2. How does the design handle the fact that different tokenizers have different processing logic?

The tokenizer provided by Lucene

StandardAnalyzer English word segmentation in Lucene core module

Check which attribute information it analyzes and stores, and try how it segments English text and Chinese text.
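To see which attribute information StandardAnalyzer produces, the sketch below prints the term, offsets, position increment and token type of each token. It assumes Lucene 7.3, where StandardAnalyzer is in lucene-core; StandardAnalyzerDemo is hypothetical.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;
import org.apache.lucene.analysis.tokenattributes.PositionIncrementAttribute;
import org.apache.lucene.analysis.tokenattributes.TypeAttribute;

class StandardAnalyzerDemo {

    // Formats each token as: term [start-end] +positionIncrement type
    static List<String> dump(String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (Analyzer analyzer = new StandardAnalyzer();
             TokenStream ts = analyzer.tokenStream("content", text)) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            OffsetAttribute offset = ts.addAttribute(OffsetAttribute.class);
            PositionIncrementAttribute posInc = ts.addAttribute(PositionIncrementAttribute.class);
            TypeAttribute type = ts.addAttribute(TypeAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString() + " [" + offset.startOffset() + "-" + offset.endOffset()
                        + "] +" + posInc.getPositionIncrement() + " " + type.type());
            }
            ts.end();
        }
        return out;
    }
}
```

For Chinese input, StandardAnalyzer emits every Han character as a separate <IDEOGRAPHIC> token (张三说 becomes 张, 三, 说), which is why a dedicated Chinese analyzer is needed.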

Lucene's Chinese word segmentation device SmartChineseAnalyzer

```xml
<!-- Lucene's Chinese tokenizer module, lucene-analyzers-smartcn -->
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-analyzers-smartcn</artifactId>
    <version>7.3.0</version>
</dependency>
```

Try how it segments Chinese and English text.
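A sketch for trying SmartChineseAnalyzer, assuming the lucene-analyzers-smartcn 7.3.0 dependency above is on the classpath; SmartChineseDemo is hypothetical. Running it on a Chinese sentence and on an English sentence lets you compare the two kinds of output side by side.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.cn.smart.SmartChineseAnalyzer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

class SmartChineseDemo {

    // Segments text with SmartChineseAnalyzer (default stop words) and returns the terms.
    static List<String> terms(String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (Analyzer analyzer = new SmartChineseAnalyzer();
             TokenStream ts = analyzer.tokenStream("content", text)) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }
}
```

Unlike StandardAnalyzer, it groups Chinese characters into multi-character words using its built-in dictionary and model.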

The tokenizer provided in the Lucene-Analyzers-common package

See which tokenizers it provides.

IKAnalyzer integration
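A quick way to survey a few of them is to run the same text through several analyzers. This sketch assumes lucene-analyzers-common 7.x; CommonAnalyzersDemo is hypothetical, and the four analyzers are only a sample of what the package contains.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.cjk.CJKAnalyzer;
import org.apache.lucene.analysis.core.KeywordAnalyzer;
import org.apache.lucene.analysis.core.SimpleAnalyzer;
import org.apache.lucene.analysis.core.WhitespaceAnalyzer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;

class CommonAnalyzersDemo {

    // Maps each analyzer's simple class name to the terms it produces for text.
    static Map<String, List<String>> compare(String text) throws IOException {
        Analyzer[] analyzers = {
            new WhitespaceAnalyzer(), // splits on whitespace only, keeps case
            new SimpleAnalyzer(),     // splits on non-letters and lowercases
            new KeywordAnalyzer(),    // the whole input becomes a single token
            new CJKAnalyzer()         // CJK characters become overlapping bigrams
        };
        Map<String, List<String>> results = new LinkedHashMap<>();
        for (Analyzer analyzer : analyzers) {
            try (Analyzer a = analyzer) {
                results.put(a.getClass().getSimpleName(), terms(a, text));
            }
        }
        return results;
    }

    static List<String> terms(Analyzer analyzer, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream("content", text)) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }
}
```

For "Lucene is Java-based", WhitespaceAnalyzer keeps "Java-based" intact, SimpleAnalyzer splits it into "java" and "based", and KeywordAnalyzer returns the whole string; for 张三说, CJKAnalyzer produces the bigrams 张三 and 三说.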

IKAnalyzer is an open source, lightweight Chinese word segmenter with many applications

It was originally developed for Lucene and later grew into an independent word segmentation component. Its Lucene support only goes up to Lucene 4.0, so a simple integration is needed to use it with later Lucene versions.

```xml
<!-- ikanalyzer Chinese tokenizer -->
<dependency>
    <groupId>com.janeluo</groupId>
    <artifactId>ikanalyzer</artifactId>
    <version>2012_u6</version>
    <exclusions>
        <exclusion>
            <groupId>org.apache.lucene</groupId>
            <artifactId>lucene-core</artifactId>
        </exclusion>
        <exclusion>
            <groupId>org.apache.lucene</groupId>
            <artifactId>lucene-queryparser</artifactId>
        </exclusion>
        <exclusion>
            <groupId>org.apache.lucene</groupId>
            <artifactId>lucene-analyzers-common</artifactId>
        </exclusion>
    </exclusions>
</dependency>

<!-- lucene-queryparser query parser module -->
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-queryparser</artifactId>
    <version>7.3.0</version>
</dependency>
```

The integration is required because the API of the createComponents method of Analyzer has changed.

Integration steps

1. Find the Lucene support classes in the IKAnalyzer package (IKAnalyzer and IKTokenizer in org.wltea.analyzer.lucene) and compare IKAnalyzer's createComponents method with the current Analyzer API.

2. Create new versions of these two classes: copy their code directly and adapt the constructor and method parameters to the new API.
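A sketch of the adapted classes, assuming Lucene 7.x and IK Analyzer 2012_u6's org.wltea.analyzer.core.IKSegmenter (constructor IKSegmenter(Reader, boolean useSmart), next(), reset(Reader)) together with the Lexeme accessors that IK's own IKTokenizer uses. IKTokenizer7 and IKAnalyzer7 are hypothetical names. The main changes from the Lucene 4 version: the Tokenizer no longer receives a Reader in its constructor, so the segmenter gets the real Reader in reset(); end() must call super.end(); and createComponents takes only fieldName.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.Tokenizer;
import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;
import org.apache.lucene.analysis.tokenattributes.TypeAttribute;
import org.wltea.analyzer.core.IKSegmenter;
import org.wltea.analyzer.core.Lexeme;

class IKLucene7 {

    // Hypothetical IKTokenizer adapted to the Lucene 5+ Tokenizer API.
    static final class IKTokenizer7 extends Tokenizer {
        private final IKSegmenter segmenter;
        private final CharTermAttribute termAtt = addAttribute(CharTermAttribute.class);
        private final OffsetAttribute offsetAtt = addAttribute(OffsetAttribute.class);
        private final TypeAttribute typeAtt = addAttribute(TypeAttribute.class);
        private int endPosition;

        IKTokenizer7(boolean useSmart) {
            // 'input' is only a placeholder here; reset() passes the real Reader on.
            segmenter = new IKSegmenter(input, useSmart);
        }

        @Override
        public boolean incrementToken() throws IOException {
            clearAttributes();
            Lexeme lexeme = segmenter.next();
            if (lexeme == null) {
                return false;
            }
            termAtt.append(lexeme.getLexemeText());
            offsetAtt.setOffset(correctOffset(lexeme.getBeginPosition()),
                                correctOffset(lexeme.getEndPosition()));
            endPosition = lexeme.getEndPosition();
            typeAtt.setType(lexeme.getLexemeTypeString());
            return true;
        }

        @Override
        public void reset() throws IOException {
            super.reset();            // makes the pending Reader available as 'input'
            segmenter.reset(input);
            endPosition = 0;
        }

        @Override
        public void end() throws IOException {
            super.end();
            int finalOffset = correctOffset(endPosition);
            offsetAtt.setOffset(finalOffset, finalOffset);
        }
    }

    // Hypothetical IKAnalyzer adapted to createComponents(String fieldName).
    static final class IKAnalyzer7 extends Analyzer {
        private final boolean useSmart; // true = smart mode, false = fine-grained

        IKAnalyzer7(boolean useSmart) {
            this.useSmart = useSmart;
        }

        @Override
        protected TokenStreamComponents createComponents(String fieldName) {
            return new TokenStreamComponents(new IKTokenizer7(useSmart));
        }
    }

    static List<String> terms(Analyzer analyzer, String text) throws IOException {
        List<String> out = new ArrayList<>();
        try (TokenStream ts = analyzer.tokenStream("content", text)) {
            CharTermAttribute term = ts.addAttribute(CharTermAttribute.class);
            ts.reset();
            while (ts.incrementToken()) {
                out.add(term.toString());
            }
            ts.end();
        }
        return out;
    }
}
```

Constructing IKAnalyzer7(false) and IKAnalyzer7(true) gives the fine-grained and smart modes respectively, which is what the tests below compare.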

IKAnalyzer provides two word segmentation modes, fine-grained and smart, selected by its constructor parameter.

After integrating, test the effect of both fine-grained and smart segmentation.

Test sentence: What Zhang San said is true

Please compare IKAnalyzer and SmartChineseAnalyzer

Test sentence: What Zhang San said is true. Lucene is an open source, java-based search engine development kit.

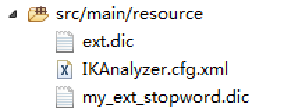

Extend IKAnalyzer's stop words

IK ships with only a few default stop words, so we often need to extend them. A fairly complete stop-word list can be downloaded from https://github.com/cseryp/stopwords.

Steps to extend IK's stop words:

1. Create the IK configuration file IKAnalyzer.cfg.xml in the classpath directory.

2. In the configuration file, add an entry for the extended stop-word file: <entry key="ext_stopwords">my_ext_stopword.dic</entry>. If there is more than one file, separate them with ";".

3. Create our extended stop-word file my_ext_stopword.dic in the classpath directory.

4. Edit the file and add stop words, one per line.

Expand IKAnalyzer's dictionary

Many new words appear every year. Steps to add new words to the tokenizer's dictionary:

1. In the IK configuration file IKAnalyzer.cfg.xml in the classpath directory, add an entry for the extended word file: <entry key="ext_dict">ext.dic</entry>. If there is more than one file, separate them with ";".

2. Create the extended word file ext.dic in the classpath directory.

3. Note that the file must be UTF-8 encoded.

4. Edit the file and add new words, one per line.

Test sentence: Once "Amazing My Country" was broadcast, it was praised by all parties and strongly aroused the patriotism and pride of the people of the country! New words: amazing my country

IKAnalyzer.cfg.xml file example

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
    <comment>IK Analyzer extension configuration</comment>
    <!-- Users can configure their own extension dictionaries here -->
    <entry key="ext_dict">ext.dic</entry>
    <!-- Users can configure their own extension stop-word dictionaries here -->
    <entry key="ext_stopwords">my_ext_stopword.dic</entry>
</properties>
```
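Because this file uses the standard java.util.Properties XML DTD, it can be inspected with the JDK alone. The sketch below assumes, as the DOCTYPE and the ";" rule in the steps above suggest, that each entry is a properties value listing one or more dictionary files separated by ";". IKConfigReader is hypothetical and is not IK's own configuration loader; it is useful for checking that a configuration file parses and names the files you expect.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

class IKConfigReader {

    // Parses an IKAnalyzer.cfg.xml-style document with the JDK's XML properties loader.
    static Properties parse(String xml) throws IOException {
        Properties props = new Properties();
        props.loadFromXML(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        return props;
    }

    // Splits a ';'-separated entry into trimmed, non-empty file names.
    static List<String> fileList(Properties props, String key) {
        List<String> files = new ArrayList<>();
        String value = props.getProperty(key);
        if (value == null) {
            return files; // entry not configured
        }
        for (String part : value.split(";")) {
            String name = part.trim();
            if (!name.isEmpty()) {
                files.add(name);
            }
        }
        return files;
    }
}
```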