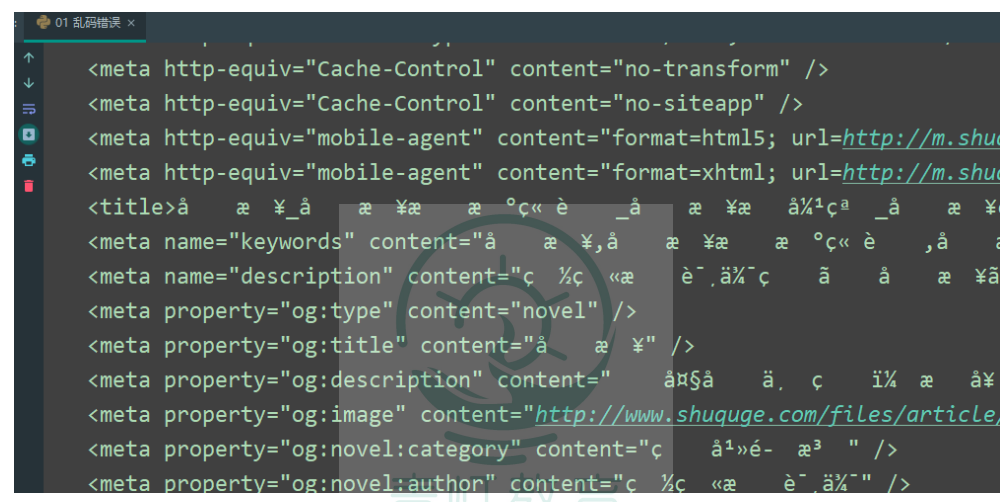

Garbled text on the webpage

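The text is garbled because the response was decoded with the wrong character encoding: no encoding was set for the page, so requests fell back on a guess that does not match the page's actual encoding. Setting the encoding to the one detected from the content fixes it: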

response.encoding = response.apparent_encoding
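To see why the wrong codec produces garbled text, here is a minimal offline sketch. The sample string and the choice of GBK are assumptions made for illustration; `response.apparent_encoding` exists to detect the right codec from the bytes for you.

```python
# Sample page text and its real encoding (both are assumptions for this demo).
page_text = "你好，世界"
raw_bytes = page_text.encode("gbk")

# Decoding with the wrong codec does not raise an error: ISO-8859-1 maps
# every byte to some character, so the result is silently garbled.
garbled = raw_bytes.decode("iso-8859-1")

# Decoding with the codec the page was actually written in restores the text.
restored = raw_bytes.decode("gbk")

print("garbled:", ascii(garbled))
print("restored matches original:", restored == page_text)
```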


Request header parameters

InvalidHeader: Invalid return character or leading space in header: User-Agent

import requests

headers = {

    # The leading space in this value is what triggers InvalidHeader.

    'User-Agent': ' Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4128.3 Safari/537.36'

}

response = requests.get('http://www.shuquge.com/txt/8659/index.html',

                        headers=headers)

response.encoding = response.apparent_encoding

html = response.text

print(html)

The cause is easy to miss: there is an extra space before 'Mozilla' in the User-Agent value. Delete the space and the request goes through.
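Stray whitespace often sneaks in when headers are copied from the browser. One way to guard against it is to strip every header name and value before sending. This is a minimal sketch; `clean_headers` is a hypothetical helper, not part of requests.

```python
def clean_headers(headers):
    """Return a copy of headers with surrounding whitespace removed
    from every name and value."""
    return {name.strip(): value.strip() for name, value in headers.items()}


headers = {
    "User-Agent": " Mozilla/5.0 (Windows NT 10.0; WOW64)",  # leading space in value
    "Host ": "www.shuquge.com",                             # trailing space in name
}

print(clean_headers(headers))
```

The cleaned dictionary can then be passed to `requests.get(..., headers=...)` as usual.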

No data & parameter error

import requests

headers = {

    'Host': 'www.guazi.com',

    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4128.3 Safari/537.36',

}

response = requests.get('https://www.guazi.com/cs/20e17311773b1706x.htm',

                        headers=headers)

response.encoding = response.apparent_encoding

print(response.text)

If the response differs from the data you expect (for example, from what the browser shows), a request parameter is usually at fault. Check whether a parameter is missing or has been given the wrong value.
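A quick way to run that check is to compare the headers the script sends with the ones the browser sends for the same page. The sketch below is illustrative only: `missing_headers` is a hypothetical helper, and the browser header list is an assumed example, not a statement about what this particular site requires.

```python
def missing_headers(sent, expected):
    """List header names that appear in `expected` but not in `sent`.

    Names are compared case-insensitively, as HTTP header names ignore case.
    """
    sent_names = {name.strip().lower() for name in sent}
    return [name for name in expected if name.strip().lower() not in sent_names]


# Headers the script sends.
sent = {"Host": "www.guazi.com", "User-Agent": "Mozilla/5.0"}

# Header names copied from the browser's developer tools (assumed values).
browser = ["Host", "User-Agent", "Cookie", "Referer"]

print(missing_headers(sent, browser))
```

Adding the missing headers one at a time shows which of them the server actually checks.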

The target computer actively refuses the connection

import requests

proxy_response = requests.get('http://134.175.188.27:5010/get')

proxy = proxy_response.json()

print(proxy)

Error:

requests.exceptions.ConnectionError:

HTTPConnectionPool(host='134.175.188.27', port=5010):

Max retries exceeded with url: /get (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x0000023AB83AC828>: Failed to establish a new connection: [WinError 10061] No connection could be made because the target machine actively refused it',))

Possible causes:

- The crawler has been detected and blocked

- The URL was entered incorrectly

- The server has stopped providing the service
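To narrow down these causes, it can help to test whether the port accepts TCP connections at all, independently of requests. The sketch below uses only the standard library; `port_is_open` is a hypothetical helper, and the demo runs against a throwaway local listener so that it works offline.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Demo against a temporary local listener.
server = socket.socket()
server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
server.listen(1)
port = server.getsockname()[1]

print(port_is_open("127.0.0.1", port))  # checked while the listener is up
server.close()
print(port_is_open("127.0.0.1", port))  # checked after the listener is gone
```

If the same check fails for the real host and port, the problem lies with the address or the server rather than with the request headers.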

Connection timeout

import requests

# The timeout is deliberately tiny so that the connection attempt times out.

proxy_response = requests.get('http://134.175.188.27:5010/get', timeout=0.0001)

proxy = proxy_response.json()

print(proxy)

Error:

requests.exceptions.ConnectTimeout:

HTTPConnectionPool(host='134.175.188.27', port=5010):

Max retries exceeded with url: /get (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x000002045EF9B8D0>, 'Connection to 134.175.188.27 timed out. (connect timeout=0.0001)'))

Exception handling

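import requests

try:

    proxy_response = requests.get('http://134.175.188.27:5010/get', timeout=0.0001)

    proxy = proxy_response.json()

    print(proxy)

# Catch only request failures and invalid JSON instead of using a bare except.

except (requests.exceptions.RequestException, ValueError) as exc:

    print('Request failed:', exc)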
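Instead of ignoring a failed request, a crawler will often retry it a few times. The following is a minimal sketch of that idea, not a definitive implementation: `retry` and `flaky_fetch` are hypothetical names, and the flaky function stands in for a real network call so the example runs offline.

```python
import time


def retry(func, attempts=3, delay=0.5, exceptions=(Exception,)):
    """Call func() until it succeeds or the attempts run out.

    Only the listed exception types are retried; the last one is re-raised.
    """
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except exceptions as exc:
            print(f"attempt {attempt} failed: {exc!r}")
            if attempt == attempts:
                raise
            time.sleep(delay)


# Stand-in for a flaky request: fails twice, then returns a proxy record.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated connection failure")
    return {"proxy": "127.0.0.1:8080"}


print(retry(flaky_fetch, attempts=3, delay=0, exceptions=(ConnectionError,)))
```

With requests, `func` could be `lambda: requests.get(url, timeout=(3, 10))` and `exceptions` could be `(requests.exceptions.RequestException,)`. The tuple form of `timeout` sets the connect and read timeouts separately.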