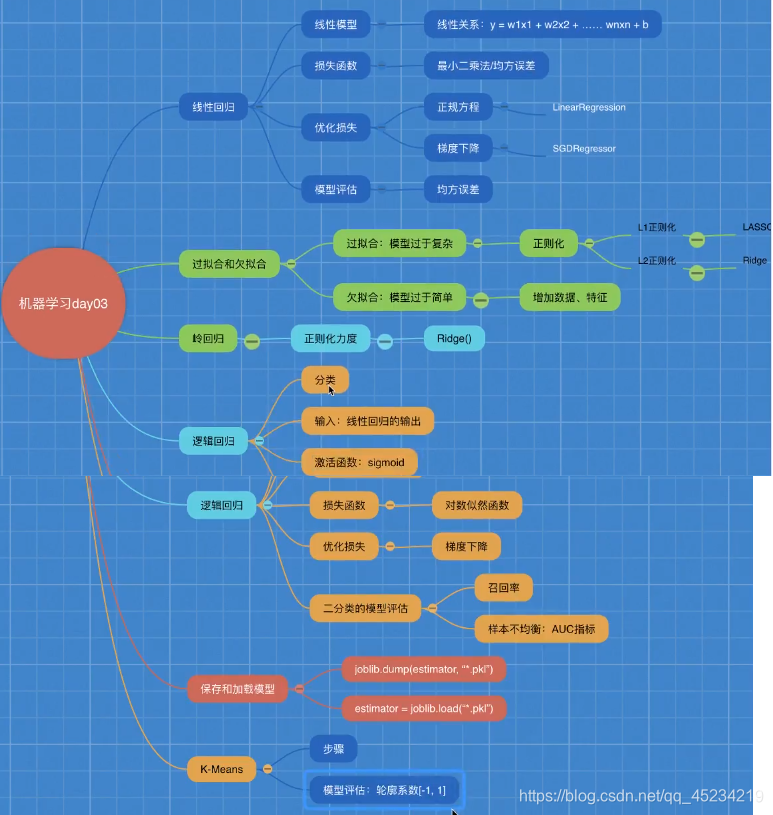

Learning outline:

Linear regression:

Case: Boston housing price prediction (comparing the normal equation and gradient descent as optimization methods)

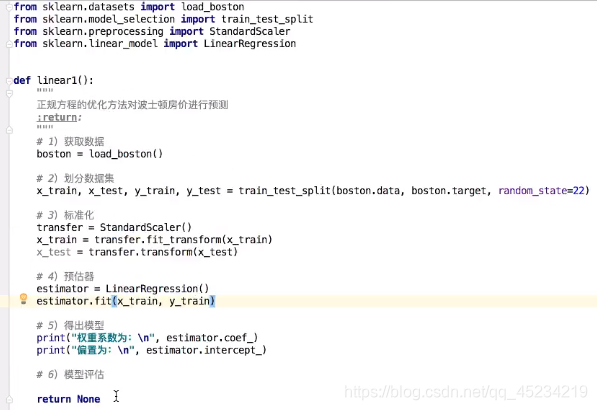

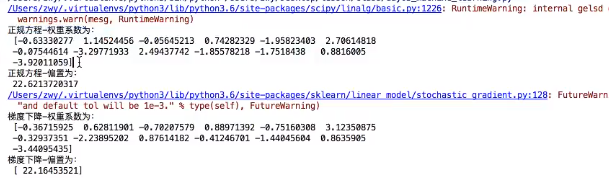

Optimize with the normal equation:

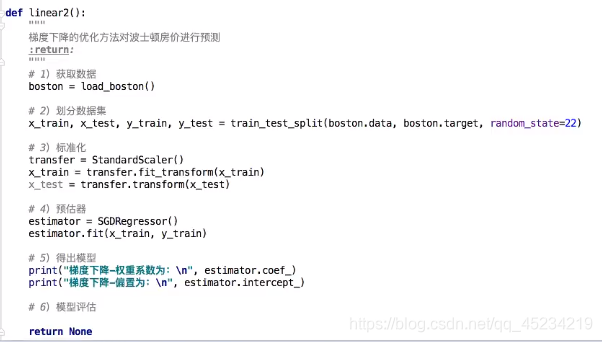
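As a rough illustration of what normal-equation optimization computes, the sketch below solves (XᵀX)w = Xᵀy directly in plain Python. The function names `solve_linear_system` and `fit_normal_equation` and the toy data are assumptions made for this example; they are not the Boston dataset or the code from the case study, where a library routine would normally do this work.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix so row operations apply to both sides at once.
    aug = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Use the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular; no unique solution")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x


def fit_normal_equation(features, targets):
    """Return [bias, w_1, ..., w_d] solving (X^T X) w = X^T y."""
    # Prepend a constant 1 to each sample so the first weight acts as the bias.
    X = [[1.0] + list(row) for row in features]
    d = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(d)] for i in range(d)]
    xty = [sum(r[i] * y for r, y in zip(X, targets)) for i in range(d)]
    return solve_linear_system(xtx, xty)


# Made-up data generated from y = 1 + 2*x1 + 3*x2 (not the Boston dataset).
features = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 5.0]]
targets = [1 + 2 * x1 + 3 * x2 for x1, x2 in features]
weights = fit_normal_equation(features, targets)
print(weights)  # approximately [1.0, 2.0, 3.0]
```

The solution is obtained in one step with no learning rate to tune, but the cost of solving the system grows quickly with the number of features.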

Optimize with gradient descent:

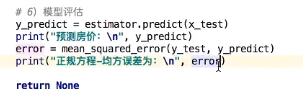
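For comparison, here is a minimal sketch of batch gradient descent on the mean squared error, again in plain Python. The function name `fit_gradient_descent`, the learning rate, the epoch count, and the toy data are all assumptions chosen for illustration, not values from the case study. Gradient descent is sensitive to feature scale, so the toy feature is kept in a small range.

```python
def fit_gradient_descent(features, targets, learning_rate=0.05, epochs=2000):
    """Minimise mean squared error with batch gradient descent.

    Returns (bias, weights).
    """
    n = len(features)
    d = len(features[0])
    bias = 0.0
    weights = [0.0] * d
    for _ in range(epochs):
        # Residuals of the current model on every sample.
        errors = [
            bias + sum(w * x for w, x in zip(weights, row)) - y
            for row, y in zip(features, targets)
        ]
        # Gradients of MSE = (1/n) * sum(error^2).
        grad_bias = 2.0 / n * sum(errors)
        grad_weights = [
            2.0 / n * sum(e * row[j] for e, row in zip(errors, features))
            for j in range(d)
        ]
        # Step against the gradient.
        bias -= learning_rate * grad_bias
        weights = [w - learning_rate * g for w, g in zip(weights, grad_weights)]
    return bias, weights


# Made-up single-feature data from y = 1 + 2*x (not the Boston dataset).
features = [[0.0], [0.5], [1.0], [1.5], [2.0]]
targets = [1 + 2 * row[0] for row in features]
bias, weights = fit_gradient_descent(features, targets)
print(bias, weights)  # close to 1.0 and [2.0]
```

Unlike the normal equation, this approach is iterative and needs a learning rate and a stopping rule, but each step only requires gradients, which keeps it practical when there are many features or samples.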

Use mean squared error (MSE) to evaluate the quality of the model:
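MSE is the average of the squared differences between true and predicted values, so lower is better. A small hand-written sketch follows; the helper name `mse` and the numbers are made up for illustration.

```python
def mse(y_true, y_pred):
    """Mean squared error: average of (true - predicted)^2."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up values: the squared errors are 1, 0 and 4.
y_true = [3.0, 5.0, 7.0]
y_pred = [2.0, 5.0, 9.0]
score = mse(y_true, y_pred)
print(score)  # (1 + 0 + 4) / 3
```

Because the errors are squared, a few large misses raise the score much more than many small ones.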

To sum up:

Overfitting and underfitting

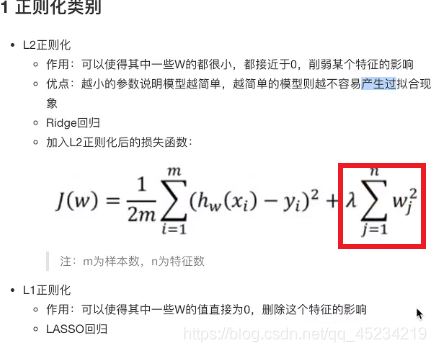

Types of regularization:

**L2 regularization (commonly used):** Adds a penalty term to the loss function. The penalty depends on the weights, so minimizing the loss also shrinks the feature weights toward zero.
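One common way to write the L2-regularized loss is shown below. The symbols are assumptions for this sketch: $h_w(x_i)$ is the model's prediction for sample $i$, $n$ is the number of samples, $w_j$ are the feature weights (the bias is usually left out of the penalty), and $\lambda \ge 0$ controls the strength of the penalty. Scaling conventions differ between textbooks and libraries.

$$
J(w) = \frac{1}{n}\sum_{i=1}^{n}\bigl(h_w(x_i) - y_i\bigr)^2 + \lambda \sum_{j=1}^{d} w_j^2
$$

With $\lambda = 0$ this reduces to the plain mean squared error; larger $\lambda$ makes large weights more expensive.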

L1 regularization:

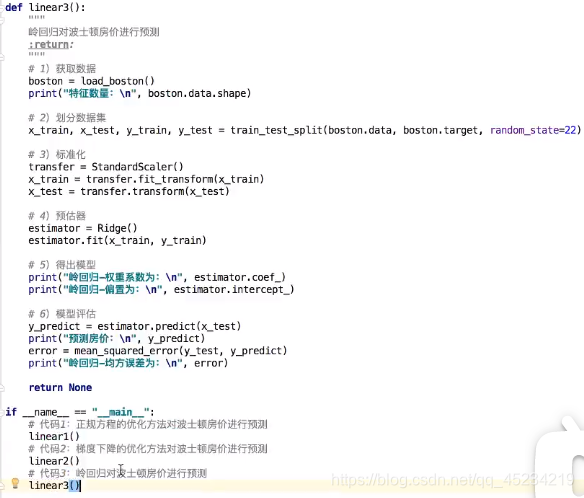

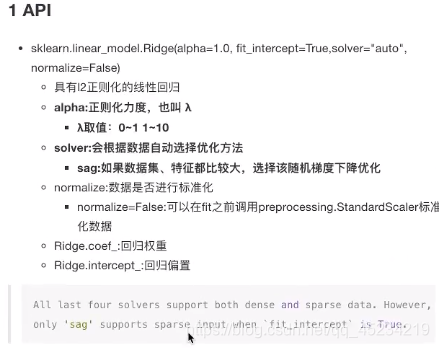

Ridge regression

Ridge regression is linear regression with L2 regularization.
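To show the shrinking effect in the simplest possible setting, the sketch below fits ridge regression with a single feature and no intercept, where the solution has a one-line closed form. The function name `fit_ridge_1d`, the parameter name `alpha`, and the data are assumptions made for this example.

```python
def fit_ridge_1d(xs, ys, alpha):
    """Minimise sum((w*x - y)^2) + alpha * w^2 for one feature, no intercept."""
    # Setting the derivative to zero gives w = sum(x*y) / (sum(x^2) + alpha).
    numerator = sum(x * y for x, y in zip(xs, ys))
    denominator = sum(x * x for x in xs) + alpha
    return numerator / denominator


# Made-up data lying exactly on y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_plain = fit_ridge_1d(xs, ys, alpha=0.0)   # no penalty: ordinary least squares
w_ridge = fit_ridge_1d(xs, ys, alpha=14.0)  # penalty pulls the weight toward zero
print(w_plain, w_ridge)
```

With `alpha=0.0` the fit recovers the true slope, while a positive `alpha` gives a smaller weight. That trade of a little bias for smaller weights is what helps ridge regression resist overfitting.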

Case: Using ridge regression to predict Boston housing prices