The following article is from Python crawler and data mining, by Mr. Cup wine.

I. Introduction

A web crawler (also known as a web spider or web robot) is a program or script that automatically crawls information on the World Wide Web according to certain rules. Other, less commonly used names are ants, automatic indexers, emulators, or worms. (Source: Baidu Encyclopedia)

In plain terms, crawlers collect large amounts of data according to rules so that it can then be processed and used. They are one of the necessary supporting tools for big data, finance, machine learning, and so on.

At present, in first-tier cities, the pay and benefits for crawler engineers are fairly attractive. Moving on later to mid-level or senior crawler engineer, data analyst, or big data development positions are all good transitions.

II. Project goals

The project introduced here does not need to be very complicated. The ultimate goal is to crawl every comment of each post into the database, while also supporting data updates, preventing repeated crawling, and coping with anti-crawling measures.

III. Project preparation

This part introduces the tools, libraries, and web pages used in this article.

Software: PyCharm

Required libraries: Scrapy, selenium, pymongo, user_agent, datetime

Target website: http://bbs.foodmate.net

Plug-in: chromedriver (the version must match the installed Chrome)

IV. Project analysis

1. Determine the structure of the crawling website

In short: determine how the website loads its content, how to reach the posts and grab the data level by level, what format to save the data in, and so on.

Then observe the hierarchical structure of the website, that is, how to get from the sections down to the post pages step by step. This is very important for this crawler task, and it is also the main part of the code.

2. How to choose the right way to crawl data?

The crawling methods I currently know are as follows (not a complete list, but the more commonly used ones):

1) Requests library: this HTTP library can crawl the required data flexibly. It is simple, although the process is slightly tedious, and it can be combined with packet capture tools to work out how the data is fetched. You do need to determine the headers and the corresponding request parameters, otherwise the data cannot be obtained. It suits a lot of app crawling and image and video crawling, can be stopped and resumed at will, is relatively lightweight and flexible, and lends itself well to high concurrency and distributed deployment.

2) Scrapy framework: Scrapy is arguably the most commonly used and best framework for crawlers. It has many advantages: it is asynchronous; it uses the more readable XPath instead of regular expressions; it has a powerful statistics and logging system; it crawls different URLs concurrently; it supports a shell mode, which is convenient for independent debugging; it supports middleware, which makes it easy to write unified filters; it can store data in a database through pipelines; and so on. This is also the framework introduced in this article (combined with the selenium library).
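To make "determine the headers and the corresponding request parameters" from method 1 concrete, here is a minimal sketch. It uses only the standard library's urllib instead of the requests library so that it runs without extra installs, and it builds the request without sending it. The endpoint, the query parameters, and the User-Agent string are assumptions chosen for illustration, not values taken from the target site.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_request(base_url, params, user_agent):
    """Build (but do not send) a GET request with explicit headers and query parameters."""
    url = f"{base_url}?{urlencode(params)}" if params else base_url
    headers = {
        "User-Agent": user_agent,  # many sites reject requests without a browser-like UA
        "Referer": base_url,
    }
    return Request(url, headers=headers, method="GET")


req = build_request(
    "http://bbs.foodmate.net/forum.php",  # hypothetical endpoint
    {"mod": "forumdisplay", "fid": 1},    # hypothetical query parameters
    "Mozilla/5.0 (example)",              # hypothetical User-Agent
)
print(req.full_url)  # http://bbs.foodmate.net/forum.php?mod=forumdisplay&fid=1
print(req.get_header("User-agent"))  # urllib stores header names capitalized this way
```

With the requests library the same idea is usually expressed by passing `headers` and `params` to the request call; the point is only that both have to be worked out before the data comes back.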

V. Project implementation

1. Step 1: Determine the type of website

First, what does this mean and what should you look at? You need to find out how the website loads its content: static loading, dynamic loading (JS loading), or some other method. Different loading methods call for different handling. Looking at the website crawled today, we found that it is a chronological forum. My first guess was that it is statically loaded, so we enabled a plugin that blocks JS loading, as shown in the figure below.

After refreshing, it turned out that it is indeed a static website (if the page still loads normally with JS blocked, it is basically statically loaded).
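The same check can be approximated in code: fetch the page without executing any JS and see whether the content you care about is already in the raw HTML. A minimal sketch, assuming you already have the raw HTML as a string; the two sample pages and the marker text are made up for illustration.

```python
def content_is_in_raw_html(raw_html, marker):
    """Return True if `marker` already appears in HTML that was fetched without running JS."""
    return marker in raw_html


# Hypothetical pages: one ships its content in the HTML, the other fills it in with JS.
static_page = "<html><body><a class='xst'>Post title</a></body></html>"
dynamic_page = "<html><body><div id='app'></div><script src='app.js'></script></body></html>"

print(content_is_in_raw_html(static_page, "Post title"))   # True
print(content_is_in_raw_html(dynamic_page, "Post title"))  # False
```

This is only a heuristic: a page can contain the marker and still load the rest of its data dynamically, so it is worth checking more than one piece of content.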

2. Step 2: Determine the hierarchy

The website we want to crawl today is the food forum, which, as the analysis above showed, is statically loaded. Next comes the hierarchical structure:

That is roughly the process: there are three levels of progressively deeper visits before reaching the post page, as shown in the figure below.

Part of the code display:

First-level interface:

def parse(self, response):

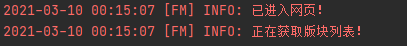

self.logger.info("已进入网页!")

self.logger.info("正在获取版块列表!")

column_path_list = response.css('#ct > div.mn > div:nth-child(2) > div')[:-1]

for column_path in column_path_list:

col_paths = column_path.css('div > table > tbody > tr > td > div > a').xpath('@href').extract()

for path in col_paths:

block_url = response.urljoin(path)

yield scrapy.Request(

url=block_url,

callback=self.get_next_path,

Second-level interface:

def get_next_path(self, response):
    # Section page: request every regular post, then follow the "next page" link.
    self.logger.info("Entered the section!")
    self.logger.info("Fetching the post list!")
    if response.url == 'http://www.foodmate.net/know/':
        return  # this URL is skipped
    for tbody in response.css('#threadlisttableid > tbody'):
        # Regular posts are the tbody elements whose id contains "normalthread".
        if 'normalthread' not in (tbody.xpath('@id').extract_first() or ''):
            continue
        item = LunTanItem()
        item['article_url'] = response.urljoin(
            tbody.css('* > tr > th > a.s.xst').xpath('@href').extract_first())
        item['type'] = response.css(
            '#ct > div > div.bm.bml.pbn > div.bm_h.cl > h1 > a::text').extract_first()
        item['title'] = tbody.css('* > tr > th > a.s.xst::text').extract_first()
        item['spider_type'] = "论坛"
        item['source'] = "食品论坛"
        if item['article_url'] != 'http://bbs.foodmate.net/':
            yield scrapy.Request(
                url=item['article_url'],
                callback=self.get_data,
                meta={'item': item, 'content_info': []}
            )
    # Pagination: follow the "next page" link of the section if there is one.
    callback_url = response.css('#fd_page_bottom > div > a.nxt').xpath('@href').extract_first()
    if callback_url:
        yield scrapy.Request(
            url=response.urljoin(callback_url),
            callback=self.get_next_path,
        )

Third-level interface:

def get_data(self, response):
    # Post page: collect every reply on this page, then follow the "next page" link.
    # The module needs `from datetime import datetime`.
    self.logger.info("Crawling forum data!")
    item = response.meta['item']
    content_list = []
    divs = response.xpath('//*[@id="postlist"]/div')
    user_name = response.css('div > div.pi > div:nth-child(1) > a::text').extract()
    publish_time = response.css('div.authi > em::text').extract()
    floor = divs.css('* strong> a> em::text').extract()
    s_id = divs.xpath('@id').extract()
    for i in range(len(divs) - 1):
        content = ''
        try:
            strong = response.css('#postmessage_' + s_id[i].split('_')[-1]).xpath('string(.)').extract()
            for s in strong:
                content += s.split(';')[-1].lstrip('\r\n')
            datas = dict(content=content,  # reply content
                         reply_id=0,  # floor being replied to, 0 by default
                         user_name=user_name[i],  # user name
                         publish_time=publish_time[i].split('于 ')[-1],  # %Y-%m-%d %H:%M:%S
                         id='#' + floor[i],  # floor number
                         )
            content_list.append(datas)
        except IndexError:
            pass
    # Carry over the replies collected on earlier pages of the same post.
    item['content_info'] = response.meta['content_info']
    item['content_info'] += content_list
    data_url = response.css('#ct > div.pgbtn > a').xpath('@href').extract_first()
    if data_url is not None:
        data_url = response.urljoin(data_url)
        yield scrapy.Request(
            url=data_url,
            callback=self.get_data,
            meta={'item': item, 'content_info': item['content_info']}
        )
    else:
        # Last page of the post: stamp the crawl time and hand the item to the pipeline.
        item['scrawl_time'] = datetime.now().strftime('%Y-%m-%d %H:%M:%S')
        self.logger.info("Storing!")
        print('Saved successfully')
        yield item
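The `publish_time` handling in the third-level interface keeps whatever follows the '于 ' prefix as a plain string. If the timestamp needs to be validated or compared later, it can be parsed as sketched below. This is a minimal sketch: the sample input strings are assumptions about what the page text looks like, and `parse_publish_time` is a hypothetical helper, not part of the original spider.

```python
from datetime import datetime


def parse_publish_time(raw):
    """Strip everything up to '于 ' and parse the timestamp; return None if the format differs."""
    text = raw.split('于 ')[-1].strip()
    try:
        return datetime.strptime(text, '%Y-%m-%d %H:%M:%S')
    except ValueError:
        # Relative times such as "yesterday 10:20" do not match the expected format.
        return None


print(parse_publish_time('发表于 2019-08-01 10:20:00'))  # 2019-08-01 10:20:00
print(parse_publish_time('发表于 昨天 10:20'))            # None
```

Returning None rather than raising lets the spider decide whether to keep the raw string, skip the reply, or log the unexpected format.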

3. Step 3: Determine the crawling method

Because it is a static web page, I first decided to fetch the data directly with the Scrapy framework, and preliminary tests showed that this approach was indeed feasible. However, I was young and reckless at the time and underestimated the website's protection measures. Being impatient, I added no timer to limit the crawl speed, and the website restricted me: it switched from statically loaded pages to a dynamically loaded verification step that runs before a page is served, so direct requests are rejected by the backend.
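For reference, the kind of speed limit that was missing here can be set in settings.py. The setting names below are real Scrapy settings, but the values are assumptions picked for illustration and are not taken from the author's project.

```python
# settings.py (sketch): slow the crawl down so the site is less likely to block it.
DOWNLOAD_DELAY = 1.0                # wait about 1 second between requests to the same site
RANDOMIZE_DOWNLOAD_DELAY = True     # vary the delay instead of using a fixed interval
CONCURRENT_REQUESTS_PER_DOMAIN = 4  # cap parallel requests to a single domain

# Let Scrapy adapt the delay to how quickly the server responds.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 1.0
AUTOTHROTTLE_MAX_DELAY = 10.0
```

A lower delay crawls faster but raises the chance of being restricted again, so it is worth starting conservatively.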

But how could a problem like this stump someone as clever as me? After a brief period of thought (1 day), I changed the approach to the Scrapy framework plus the selenium library, calling chromedriver to simulate a browser visiting the website. Wait for the page to finish loading, then crawl it, and that's it. Later runs proved that this method is indeed feasible and efficient.

The implementation code is as follows:

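# Module-level imports needed by this downloader middleware:
import time
import scrapy
from selenium import webdriver
from selenium.webdriver.chrome.options import Options

def process_request(self, request, spider):
    # The home page is left to Scrapy's own downloader; every other page is rendered in Chrome.
    if request.url == 'http://bbs.foodmate.net/':
        return None
    chrome_options = Options()
    chrome_options.add_argument('--headless')  # run Chrome in headless mode
    chrome_options.add_argument('--disable-gpu')
    chrome_options.add_argument('--no-sandbox')
    # Path to chromedriver (older Selenium style; newer Selenium 4 releases take a Service object instead)
    self.driver = webdriver.Chrome(options=chrome_options,
                                   executable_path='E:/pycharm/workspace/爬虫/scrapy/chromedriver')
    try:
        self.driver.get(request.url)
        time.sleep(1)  # give the page a moment to finish loading before reading it
        html = self.driver.page_source
    finally:
        self.driver.quit()  # always close the browser, even if loading fails
    return scrapy.http.HtmlResponse(url=request.url, body=html.encode('utf-8'), encoding='utf-8',
                                    request=request)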

4. Step 4: Determine the storage format of the crawled data

There is not much to say about this part: define the fields you need to crawl in items.py according to your own requirements, and save data in that format throughout the project:

import scrapy
from scrapy import Field

class LunTanItem(scrapy.Item):
    """
    Forum fields
    """
    title = Field()  # str | post title
    content_info = Field()  # list | [LunTanContentInfoItem1, LunTanContentInfoItem2]
    article_url = Field()  # str: url | post link
    scrawl_time = Field()  # str: time in the format 2019-08-01 10:20:00 | crawl time
    source = Field()  # str | forum name, e.g. 未名BBS, 水木社区, 天涯论坛
    type = Field()  # str | section type, e.g. 'finance', 'sports', 'society'
    spider_type = Field()  # str: forum | must be 'forum'
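To show the shape these fields take once a post has been crawled, here is a minimal sketch using a plain dict in place of a real LunTanItem. The keys of each `content_info` entry follow the `dict(...)` built in `get_data`; every value, the URL, and the `missing_fields` helper are made up for illustration.

```python
REQUIRED_FIELDS = ("title", "content_info", "article_url", "scrawl_time",
                   "source", "type", "spider_type")


def missing_fields(item):
    """Return the LunTanItem field names that are absent from `item`."""
    return [name for name in REQUIRED_FIELDS if name not in item]


# Hypothetical example of one crawled post with a single reply.
sample_item = {
    "title": "Example post title",
    "article_url": "http://bbs.foodmate.net/thread-1-1-1.html",
    "scrawl_time": "2019-08-01 10:20:00",
    "source": "食品论坛",
    "type": "Example section",
    "spider_type": "论坛",
    "content_info": [
        {
            "content": "Example reply text",
            "reply_id": 0,
            "user_name": "example_user",
            "publish_time": "2019-08-01 09:00:00",
            "id": "#1",
        },
    ],
}

print(missing_fields(sample_item))                 # []
print(missing_fields({"title": "only a title"}))   # every field except "title"
```

A check like this could run in a pipeline before saving, so that incomplete items are noticed early.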

5. Step 5: Choose the database for storage

The database chosen for this project is MongoDB. As a non-relational database its advantages are obvious: the format requirements are loose and it can store multi-dimensional data flexibly. It is generally the preferred database for crawlers (don't tell me to use Redis; I would if I knew how, I mainly just don't).

Code:

import pymongo

class FMPipeline():
    def __init__(self):
        super(FMPipeline, self).__init__()
        client = pymongo.MongoClient('localhost')
        db = client.scrapy_FM
        self.collection = db.FM

    def process_item(self, item, spider):
        # Match on the post URL: update the record if it exists, insert it otherwise.
        query = {
            'article_url': item['article_url']
        }
        self.collection.update_one(query, {"$set": dict(item)}, upsert=True)
        return item  # pass the item on to any later pipeline

At this point, some sharp readers will ask: what if the same data is crawled twice? (In other words, how is duplicate checking handled?)

I had not thought about this question before, and only found out after asking more experienced developers. It is handled when we save the data, with just these lines:

query = {
    'article_url': item['article_url']
}
self.collection.update_one(query, {"$set": dict(item)}, upsert=True)

Whether a post has already been crawled is determined by its link. If it is a duplicate, the existing record is simply overwritten, which also keeps the data up to date.
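The effect of `update_one(..., upsert=True)` with `$set` can be imitated with an ordinary dict keyed by the post link. This is only a sketch of the semantics, not how MongoDB works internally; the URL, the titles, and the `upsert` helper are made up for illustration.

```python
def upsert(store, item, key="article_url"):
    """Insert `item`, or merge it into the existing record that has the same key."""
    record = store.setdefault(item[key], {})  # create the record on first sight
    record.update(item)                       # overwrite the fields that were crawled again
    return store


store = {}
url = "http://bbs.foodmate.net/thread-1-1-1.html"  # hypothetical post link
upsert(store, {"article_url": url, "title": "old title", "source": "食品论坛"})
upsert(store, {"article_url": url, "title": "new title"})

print(len(store))           # 1: the second crawl did not create a duplicate
print(store[url]["title"])  # new title
print(store[url]["source"]) # 食品论坛: fields not crawled again are kept
```

In MongoDB itself, a unique index on `article_url` (for example via pymongo's `create_index` with `unique=True`) is one way to additionally guarantee that duplicates cannot be inserted by other code paths.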

6. Other settings

Settings such as multi-threading, headers, and pipeline order are all configured in the settings.py file. For details, please refer to the author's project; I won't go into them here.
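As a rough idea of what those settings look like, here is a minimal settings.py sketch. The setting names are real Scrapy settings, but the module paths (`myproject...`), the middleware class name, the priorities, and the header values are assumptions, since the author's actual file is not shown. It also assumes that the "16 threads" mentioned in the results correspond to `CONCURRENT_REQUESTS`, which is how Scrapy expresses concurrency.

```python
# settings.py (sketch; module paths and class names below are hypothetical)

# Number of requests Scrapy processes concurrently.
CONCURRENT_REQUESTS = 16

# Default headers sent with every request.
DEFAULT_REQUEST_HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",
}

# Downloader middleware that renders pages with selenium.
DOWNLOADER_MIDDLEWARES = {
    "myproject.middlewares.SeleniumMiddleware": 543,
}

# Item pipelines run in ascending order of their number (lower runs first).
ITEM_PIPELINES = {
    "myproject.pipelines.FMPipeline": 300,
}
```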

Seven, effect display

1. Click Run, and the result will be displayed on the console, as shown in the figure below.

2. In the middle, there will be crawling tasks that pile up many posts in the queue, and then multi-threaded processing. I set 16 threads, and the speed is still very impressive.

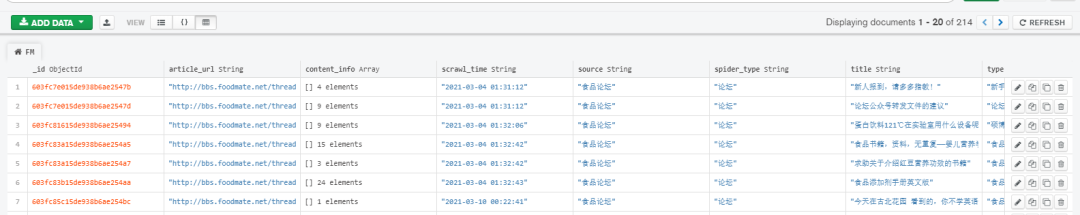

3. Database data display:

The content_info stores all the comments of each post and the public information of related users.