BPN algorithm

Training with the BPN (backpropagation network) algorithm can be divided into the following two processes:

Forward propagation: The input is fed to the network, and the signal propagates from the input layer through the hidden layer to the output layer. At the output layer, the error and the loss function are calculated.

Backpropagation: The gradient of the loss function with respect to the output-layer neurons is calculated first, followed by the gradient with respect to the hidden-layer neurons. The weights are then updated using these gradients.

Working through this example finally made the meaning of epoch, batch_size, and the input and output shapes clear to me. Tuning parameters and training models should be much less confusing from now on.

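Result

Note: in the run below, the number of correct training predictions was divided by 600, but mnist.train holds 55,000 images rather than 60,000. The train percentages are therefore understated by a factor of 60/55 (for example, 87.65 corresponds to about 95.6%).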

2020-01-15 21:30:10.556053: I tensorflow/stream_executor/platform/default/dso_loader.cc:44] Successfully opened dynamic library cublas64_100.dll

Epoch: 0 accuracy train%:9.748333333333333 accuracy test%:10.55

Epoch: 1000 accuracy train%:81.77333333333333 accuracy test%:89.64

Epoch: 2000 accuracy train%:83.83 accuracy test%:91.26

Epoch: 3000 accuracy train%:84.73333333333333 accuracy test%:91.96

Epoch: 4000 accuracy train%:85.51666666666667 accuracy test%:92.95

Epoch: 5000 accuracy train%:86.02 accuracy test%:93.38

Epoch: 6000 accuracy train%:86.46 accuracy test%:93.59

Epoch: 7000 accuracy train%:86.68666666666667 accuracy test%:93.49

Epoch: 8000 accuracy train%:86.965 accuracy test%:93.88

Epoch: 9000 accuracy train%:87.11833333333334 accuracy test%:93.71

Epoch: 10000 accuracy train%:87.335 accuracy test%:94.15

Epoch: 11000 accuracy train%:87.39166666666667 accuracy test%:94.06

Epoch: 12000 accuracy train%:87.65 accuracy test%:94.34

Process finished with exit code 0

BPN's network structure

Code: Type out the code yourself and work through how each step executes. That way you can see clearly how each stage of training (forward propagation, the hidden-layer calculation, backpropagation, the weight update, and so on) actually works.

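Input layer: x_in is a 60x784 data matrix (one batch of 60 images, each flattened to 28 x 28 = 784 pixels)

W_h is the 784x30 hidden layer weight matrix

b_h is the 1x30 hidden layer bias matrix

W_o is the 30x10 output layer weight matrix

b_o is the 1x10 output layer bias matrix

The diagram is as follows: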

x_in and W_h are combined by matrix multiplication. That is, each element of a row of x_in is multiplied by the corresponding element of a column of W_h, and the products are summed to give the value at that row and column of the result matrix.

The above shows the decomposition of forward propagation.
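
The row-times-column rule described above can be sketched in plain Python. This is only an illustration: the helper names `matmul` and `add_bias` and the toy sizes (2 samples, 3 inputs, 2 hidden units instead of 60, 784 and 30) are assumptions made here, not part of the original code.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both stored as lists of rows."""
    assert len(a[0]) == len(b), "inner dimensions must match"
    return [[sum(a_row[t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for a_row in a]


def add_bias(z, bias_row):
    """Add the same 1 x m bias row to every row of z."""
    return [[v + bv for v, bv in zip(row, bias_row)] for row in z]


# Toy stand-ins for x_in (60x784), W_h (784x30) and b_h (1x30)
x_in = [[1.0, 2.0, 3.0],
        [4.0, 5.0, 6.0]]
w_h = [[1.0, 0.0],
       [0.0, 1.0],
       [1.0, 1.0]]
b_h = [0.5, -0.5]

h_1 = add_bias(matmul(x_in, w_h), b_h)
print(h_1)  # [[4.5, 4.5], [10.5, 10.5]]
```

The result has one row per sample and one column per hidden unit, which is why a 60x784 input multiplied by a 784x30 weight matrix gives a 60x30 hidden layer.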

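The epoch count is the number of times the same training step is repeated, and every round updates the network's weights. Strictly speaking, one epoch is a full pass over the training set. In the code in this post, each iteration of the loop labelled epoch processes a single mini-batch of batch_size = 60 samples.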
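
To relate the loop counter to full passes over the data, here is a small back-of-the-envelope calculation. It assumes the training split holds 55,000 images, which is what the MNIST tutorial loader gives with its default validation split; the variable names are chosen here for illustration.

```python
import math

train_size = 55000   # assumption: size of mnist.train with the default split
batch_size = 60      # mini-batch size used in this post
max_steps = 12001    # the loop bound called max_epochs in this post

# Mini-batch steps needed to see every training image once
steps_per_epoch = math.ceil(train_size / batch_size)

# How many full passes over the training set the whole run amounts to
passes_over_data = max_steps * batch_size / train_size

print(steps_per_epoch)             # 917
print(round(passes_over_data, 1))  # 13.1
```

So the 12,001 iterations reported in the log correspond to roughly 13 passes over the training data, not 12,001.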

Network implementation:

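    def multilayer_perception(x, weights, biases):
        # Hidden layer with sigmoid activation (not ReLU)
        h_layer_1 = tf.add(tf.matmul(x, weights['h1']), biases['h1'])
        h_layer_out = tf.sigmoid(h_layer_1)
        # Output layer, also with sigmoid activation
        out_layer = tf.matmul(h_layer_out, weights['out']) + biases['out']
        # Returns: output activation, output pre-activation,
        # hidden activation, hidden pre-activation
        return tf.sigmoid(out_layer), out_layer, h_layer_out, h_layer_1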
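
To make the four return values concrete, the same forward pass can be traced for a single sample in plain Python. This is a sketch and not the TensorFlow code: `dense` and `forward` are hypothetical helpers, and the weights are picked so the numbers can be checked by hand.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def dense(x, w, b):
    """Affine layer for one sample: x is a list, w a list of rows, b a list."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j]
            for j in range(len(b))]


def forward(x, weights, biases):
    h_1 = dense(x, weights['h1'], biases['h1'])      # hidden pre-activation
    o_1 = [sigmoid(v) for v in h_1]                  # hidden activation
    h_2 = dense(o_1, weights['out'], biases['out'])  # output pre-activation
    y_hat = [sigmoid(v) for v in h_2]                # output activation
    return y_hat, h_2, o_1, h_1


# 2 inputs -> 2 hidden units -> 1 output, with hand-checkable weights
weights = {'h1': [[0.0, 0.0], [0.0, 0.0]], 'out': [[1.0], [-1.0]]}
biases = {'h1': [0.0, 0.0], 'out': [0.0]}

y_hat, h_2, o_1, h_1 = forward([1.0, 2.0], weights, biases)
print(o_1, h_2, y_hat)  # [0.5, 0.5] [0.0] [0.5]
```

The pre-activation values (h_1, h_2) are kept alongside the activations because the backward pass needs them to evaluate the sigmoid derivative.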

Backpropagation

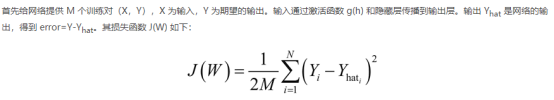

Loss function J(w):

The partial derivative of J with respect to each weight is obtained with the chain rule: differentiate J with respect to y_hat (the output activation), then y_hat with respect to out_layer, out_layer with respect to h_layer_out, and h_layer_out with respect to h_layer_1, in turn.
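
Written out, the chain of derivatives looks roughly as follows. This is a sketch under one assumption: that J is the squared-error loss, which is what the error term y_hat - y in the code suggests. The symbols z_1, a_1 and z_2 are notation introduced here; they correspond to h_layer_1, h_layer_out and out_layer in the code.

```latex
\begin{aligned}
z_1 &= x W_h + b_h, & a_1 &= \sigma(z_1), \\
z_2 &= a_1 W_o + b_o, & \hat{y} &= \sigma(z_2), \\
J &= \tfrac{1}{2} \sum (\hat{y} - y)^2, \\
\delta_2 &= \frac{\partial J}{\partial z_2} = (\hat{y} - y) \odot \sigma'(z_2), &
\frac{\partial J}{\partial W_o} &= a_1^{\top} \delta_2, \\
\delta_1 &= \frac{\partial J}{\partial z_1} = (\delta_2 W_o^{\top}) \odot \sigma'(z_1), &
\frac{\partial J}{\partial W_h} &= x^{\top} \delta_1 .
\end{aligned}
```

Here \odot is the element-wise product. Each delta reuses the one from the layer after it, which is why the error is said to propagate backward.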

If this is still unclear, refer to a calculus textbook. The activation function used in this TensorFlow tutorial is the sigmoid, and its derivative is:

    # Derivative: sigmoid(x) * (1.0 - sigmoid(x))
    def sigmaprime(x):
        return tf.multiply(tf.sigmoid(x), tf.subtract(tf.constant(1.0), tf.sigmoid(x)))
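
The formula can be sanity-checked numerically without TensorFlow. The sketch below compares it with a central finite difference; `numeric_derivative` and the step size are choices made here for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmaprime(x):
    """Analytic derivative: sigmoid(x) * (1 - sigmoid(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def numeric_derivative(f, x, eps=1e-6):
    """Central finite-difference approximation of f'(x)."""
    return (f(x + eps) - f(x - eps)) / (2.0 * eps)


# The analytic and numeric derivatives should agree closely at every point
for x in (-2.0, 0.0, 0.5, 3.0):
    assert abs(sigmaprime(x) - numeric_derivative(sigmoid, x)) < 1e-8

print(sigmaprime(0.0))  # 0.25
```

The derivative peaks at 0.25 when x = 0 and shrinks toward zero for large positive or negative x.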

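Backpropagation implementation:

    # Backpropagation
    # Output layer error term: error times the sigmoid derivative at the output pre-activation
    delta_2 = tf.multiply(err, sigmaprime(h_2))
    # tf.transpose takes the matrix transpose
    delta_w_2 = tf.matmul(tf.transpose(o_1), delta_2)

    # Propagate the error back through the output weights to the hidden layer
    wtd_error = tf.matmul(delta_2, tf.transpose(weight['out']))
    delta_1 = tf.multiply(wtd_error, sigmaprime(h_1))
    delta_w_1 = tf.matmul(tf.transpose(x_in), delta_1)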
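
One way to convince yourself that these formulas are gradients is a numerical gradient check on a tiny network. The sketch below is plain Python and not the TensorFlow code: it assumes a squared-error loss, uses a single sample without biases, and all helper names and weight values are made up for the illustration.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def sigmaprime(v):
    return sigmoid(v) * (1.0 - sigmoid(v))


def forward(x, w1, w2):
    """One sample, no biases: 2 inputs -> 2 hidden units -> 1 output."""
    h_1 = [x[0] * w1[0][j] + x[1] * w1[1][j] for j in range(2)]
    o_1 = [sigmoid(v) for v in h_1]
    h_2 = o_1[0] * w2[0] + o_1[1] * w2[1]
    return sigmoid(h_2), h_2, o_1, h_1


def loss(x, y, w1, w2):
    """Squared-error loss (an assumption of this sketch)."""
    y_hat = forward(x, w1, w2)[0]
    return 0.5 * (y_hat - y) ** 2


def backprop(x, y, w1, w2):
    """Same delta rules as in the post, written for scalars and lists."""
    y_hat, h_2, o_1, h_1 = forward(x, w1, w2)
    delta_2 = (y_hat - y) * sigmaprime(h_2)
    delta_w_2 = [o_1[j] * delta_2 for j in range(2)]
    delta_1 = [delta_2 * w2[j] * sigmaprime(h_1[j]) for j in range(2)]
    delta_w_1 = [[x[i] * delta_1[j] for j in range(2)] for i in range(2)]
    return delta_w_1, delta_w_2


def numeric_grad(f, eps=1e-6):
    """Central difference of f around a zero perturbation."""
    return (f(eps) - f(-eps)) / (2.0 * eps)


x, y = [0.5, -1.0], 1.0
w1 = [[0.1, -0.2], [0.4, 0.3]]
w2 = [0.7, -0.5]

delta_w_1, delta_w_2 = backprop(x, y, w1, w2)

# Perturb one output weight and one hidden weight, then compare
numeric_w2_0 = numeric_grad(lambda d: loss(x, y, w1, [w2[0] + d, w2[1]]))
numeric_w1_01 = numeric_grad(
    lambda d: loss(x, y, [[w1[0][0], w1[0][1] + d], w1[1]], w2))

print(abs(delta_w_2[0] - numeric_w2_0) < 1e-8)
print(abs(delta_w_1[0][1] - numeric_w1_01) < 1e-8)
```

If either comparison fails after you modify the delta rules, the analytic gradient no longer matches the loss, which is a quick way to catch sign or transpose mistakes.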

Then the weights are updated according to the direction of gradient descent combined with the learning rate.

    # update weights
    # tf.assign(ref, value, validate_shape=None, use_locking=None, name=None)
    # assigns value to ref; ref must be a tensor created with tf.Variable
    step = [
        tf.assign(weight['h1'], tf.subtract(weight['h1'], tf.multiply(eta, delta_w_1))),
        tf.assign(bias['h1'], tf.subtract(bias['h1'], tf.multiply(eta, tf.reduce_mean(delta_1, axis=[0])))),
        tf.assign(weight['out'], tf.subtract(weight['out'], tf.multiply(eta, delta_w_2))),
        tf.assign(bias['out'], tf.subtract(bias['out'], tf.multiply(eta, tf.reduce_mean(delta_2, axis=[0]))))
    ]
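
The update rule itself is just "parameter minus learning rate times gradient". A plain-Python sketch with made-up numbers follows; `sgd_update` and `column_mean` are hypothetical helpers, and the learning rate 0.5 is chosen only to keep the arithmetic easy to follow.

```python
def sgd_update(param, grad, eta):
    """Return param - eta * grad for matrices stored as lists of rows."""
    return [[p - eta * g for p, g in zip(p_row, g_row)]
            for p_row, g_row in zip(param, grad)]


def column_mean(delta):
    """Average each column over the batch, like tf.reduce_mean(delta, axis=[0])."""
    n = len(delta)
    return [sum(col) / n for col in zip(*delta)]


eta = 0.5  # illustrative learning rate, not the 0.15 used in the post

weight = [[1.0, 2.0], [3.0, 4.0]]
delta_w = [[0.5, -1.0], [0.0, 2.0]]   # stand-in for delta_w_1 or delta_w_2
bias = [[0.0, 1.0]]                   # 1 x 2 bias row
delta = [[1.0, 2.0], [3.0, 4.0]]      # stand-in for delta_1: one row per sample

weight = sgd_update(weight, delta_w, eta)
bias = sgd_update(bias, [column_mean(delta)], eta)

print(weight)  # [[0.75, 2.5], [3.0, 3.0]]
print(bias)    # [[-1.0, -0.5]]
```

Note the asymmetry carried over from the post's code: the weight gradients are sums over the batch (they come from a matrix product), while the bias gradients are batch averages.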

The complete code is attached below. I ran it successfully myself.

    #!/usr/bin/python
    # -*- coding: utf-8 -*-
    # author: beauthy
    # date: 2020.1.15
    # version: 1.0
    # Requires TensorFlow 1.x (tf.placeholder, tf.Session and the MNIST tutorial loader)
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    # Load the dataset with one-hot encoded labels
    mnist = input_data.read_data_sets("MNIST_data", one_hot=True)

    # 28 x 28 = 784 input pixels; the labels fall into 10 classes, 0-9
    # Hyperparameters: learning rate, maximum number of iterations,
    # mini-batch size, and number of neurons in the hidden layer
    n_input = 784
    n_classes = 10
    max_epochs = 12001
    learning_rate = 0.15
    batch_size = 60
    seed = 0
    # number of neurons in the hidden layer
    n_hidden = 30


    # The derivative of the sigmoid function is used for the weight update
    # Derivative: sigmoid(x) * (1.0 - sigmoid(x))
    def sigmaprime(x):
        return tf.multiply(tf.sigmoid(x), tf.subtract(tf.constant(1.0), tf.sigmoid(x)))


    def multilayer_perception(x, weights, biases):
        # Hidden layer with sigmoid activation (not ReLU)
        h_layer_1 = tf.add(tf.matmul(x, weights['h1']), biases['h1'])
        h_layer_out = tf.sigmoid(h_layer_1)
        # Output layer, also with sigmoid activation
        out_layer = tf.matmul(h_layer_out, weights['out']) + biases['out']
        return tf.sigmoid(out_layer), out_layer, h_layer_out, h_layer_1


    def train_mnist_model():
        x_in = tf.placeholder(tf.float32, [None, n_input])
        y = tf.placeholder(tf.float32, [None, n_classes])

        weight = {
            'h1': tf.Variable(tf.random_normal([n_input, n_hidden], seed=seed)),
            'out': tf.Variable(tf.random_normal([n_hidden, n_classes], seed=seed))
        }
        bias = {
            'h1': tf.Variable(tf.random_normal([1, n_hidden], seed=seed)),
            'out': tf.Variable(tf.random_normal([1, n_classes], seed=seed))
        }

        # Forward propagation
        y_hat, h_2, o_1, h_1 = multilayer_perception(x_in, weight, bias)

        # Error
        err = y_hat - y

        # Backpropagation
        delta_2 = tf.multiply(err, sigmaprime(h_2))
        # tf.transpose takes the matrix transpose
        delta_w_2 = tf.matmul(tf.transpose(o_1), delta_2)

        wtd_error = tf.matmul(delta_2, tf.transpose(weight['out']))
        delta_1 = tf.multiply(wtd_error, sigmaprime(h_1))
        delta_w_1 = tf.matmul(tf.transpose(x_in), delta_1)

        eta = tf.constant(learning_rate)

        # update weights
        # tf.assign(ref, value, validate_shape=None, use_locking=None, name=None)
        # assigns value to ref; ref must be a tensor created with tf.Variable
        step = [
            tf.assign(weight['h1'], tf.subtract(weight['h1'], tf.multiply(eta, delta_w_1))),
            tf.assign(bias['h1'], tf.subtract(bias['h1'], tf.multiply(eta, tf.reduce_mean(delta_1, axis=[0])))),
            tf.assign(weight['out'], tf.subtract(weight['out'], tf.multiply(eta, delta_w_2))),
            tf.assign(bias['out'], tf.subtract(bias['out'], tf.multiply(eta, tf.reduce_mean(delta_2, axis=[0]))))
        ]

        # accuracy
        # tf.argmax(input, 1) returns, for each row, the index of the largest value
        # tf.equal(x, y) compares element by element rather than as a whole:
        # True where the elements are equal, False where they differ
        acct_mat = tf.equal(tf.argmax(y_hat, 1), tf.argmax(y, 1))
        # Number of correct predictions among the samples that are fed in
        accuracy = tf.reduce_sum(tf.cast(acct_mat, tf.float32))

        init = tf.global_variables_initializer()

        # Run the computation graph
        with tf.Session() as sess:
            sess.run(init)
            # Each iteration trains on one mini-batch (a step rather than a full epoch)
            for epoch in range(max_epochs):
                batch_xs, batch_ys = mnist.train.next_batch(batch_size)
                # Forward pass on its own, kept for illustration; step re-evaluates it
                sess.run([y_hat, h_2, o_1, h_1], feed_dict={x_in: batch_xs})
                sess.run(step, feed_dict={x_in: batch_xs, y: batch_ys})
                if epoch % 1000 == 0:
                    # The test set holds 10,000 images
                    acc_test = sess.run(accuracy, feed_dict={
                        x_in: mnist.test.images,
                        y: mnist.test.labels
                    })
                    acc_train = sess.run(accuracy, feed_dict={
                        x_in: mnist.train.images,
                        y: mnist.train.labels
                    })
                    # Convert counts to percentages using the actual set sizes
                    # (dividing the train count by 600 would assume 60,000 images)
                    print('Epoch: {0} accuracy train%:{1} accuracy test%:{2}'
                          .format(epoch,
                                  100.0 * acc_train / mnist.train.num_examples,
                                  100.0 * acc_test / mnist.test.num_examples))


    if __name__ == "__main__":
        train_mnist_model()
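
The accuracy computation in the listing (argmax over each row, then an element-wise comparison) can also be written out in plain Python. The predictions and labels below are invented for the illustration, and `argmax` and `accuracy_percent` are hypothetical helpers rather than TensorFlow calls.

```python
def argmax(row):
    """Index of the largest value in a list (first one on ties)."""
    return max(range(len(row)), key=row.__getitem__)


def accuracy_percent(y_hat, y):
    """Share of rows whose predicted class matches the one-hot label, in percent."""
    correct = sum(argmax(p) == argmax(t) for p, t in zip(y_hat, y))
    return 100.0 * correct / len(y)


# Four made-up predictions over three classes, with one-hot labels
y_hat = [[0.1, 0.8, 0.1],
         [0.7, 0.2, 0.1],
         [0.3, 0.3, 0.4],
         [0.2, 0.5, 0.3]]
y = [[0, 1, 0],
     [1, 0, 0],
     [0, 1, 0],
     [0, 1, 0]]

print(accuracy_percent(y_hat, y))  # 75.0
```

Dividing by `len(y)` instead of a hard-coded constant keeps the percentage correct whatever the size of the set being evaluated.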