1. Introduction

The BP (back-propagation) network, proposed in 1986 by a group of scientists led by Rumelhart and McClelland, is a multilayer feedforward network trained with the error back-propagation algorithm. It is currently one of the most widely used neural network models. A BP network can learn and store a large number of input-output mappings without the mathematical equations that describe those mappings having to be known in advance.

For a long time in the development of artificial neural networks, no effective algorithm was known for adjusting the connection weights of the hidden layers. This weight-adjustment problem for multilayer feedforward networks that approximate nonlinear continuous functions was solved only when the error back-propagation (BP) algorithm was proposed.

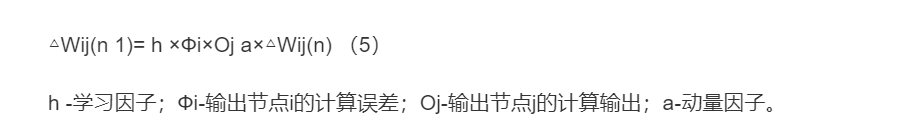

The learning process of a BP (back-propagation) neural network, i.e. the error back-propagation algorithm, consists of two phases: forward propagation of information and backward propagation of error. Each neuron in the input layer receives input information from the outside and passes it to every neuron in the middle layer. The middle layer is the internal information-processing layer and is responsible for transforming the information; depending on how much transformation capability is required, it can be designed with a single hidden layer or with multiple hidden layers. The last hidden layer passes its information to every neuron in the output layer, which processes it further. This completes one forward-propagation pass, and the output layer delivers the processing result to the outside world.

When the actual output does not match the expected output, the error back-propagation phase begins. Starting at the output layer, the error is propagated backward layer by layer through the hidden layers toward the input layer, and the weights of each layer are corrected by gradient descent on the error. Repeating forward propagation of information and backward propagation of error continually adjusts the weights of every layer; this is the learning (training) process of the network. It continues until the network's output error falls to an acceptable level or a preset number of learning iterations is reached.
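As a minimal sketch of these two phases, the MATLAB script below trains a network with one hidden layer on samples of sin(x) using batch gradient descent. The hidden-layer size, the tanh hidden activation, the linear output neuron, the learning rate `eta`, the target function and the deterministic initial weights are all illustrative assumptions, not taken from this post.

```matlab
% Minimal BP sketch (illustrative assumptions: 1 input, 6 tanh hidden
% neurons, 1 linear output, batch gradient descent on samples of sin(x)).
x = linspace(-pi, pi, 21);          % 1 x N training inputs
t = sin(x);                         % 1 x N target outputs
N = numel(x);
H = 6;                              % number of hidden neurons (assumed)
eta = 0.05;                         % learning rate (assumed)

% Deterministic initial weights so every run is reproducible (arbitrary choice)
W1 = 0.5*sin((1:H)');               % H x 1 input-to-hidden weights
b1 = 0.1*cos((1:H)');               % H x 1 hidden biases
W2 = 0.5*cos(1:H);                  % 1 x H hidden-to-output weights
b2 = 0;                             % output bias

epochs = 2000;
mseHist = zeros(1, epochs);
for ep = 1:epochs
    % Forward propagation of information
    A1 = W1*x + b1*ones(1, N);      % H x N hidden net inputs
    Z1 = tanh(A1);                  % H x N hidden outputs
    y  = W2*Z1 + b2;                % 1 x N network outputs
    e  = t - y;                     % output error
    mseHist(ep) = mean(e.^2);

    % Backward propagation of error, for E = 0.5*sum(e.^2)/N
    d2  = -e/N;                     % dE/dy at the output layer
    gW2 = d2*Z1';                   % 1 x H gradient for W2
    gb2 = sum(d2);
    d1  = (W2'*d2).*(1 - Z1.^2);    % H x N hidden deltas (tanh' = 1 - tanh^2)
    gW1 = d1*x';                    % H x 1 gradient for W1
    gb1 = sum(d1, 2);               % H x 1 gradient for b1

    % Weight correction by gradient descent
    W2 = W2 - eta*gW2;  b2 = b2 - eta*gb2;
    W1 = W1 - eta*gW1;  b1 = b1 - eta*gb1;
end
fprintf('MSE: first epoch %.4f, last epoch %.4f\n', mseHist(1), mseHist(end));
```

Running it prints the mean squared error of the first and last epochs; the decrease between them is the effect of the repeated forward/backward passes.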

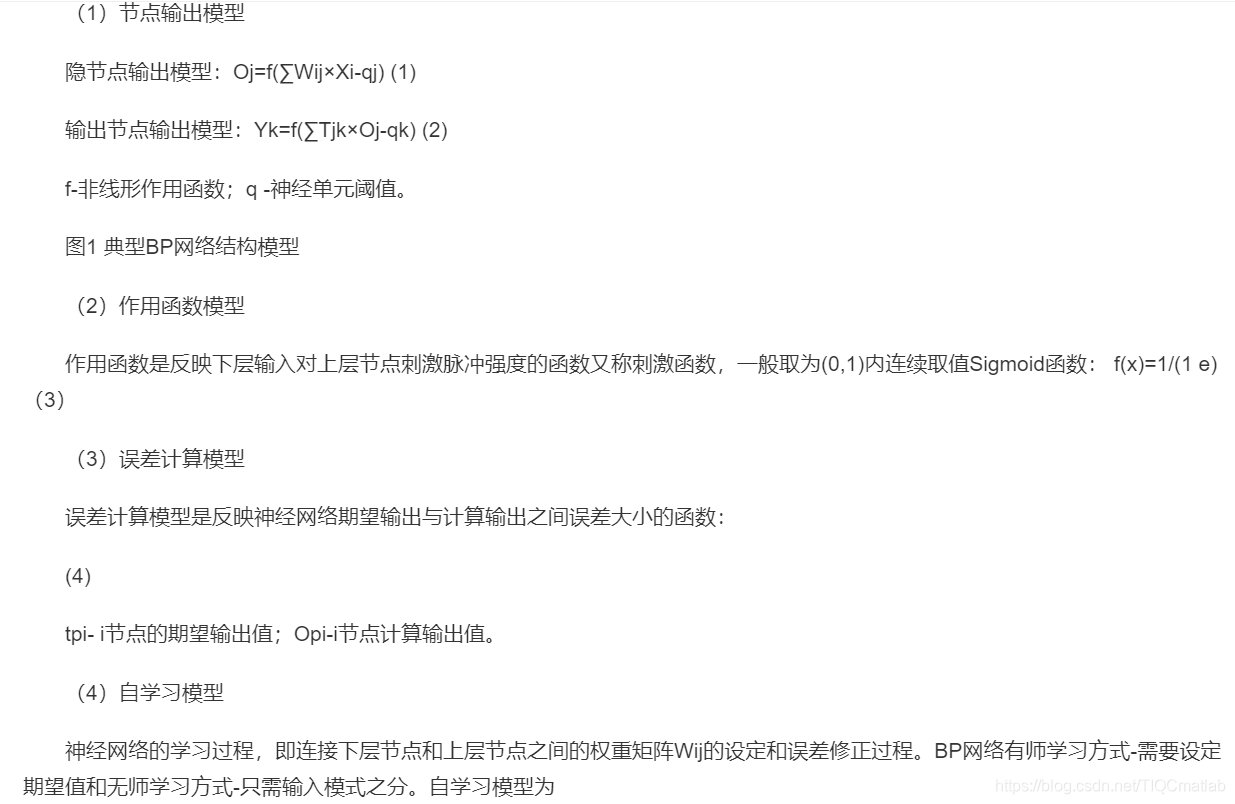

The BP network model comprises an input-output model, an action (activation) function model, an error calculation model, and a self-learning model.
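For reference, these four components are commonly written as below. This is a standard textbook summary, not a transcription of image 3. Here $x_i$ are the inputs, $O_j$ the hidden-neuron outputs, $\theta$ the thresholds, $t$ the targets, $\eta$ the learning rate, $\delta_j$ the local error gradient of neuron $j$, $O_i$ the output of the neuron feeding weight $w_{ij}$, and $\alpha$ the momentum factor (`xite` and `alfa` in the source code). Note that the source code uses tanh in the hidden layer and $e^{x}/(e^{x}+e^{-x})$ at the output rather than the logistic function.

$$
\begin{aligned}
&\text{Input-output model:} && O_j = f\Big(\sum_i w_{ij}\,x_i - \theta_j\Big), \qquad y_k = f\Big(\sum_j T_{jk}\,O_j - \theta_k\Big)\\
&\text{Action function:} && f(x) = \frac{1}{1+e^{-x}}\\
&\text{Error calculation:} && E_p = \frac{1}{2}\sum_k \big(t_{pk} - y_{pk}\big)^2\\
&\text{Self-learning:} && \Delta w_{ij}(n+1) = \eta\,\delta_j\,O_i + \alpha\,\Delta w_{ij}(n)
\end{aligned}
$$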

2. BP neural network model and its basic principles

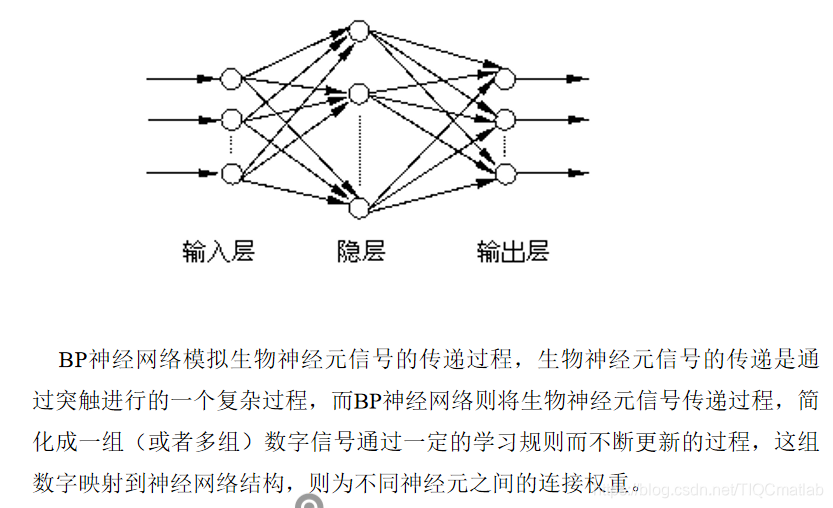

3. BP-PID algorithm flow
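The flow chart for this step is not reproduced in the text. Following the source code below, one sampling step $k$ of the BP-PID controller can be summarized as follows, with $w^{(2)}$ = `wi`, $w^{(3)}$ = `wo`, $u(k)$ clipped to $[-10, 10]$, and the sign of $\partial y/\partial u$ approximated by `sign((y(k)-y(k-1))/(u(k)-u(k-1)))`. The output-layer gradient is written in its standard chain-rule form; this is a reading of the listing, not the author's own derivation.

$$
\begin{aligned}
y(k) &= \frac{a(k)\,y(k-1)}{1+y^2(k-1)} + u(k-1), \qquad a(k) = 1.2\left(1-0.8\,e^{-0.1k}\right)\\
e(k) &= r(k) - y(k)\\
O^{(2)}_j &= \tanh\Big(\sum_i w^{(2)}_{ji}\,x_i\Big), \qquad x = [\,r(k),\ y(k),\ e(k),\ 1\,]\\
K_l &= g\Big(\sum_j w^{(3)}_{lj}\,O^{(2)}_j\Big), \qquad g(x) = \frac{e^{x}}{e^{x}+e^{-x}}, \qquad [K_1, K_2, K_3] = [K_p, K_i, K_d]\\
u(k) &= u(k-1) + K_p\,[e(k)-e(k-1)] + K_i\,e(k) + K_d\,[e(k)-2e(k-1)+e(k-2)]\\
\delta^{(3)}_l &= e(k)\,\operatorname{sgn}\!\left(\frac{\partial y(k)}{\partial u(k)}\right)\frac{\partial u(k)}{\partial K_l}\,g'(\mathrm{net}_l), \qquad g'(x) = \frac{2}{(e^{x}+e^{-x})^2}
\end{aligned}
$$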

II. Source code

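```matlab
% BP-based PID control
clc, clear, close all
warning off

xite = 0.25;   % learning rate
alfa = 0.05;   % momentum (inertia) factor
S = 1;         % signal type: 1 = step, 2 = sine

% NN structure
IN  = 4;       % number of input-layer neurons
H   = 5;       % number of hidden-layer neurons
Out = 3;       % number of output-layer neurons (kp, ki, kd)

if S == 1      % step signal
    wi = [-0.6394 -0.2696 -0.3756 -0.7023;
          -0.8603 -0.2013 -0.5024 -0.2596;
          -1.0749  0.5543 -1.6820 -0.5437;
          -0.3625 -0.0724 -0.6463 -0.2859;
           0.1425  0.0279 -0.5406 -0.7660];
    % wi = 0.50*rands(H,IN);
    wi_1 = wi; wi_2 = wi; wi_3 = wi;
    wo = [ 0.7576  0.2616  0.5820 -0.1416 -0.1325;
          -0.1146  0.2949  0.8352  0.2205  0.4508;
           0.7201  0.4566  0.7672  0.4962  0.3632];
    % wo = 0.50*rands(Out,H);
    wo_1 = wo; wo_2 = wo; wo_3 = wo;
end

if S == 2      % sine signal
    wi = [-0.2846  0.2193 -0.5097 -1.0668;
          -0.7484 -0.1210 -0.4708  0.0988;
          -0.7176  0.8297 -1.6000  0.2049;
          -0.0858  0.1925 -0.6346  0.0347;
           0.4358  0.2369 -0.4564 -0.1324];
    % wi = 0.50*rands(H,IN);
    wi_1 = wi; wi_2 = wi; wi_3 = wi;
    wo = [1.0438 0.5478 0.8682 0.1446 0.1537;
          0.1716 0.5811 1.1214 0.5067 0.7370;
          1.0063 0.7428 1.0534 0.7824 0.6494];
    % wo = 0.50*rands(Out,H);
    wo_1 = wo; wo_2 = wo; wo_3 = wo;
end

x = [0,0,0];
u_1 = 0; u_2 = 0; u_3 = 0; u_4 = 0; u_5 = 0;
y_1 = 0; y_2 = 0; y_3 = 0;

% Initialization
Oh = zeros(H,1);   % hidden-layer outputs (fed to the output layer)
I  = Oh;           % hidden-layer net inputs (from the input layer)
error_2 = 0;
error_1 = 0;
ts = 0.001;

for k = 1:1:500
    time(k) = k*ts;
    if S == 1
        rin(k) = 1.0;
    elseif S == 2
        rin(k) = sin(1*2*pi*k*ts);
    end

    % Nonlinear plant model
    a(k) = 1.2*(1-0.8*exp(-0.1*k));
    yout(k) = a(k)*y_1/(1+y_1^2) + u_1;    % plant output
    error(k) = rin(k) - yout(k);           % tracking error

    xi = [rin(k), yout(k), error(k), 1];   % network input (last entry is a constant bias input)

    x(1) = error(k) - error_1;             % e(k) - e(k-1), multiplied by kp
    x(2) = error(k);                       % e(k), multiplied by ki
    x(3) = error(k) - 2*error_1 + error_2; % e(k) - 2e(k-1) + e(k-2), multiplied by kd
    epid = [x(1); x(2); x(3)];

    I = xi*wi';
    for j = 1:1:H
        Oh(j) = (exp(I(j))-exp(-I(j)))/(exp(I(j))+exp(-I(j)));   % middle layer (tanh)
    end

    K = wo*Oh;                             % output layer
    netK = K;                              % keep the net input for the derivative dK below
    for l = 1:1:Out
        K(l) = exp(netK(l))/(exp(netK(l))+exp(-netK(l)));       % getting kp, ki, kd (nonnegative)
    end

    kp(k) = K(1); ki(k) = K(2); kd(k) = K(3);
    Kpid = [kp(k), ki(k), kd(k)];
    du(k) = Kpid*epid;                     % incremental PID control increment
    u(k) = u_1 + du(k);

    % Saturation limit
    if u(k) >= 10
        u(k) = 10;
    end
    if u(k) <= -10
        u(k) = -10;
    end

    dyu(k) = sign((yout(k)-y_1)/(u(k)-u_1+0.0000001));   % sign of dy/du

    % Output layer
    for j = 1:1:Out
        dK(j) = 2/(exp(netK(j))+exp(-netK(j)))^2;   % derivative of e^x/(e^x+e^-x) at the net input
    end
    for l = 1:1:Out
        delta3(l) = error(k)*dyu(k)*epid(l)*dK(l);   % output-layer local gradient
    end
    % ... listing truncated here: the hidden-layer back-propagation, the weight
    %     updates, the state shifts and the closing "end" of the k loop are not included
```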
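Because the listing stops partway through the update, here is a compact, vectorized sketch of one complete BP-PID loop for the step case (S = 1). It reuses the listing's plant, initial weights, learning rate and momentum factor; the hidden-layer back-propagation, the momentum form of the weight update and the state shifts are assumptions (standard gradient descent with momentum), not the author's withheld code. Names such as `err`, `Kgain`, `wo_prev`, `wi_prev`, `wo_next` and `wi_next` are introduced here for illustration only. The sketch uses the identities $e^{x}/(e^{x}+e^{-x}) = (1+\tanh x)/2$ and $2/(e^{x}+e^{-x})^2 = (1-\tanh^2 x)/2$ so that `exp` cannot overflow.

```matlab
% Compact BP-PID sketch for the step reference (S = 1 case of the listing).
% Plant, initial weights, xite and alfa come from the listing; the hidden-layer
% back-propagation, the momentum update and the state shifts are ASSUMED
% (standard gradient descent with momentum), not the author's code.
xite = 0.25;  alfa = 0.05;            % learning rate, momentum factor
Out = 3;                              % outputs: Kp, Ki, Kd
wi = [-0.6394 -0.2696 -0.3756 -0.7023;
      -0.8603 -0.2013 -0.5024 -0.2596;
      -1.0749  0.5543 -1.6820 -0.5437;
      -0.3625 -0.0724 -0.6463 -0.2859;
       0.1425  0.0279 -0.5406 -0.7660];          % hidden x input (5 x 4)
wo = [ 0.7576  0.2616  0.5820 -0.1416 -0.1325;
      -0.1146  0.2949  0.8352  0.2205  0.4508;
       0.7201  0.4566  0.7672  0.4962  0.3632];  % output x hidden (3 x 5)
wi_prev = wi;  wo_prev = wo;          % weights one step earlier (for momentum)

n = 500;  ts = 0.001;
time = (1:n)*ts;                      % e.g. plot(time, rin, 'r', time, yout, 'b')
rin = ones(1, n);                     % unit step reference
yout = zeros(1, n);  u = zeros(1, n);  err = zeros(1, n);  Kgain = zeros(Out, n);
u_1 = 0;  y_1 = 0;  e_1 = 0;  e_2 = 0;   % u(k-1), y(k-1), e(k-1), e(k-2)

for k = 1:n
    a = 1.2*(1 - 0.8*exp(-0.1*k));
    yout(k) = a*y_1/(1 + y_1^2) + u_1;          % nonlinear plant
    err(k) = rin(k) - yout(k);                  % tracking error

    xi = [rin(k); yout(k); err(k); 1];          % network input (column)
    epid = [err(k) - e_1; err(k); err(k) - 2*e_1 + e_2];

    Oh = tanh(wi*xi);                           % hidden layer
    netK = wo*Oh;                               % output-layer net input
    K = (1 + tanh(netK))/2;                     % equals e^x/(e^x+e^-x), so 0 <= K <= 1
    Kgain(:, k) = K;

    u(k) = min(max(u_1 + K'*epid, -10), 10);    % incremental PID with saturation
    dyu = sign((yout(k) - y_1)/(u(k) - u_1 + 1e-7));   % sign of dy/du

    delta3 = err(k)*dyu*epid.*(1 - tanh(netK).^2)/2;   % output layer: g'(net) = (1 - tanh^2)/2
    delta2 = (wo'*delta3).*(1 - Oh.^2);                % hidden layer: tanh' = 1 - tanh^2

    % Assumed update: gradient step on E = e(k)^2/2 plus momentum
    wo_next = wo + xite*delta3*Oh' + alfa*(wo - wo_prev);
    wi_next = wi + xite*delta2*xi' + alfa*(wi - wi_prev);
    wo_prev = wo;  wo = wo_next;
    wi_prev = wi;  wi = wi_next;

    % Shift the delayed signals
    u_1 = u(k);  y_1 = yout(k);  e_2 = e_1;  e_1 = err(k);
end
fprintf('y(end) = %.4f, e(end) = %.4f\n', yout(n), err(n));
```

The sketch stores the error as `err` rather than `error` so that MATLAB's built-in `error` function is not shadowed, and it keeps the gains in `Kgain` (rows Kp, Ki, Kd) for plotting.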

III. Running results

IV. Remarks

The source code above is incomplete; for the complete code, contact the author on QQ 1564658423.
