On my way home from work yesterday, I posted this on WeChat Moments:

I caught a big fish today: the TCP throughput through the tunnel has more than doubled. Haha, have a nice weekend!

Many tunnels process a TCP stream on a single thread. This is plainly wrong: the two directions of a stream should be processed by different threads.

Yet many user-space tunnels handle both directions of a TCP connection on the same thread, and that is a problem.

To many people this issue is trivially basic, and it is indeed that kind of issue. Afraid of being laughed at, I deliberately described it in humble terms, but that was just a turn of phrase. Let's leave it at that.

But I make a habit of thinking things through. I found that this is a common problem, so I decided to write it down.

This is not a performance problem about multi-core optimization; it is simply a matter of preserving full duplex!

If a midbox cannot maintain full-duplex processing, then it undermines the basic principles of the Internet.

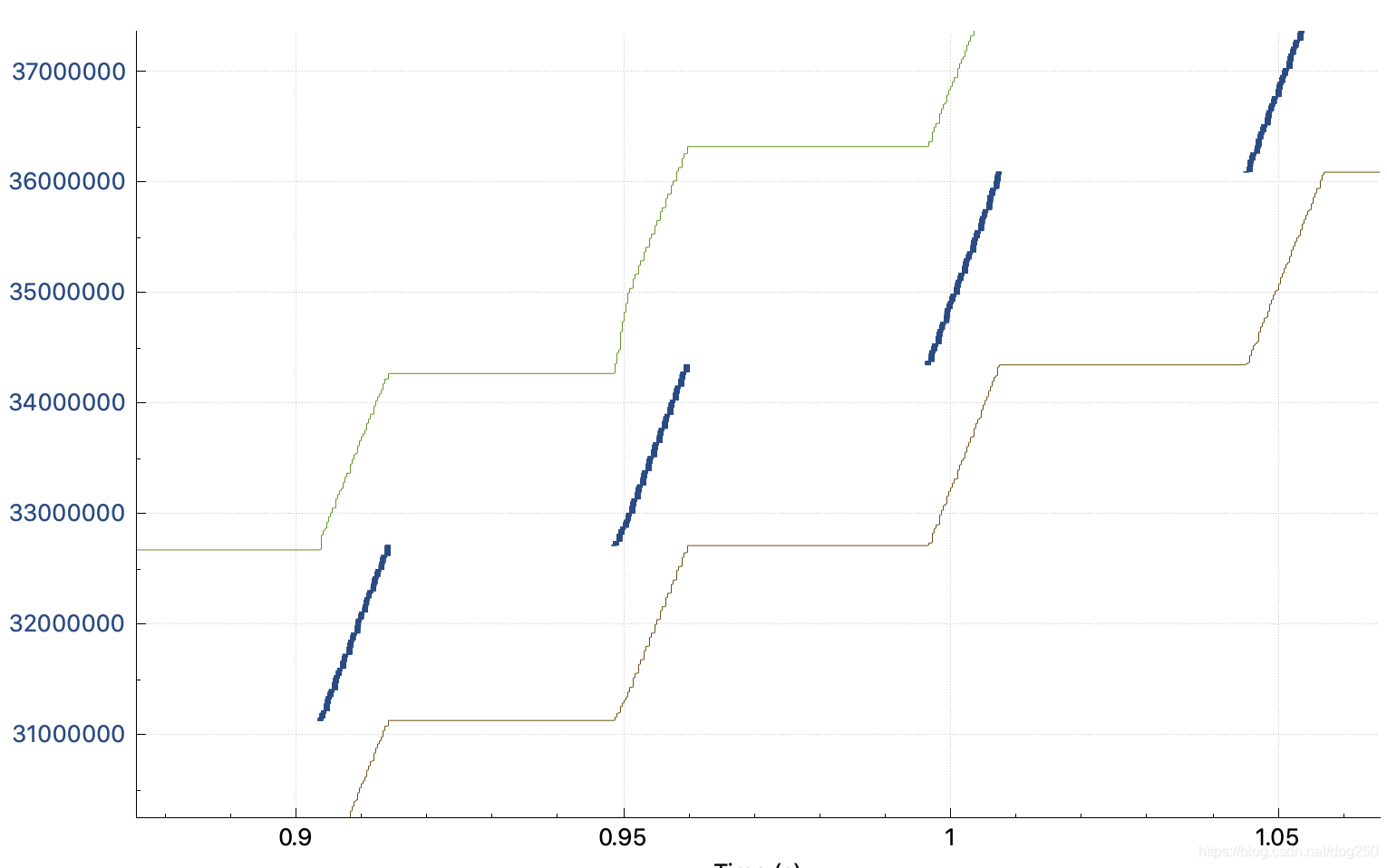

When a TCP stream passes through the tunnel, its trace graph looks like this:

And the normal trace graph should look like this:

I won't analyze the dynamics of how TCP evenly fills the whole BDP pipe; that would take too long. Looking at the stepped trace graph above, what would you do?

Let me first say what I would do.

Obviously, I would not start by checking the tunnel or any other intermediate link. Seeing such clear empty windows, I would just fill them in piecemeal and be done with it. Which is why I say:

Debugging TCP congestion control really is a craft much like bomb disposal, and it often reveals a person's technical caliber. I, for one, am not qualified:

- I would not analyze why the waveform is stepped; I would just start filling the gaps right away...

Filling the windows this way is easy; a SystemTap script run with `stap -g` (guru mode) can do it. But after filling them, the trace graph is horrible... lots of packet loss, red everywhere, and the formation is in complete chaos!

Well, I'm just a quack playing Hua Tuo, performing a craniotomy with no medical skill!

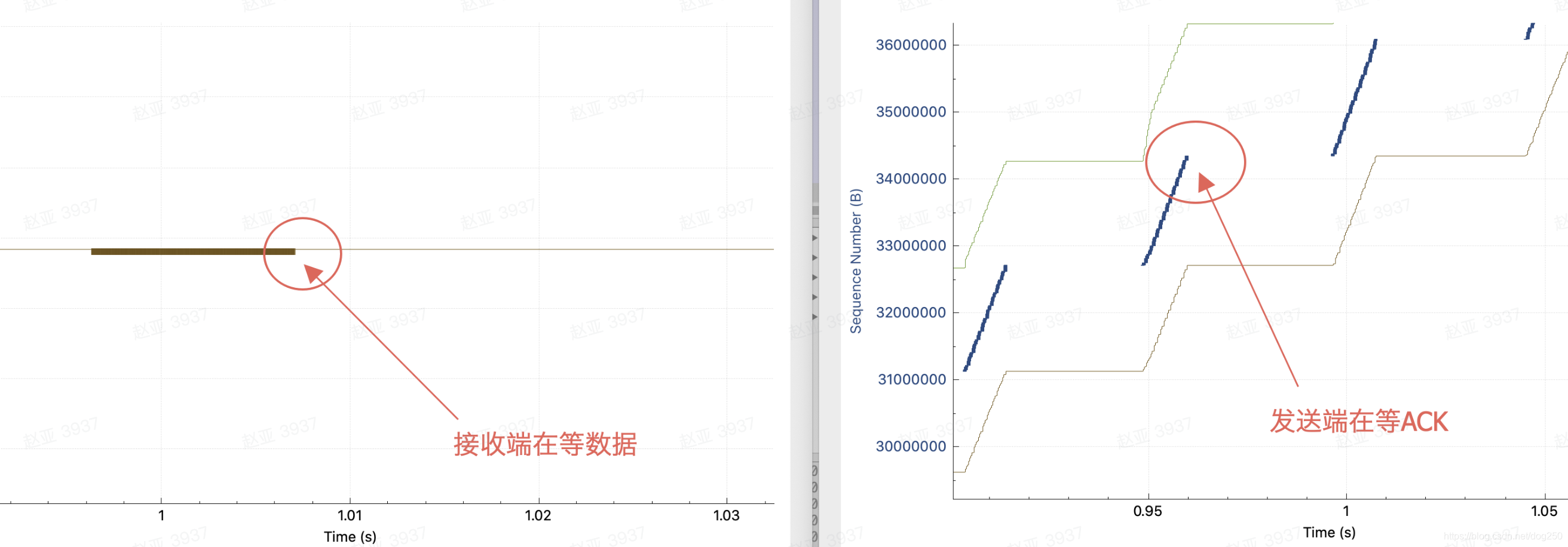

Okay, now let me put away the rusty scalpel and analyze the root cause. Comparing with the trace graph at the receiving end also provides clues:

A smooth TCP trace graph is formed by back-to-back data and ACKs: ACKs provide the feedback that clocks out new data.

What does a smooth trace graph mean?

A smooth trace graph means the intervals between consecutive data segments are the same, or nearly the same! This requires:

- The intervals between consecutive ACKs to be the same, or nearly the same (either ignoring delayed ACK entirely or accounting for it consistently).

Smooth ACK flow promotes smooth data flow!
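To make the ACK-clock argument concrete, here is a minimal Python sketch under an assumed steady state: the window is full, so each arriving ACK releases exactly one new segment. `data_departures` and `gaps` are illustrative names and the timestamps are made up. Evenly spaced ACKs yield evenly spaced data; ACKs that were held back and then released together yield the same clumps in the data.

```python
# Toy ACK-clock model (an assumption, not a real TCP stack): with a full
# window, every arriving ACK lets the sender transmit one new segment.

def data_departures(ack_arrivals, send_cost=0.0):
    """Return the send time of the segment released by each ACK."""
    departures = []
    ready = 0.0  # time at which the sender is free to transmit again
    for ack_t in ack_arrivals:
        t = max(ack_t, ready)
        departures.append(t)
        ready = t + send_cost
    return departures


def gaps(times):
    """Spacing between consecutive events, rounded for readability."""
    return [round(b - a, 3) for a, b in zip(times, times[1:])]


smooth_acks = [i * 1.0 for i in range(6)]      # one ACK per ms
bunched_acks = [0.0, 0.1, 0.2, 3.0, 3.1, 3.2]  # ACKs held back, then released

print(gaps(data_departures(smooth_acks)))   # [1.0, 1.0, 1.0, 1.0, 1.0]
print(gaps(data_departures(bunched_acks)))  # [0.1, 0.1, 2.8, 0.1, 0.1]
```

The data stream simply inherits whatever spacing the ACK stream has, which is why anything that disturbs the ACKs shows up directly in the data.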

In short, the network must process data segments and ACK segments "simultaneously"! Only then will neither be delayed by waiting for the other. Any delay that is introduced continuously gets gradually amplified, turning the trace graph into a staircase.

What if there is a midbox in the path that can process only one packet at a time?

The midbox breaks the originally back-to-back flow into bursts separated by gaps, so the trace graph naturally turns from a straight diagonal line into a staircase.

Is the only change that it becomes stepped? What are the actual consequences? Looking at the stepped trace graph, each empty period in the sending direction is time the midbox spends processing ACKs in the other direction. The midbox's time is shared between the two directions, so throughput is halved.
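The halving can be checked with a back-of-the-envelope model. The Python sketch below assumes a fixed per-packet processing cost in each direction (`t_data`, `t_ack`, with made-up values) and one ACK per data segment, ignoring delayed ACK; none of these numbers are measured from the tunnel in question.

```python
def max_segments_per_sec(t_data, t_ack, split_directions):
    """Upper bound on segments/s through one midbox under the toy cost model."""
    if split_directions:
        # One thread per direction: both run in parallel, so the slower
        # direction is the bottleneck.
        return 1.0 / max(t_data, t_ack)
    # One thread for both directions: every segment costs the thread its own
    # processing plus that of the ACK it triggers, done one after the other.
    return 1.0 / (t_data + t_ack)


t = 10e-6  # hypothetical 10 us per packet, the same in both directions
shared = max_segments_per_sec(t, t, split_directions=False)
split = max_segments_per_sec(t, t, split_directions=True)
print(round(shared), round(split), split / shared)  # 50000 100000 2.0
```

Under this model the gain from splitting is (t_data + t_ack) / max(t_data, t_ack), which never exceeds 2 and reaches 2 only when an ACK costs the thread about as much as a data segment.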

When the processing in the two directions was separated, the throughput doubled as expected.

Okay, the conclusion is now clear: the midbox is to blame! But what exactly is the midbox here, and what property of it produces the stepped trace graph?

If the midbox is a single-process, single-threaded service, such as OpenVPN, it is bound to fall into this trap. But if the midbox is a multi-process or multi-threaded service, is it any more likely to avoid it?

Not necessarily. When it comes to multi-core processing in a midbox, many people take it for granted that simply spreading the load, either by flow or by packet, is good enough. But both approaches have pitfalls:

- Spreading by flow causes the phenomenon described in this article: a flow is processed by a single thread, and a thread can handle only one direction at a time!

- Spreading by packet increases reordering within a single stream. Once the reordering reported through SACK exceeds the sender's reordering tolerance, it triggers spurious retransmissions and reduces effective bandwidth.
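Both pitfalls can be reproduced with a toy dispatcher. In the Python sketch below, the worker count, the CRC32-based symmetric flow hash, and the per-worker delays are all assumptions chosen for illustration, not taken from any real tunnel.

```python
import zlib

N_WORKERS = 4  # hypothetical thread count


def flow_key(src, dst):
    # Symmetric key: A->B and B->A give the same key ("spread by flow").
    return "|".join(sorted((src, dst)))


def per_flow_worker(src, dst):
    return zlib.crc32(flow_key(src, dst).encode()) % N_WORKERS


def per_packet_worker(counter):
    # "Spread by packet": plain round-robin that ignores flows entirely.
    return counter % N_WORKERS


a, b = "10.0.0.1:5000", "10.0.0.2:80"
# Pitfall 1: data (a->b) and its ACKs (b->a) always share one thread.
print(per_flow_worker(a, b) == per_flow_worker(b, a))  # True

# Pitfall 2: consecutive segments of one stream land on different threads;
# if those threads finish at different times, segments leave out of order.
worker_delay = [0.0, 3.0, 1.0, 2.0]  # assumed per-worker queueing delay
departures = sorted(
    (seq + worker_delay[per_packet_worker(seq)], seq) for seq in range(8)
)
print([seq for _, seq in departures])  # [0, 2, 1, 4, 3, 6, 5, 7]
```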

There is actually one more dimension: first split by direction, then split by flow within each direction. A ready-made example that does just this is the Linux kernel protocol stack:

- The NIC performs RSS based on a hash of the packet's tuple (which naturally distinguishes the two directions), and the Linux kernel stack then ensures that each packet is processed from reception through forwarding on the same CPU core.
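The same split can be expressed in a user-space dispatcher. The Python sketch below is a hypothetical scheme, not the kernel's RSS algorithm: it assumes the midbox knows which side a packet arrived on (`client_side`), reserves a separate worker pool for each direction, and hashes the flow only within that pool. Partitioning the pool, rather than relying on two hash values happening to differ, guarantees the directions never share a thread, while each direction of a flow still stays on one thread and keeps its order.

```python
import zlib

WORKERS_PER_DIRECTION = 2  # hypothetical pool size for each direction


def direction_aware_worker(src, dst, client_side):
    """Pick a worker: first by direction, then by flow within that direction.

    client_side is an assumed flag meaning "this packet arrived on the
    client-facing side" (for example, tunnel ingress versus egress).
    """
    base = 0 if client_side else WORKERS_PER_DIRECTION
    key = "|".join(sorted((src, dst))).encode()  # same flow key both ways
    return base + zlib.crc32(key) % WORKERS_PER_DIRECTION


a, b = "10.0.0.1:5000", "10.0.0.2:80"
data_worker = direction_aware_worker(a, b, client_side=True)  # data a->b
ack_worker = direction_aware_worker(b, a, client_side=False)  # ACKs b->a
print(data_worker != ack_worker)  # True: the directions never share a thread
```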

Put simply: whatever the network is like, the midbox must not break it. The network is full-duplex, so a midbox must be designed to be full-duplex too. And full duplex requires at least two threads, one per direction, right?
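A minimal Python sketch of that structure, assuming a user-space relay between two already-connected stream sockets; `pump`, `relay`, and `recv_all` are illustrative names, and the demo uses `socket.socketpair()` in place of real tunnel legs. Each direction gets its own thread, so a blocked read or write in one direction never stalls the other.

```python
import socket
import threading


def pump(src, dst):
    """Copy bytes from src to dst until src hits EOF, then half-close dst."""
    while True:
        chunk = src.recv(65536)
        if not chunk:
            break
        dst.sendall(chunk)
    dst.shutdown(socket.SHUT_WR)  # forward the FIN for this direction only


def relay(left, right):
    """Full-duplex relay: one dedicated thread per direction."""
    workers = [
        threading.Thread(target=pump, args=(left, right)),  # left -> right
        threading.Thread(target=pump, args=(right, left)),  # right -> left
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()


def recv_all(sock):
    """Read until the peer half-closes its sending side."""
    parts = []
    while True:
        chunk = sock.recv(65536)
        if not chunk:
            return b"".join(parts)
        parts.append(chunk)


# Demo: two socket pairs stand in for the client leg and the server leg.
client, mid_left = socket.socketpair()
mid_right, server = socket.socketpair()
relay_thread = threading.Thread(target=relay, args=(mid_left, mid_right))
relay_thread.start()

client.sendall(b"data")  # client -> server direction
client.shutdown(socket.SHUT_WR)
server.sendall(b"ack")  # server -> client direction
server.shutdown(socket.SHUT_WR)

got_at_server = recv_all(server)
got_at_client = recv_all(client)
relay_thread.join()
for s in (client, mid_left, mid_right, server):
    s.close()
print(got_at_server, got_at_client)  # b'data' b'ack'
```

In CPython, threads blocked in `recv` release the GIL, but CPU-heavy per-packet work such as encryption would not run in parallel, so treat this as an illustration of the structure rather than a performance recipe.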

The reasoning is very simple. It has nothing to do with concurrency or multi-core programming models, and nothing to do with hashing. It is purely a question of preserving full duplex.

There is also the problem of bufferbloat.

A midbox that handles a stream on a single thread, and is therefore half-duplex, actually encourages the queuing of data and ACK segments: a packet arriving in one direction that cannot be processed immediately must, of course, wait in a queue. As one might expect, many midboxes are in effect just queuing buffers, so a paced formation is hard to keep intact after a few hops. This is one reason why BBR often falls short of expectations.
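A toy single-server queue shows how this breaks pacing. Everything in the Python sketch below is an assumption for illustration: integer microsecond timestamps, a fixed 6 µs processing cost per packet, a sender pacing one segment every 10 µs, and a compressed burst of four ACKs arriving from the other direction. With a dedicated data thread the 10 µs spacing survives; with one FIFO shared by both directions the data waits behind the ACKs and then leaves back-to-back at the box's own speed.

```python
SERVICE_US = 6  # assumed per-packet processing cost of the midbox thread


def fifo_departures(arrivals):
    """arrivals: [(time_us, kind)]; returns [(depart_us, kind)] served FIFO."""
    free_at = 0
    out = []
    for t, kind in sorted(arrivals):
        start = max(t, free_at)  # wait if the thread is still busy
        free_at = start + SERVICE_US
        out.append((free_at, kind))
    return out


def data_gaps(departures):
    times = [t for t, kind in departures if kind == "data"]
    return [b - a for a, b in zip(times, times[1:])]


data = [(10 * i, "data") for i in range(6)]  # sender paces one segment per 10 us
acks = [(i, "ack") for i in range(1, 5)]     # a compressed burst of ACKs

shared = fifo_departures(data + acks)  # one thread serves both directions
split = fifo_departures(data)          # data direction has its own thread

print(data_gaps(split))   # [10, 10, 10, 10, 10]  pacing preserved
print(data_gaps(shared))  # [30, 6, 6, 6, 6]  a gap, then a burst at box speed
```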

In short, I don't trust most carriers' forwarding equipment to preserve your pacing rate (if they don't, it is not because they have no obligation to, but because they lack the ability). Even if your pacing rate is below their rate limit, they can still turn your traffic into bursts. Clean BBR behavior can be observed on an internal network, but once it is put on a carrier's public network, the neat formation is broken up in an instant, like the Roman legions at the Battle of Cannae!

The leather shoes in Wenzhou, Zhejiang are wet, so they won’t get fat in the rain.