Table of Contents

1. Creating a Dataset:

2. Common Dataset functions:

3. Rewriting the fashion_MNIST classification model using Dataset:

Much as NumPy provides the ndarray type and operations on it, TensorFlow provides the tf.data module. Its tf.data.Dataset class handles data input conveniently and supports a wide range of computations and transformations on the data. A tf.data.Dataset represents a sequence of elements, where each element consists of one or more tensors.

1. Creating a Dataset:

The most direct way to create a tf.data.Dataset is the tf.data.Dataset.from_tensor_slices() function. Its argument can be a Python list or a numpy.ndarray (or a tensor). The input is sliced along its first dimension, and each slice becomes one element of the dataset:

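```python
import numpy as np
import tensorflow as tf

# A dataset can be created from a list or from a numpy.ndarray
X = np.array([1.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167, 7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1])
Y = np.array([[1.3, 4.4], [5.5, 6.71]])

dataset1 = tf.data.Dataset.from_tensor_slices([1, 2, 3, 4])  # created from a list
dataset2 = tf.data.Dataset.from_tensor_slices(X)             # created from a numpy array

dataset2  # in a notebook, this displays the dataset object
```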

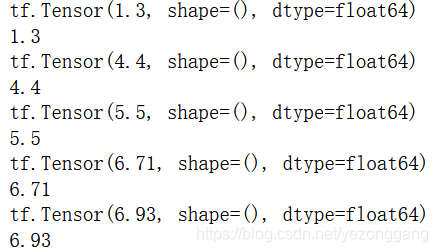

dataset2 is a TensorSliceDataset. Iterating over it with a loop yields each element as a tensor, and the .numpy() method converts an element to a NumPy value:

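```python
for i in dataset2.take(2):  # take(2): only the first two elements
    print(i)                # the element as a tf.Tensor
    print(i.numpy())        # the same element as a NumPy value
```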
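Because from_tensor_slices() slices along the first dimension, a 2-D array such as Y yields one element per row, and a tuple of arrays yields tuple elements (for example, feature/label pairs). A minimal sketch; the arrays `features` and `labels` are illustrative data, not part of the original example:

```python
import numpy as np
import tensorflow as tf

# A 2-D array is sliced along its first axis: 2 rows -> 2 elements of shape (2,)
Y = np.array([[1.3, 4.4], [5.5, 6.71]])
ds_rows = tf.data.Dataset.from_tensor_slices(Y)
for row in ds_rows:
    print(row.numpy())  # one row per element

# A tuple of arrays with the same first dimension yields (feature, label) pairs
features = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])  # illustrative data
labels = np.array([0, 1, 0])                                # illustrative data
ds_pairs = tf.data.Dataset.from_tensor_slices((features, labels))
print(ds_pairs.element_spec)  # a pair of TensorSpecs: shape (2,) and shape ()
for x, y in ds_pairs.take(1):
    print(x.numpy(), y.numpy())
```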

2. Common Dataset functions:

1. Processing the data before modeling, for example:

① shuffle() randomizes the order of elements (its argument is the size of the buffer that elements are drawn from);

② repeat() repeats the data the given number of times (indefinitely if no count is given);

③ batch() groups consecutive elements into batches;
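The effect of each operation, and of the order in which they are chained, can be seen on a small dataset. A minimal sketch using tf.data.Dataset.range(5) as a stand-in for dataset2; the seed value is arbitrary and `to_list` is just a display helper:

```python
import tensorflow as tf

ds = tf.data.Dataset.range(5)  # elements 0, 1, 2, 3, 4

def to_list(dataset):
    # Convert each element (a scalar or a batch) into plain Python values
    return [v.tolist() for v in dataset.as_numpy_iterator()]

repeated = to_list(ds.repeat(2))            # [0, 1, 2, 3, 4, 0, 1, 2, 3, 4]
batches = to_list(ds.batch(2))              # [[0, 1], [2, 3], [4]]: the last batch is smaller
shuffled = to_list(ds.shuffle(3, seed=42))  # the same 5 values in a shuffled order

# Order matters: repeat-then-batch lets a batch span the boundary between passes
repeat_then_batch = to_list(ds.repeat(2).batch(2))  # [[0, 1], [2, 3], [4, 0], [1, 2], [3, 4]]
batch_then_repeat = to_list(ds.batch(2).repeat(2))  # [[0, 1], [2, 3], [4], [0, 1], [2, 3], [4]]

print(repeated, batches, shuffled, repeat_then_batch, batch_then_repeat)
```

Calling shuffle() before batch() shuffles individual elements; calling it after batch() would only shuffle whole batches.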

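```python
import numpy as np
import tensorflow as tf

X = np.array([1.3, 4.4, 5.5, 6.71, 6.93, 4.168, 9.779, 6.182, 7.59, 2.167, 7.042, 10.791, 5.313, 7.997, 5.654, 9.27, 3.1])
dataset2 = tf.data.Dataset.from_tensor_slices(X)  # created from a numpy array

data_shuffle = dataset2.shuffle(3)      # shuffle, drawing from a 3-element buffer
data_repeat = dataset2.repeat(count=2)  # repeat the data twice
data_batch = dataset2.batch(2)          # read the data in batches of 2
```

2. Data transformation with the map() function:

map() applies a function to every element and returns a new dataset; here tf.square squares each value: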

```python
dataset_sq = dataset2.map(tf.square)  # square every element
```

3. Rewriting the fashion_MNIST classification model using Dataset:

Compared with the previous approach, the data are now transformed before modeling (shuffled, repeated, and batched), and validation data are passed to the training process:

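```python
import matplotlib.pyplot as plt
import tensorflow as tf
import pandas as pd
import numpy as np
# Jupyter magic: render plots inside the notebook
%matplotlib inline

# Fashion-MNIST: 60,000 training and 10,000 test images, 28x28 grayscale
(train_image, train_lable), (test_image, test_lable) = tf.keras.datasets.fashion_mnist.load_data()

plt.imshow(train_image[11])  # show one training image
```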

```python
# Load the training images and labels into two datasets
ds_train_image = tf.data.Dataset.from_tensor_slices(train_image)
ds_train_lable = tf.data.Dataset.from_tensor_slices(train_lable)

# Pair images with labels, shuffle, repeat indefinitely, read in batches of 64
da_train = tf.data.Dataset.zip((ds_train_image, ds_train_lable)).shuffle(10000).repeat().batch(64)

# Test dataset: paired and batched, but not shuffled or repeated
ds_test_image = tf.data.Dataset.from_tensor_slices(test_image)
ds_test_lable = tf.data.Dataset.from_tensor_slices(test_lable)
ds_test = tf.data.Dataset.zip((ds_test_image, ds_test_lable)).batch(64)
```
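The pipeline above feeds raw uint8 pixel values (0 to 255) to the model. A map() step is one common way to rescale them to [0, 1] inside the pipeline. A minimal sketch, assuming small random uint8 arrays as stand-ins for train_image and train_lable; the helper name `scale` is hypothetical:

```python
import numpy as np
import tensorflow as tf

# Stand-in data (assumption): 8 random 28x28 uint8 "images" and integer labels
images = np.random.randint(0, 256, size=(8, 28, 28), dtype=np.uint8)
labels = np.random.randint(0, 10, size=(8,), dtype=np.uint8)

def scale(image, label):
    # Hypothetical helper: cast pixels to float32 and rescale 0-255 to 0-1
    return tf.cast(image, tf.float32) / 255.0, label

# Slicing a tuple gives the same (image, label) pairs as zipping two datasets
ds = (tf.data.Dataset.from_tensor_slices((images, labels))
      .map(scale)
      .shuffle(8)
      .batch(4))

for x, y in ds.take(1):
    print(x.shape, x.dtype, y.shape)
```

If this step is adopted, the test data (including the arrays passed to model.evaluate) need the same scaling.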

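```python
model = tf.keras.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),   # 28x28 image -> 784-vector
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax")  # one probability per class
])

# Labels are integers 0-9, so sparse categorical cross-entropy is used
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["acc"])
```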
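The model is small enough to count its trainable parameters by hand: Flatten turns each 28x28 image into 784 inputs and has no parameters, and each Dense layer has inputs × units weights plus one bias per unit. A quick check in plain Python; the total should match what model.summary() reports for this architecture:

```python
# Parameter count of Flatten(28x28) -> Dense(128) -> Dense(10)
inputs = 28 * 28               # 784 values after Flatten
hidden = inputs * 128 + 128    # weights + biases of Dense(128)
output = 128 * 10 + 10         # weights + biases of Dense(10)
total = hidden + output

print(inputs, hidden, output, total)  # 784 100480 1290 101770
```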

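```python
train_image.shape[0] // 64  # number of full batches of 64 in the training set

# da_train repeats forever, so steps_per_epoch defines the length of one epoch
history = model.fit(da_train,
                    epochs=5,
                    steps_per_epoch=train_image.shape[0] // 64,
                    validation_data=ds_test,
                    validation_steps=test_image.shape[0] // 64)
```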
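Because da_train calls repeat() with no count, it never ends, so Keras cannot tell where an epoch stops; steps_per_epoch supplies that number. ds_test is finite, and with validation_steps set to 10000 // 64 the final partial batch is skipped (omitting validation_steps would instead validate until ds_test is exhausted). The arithmetic, assuming the standard 60,000/10,000 Fashion-MNIST split:

```python
batch_size = 64
n_train, n_test = 60000, 10000  # Fashion-MNIST training / test set sizes

steps_per_epoch = n_train // batch_size   # full batches drawn per epoch
validation_steps = n_test // batch_size   # batches of ds_test used for validation
leftover_test = n_test - validation_steps * batch_size  # test samples never validated

print(steps_per_epoch, validation_steps, leftover_test)  # 937 156 16
```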

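```python
model.evaluate(test_image, test_lable)  # evaluate on the raw test arrays

plt.plot(history.epoch, history.history.get('loss'))  # training loss per epoch
plt.plot(history.epoch, history.history.get('acc'))   # training accuracy per epoch
```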
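Since validation_data was passed to fit(), history.history also records validation values under the keys val_loss and val_acc (the val_ prefix plus the metric name "acc"). A small helper that plots a training metric next to its validation counterpart; the function name `plot_metric` is hypothetical, and epochs are numbered from 1 here rather than from 0 as in history.epoch:

```python
import matplotlib.pyplot as plt

def plot_metric(history_dict, metric):
    """Plot a training metric and its validation counterpart per epoch."""
    train_values = history_dict[metric]
    val_values = history_dict["val_" + metric]
    epochs = range(1, len(train_values) + 1)
    fig, ax = plt.subplots()
    ax.plot(epochs, train_values, label="train " + metric)
    ax.plot(epochs, val_values, label="val " + metric)
    ax.set_xlabel("epoch")
    ax.set_ylabel(metric)
    ax.legend()
    return fig

# Usage, with `history` returned by model.fit above:
# plot_metric(history.history, "loss")
# plot_metric(history.history, "acc")
```

A widening gap between the training and validation curves is the usual sign of overfitting.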