1. Introduction to click-through rate estimation

What problem does click-through rate estimation solve?

Click-through rate (CTR) estimation predicts, for each ad impression, whether the user will click. The model can output a hard label (click or no click) or the probability of a click. The latter is sometimes called pClick.

What does the click-through rate estimation model need to do?

From this definition, click-through rate estimation is a binary classification problem. In machine learning, a logistic (sigmoid) function can serve as the output of the model, so the output is a probability value. That value is interpreted as the probability that a given user clicks on a given advertisement.
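As a minimal sketch of this idea, the snippet below turns a weighted sum of features into a pClick value with a sigmoid, and then thresholds it to get a click / no-click label. The feature values, weights, bias, and the 0.5 threshold are made-up numbers for illustration only.

```python
import math

def sigmoid(z: float) -> float:
    """Map a real-valued score (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_pclick(features, weights, bias):
    """Logistic-regression style pClick: sigmoid of a weighted sum."""
    logit = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(logit)

# Hypothetical example: three binary features and made-up weights.
features = [1.0, 0.0, 1.0]
weights = [0.8, -0.5, 0.3]
bias = -0.6

p_click = predict_pclick(features, weights, bias)  # probability of a click
label = int(p_click >= 0.5)                        # hard click / no-click decision
print(p_click, label)
```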

What is the difference between click-through rate estimation and recommendation algorithm?

Advertising click-through rate estimation produces the click-through rate of a given user for a given advertisement, which is then combined with the advertisement's bid for ranking. A recommendation algorithm, in most cases, only needs to produce the best recommendation order, that is, it solves a TopN recommendation problem. Of course, the estimated click-through rate of an advertisement can also be used directly as the ranking score when recommending advertisements.
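The difference can be illustrated with a small sketch. The scoring rule `p_click * bid` is an assumption made here for illustration (the text only says the estimate is combined with the bid), and the `Ad` type, ids, and numbers are hypothetical.

```python
from typing import NamedTuple

class Ad(NamedTuple):
    ad_id: str
    p_click: float  # estimated click-through rate
    bid: float      # advertiser's bid (hypothetical unit)

def rank_ads(ads):
    """Sort ads by p_click * bid, highest first (an assumed scoring rule)."""
    return sorted(ads, key=lambda ad: ad.p_click * ad.bid, reverse=True)

def top_n(scores, n):
    """Recommendation-style TopN: only the order of the scores matters."""
    return sorted(scores, key=scores.get, reverse=True)[:n]

ads = [Ad("a", 0.10, 1.0), Ad("b", 0.05, 3.0), Ad("c", 0.20, 0.4)]
ranked_ids = [ad.ad_id for ad in rank_ads(ads)]

item_scores = {"x": 2.3, "y": 0.7, "z": 1.9}
best_two = top_n(item_scores, 2)
print(ranked_ids, best_two)
```

Note that ad "c" has the highest estimated click-through rate but ranks last once the bid is taken into account, which is why the ad setting needs a calibrated probability and not just an ordering.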

2. Isn't FM good enough?

We covered the FM model earlier. Isn't it good enough? Why bother with Wide&Deep? The weakness of embedding-based models such as FM is that when the query-item matrix is sparse and high-rank (for example, users have niche preferences, or items have narrow appeal), it is hard to learn effective low-dimensional representations. In that case most query-item pairs should have no interaction at all, yet dense embeddings produce non-zero predictions for almost every query-item pair. This over-generalizes and recommends less relevant items. By contrast, a simple linear model can memorize these exception rules through cross-product transformations. What a cross-product transformation means is explained later.

3. "Memorization" and "Generalization" in the Wide & Deep Model

Memorization and generalization are two very common concepts in recommender systems. Memorization means learning rules directly from the interaction matrix between users and items, while generalization means extending those rules to combinations that have rarely or never been seen. The FM algorithm introduced earlier is a good example of generalization: from the interaction data it learns a low-dimensional matrix V, where v_i stores the compressed representation (embedding) of each user feature. Collaborative filtering and SVD, in contrast, both infer recommendations by remembering which items the user has interacted with before. The two kinds of recommendation results differ somewhat. The Wide&Deep model merges them to make the final recommendation and obtains better results than either one alone.

Put another way, memorization tends to be conservative and recommends items related to what users have already interacted with. Generalization tends to increase the diversity of the recommendations. In Wide&Deep, memorization is handled by a linear model and generalization by a DNN.

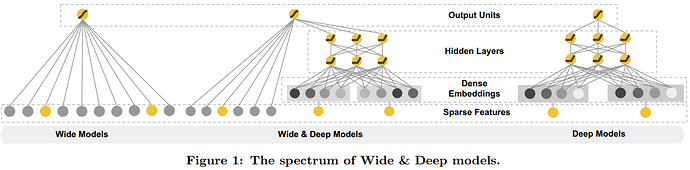

The figure shows the structure of the wide&deep model, consisting of the wide part on the left (a simple linear model) and the deep part on the right (a typical DNN model).

The structure of the wide&deep model is very simple, and anyone with some background in machine learning and deep learning can follow it easily. The hard part is deciding, for your own scenario, which features go into the Wide part and which go into the Deep part. That requires understanding what the authors of the paper intended when they designed the two structures, and it is a prerequisite for using the model well.

How should we understand that the Wide part strengthens the model's "memorization ability" while the Deep part strengthens its "generalization ability"?

- The wide part is a generalized linear model. Its input has two components: the raw features, and cross-product transformations of the raw features. A cross-product (interaction) feature is defined as:

$$\phi_k(x) = \prod_{i=1}^{d} x_i^{c_{ki}}, \quad c_{ki} \in \{0, 1\}$$

What does this formula mean? Here c_ki is 1 if the i-th feature takes part in the k-th transformation and 0 otherwise; readers can consult the original paper for the details. The general idea is that, for binary features, the new feature is 1 only if all the participating features are 1 at the same time, and 0 otherwise. Put simply, it is a feature combination. Here is an example in the style of the one in the original paper:

AND(user_installed_app=QQ, impression_app=WeChat): the combined feature takes the value 1 only when the feature user_installed_app=QQ and the feature impression_app=WeChat are both 1; otherwise it is 0.
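The cross-product transformation can be written out directly from the formula. This is a small sketch for binary features; the feature ordering and the third feature name are hypothetical and only serve the example.

```python
from math import prod

def cross_product(x, c_k):
    """phi_k(x) = prod_i x_i ** c_ki for binary x_i and c_ki.

    x   : list of 0/1 feature values
    c_k : list of 0/1 flags; c_k[i] == 1 means feature i takes part
          in the k-th transformation
    """
    return prod(x_i ** c_ki for x_i, c_ki in zip(x, c_k))

# Hypothetical feature order:
#   [user_installed_app=QQ, impression_app=WeChat, user_installed_app=Other]
c_and = [1, 1, 0]  # AND(user_installed_app=QQ, impression_app=WeChat)

both_on = cross_product([1, 1, 0], c_and)    # both participating features are 1
one_off = cross_product([1, 0, 1], c_and)    # impression_app=WeChat is 0
ignored = cross_product([1, 1, 1], c_and)    # third feature does not take part
print(both_on, one_off, ignored)
```

Features with c_ki = 0 contribute a factor of x_i ** 0 = 1, so they do not affect the result.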

The Wide part is trained with the FTRL (Follow-the-Regularized-Leader) algorithm with L1 regularization. L1 FTRL strongly favors sparsity, so using it makes the Wide part sparse: most of the parameters in the Wide part become 0, which greatly reduces the number of non-zero weights and the effective dimension of the feature vector. The features that survive training in the Wide part are therefore the important ones, and the "memorization ability" of the model can be understood as the ability to discover direct, blunt, and obvious association rules. For example, Google's W&D expects the Wide part to find a rule like this: if the user has installed application A and application B is shown, the probability that the user installs application B is high.
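To see where the sparsity comes from, here is a minimal NumPy sketch of a per-coordinate FTRL-Proximal update for logistic regression. It is an illustration under stated assumptions, not necessarily what TensorFlow's optimizer does internally: the hyperparameter names (`alpha`, `beta`, `l1`, `l2`), the toy data, and the absence of a bias term are all choices made for this example.

```python
import numpy as np

def ftrl_train(X, y, alpha=0.1, beta=1.0, l1=1.0, l2=0.0, epochs=5):
    """Per-coordinate FTRL-Proximal for logistic regression (no bias term)."""
    n_features = X.shape[1]
    z = np.zeros(n_features)  # accumulated (adjusted) gradients
    n = np.zeros(n_features)  # accumulated squared gradients

    def weights():
        # Closed-form weights: any coordinate with |z_i| <= l1 is exactly 0.
        w = -(z - np.sign(z) * l1) / ((beta + np.sqrt(n)) / alpha + l2)
        w[np.abs(z) <= l1] = 0.0
        return w

    for _ in range(epochs):
        for x_t, y_t in zip(X, y):
            w = weights()
            p = 1.0 / (1.0 + np.exp(-(x_t @ w)))
            g = (p - y_t) * x_t                       # gradient of the log loss
            sigma = (np.sqrt(n + g * g) - np.sqrt(n)) / alpha
            z += g - sigma * w
            n += g * g
    return weights()

# Toy data: 5 binary features, the label simply equals feature 0.
rng = np.random.default_rng(0)
X = rng.integers(0, 2, size=(200, 5)).astype(float)
y = X[:, 0]

w_no_l1 = ftrl_train(X, y, l1=0.0)   # no sparsity pressure
w_l1 = ftrl_train(X, y, l1=1.0)      # moderate L1
w_huge = ftrl_train(X, y, l1=1e6)    # extreme L1: every weight is clipped to 0
print("exact zeros:", (w_no_l1 == 0).sum(), (w_l1 == 0).sum(), (w_huge == 0).sum())
```

The key line is the thresholding in `weights()`: a weight only becomes non-zero once its accumulated gradient exceeds the L1 strength, so weak or rarely active features stay at exactly 0.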

- The Deep part is a DNN model. Its input features fall into two categories: numerical features (which can be fed into the DNN directly) and categorical features (which must pass through an Embedding layer before being fed into the DNN). The mathematical form of each layer of the Deep part is:

$$a^{(l+1)} = f\left(W^{(l)} a^{(l)} + b^{(l)}\right)$$

As the number of layers in the DNN increases, the intermediate features become more abstract, which improves the generalization ability of the model. For the DNN of the Deep part, the authors use AdaGrad, a commonly used optimizer in deep learning, to help the model reach a more accurate solution.
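The layer recurrence can be sketched as a forward pass in NumPy. The sizes below (one categorical feature with 10 categories, a 4-dimensional embedding, 2 numerical features, two hidden layers) and the random weights are hypothetical; ReLU is assumed for the activation f.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(v):
    return np.maximum(v, 0.0)

# Hypothetical sizes: one categorical feature with 10 categories embedded in
# 4 dimensions, plus 2 numerical features.
embedding_table = rng.normal(size=(10, 4))
category_id = 3
numeric = np.array([0.5, -1.2])

# a^(0): the embedding lookup concatenated with the numerical features
a = np.concatenate([embedding_table[category_id], numeric])

# Each layer applies a^(l+1) = f(W^(l) a^(l) + b^(l)) with f = ReLU
layer_sizes = [6, 8, 4]
for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
    W = rng.normal(scale=0.1, size=(n_out, n_in))
    b = np.zeros(n_out)
    a = relu(W @ a + b)

print(a.shape)  # activation of the last hidden layer
```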

Combination of Wide part and Deep part

The W&D model trains the two parts jointly: the outputs of the wide and deep parts are summed with learned weights and passed through a logistic (sigmoid) function to make the final prediction and output a probability value. The mathematical form of the joint model is as follows:

$$P(Y = 1 \mid x) = \sigma\left(w_{wide}^{T}\,[x, \phi(x)] + w_{deep}^{T}\,a^{(l_f)} + b\right)$$
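As a worked instance of the joint prediction, the sketch below evaluates the sigmoid of the wide term plus the deep term plus the bias. All numbers are made up; `a_final` stands for the activation of the last hidden layer of the deep part.

```python
import math
import numpy as np

def wide_deep_predict(x, phi_x, a_final, w_wide, w_deep, b):
    """P(Y=1|x) = sigmoid(w_wide . [x, phi(x)] + w_deep . a_final + b)."""
    wide_in = np.concatenate([x, phi_x])          # raw + crossed features
    logit = w_wide @ wide_in + w_deep @ a_final + b
    return 1.0 / (1.0 + math.exp(-logit))

x = np.array([1.0, 0.0])              # raw binary features
phi_x = np.array([0.0])               # one crossed feature: AND of the two
a_final = np.array([0.2, 0.0, 0.7])   # last hidden activation of the deep part
w_wide = np.array([0.5, -0.3, 1.2])
w_deep = np.array([1.0, -1.0, 0.5])
b = -0.4

# wide term = 0.5, deep term = 0.2 + 0.35 = 0.55, so the logit is 0.65
p = wide_deep_predict(x, phi_x, a_final, w_wide, w_deep, b)
print(p)
```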

4. Operation process

- Retrieval: Use machine learning models and some hand-written rules to return a small set of items that best match the current query. This set is the candidate set for the final recommendation list.
- Ranking:
  - Collect more detailed user characteristics, such as:
    - User features (age, gender, language, ethnicity, etc.)
    - Contextual features (device, time, etc.)
    - Impression features (display features: app age, historical statistics of the app, etc.)
  - Feed the features into the Wide and Deep parts for training. During training, compute the gradient of the final loss and backpropagate it to both the Wide and Deep parts, each of which updates its own parameters. (The wide component only needs to compensate for the weaknesses of the deep component, so it needs only a small number of cross-product feature transformations rather than a full-size wide model.)
    - Training uses mini-batch stochastic optimization.
    - The Wide component is learned with FTRL (Follow-the-regularized-leader) + L1 regularization.
    - The Deep component is learned using AdaGrad.
  - Recommend the TopN items after training.

Therefore, although the structure of the wide&deep model is very simple, using it well still requires a deep understanding of the business: you need to decide which features go into the wide part, which features go into the deep part, and how to choose the cross features for the wide part.
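The joint training step in the ranking stage (one loss, gradients flowing to both parts, a separate update rule per part) can be sketched in NumPy. This is a toy illustration under several assumptions: the data is synthetic, the deep part has a single hidden layer, and the wide part uses plain SGD as a stand-in for FTRL with L1, which keeps the example short.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (hypothetical): two binary wide features plus their cross product,
# and two numerical deep features. The label follows the crossed feature.
n = 256
x_bin = rng.integers(0, 2, size=(n, 2)).astype(float)
cross = x_bin[:, :1] * x_bin[:, 1:]
X_wide = np.hstack([x_bin, cross])                 # [x, phi(x)]
X_deep = rng.normal(size=(n, 2))
y = cross[:, 0]

# Wide part: linear weights. Deep part: one hidden ReLU layer.
w_wide = np.zeros(3)
b = np.zeros(1)
W1 = rng.normal(scale=0.1, size=(2, 4))
b1 = np.zeros(4)
w_deep = rng.normal(scale=0.1, size=4)
deep_params = [W1, b1, w_deep]
adagrad_acc = [np.zeros_like(p) for p in deep_params]  # AdaGrad state

def forward(Xw, Xd):
    h = np.maximum(Xd @ W1 + b1, 0.0)
    logit = Xw @ w_wide + h @ w_deep + b
    return h, 1.0 / (1.0 + np.exp(-logit))

def log_loss(p, t):
    p = np.clip(p, 1e-7, 1 - 1e-7)
    return float(-np.mean(t * np.log(p) + (1 - t) * np.log(1 - p)))

initial_loss = log_loss(forward(X_wide, X_deep)[1], y)

batch_size = 32
for epoch in range(20):
    for start in range(0, n, batch_size):            # mini-batches
        sl = slice(start, start + batch_size)
        Xw, Xd, t = X_wide[sl], X_deep[sl], y[sl]
        h, p = forward(Xw, Xd)
        d_logit = (p - t) / len(t)                   # gradient of the joint loss
        # The same error signal is backpropagated into both parts.
        g_wide = Xw.T @ d_logit
        g_b = d_logit.sum()
        g_w_deep = h.T @ d_logit
        d_h = np.outer(d_logit, w_deep) * (h > 0)
        g_W1 = Xd.T @ d_h
        g_b1 = d_h.sum(axis=0)
        # Wide part: plain SGD (stand-in for FTRL + L1).
        w_wide -= 0.5 * g_wide
        b -= 0.5 * g_b
        # Deep part: AdaGrad.
        for param, acc, g in zip(deep_params, adagrad_acc, [g_W1, g_b1, g_w_deep]):
            acc += g * g
            param -= 0.05 * g / (np.sqrt(acc) + 1e-8)

final_loss = log_loss(forward(X_wide, X_deep)[1], y)
print(initial_loss, final_loss)
```

Because the label here depends only on the crossed feature, most of the improvement comes from the wide part, which is the kind of rule the wide component is meant to memorize.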

5. Hands-on code

The hands-on code is divided into two parts. The first part uses the wide&deep model that is already packaged in tensorflow, mainly to get familiar with the overall structure of model training. The second part implements wide&deep with keras in tensorflow, mainly to work through as many details of the model as possible.

Tensorflow built-in WideDeepModel

The Wide-Deep model is built into the Tensorflow library. To see how it is implemented, view the source code (link 1). The explanation below follows the sample code on the Tensorflow official website (link 2). The data set used here is available for download (link 3).

First look at the overall signature:

tf.keras.experimental.WideDeepModel(
    linear_model, dnn_model, activation=None, **kwargs
)

It is easy to see that this call splices linear_model and dnn_model together, which corresponds to the final combination step of Wide&Deep. For example, linear_model and dnn_model can each be given the simplest possible implementation:

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras.experimental import LinearModel, WideDeepModel

linear_model = LinearModel()
dnn_model = keras.Sequential([keras.layers.Dense(units=64),
                              keras.layers.Dense(units=1)])
combined_model = WideDeepModel(linear_model, dnn_model)
# One optimizer per sub-model: 'sgd' for the linear part, 'adam' for the DNN part
combined_model.compile(optimizer=['sgd', 'adam'], loss='mse', metrics=['mse'])

# define dnn_inputs and linear_inputs as separate numpy arrays or
# a single numpy array if dnn_inputs is same as linear_inputs.
# (linear_inputs, dnn_inputs, y and epochs are placeholders to be defined by the reader)
combined_model.fit([linear_inputs, dnn_inputs], y, epochs=epochs)

# or define a single `tf.data.Dataset` that contains a single tensor or
# separate tensors for dnn_inputs and linear_inputs.
dataset = tf.data.Dataset.from_tensors(((linear_inputs, dnn_inputs), y))
combined_model.fit(dataset, epochs=epochs)

The first step here directly instantiates the LinearModel from keras.experimental, the second step builds a simple fully connected neural network, and the third step uses WideDeepModel to splice the two models from the first two steps together into the final combined_model. Then come the usual compile and fit calls.

In addition, the linear model and the DNN model can be trained separately before joint training:

linear_model = LinearModel()
linear_model.compile('adagrad', 'mse')
linear_model.fit(linear_inputs, y, epochs=epochs)
dnn_model = keras.Sequential([keras.layers.Dense(units=1)])
dnn_model.compile('rmsprop', 'mse')
dnn_model.fit(dnn_inputs, y, epochs=epochs)
combined_model = WideDeepModel(linear_model, dnn_model)
combined_model.compile(optimizer=['sgd', 'adam'], loss='mse', metrics=['mse'])
combined_model.fit([linear_inputs, dnn_inputs], y, epochs=epochs)

Here the first three lines of code train a linear model, the middle three lines train a DNN model, and the last three lines train the two models jointly. That completes the use of Tensorflow's WideDeepModel. Each function has further parameters that we will not elaborate on here; readers can look them up on the tensorflow official website if necessary. The source code of this part is on Tensorflow's Github (link 1).

Implementing the wide&deep model with Tensorflow

This part transforms the raw features and performs a series of feature operations: selecting the deep features and the wide features, and crossing features. The model is likewise divided into a wide part and a deep part. Compared with using the built-in tensorflow model directly, this version exposes more detail, so you can understand the model more deeply.
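The feature operations mentioned above (discretizing a numerical column, crossing two categorical columns, and one-hot encoding for the wide part) can be tried on a tiny hand-made table first. The three rows below are invented for illustration, and the bin edges are just one possible choice.

```python
import pandas as pd

# Three made-up rows with the same kind of columns as the census-style data
df = pd.DataFrame({
    "age": [22, 40, 70],
    "education": ["Bachelors", "HS-grad", "Bachelors"],
    "occupation": ["Sales", "Sales", "Tech-support"],
})

# Discretize a numerical column into buckets: (0, 25], (25, 65], (65, 90]
df["age_group"] = pd.cut(df["age"], bins=[0, 25, 65, 90], labels=[0, 1, 2]).astype(int)

# Cross two categorical columns by joining their values into one new category
df["education_occupation"] = df["education"] + "-" + df["occupation"]

# One-hot encode the categorical and crossed columns for the wide part
wide = pd.get_dummies(df[["education", "education_occupation"]])
print(list(wide.columns))
```

Each distinct value of the crossed column becomes its own 0/1 column, which is exactly the AND-style cross feature described in section 3.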

To keep the implementation simple, the wide and deep parts are optimized here with the same optimizer. For details, refer to the comments in the code.

Code

import numpy as np
import pandas as pd
from sklearn.preprocessing import LabelEncoder, StandardScaler
from tensorflow.keras.layers import (Dense, Dropout, Embedding, Flatten, Input,
                                     Reshape, concatenate)
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.regularizers import l2, l1_l2


# Read the data and convert the label into 0/1
def get_data():
    COLUMNS = ["age", "workclass", "fnlwgt", "education", "education_num",
               "marital_status", "occupation", "relationship", "race", "gender",
               "capital_gain", "capital_loss", "hours_per_week", "native_country",
               "income_bracket"]
    df_train = pd.read_csv("./data/adult_train.csv", names=COLUMNS)
    df_test = pd.read_csv("./data/adult_test.csv", names=COLUMNS)
    df_train['income_label'] = (df_train["income_bracket"].apply(lambda x: ">50K" in x)).astype(int)
    df_test['income_label'] = (df_test["income_bracket"].apply(lambda x: ">50K" in x)).astype(int)
    return df_train, df_test


# Feature processing is split into a wide part and a deep part
def data_process(df_train, df_test):
    # Discretize the age feature
    age_groups = [0, 25, 65, 90]
    age_labels = range(len(age_groups) - 1)
    df_train['age_group'] = pd.cut(df_train['age'], age_groups, labels=age_labels)
    df_test['age_group'] = pd.cut(df_test['age'], age_groups, labels=age_labels)

    # Raw features and crossed features of the wide part
    wide_cols = ['workclass', 'education', 'marital_status', 'occupation',
                 'relationship', 'race', 'gender', 'native_country', 'age_group']
    x_cols = (['education', 'occupation'], ['native_country', 'occupation'])

    # The deep part has two kinds of features: numerical features (fed to the network directly)
    # and categorical features (fed to the network only after an embedding)
    embedding_cols = ['workclass', 'education', 'marital_status', 'occupation',
                      'relationship', 'race', 'gender', 'native_country']
    cont_cols = ['age', 'capital_gain', 'capital_loss', 'hours_per_week']

    # Label column
    target = 'income_label'
    return df_train, df_test, wide_cols, x_cols, embedding_cols, cont_cols, target


def process_wide_feats(df_train, df_test, wide_cols, x_cols, target):
    # Merge train and test data so they are encoded together
    df_train['IS_TRAIN'] = 1
    df_test['IS_TRAIN'] = 0
    df_wide = pd.concat([df_train, df_test], ignore_index=True)

    # Categorical features are object columns; age_group (from pd.cut) is a category column
    categorical_columns = list(df_wide.select_dtypes(include=['object', 'category']).columns)

    # Build the crossed features
    crossed_columns_d = []
    for f1, f2 in x_cols:
        col_name = f1 + '_' + f2
        crossed_columns_d.append(col_name)
        df_wide[col_name] = df_wide[[f1, f2]].apply(lambda x: '-'.join(x), axis=1)

    # All features of the wide part (new list, so the caller's list is not modified)
    wide_cols = wide_cols + crossed_columns_d
    df_wide = df_wide[wide_cols + [target, 'IS_TRAIN']]

    # One-hot encode the categorical features of the wide part
    dummy_cols = [c for c in wide_cols if c in categorical_columns + crossed_columns_d]
    df_wide = pd.get_dummies(df_wide, columns=dummy_cols)

    # Split back into train and test data
    train = df_wide[df_wide.IS_TRAIN == 1].drop('IS_TRAIN', axis=1)
    test = df_wide[df_wide.IS_TRAIN == 0].drop('IS_TRAIN', axis=1)

    cols = [c for c in train.columns if c != target]
    X_train = train[cols].values.astype('float32')
    y_train = train[target].values.reshape(-1, 1)
    X_test = test[cols].values.astype('float32')
    y_test = test[target].values.reshape(-1, 1)
    return X_train, y_train, X_test, y_test


def process_deep_feats(df_train, df_test, embedding_cols, cont_cols, target, emb_dim=8, emb_reg=1e-3):
    # Mark train and test rows so they can be separated again after feature processing
    df_train['IS_TRAIN'] = 1
    df_test['IS_TRAIN'] = 0
    df_deep = pd.concat([df_train, df_test], ignore_index=True)

    # Numerical features plus embedding features
    deep_cols = embedding_cols + cont_cols
    df_deep = df_deep[deep_cols + [target, 'IS_TRAIN']].copy()

    # Standardize numerical features: fit on the training rows, apply to all rows
    scaler = StandardScaler()
    scaler.fit(df_train[cont_cols])
    df_deep[cont_cols] = scaler.transform(df_deep[cont_cols])

    # Encode categorical features as integer ids
    unique_vals = dict()
    lbe = LabelEncoder()
    for feats in embedding_cols:
        df_deep[feats] = lbe.fit_transform(df_deep[feats])
        unique_vals[feats] = df_deep[feats].nunique()

    # Build the input layers and embedding layers. Continuous features have no embedding,
    # but for uniformity a Reshape layer plays the role of their "embedding" layer
    inp_layer = []
    emb_layer = []
    for ec in embedding_cols:
        layer_name = ec + '_inp'
        inp = Input(shape=(1,), dtype='int64', name=layer_name)
        emb = Embedding(unique_vals[ec], emb_dim, embeddings_regularizer=l2(emb_reg))(inp)
        inp_layer.append(inp)
        emb_layer.append(emb)  # append the embedding output, not the raw input
    for cc in cont_cols:
        layer_name = cc + '_inp'
        inp = Input(shape=(1,), dtype='float32', name=layer_name)  # standardized values are floats
        emb = Reshape((1, 1))(inp)
        inp_layer.append(inp)
        emb_layer.append(emb)

    # Split back into train and test data
    train = df_deep[df_deep.IS_TRAIN == 1].drop('IS_TRAIN', axis=1)
    test = df_deep[df_deep.IS_TRAIN == 0].drop('IS_TRAIN', axis=1)

    # Extract the features: one array of shape (n, 1) per input layer
    X_train = [train[c].values.reshape(-1, 1) for c in deep_cols]
    y_train = np.array(train[target].values).reshape(-1, 1)
    X_test = [test[c].values.reshape(-1, 1) for c in deep_cols]
    y_test = np.array(test[target].values).reshape(-1, 1)
    return X_train, y_train, X_test, y_test, emb_layer, inp_layer


def wide_deep(df_train, df_test, wide_cols, x_cols, embedding_cols, cont_cols, target):
    # Feature processing for the wide part
    X_train_wide, y_train_wide, X_test_wide, y_test_wide = \
        process_wide_feats(df_train, df_test, wide_cols, x_cols, target)

    # Feature processing for the deep part
    X_train_deep, y_train_deep, X_test_deep, y_test_deep, deep_inp_embed, deep_inp_layer = \
        process_deep_feats(df_train, df_test, embedding_cols, cont_cols, target)

    # Combine wide and deep inputs
    X_tr_wd = [X_train_wide] + X_train_deep
    Y_tr_wd = y_train_deep  # the wide and deep parts share the same labels
    X_te_wd = [X_test_wide] + X_test_deep
    Y_te_wd = y_test_deep   # the wide and deep parts share the same labels

    # Input of the wide part
    w = Input(shape=(X_train_wide.shape[1],), dtype='float32', name='wide')

    # Network structure of the deep part
    d = concatenate(deep_inp_embed)
    d = Flatten()(d)
    d = Dense(50, activation='relu', kernel_regularizer=l1_l2(l1=0.01, l2=0.01))(d)
    d = Dropout(0.5)(d)
    d = Dense(20, activation='relu', name='deep')(d)
    d = Dropout(0.5)(d)

    # Concatenate the wide input with the deep output, then apply one dense layer with a sigmoid
    wd_inp = concatenate([w, d])
    wd_out = Dense(Y_tr_wd.shape[1], activation='sigmoid', name='wide_deep')(wd_inp)

    # Build the model; note that its inputs consist of the wide input plus the deep inputs
    model = Model(inputs=[w] + deep_inp_layer, outputs=wd_out)
    # Set the learning rate explicitly (0.001 is also the Keras default for Adam)
    model.compile(optimizer=Adam(learning_rate=0.001), loss='binary_crossentropy', metrics=['AUC'])

    # Train the model
    model.fit(X_tr_wd, Y_tr_wd, epochs=5, batch_size=128)

    # Evaluate on the test set
    results = model.evaluate(X_te_wd, Y_te_wd)
    print("\n", results)


if __name__ == '__main__':
    # Read the data
    df_train, df_test = get_data()

    # Feature processing
    df_train, df_test, wide_cols, x_cols, embedding_cols, cont_cols, target = data_process(df_train, df_test)

    # Model training
    wide_deep(df_train, df_test, wide_cols, x_cols, embedding_cols, cont_cols, target)

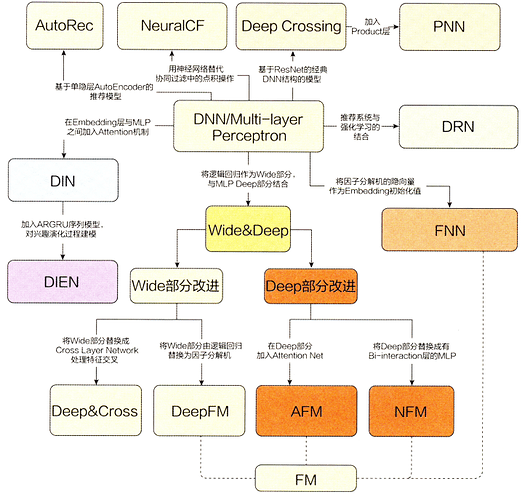

6. The development of deep learning recommendation system

As mentioned in the introduction, the Wide&Deep model plays a very important role in the development of deep learning recommendation systems. The figure shows its influence on the models that came after it.