1 Introduction

Memory management is one of the most important parts of the Linux kernel. Let's work through it together today.

When learning something new, a good approach is to first understand why the technology emerged, what alternatives existed at the time, what problems it solves, and where it still falls short or has since been improved. That way the whole learning process forms a closed loop. Personally, I think this is a good way to learn.

Everything is connected. Many problems in computer science have prototypes in real life, so I think most computer scientists are good at observing life and drawing conclusions from it. Human society is a complex machine, full of mechanisms and rules, so instead of diving straight into the ocean of code, it is sometimes better to step back into everyday life first, find the prototype, and then explore the code. This can lead to a deeper understanding.

Linux memory management is a vast subject, and this article can only show you the tip of the iceberg, one step at a time. Through this article, you will learn the following:

- Why memory needs to be managed

- The segment-page management mechanism in Linux

- How memory fragmentation arises

- The basic principles of the buddy system

- Advantages and disadvantages of the buddy system

- The basic principles of the slab allocator

2 Why memory needs to be managed

Lao Tzu famously advocated governing by doing nothing: put simply, things can run in an orderly way without much intervention, relying on self-discipline. The ideal is beautiful, but reality is cruel.

Managing memory in a primitive, simple way causes several problems in a Linux system. Let's look at a few scenarios.

2.1 Problems with memory management

Process space isolation problem

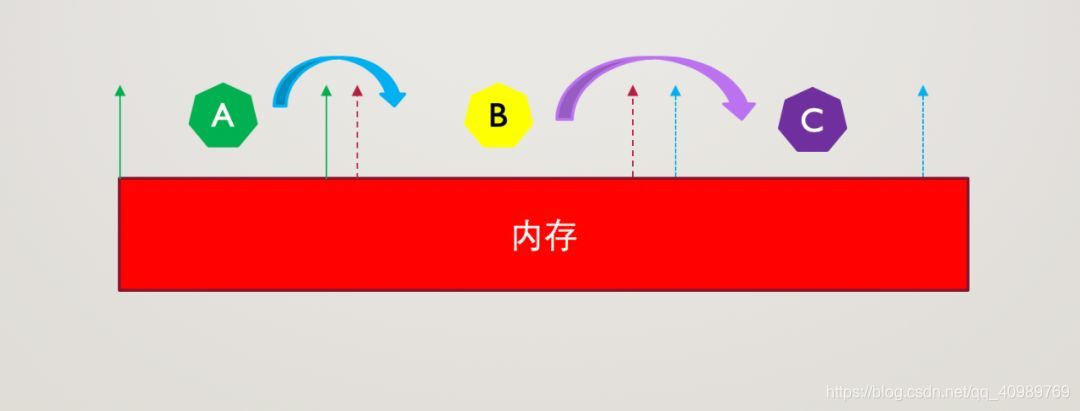

Suppose three processes A, B, and C are running in memory on Linux, and the OS assigns process A the address range 0-20M, process B the range 30-80M, and process C the range 90-120M, as shown in the figure.

Programs may then access memory they do not own. For example, process A might access the space belonging to process B, or process B the space belonging to process C, and might even modify values there. This leads to confusion and errors, so in practice it must not be allowed to happen.

Memory efficiency and memory shortage problems

A machine's memory is a limited resource, while the number of processes cannot be known in advance. If the processes already started occupy all of memory at some point, a new process cannot be started because there is no memory left to allocate. Yet we often observe that some of those processes are sleeping, so their memory is sitting unused, which is inefficient. We therefore need a manager that can free up memory that is not in use. Contiguous memory is also especially precious: in many cases we cannot allocate contiguous memory efficiently and in time. Virtualizing memory and allowing it to be scattered (non-contiguous) can therefore improve memory utilization.

Program location, debugging, compilation, and execution problems

Because the location of a program in memory is not known until it runs, locating problems, debugging code, and compiling and executing programs all become harder. We would like every process to have a consistent, complete address space in which the heap, stack, code segment, and so on start at the same positions, which simplifies the work of the linker and loader during compilation and execution.

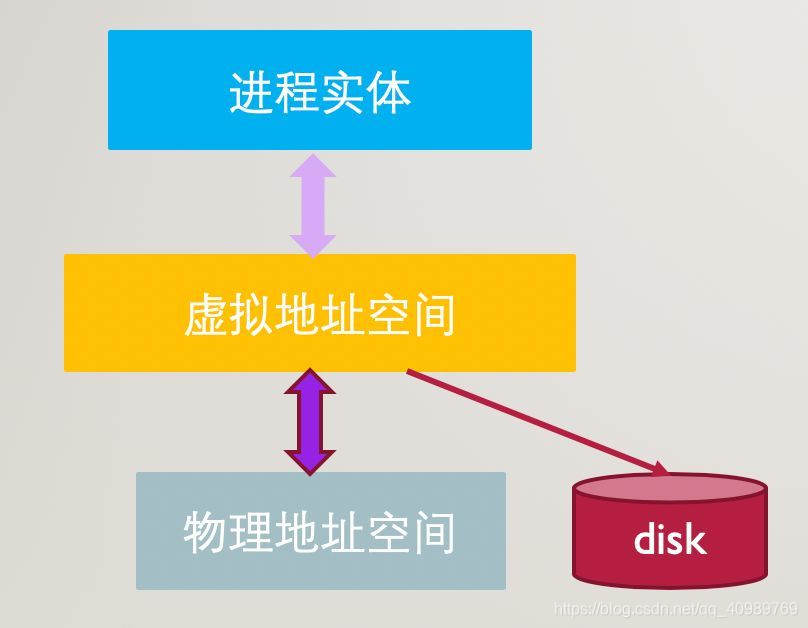

2.2 Virtual address space

To solve the problems above, Linux introduces the concept of a virtual address space. Virtualization is closely tied to hardware; it is the result of software and hardware working together. The virtual address space is an intermediate layer added between the program and physical memory, and it is the focus of memory management.

The disk, as large-capacity storage, also takes part in running programs as a kind of "memory". The memory management system pages out memory that is inactive and rarely used, while active data stays in memory. In this sense memory can be seen as a cache for the disk. This indirectly extends the limited physical memory and is called virtual memory, as opposed to physical memory.

3. Segment-page management mechanism

This article does not go into the details of segmented and paged memory management. Many excellent articles cover them, and interested readers can find them with a quick search.

The segment-page mechanism did not appear overnight. Memory management went through pure physical segmentation, pure paging, and pure logical segmentation before evolving into a scheme that combines segmentation and paging. The combination keeps the advantages of both and avoids the shortcomings of either model alone, making it a better management model.

This article only explains the general concepts of the segment-page mechanism, which combines segment management and paging management. Segment management is a logical management method, and paging management is a physical management method.

Many technologies and implementations in computing have counterparts in real life. That is probably what people mean when they say that art and technology come from life.

Here is an example:

Household registration uses the concepts of districts, counties, and cities, but no such physical entities exist. They are logical units, and adding these administrative units makes address management more straightforward.

For residents, the only real entity is their own house. It is a physical unit that actually exists, and it is also the most basic unit.

Applied to segment-page management in Linux, segments are logical units, comparable to districts, counties, and cities, while pages are physical units, comparable to a housing estate or a house. Seen this way, the mechanism is much easier to grasp.

Multi-level page tables are also easy to understand. If the total physical memory is 4GB and the page size is 4KB, there are 2^20 pages in total, which is a very large number. Indexing them with a single flat table of page numbers is inconvenient, so multi-level page tables are introduced to reduce storage and simplify management.
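As a rough illustration of the numbers above, the following Python sketch counts the pages and splits an address into two table indexes plus an offset. The 10/10/12 bit split and the 4-byte entry size are assumptions made for illustration (they follow the classic two-level layout on 32-bit x86) and are not taken from this article.

```python
PAGE_SIZE = 4 * 1024            # 4KB pages, as in the text
TOTAL_MEMORY = 4 * 1024 ** 3    # 4GB, as in the text
ENTRY_SIZE = 4                  # bytes per table entry (assumption)
ENTRIES_PER_TABLE = 1024        # 2^10 entries per table (assumption)

num_pages = TOTAL_MEMORY // PAGE_SIZE   # 2^20 pages


def split(addr):
    """Split a 32-bit address into (directory index, table index, offset)."""
    offset = addr & 0xFFF                # low 12 bits: offset inside the page
    table_index = (addr >> 12) & 0x3FF   # next 10 bits: entry in a page table
    dir_index = (addr >> 22) & 0x3FF     # top 10 bits: entry in the directory
    return dir_index, table_index, offset


def flat_table_bytes():
    """A single flat table needs one entry for every page, used or not."""
    return num_pages * ENTRY_SIZE


def two_level_bytes(tables_in_use):
    """Two levels: one directory plus only the second-level tables in use."""
    return (1 + tables_in_use) * ENTRIES_PER_TABLE * ENTRY_SIZE
```

With these assumed sizes, a flat table always costs 4MB, while a process that only touches three second-level tables needs 16KB of tables. That saving is the main reason for the extra level.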

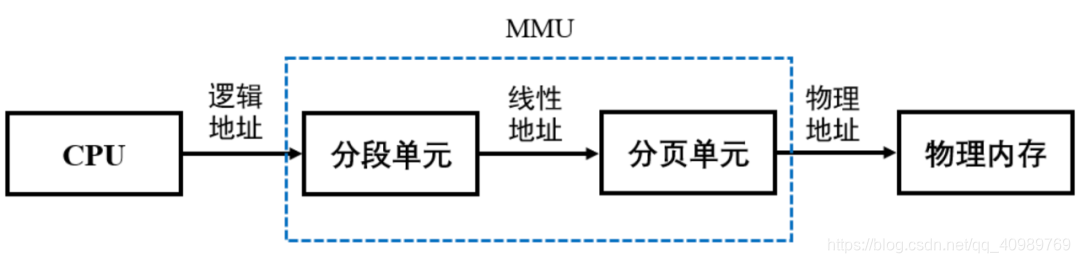

The figure gives a simplified view of the mapping between logical addresses and physical addresses supported by the segment-page mechanism, that is, the correspondence between virtual addresses and physical addresses.

The Memory Management Unit (MMU) is a hardware component that mainly maps virtual addresses to physical addresses.

MMU workflow: the CPU generates a logical address and passes it to the segmentation unit, which converts it into a linear address. The linear address is then passed to the paging unit, which converts it into a physical memory address according to the page table mapping. A page fault may occur during this step.

A page fault occurs when software tries to access a virtual address and, during segment-page translation to a physical address, the page turns out not to be in memory. The CPU then raises an exception, and the kernel either loads the page into memory or allocates memory for it. If an error is detected instead, the access may simply be aborted.
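The translation steps and the page fault path can be sketched as a toy model in Python. Everything here (`ToyMMU`, `PageFault`, the single-level dictionary page table, handing out frames in order) is a hypothetical simplification for illustration; a real MMU does this in hardware and the kernel's fault handler is far more involved.

```python
PAGE_SIZE = 4096


class PageFault(Exception):
    """Raised when a virtual page has no physical frame mapped."""


class ToyMMU:
    def __init__(self, num_frames):
        self.page_table = {}                      # virtual page number -> frame number
        self.free_frames = list(range(num_frames))
        self.faults = 0

    def translate(self, segment_base, offset):
        linear = segment_base + offset            # segmentation: logical -> linear
        vpn, page_offset = divmod(linear, PAGE_SIZE)
        if vpn not in self.page_table:            # paging: linear -> physical
            raise PageFault(vpn)
        return self.page_table[vpn] * PAGE_SIZE + page_offset

    def access(self, segment_base, offset):
        """Translate an address, handling a page fault by mapping a free frame."""
        try:
            return self.translate(segment_base, offset)
        except PageFault as fault:
            self.faults += 1
            if not self.free_frames:
                raise MemoryError("no free physical frame") from fault
            self.page_table[fault.args[0]] = self.free_frames.pop(0)
            return self.translate(segment_base, offset)   # retry the access
```

The first access to a page takes the fault path and gets a frame; later accesses to the same page translate directly without another fault.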

4. Physical memory and memory fragmentation

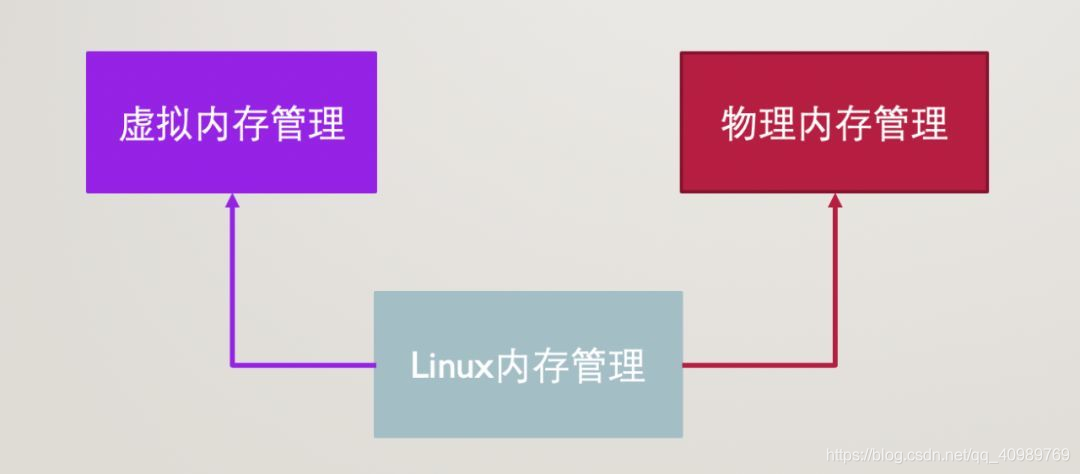

The segment-page mechanism described above belongs to the virtual address space side, but another important part of Linux memory management is managing physical memory, that is, how physical memory is allocated and reclaimed. This involves memory allocation algorithms and allocators.

4.1 Physical memory allocator

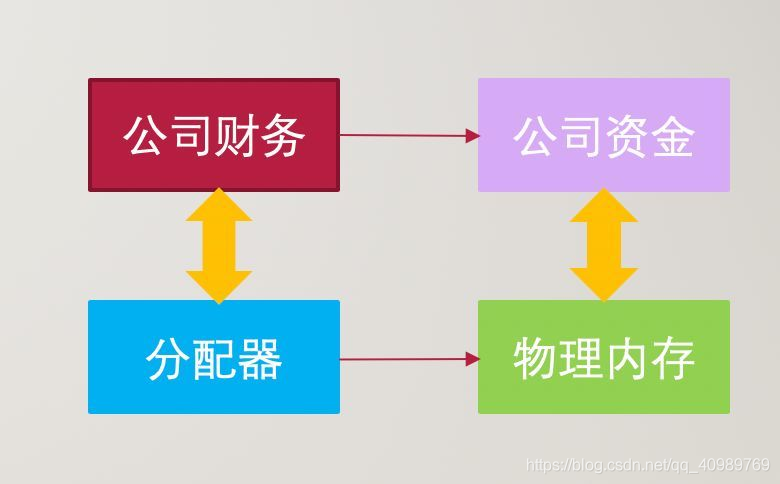

Allocators and allocation algorithms are like a company's finance department, and memory is like the company's funds. Using funds sensibly is the job of finance, and using physical memory sensibly is the job of the allocator.

4.2 Classification and Mechanism of Memory Fragmentation

If you are not sure what memory fragmentation is, think about the "fragmented time" we often talk about: time that is free but cannot be put to use. The same is true for memory.

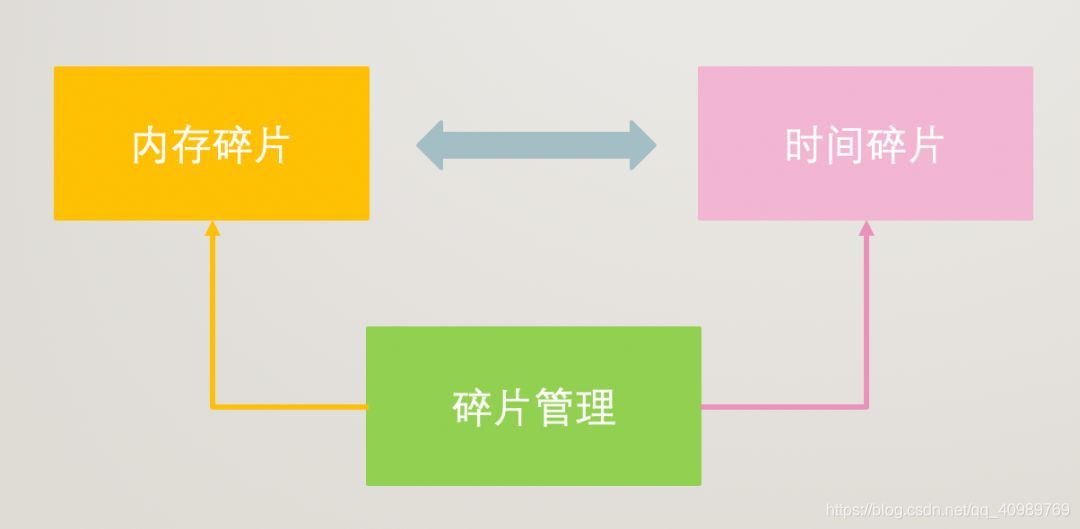

Neither time nor memory can be used effectively once it is fragmented, so managing memory sensibly and reducing fragmentation is crucial. This is also the focus of physical memory allocation algorithms and allocators.

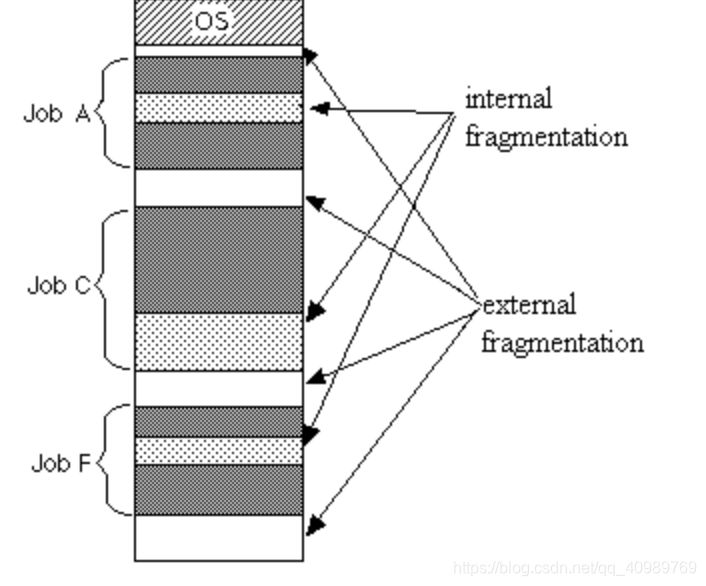

Depending on where the fragments are and how they arise, memory fragmentation is divided into external fragmentation and internal fragmentation. Let's look at a visual illustration of the two:

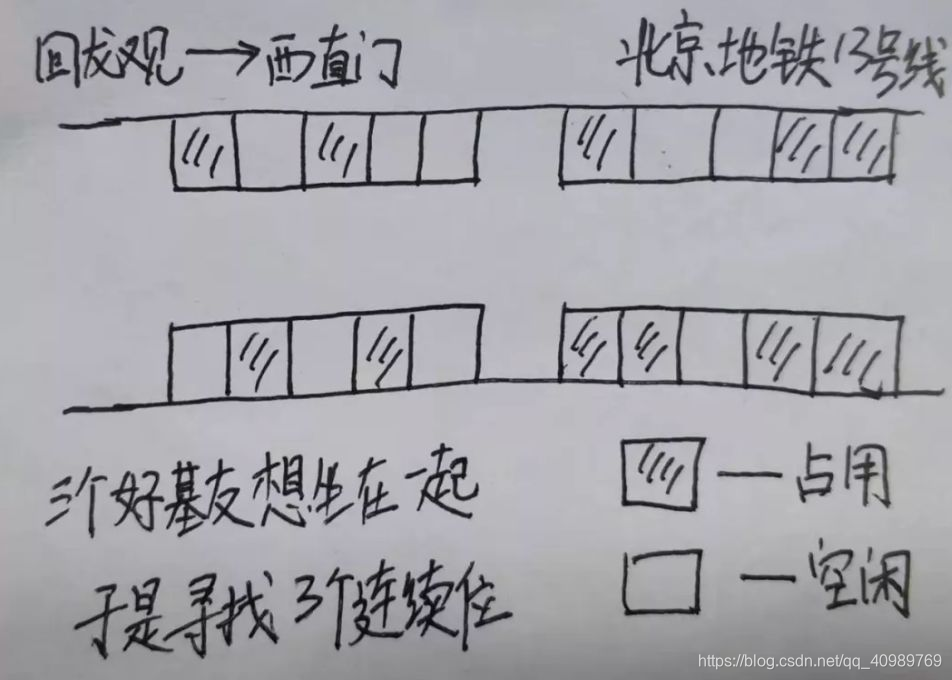

As the figure shows, external fragments are unallocated memory regions between processes. External fragmentation is directly related to processes frequently allocating and releasing memory. This is easy to understand: simulate processes that allocate different amounts of space and release them at different times, and you can see the external fragments appear.

Internal fragmentation arises mainly because the allocator's granularity and certain address constraints make the memory actually allocated larger than the memory requested, leaving unused holes inside a process's memory.
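The simulation suggested above can be sketched in a few lines of Python. Memory is modelled as a list of 16 units, and the first-fit placement policy, the process names, and the sizes are all assumptions chosen for illustration.

```python
def first_fit(memory, owner, size):
    """Place `size` contiguous units for `owner`; return the start index or None."""
    run = 0
    for i, cell in enumerate(memory):
        run = run + 1 if cell is None else 0
        if run == size:
            start = i - size + 1
            memory[start:i + 1] = [owner] * size
            return start
    return None


def release(memory, owner):
    """Free every unit that belongs to `owner`."""
    for i, cell in enumerate(memory):
        if cell == owner:
            memory[i] = None


def largest_free_run(memory):
    """Length of the longest run of contiguous free units."""
    best = run = 0
    for cell in memory:
        run = run + 1 if cell is None else 0
        best = max(best, run)
    return best


memory = [None] * 16
for name, size in [("A", 4), ("B", 2), ("C", 4), ("D", 2), ("E", 4)]:
    first_fit(memory, name, size)

release(memory, "B")                   # frees units 4-5
release(memory, "D")                   # frees units 10-11

free_total = memory.count(None)        # total free units
biggest_hole = largest_free_run(memory)
placed = first_fit(memory, "F", 3)     # a request for 3 contiguous units
```

After the two releases, four units are free in total, but the largest hole is only two units wide, so the request for three contiguous units fails. The free memory exists, it is just scattered.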
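A small arithmetic sketch makes the granularity effect concrete. It assumes a hypothetical allocator that only hands out whole 4KB pages; the function names are made up for this example.

```python
PAGE_SIZE = 4096  # allocation granularity assumed for this sketch


def pages_needed(request_bytes):
    """Round a request up to a whole number of pages (ceiling division)."""
    return -(-request_bytes // PAGE_SIZE)


def internal_waste(request_bytes):
    """Bytes allocated but not requested, i.e. internal fragmentation."""
    return pages_needed(request_bytes) * PAGE_SIZE - request_bytes
```

Under this assumption a 100-byte request wastes 3996 bytes of its page, and a request of 4097 bytes needs two pages and wastes 4095 bytes.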

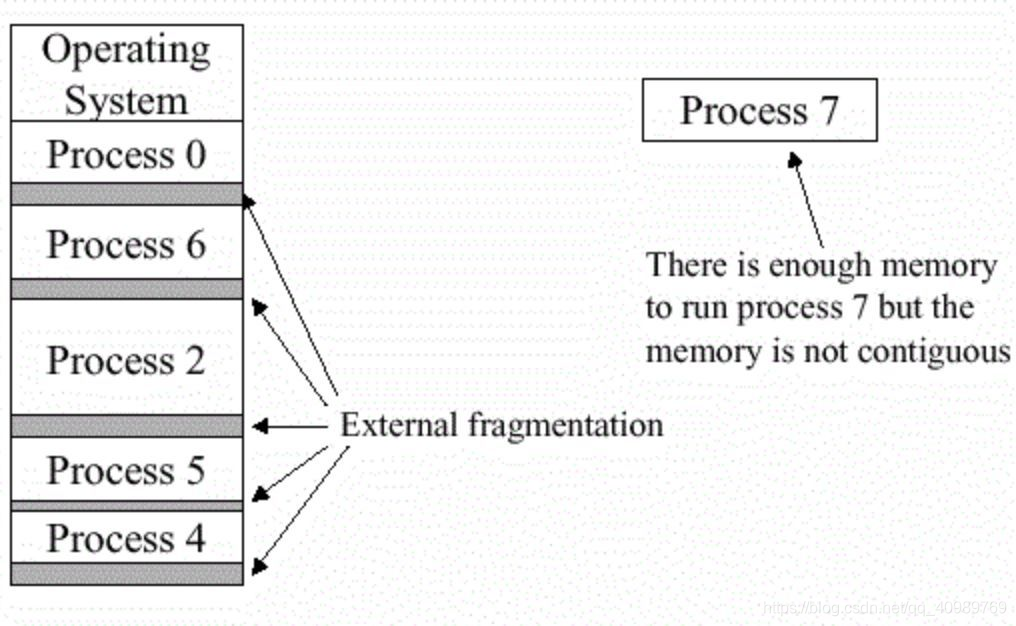

Although virtual addresses let the memory used by a process be scattered across physical memory, a process often still needs a certain amount of contiguous physical memory. If there are many fragments, the process may fail to start. In the figure, Process7 needs a block of contiguous physical memory, but it cannot be allocated:

If this is still not clear, imagine going to the cafeteria or taking a bus with a few friends. If the bus has no three adjacent seats, you must either sit apart or stand:

5. Basic principles of buddy system algorithm

5.1 Some preparatory knowledge

Physical page frame

Linux divides physical memory into pages. The page size can differ across software and hardware. The Linux kernel commonly uses 4KB pages, and some configurations may use larger or smaller ones, but the size chosen is always a practical trade-off. It is like bread: loaves come in different sizes, not one uniform size.

Page frame record structure

To track the usage of physical pages, the kernel keeps a data structure, struct page, that records each page's location, usage, and so on. It acts as the kernel's ledger for managing memory pages.

Delayed allocation and real-time allocation

Linux distinguishes kernel mode from user mode. Memory requests from kernel mode are satisfied immediately, and are assumed to be reasonable. Memory requests from user mode, however, are deferred as long as possible before physical memory is allocated: a user-mode process first obtains only a virtual memory area, and gets real physical memory at run time through page faults. This is why what malloc returns is only virtual memory, not real physical memory.
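The gap between what a user-mode process reserves and what it actually occupies can be modelled with a toy class. `LazyRegion` is a hypothetical name and this is only a model of the idea, not how malloc or the kernel is implemented.

```python
PAGE_SIZE = 4096


class LazyRegion:
    """A reserved virtual region whose pages get backing only on first touch."""

    def __init__(self, size_bytes):
        self.num_pages = -(-size_bytes // PAGE_SIZE)   # round up to whole pages
        self.resident = set()                          # pages with physical backing

    def touch(self, offset):
        """Access one byte; return True if this access caused a page fault."""
        if not 0 <= offset < self.num_pages * PAGE_SIZE:
            raise IndexError("access outside the reserved region")
        page = offset // PAGE_SIZE
        faulted = page not in self.resident
        self.resident.add(page)
        return faulted

    @property
    def virtual_bytes(self):
        return self.num_pages * PAGE_SIZE

    @property
    def resident_bytes(self):
        return len(self.resident) * PAGE_SIZE
```

Reserving 1MB and then touching two different pages leaves the region with 1MB of virtual size but only 8KB resident, which mirrors the delayed allocation described above.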

5.2 Introduction to the buddy system

The first time I heard the name of this algorithm, I was curious why it is called the buddy system. Let's uncover the secret together.

What problem does the buddy system solve?

The buddy system algorithm is a powerful tool against external fragmentation. Put simply, for workloads that frequently request and release groups of contiguous page frames of different sizes, it provides a management mechanism that allocates and reclaims them efficiently and reduces external fragmentation.

The first idea for solving external fragmentation: use a new technique to map the existing, non-contiguous free memory into a contiguous linear space. This treats the fragments after they appear rather than reducing how many are generated, and it does not help when contiguous physical memory is actually required.

The second idea: keep track of these small, non-contiguous free blocks, and when a new allocation request arrives, search them for a suitable block instead of carving memory out of a fresh area. It feels a bit like turning waste into treasure, and is actually very familiar: when you want to open a pack of biscuits, your mother will certainly tell you to finish the half-eaten pack first.

Based on a number of other considerations, the Linux kernel chose the second idea to deal with external fragmentation.

The definition of partner memory block

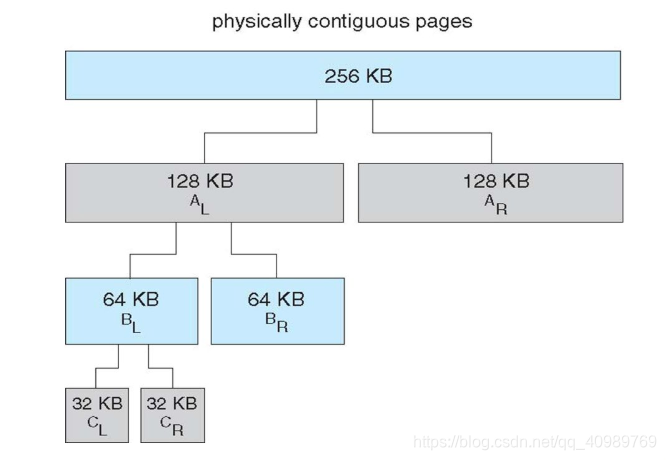

In the partner system, two memory areas with the same size and continuous physical addresses are called partners. The requirements for continuous addresses are actually more stringent, but this is also the key to the algorithm, because such two memory areas can Merge into a larger area.

The core idea of the

buddy system The buddy system manages continuous physical page frames of different sizes, allocates from the closest page frame size when applying, and disassembles the rest, and merges the memory with the partnership into Large page frame.

5.3 The basic process of the

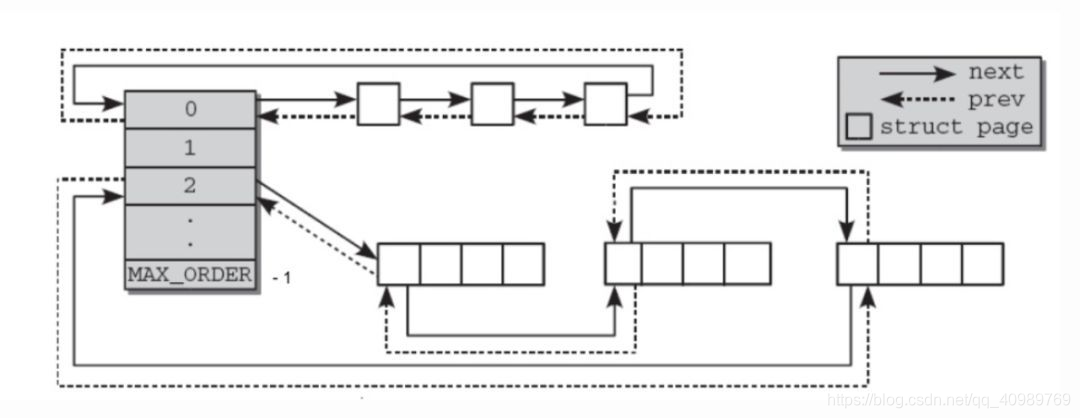

buddy system The buddy system maintains a total of 11 block linked lists with n=0~10, and each block linked list contains 2^n consecutive physical pages. When n=10, 1024 4KB pages correspond to 4MB contiguous physical memory blocks, where n is called order. In the partner system, the order is 0-10, that is, the smallest is 4KB, and the largest memory block is 4MB. These physical blocks of the same size form a doubly linked list for management. The figure shows the two doubly linked lists with order=0 and order=2:
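The relationship between order and block size is simple arithmetic, sketched here in Python. The helper names `block_bytes` and `order_for` are made up for this example; the 4KB page size and the maximum order of 10 come from the text.

```python
PAGE_SIZE = 4096
MAX_ORDER = 10


def block_bytes(order):
    """Size in bytes of one block on the list for this order."""
    return (1 << order) * PAGE_SIZE


def order_for(pages):
    """Smallest order whose block holds at least `pages` page frames."""
    if not 1 <= pages <= (1 << MAX_ORDER):
        raise ValueError("request outside the range the buddy lists cover")
    return (pages - 1).bit_length()
```

A request for 3 page frames, for example, maps to order 2, a block of 4 page frames, so one page frame of that block goes unused.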

Memory application process: Assuming that a page frame block is requested, the partner system algorithm first checks whether there is a free block to be allocated in the linked list with order=0. If not, search for the next larger block, find a free block in the linked list of order=1, split 2 page frames if there is in the linked list, and allocate 1 page frame and add 1 page frame to order=0 In the linked list. If no free block is found in the linked list of order=1, it will continue to search for a larger order. If it is found for splitting, if there is no free block in the linked list of order=10, the algorithm will report an error.
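The allocation walk described above can be sketched as follows. This is a minimal model under stated assumptions, not the kernel's code: free lists are plain Python lists of starting page numbers instead of doubly linked lists of struct page, and `make_free_lists` and `alloc` are hypothetical names.

```python
MAX_ORDER = 10


def make_free_lists(free_blocks):
    """Build free_lists[order] = start pages of free blocks of 2**order pages."""
    free_lists = [[] for _ in range(MAX_ORDER + 1)]
    for order, start in free_blocks:
        free_lists[order].append(start)
    return free_lists


def alloc(free_lists, order):
    """Return the start page of a block of 2**order pages, or None on failure."""
    for current in range(order, MAX_ORDER + 1):
        if not free_lists[current]:
            continue                      # nothing here, try the next larger order
        start = free_lists[current].pop()
        while current > order:
            current -= 1
            # Split: keep the lower half, put the upper half on the smaller list.
            free_lists[current].append(start + (1 << current))
        return start
    return None                           # even the largest order is empty
```

Starting from a single free order-2 block at page 0, a request for one page splits it twice: page 0 is returned, page 1 lands on the order-0 list, and pages 2-3 land on the order-1 list.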

The process of merging memory: The process of merging memory is the embodiment of the partner block in the partner algorithm. The algorithm merges two blocks of memory with the same size A and their physical addresses into a single block with a size of 2A. The buddy algorithm is merged iteratively from the bottom up. In fact, this process is very similar to the sst merge process in leveldb. The difference is that the buddy algorithm requires memory blocks to be continuous. This process also reflects the friendliness of the partner system to large blocks of memory.
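Merging can be sketched in the same simplified style. It assumes blocks are identified by their starting page number and aligned to their own size, so the buddy of a block is found by flipping one bit of that number. `buddy_of` and `free_block` are hypothetical names, and the free lists are plain Python lists.

```python
MAX_ORDER = 10


def buddy_of(start, order):
    """Start page of the buddy of the block at `start` with 2**order pages."""
    return start ^ (1 << order)


def free_block(free_lists, start, order):
    """Free a block and merge it upward while its buddy is also free."""
    while order < MAX_ORDER:
        buddy = buddy_of(start, order)
        if buddy not in free_lists[order]:
            break                          # buddy still in use: stop merging
        free_lists[order].remove(buddy)    # take the buddy off its list
        start = min(start, buddy)          # the merged block starts at the lower one
        order += 1
    free_lists[order].append(start)
    return start, order
```

Note that under this scheme pages 1 and 2 are adjacent and the same size but are not buddies: `buddy_of(1, 0)` is 0 and `buddy_of(2, 0)` is 3. Only blocks that pair up on an aligned boundary can merge, which is part of why the buddy definition is strict.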

5.4 Advantages and disadvantages of the buddy system

The buddy system algorithm handles external fragmentation well and is friendly to large allocations, but small allocations can cause internal fragmentation. The definition of a buddy block is also very strict, and merging buddy blocks involves many linked-list operations. For frequently repeated requests, a block may be split again right after it was merged, which is wasted work. So the buddy system still has some problems.

6. Slab allocator

From the introduction above, the smallest unit the buddy system allocates is a 4KB page frame, which is very wasteful for frequently requested objects as small as a few dozen bytes. We therefore need a finer-grained allocator: the slab allocator.

The slab allocator is not separate from the buddy system; it is built on top of it. It can be seen as a second-level allocator over the buddy system that sits closer to the user. Because it is closer to the user, its structure and implementation are more complex than the buddy system's, so this article can only summarize it briefly.

Personally, I think the highlights of the slab allocator are that its smallest unit of allocation is the object, and that it returns memory lazily.

The basis of the slab allocator used by Linux is an algorithm first introduced by Jeff Bonwick for the SunOS operating system. Jeff's allocator revolves around object caching. In the kernel, a large amount of memory is allocated for a limited set of objects (such as file descriptors and other common structures). Jeff found that the time required to initialize ordinary objects in the kernel exceeds the time required to allocate and release them. Therefore, his conclusion is that the memory should not be released back to a global memory pool, but the memory should be kept in a specific initialization state.

from "Analysis of linux slab allocator"

The theoretical basis for slab's use of objects as the smallest unit is that the time to initialize a structure may exceed the time of allocation and release.

The slab allocator can be seen as a memory pre-allocation mechanism, much like a supermarket placing popular items where everyone can find them easily: the objects are prepared ahead of time so they can be handed out immediately when requested.

slabs_full: the slabs in this list are fully allocated

slabs_partial: the slabs in this list are partially allocated

slabs_empty: the slabs in this list are entirely free, that is, they can be reclaimed

As objects are allocated from and released back to slabs, slabs migrate between the lists of each kmem_cache. A slab that becomes free is not returned to the buddy system immediately, and the most recently released object is handed out first on the next allocation, in order to exploit the locality of the CPU cache. This shows how much attention to detail went into the slab allocator, but implementing this logic and maintaining several queues makes it more complex than the buddy system.

Slab is more complex than the buddy system, so this article will not go further.
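The movement of slabs between the three lists can be modelled with a small Python sketch. It is a deliberately simplified, hypothetical model: `Slab` and `KmemCache` here are toy classes, objects are just slot numbers, and creating a new `Slab` stands in for asking the buddy system for pages.

```python
class Slab:
    def __init__(self, objects_per_slab):
        self.capacity = objects_per_slab
        self.free_slots = list(range(objects_per_slab))   # used as a stack

    @property
    def in_use(self):
        return self.capacity - len(self.free_slots)


class KmemCache:
    def __init__(self, objects_per_slab):
        self.objects_per_slab = objects_per_slab
        self.full, self.partial, self.empty = [], [], []

    def alloc(self):
        if self.partial:
            slab = self.partial[-1]             # prefer a partially used slab
        else:
            # Reuse a cached empty slab, else "ask the buddy system" for a new one.
            slab = self.empty.pop() if self.empty else Slab(self.objects_per_slab)
            self.partial.append(slab)
        slot = slab.free_slots.pop()            # most recently freed slot first
        if not slab.free_slots:                 # partial -> full
            self.partial.remove(slab)
            self.full.append(slab)
        return slab, slot

    def free(self, slab, slot):
        was_full = not slab.free_slots
        slab.free_slots.append(slot)
        if was_full:                            # full -> partial
            self.full.remove(slab)
            self.partial.append(slab)
        if slab.in_use == 0:                    # partial -> empty, kept for reuse
            self.partial.remove(slab)
            self.empty.append(slab)
```

In this model a freed slot is pushed onto a stack, so the next allocation reuses it, and a slab that becomes entirely free is parked on the empty list rather than given back, which mirrors the lazy return described above.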

7. Conclusion

There really is a lot to Linux memory management, and this article is only a brief discussion. For a deeper understanding, you still need to read books on the kernel. There are no shortcuts.