Introduction to Kafka

Apache Kafka is a distributed publish-subscribe messaging system and the dominant message queue in the big data field. It was originally developed at LinkedIn using the Scala language, was open-sourced in 2011, and became a top-level Apache Software Foundation project in 2012. More than ten years later, it remains an indispensable and increasingly important component of the big data ecosystem.

Kafka is suitable for both offline and online message consumption. Messages are persisted on disk and replicated within the cluster to prevent data loss. Kafka has traditionally relied on the ZooKeeper coordination service; newer versions can also run without it in KRaft mode. It integrates well with Flink and Spark and is widely used for real-time streaming data analysis.

Kafka features:

- Reliability: data is replicated, and the cluster tolerates node failures.

- Scalability: nodes can be added to a Kafka cluster and brought online without downtime.

- Persistence: data is stored on disk and persisted.

- Performance: Kafka offers high throughput and stays stable even with terabytes of data.

- Fast speed: sequential writes and zero-copy transfer keep Kafka's latency in the millisecond range.

The underlying principle of Kafka

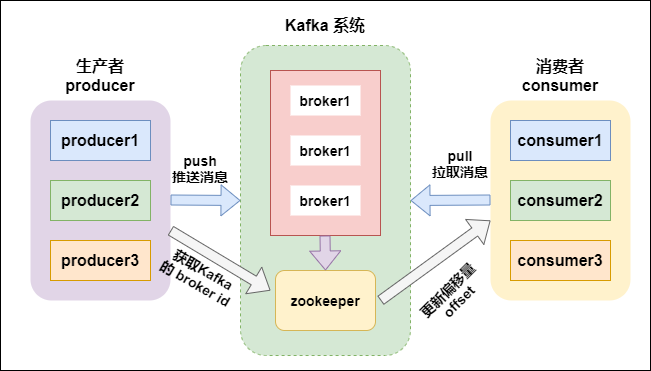

First, let's look at the architecture of the Kafka system.

Kafka architecture

Kafka supports message persistence. Consumers actively pull data from the brokers, and each consumer's state (its position, or offset) and its subscriptions are maintained on the client side. A message is not deleted immediately after it is consumed; historical messages are retained. Therefore, even when a topic has multiple subscribers, only one copy of each message needs to be stored.
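The retention and pull model described above can be sketched as follows. This is a toy in-memory model, assuming a Python list stands in for a single partition; `RetainedLog` and its methods are hypothetical and not part of any Kafka client API. It shows that each group only moves its own offset while the messages themselves stay stored once.

```python
from collections import defaultdict


class RetainedLog:
    """Toy model of one partition (hypothetical, not a Kafka API)."""

    def __init__(self):
        self.messages = []               # the single stored copy of each message
        self.offsets = defaultdict(int)  # group id -> next offset that group will read

    def append(self, message):
        self.messages.append(message)
        return len(self.messages) - 1    # offset assigned to the new message

    def poll(self, group, max_records=10):
        # Pull model: the consumer asks for data starting at its own offset.
        start = self.offsets[group]
        batch = self.messages[start:start + max_records]
        self.offsets[group] = start + len(batch)  # only this group's position moves
        return batch


log = RetainedLog()
for m in ["a", "b", "c"]:
    log.append(m)

print(log.poll("billing"))                    # ['a', 'b', 'c']
print(log.poll("analytics", max_records=2))   # ['a', 'b']
print(log.messages)                           # ['a', 'b', 'c'] (nothing deleted)
```

Because consumption never deletes anything, adding another subscriber costs only one more offset entry, not another copy of the data.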

- Broker: a Kafka cluster contains one or more service instances (nodes); each instance is called a broker (one broker is one node/server);

- Topic: every message published to the Kafka cluster belongs to a category, and this category is called a topic;

- Partition: a partition is a physical concept; each topic contains one or more partitions;

- Segment: a partition consists of multiple segment files. Each segment has two parts, a .log file and an .index file. The .index file is used for fast lookups: it maps messages to the physical positions of their data in the .log file;

- Producer: the producer of messages, responsible for publishing messages to Kafka brokers;

- Consumer: the consumer of messages, the client that reads messages from Kafka brokers;

- Consumer group: each consumer belongs to a specific consumer group (a group name can be specified for each consumer);

- .log: stores the data;

- .index: stores the index data for the .log file.

Kafka main components

1. Producer

The producer creates messages and publishes them to Kafka. The messages it produces are classified by topic and stored on Kafka brokers.

2. Topic

- Kafka categorizes messages by topic;

- Each topic is a separate category (feed) of messages processed by Kafka;

- A topic is the name under which records are published. Kafka topics are always multi-subscriber: a topic can have zero, one, or many consumers subscribed to the data written to it;

- A Kafka cluster can host a large number of topics;

- Producers and consumers usually produce and consume data at the topic level; for finer-grained control, they can work at the partition level.

3. Partition

In Kafka, a topic is a category of messages. A topic can have multiple partitions, and each partition stores part of the topic's data; the data in all partitions together makes up the topic's complete data.

A single broker can host multiple partitions; the number of partitions is independent of the number of brokers;

In Kafka, each partition is numbered, starting from 0.

Data within each partition is ordered, but ordering across the whole topic is not guaranteed. (Here, "ordered" means that messages are consumed in the same order in which they were produced.)
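A common way to rely on per-partition ordering is to route every message with the same key to the same partition. The sketch below is a simplified illustration: `choose_partition` and `produce` are hypothetical helpers, and `zlib.crc32` stands in for the murmur2 hash that Kafka's Java client uses for keyed records.

```python
import zlib
from collections import defaultdict


def choose_partition(key, num_partitions):
    # Stand-in hash: Kafka's Java client uses murmur2, but any stable
    # hash shows the idea that equal keys map to equal partitions.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def produce(records, num_partitions):
    partitions = defaultdict(list)  # partition number -> messages in append order
    for key, value in records:
        partitions[choose_partition(key, num_partitions)].append((key, value))
    return partitions


records = [("user-1", "login"), ("user-2", "login"),
           ("user-1", "click"), ("user-1", "logout")]
parts = produce(records, num_partitions=3)

# Every event for user-1 lands in one partition, in production order.
p = choose_partition("user-1", 3)
print([v for k, v in parts[p] if k == "user-1"])  # ['login', 'click', 'logout']
```

Ordering is preserved per key, but events for different keys in different partitions have no defined relative order.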

4. Consumer

Consumers read (consume) data from Kafka. Every consumer belongs to a consumer group.

5. Consumer group

A consumer group consists of one or more consumers, and within a group each message is consumed by only one consumer.

Every consumer belongs to a consumer group. If no group is specified, some tools (such as the console consumer) generate a group ID automatically.

Each consumer group has an ID, the group ID. The consumers in a group coordinate to consume all partitions of the subscribed topic. Each partition is consumed by only one consumer within the same group, but it can be consumed by several different groups.

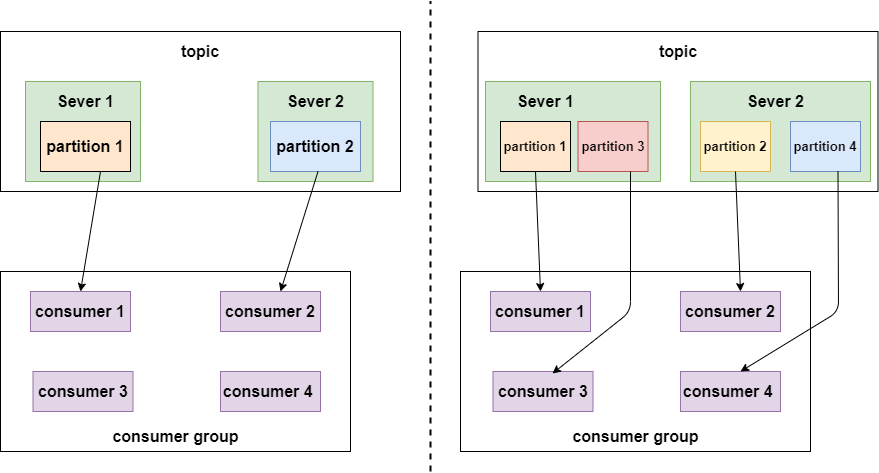

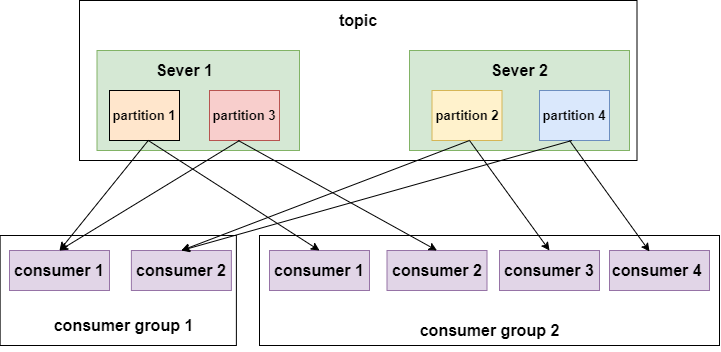

The number of partitions determines the maximum number of consumers in each consumer group that can consume concurrently, as shown below:

Example 1

As shown in the left figure above, if there are only two partitions, then even with 4 consumers in a group, two of them will be idle.

As shown in the right figure above, with 4 partitions each consumer consumes one partition, and concurrency reaches its maximum of 4.

Now look at the following figure:

Example 2

As shown in the figure above, different consumer groups consume the same topic. The topic has 4 partitions distributed across two nodes. Consumer group 1 on the left has two consumers, so each consumer must consume two partitions for the group to read all messages. Consumer group 2 on the right has four consumers, and each consumer consumes one partition.
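Both examples can be reproduced with a small assignment function. This is a simplified sketch in the spirit of Kafka's range-style assignment (sort the consumers, give each a contiguous run of partitions, hand leftovers to the first ones); the function `assign` is hypothetical and ignores details such as multiple topics and rebalancing.

```python
def assign(partitions, consumers):
    """Range-style assignment of one topic's partitions within one group."""
    consumers = sorted(consumers)
    per_consumer, extra = divmod(len(partitions), len(consumers))
    result, start = {}, 0
    for i, consumer in enumerate(consumers):
        # The first `extra` consumers take one additional partition each.
        count = per_consumer + (1 if i < extra else 0)
        result[consumer] = partitions[start:start + count]
        start += count
    return result


# Example 1 (left): 2 partitions, 4 consumers -> two consumers stay idle.
print(assign([0, 1], ["c1", "c2", "c3", "c4"]))
# {'c1': [0], 'c2': [1], 'c3': [], 'c4': []}

# Example 2: each group independently covers all 4 partitions.
topic = [0, 1, 2, 3]
print(assign(topic, ["g1-a", "g1-b"]))
# {'g1-a': [0, 1], 'g1-b': [2, 3]}
print(assign(topic, ["g2-a", "g2-b", "g2-c", "g2-d"]))
# {'g2-a': [0], 'g2-b': [1], 'g2-c': [2], 'g2-d': [3]}
```

With 3 consumers on 4 partitions the split becomes uneven (2, 1, 1), which is one reason to choose a consumer count that divides the partition count.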

To summarize the relationship between partitions and consumer groups in Kafka:

Consumer group: consists of one or more consumers. Within the same group, each message is consumed only once.

The number of consumers in a consumer group that consumes a topic should be less than or equal to the number of partitions in that topic.

For example, if a topic has 4 partitions, the consumer group should have at most 4 consumers, ideally a number that divides the partition count evenly (1, 2, or 4). Data in the same partition cannot be consumed by different consumers of the same group at the same time.

Summary: the more partitions there are, the more consumers can consume at the same time, which speeds up consumption and improves consumption performance.

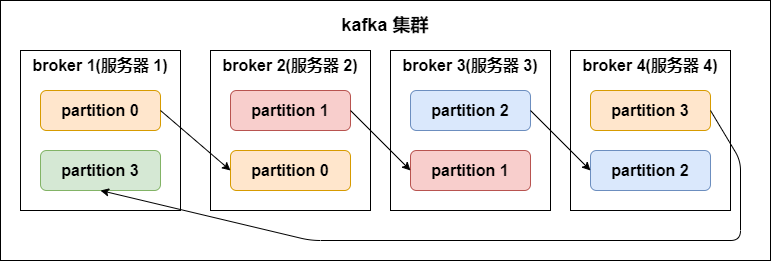

6. Partition replicas

Partition replicas in Kafka are shown in the following figure:

Kafka partition copy

Replication factor (replication-factor): controls how many brokers (servers) store a copy of each message. In small clusters it is often set equal to the number of brokers.

A single broker cannot hold more than one replica of the same partition, so when creating a topic the replication factor must be less than or equal to the number of available brokers.

Replication is applied per partition: each partition has its own primary (master) replica and secondary (slave) replicas;

The primary replica is called the leader and the secondary replicas are called followers (with multiple replicas, Kafka assigns roles among the replicas of each partition: one leader and N followers). The replicas that are in sync with the leader are called in-sync replicas (ISR);

Followers replicate data from the leader by pulling it.

By default, producers and consumers write to and read from the leader only and do not interact with the followers (since Kafka 2.4, consumers can optionally fetch from a nearby follower).

The purpose of the replication factor: to make reading and writing data in Kafka reliable.

The replication factor counts the leader itself, and two replicas of the same partition cannot be placed on the same broker.

If a partition has three replicas and one of them fails, a leader is chosen only among the remaining two (if the failed replica was the leader); Kafka does not start a replacement replica on another broker. Starting one elsewhere would require transferring data between machines, and such transfers occupy network I/O for a long time. Because Kafka is a high-throughput messaging system, it avoids this, so no new replica is started on another broker.

If all replicas of a partition are down, writes from the producer to that partition fail.

ISR means: the set of currently available (in-sync) replicas.
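The placement rules above (at most one replica of a partition per broker, so the replication factor cannot exceed the broker count) and leader failover can be sketched as below. This is a simplified round-robin model, not Kafka's exact placement or election algorithm; `place_replicas` and `elect_leader` are hypothetical helpers. Kafka itself likewise refuses to create a topic whose replication factor exceeds the number of available brokers.

```python
def place_replicas(num_partitions, replication_factor, brokers):
    """Round-robin placement; the first broker in each list acts as leader."""
    if replication_factor > len(brokers):
        raise ValueError(
            f"replication factor {replication_factor} > {len(brokers)} available brokers")
    placement = {}
    for p in range(num_partitions):
        # Consecutive brokers from a rotating start: replicas of one
        # partition always land on distinct brokers.
        placement[p] = [brokers[(p + i) % len(brokers)]
                        for i in range(replication_factor)]
    return placement


def elect_leader(isr, failed):
    """Pick the first surviving in-sync replica; None means no leader."""
    survivors = [b for b in isr if b not in failed]
    return survivors[0] if survivors else None


layout = place_replicas(3, 3, ["b0", "b1", "b2"])
print(layout)  # {0: ['b0', 'b1', 'b2'], 1: ['b1', 'b2', 'b0'], 2: ['b2', 'b0', 'b1']}
print(elect_leader(layout[0], failed={"b0"}))              # b1
print(elect_leader(layout[0], failed={"b0", "b1", "b2"}))  # None: writes fail
```

Note that after `b0` fails, partition 0 keeps running with two replicas; the model, like Kafka, does not create a third one elsewhere.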

7. Segment files

A partition is composed of multiple segment files. Each segment has two parts: a .log file and a .index file. The .log file stores the data we send, and the .index file records index entries for the .log file, which speed up data lookups.

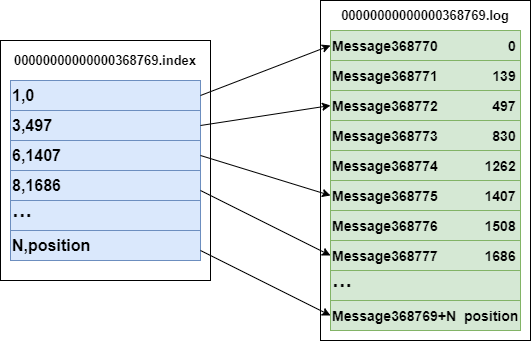

The relationship between index files and data files

Index and data files come in one-to-one pairs, so they are related: the metadata in the index file points to the physical offset address of the message in the corresponding data file.

For example, the entry 3,497 in the index file means that the third message in the data file starts at offset address 497.

In the data file, Message 368772 means that it is the 368772nd message in the whole partition.

Note: the segment index file uses sparse index storage to reduce its size, and it can be operated on directly in memory through mmap (memory mapping). A sparse index stores a metadata pointer for only some of the messages in the data file rather than for every one. This saves much more storage space than a dense index, but lookups take slightly longer.

The correspondence between .index and .log is as follows:

.index and .log

The left half of the figure above is the index file, which stores key-value pairs. The key is the number of the message in the data file (the corresponding log file), such as "1, 3, 6, 8...", meaning the first, third, sixth, and eighth messages in the log file...

So why are these numbers not consecutive in the index file?

This is because the index file does not create an entry for every message in the data file. Instead, it uses sparse storage and adds an entry each time a certain number of bytes has been written (controlled by the broker setting `log.index.interval.bytes`, 4096 bytes by default).

This keeps the index file small enough to be held in memory.

The drawback is that a message without an index entry cannot be located in the data file in a single step; a sequential scan is required, but the range of this scan is very small.

The value is the physical position of the message in the data file.

Take the index entry 3,497 as an example: 3 refers to the third message from the top in the log data file on the right, and 497 is the physical offset address (position) of that message in the file. Because Kafka writes sequentially, these positions only increase through the file.
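The lookup described above (binary search over a sparse index, then a short sequential scan) can be sketched as follows. This is a simplified model, assuming messages are numbered from 1 as in the "3,497" example and that an entry is added every `index_interval_bytes` bytes; `build_segment` and `lookup` are hypothetical helpers, and a real segment would scan bytes on disk rather than a Python list.

```python
import bisect


def build_segment(sizes, index_interval_bytes):
    """Lay messages out sequentially; index only some of them.

    Returns (log, index), both lists of (message_number, position).
    """
    log, index = [], []
    position = 0
    bytes_since_entry = index_interval_bytes  # force an entry for message 1
    for number, size in enumerate(sizes, start=1):
        if bytes_since_entry >= index_interval_bytes:
            index.append((number, position))
            bytes_since_entry = 0
        log.append((number, position))
        position += size
        bytes_since_entry += size
    return log, index


def lookup(log, index, target):
    """Return (position, messages_scanned) for message number `target`."""
    keys = [number for number, _ in index]
    i = bisect.bisect_right(keys, target) - 1  # last index entry <= target
    if i < 0:
        raise KeyError(target)
    start_number, _ = index[i]
    scanned = 0
    # Message n sits at list index n - 1; scan forward from the entry.
    for number, position in log[start_number - 1:]:
        if number == target:
            return position, scanned
        scanned += 1
    raise KeyError(target)


log, index = build_segment([100] * 20, index_interval_bytes=300)
print(index[:3])              # [(1, 0), (4, 300), (7, 600)]
print(lookup(log, index, 6))  # (500, 2): found after scanning 2 messages
```

Only 7 of the 20 messages get index entries here, yet no lookup scans more than two messages past its entry.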

The log directory and its components

Kafka creates folders in the log directory we specify (`log.dir`); each folder is named (topic name)-(partition number). Each such directory contains two files, as shown below:

#index file

00000000000000000000.index

#log content

00000000000000000000.log

Files in the directory are split according to log size: when a .log file reaches 1 GB (the default value of `log.segment.bytes`), a new segment is started, as follows:

-rw-r--r--. 1 root root 389k Jan 17 18:03 00000000000000000000.index

-rw-r--r--. 1 root root 1.0G Jan 17 18:03 00000000000000000000.log

-rw-r--r--. 1 root root 10M Jan 17 18:03 00000000000000077894.index

-rw-r--r--. 1 root root 127M Jan 17 18:03 00000000000000077894.log

In the design of Kafka, the offset value is used as part of the file name.

Segment file naming rules: the first segment of a partition starts at 0, and each subsequent segment file is named after the offset of its first message, which is one more than the largest offset in the previous segment. Offsets are 64-bit longs, and file names are 20 decimal digits, left-padded with zeros.
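The rolling and naming rules can be sketched in a few lines. This is a simplified model, assuming a segment rolls when the next message would push it past `segment_bytes` (a stand-in for `log.segment.bytes`); `roll_segments`, `segment_name`, and `find_segment` are hypothetical helpers. Because segment names are sorted base offsets, finding the segment that holds a given offset is a binary search.

```python
import bisect


def segment_name(base_offset, suffix):
    """Zero-pad the base offset to 20 digits, e.g. 00000000000000077894.log."""
    return f"{base_offset:020d}.{suffix}"


def roll_segments(message_sizes, segment_bytes):
    """Return the base offset (first message offset) of each segment."""
    bases, current = [0], 0
    for offset, size in enumerate(message_sizes):
        if current > 0 and current + size > segment_bytes:
            bases.append(offset)  # this message opens a new segment
            current = 0
        current += size
    return bases


def find_segment(bases, offset):
    """The segment holding `offset` has the largest base offset <= offset."""
    return bases[bisect.bisect_right(bases, offset) - 1]


bases = roll_segments([10] * 25, segment_bytes=100)
print(bases)                                            # [0, 10, 20]
print(segment_name(find_segment(bases, 17), "index"))   # 00000000000000000010.index
print(segment_name(77894, "log"))                       # 00000000000000077894.log
```

The last line reproduces the second file name from the directory listing above.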

Messages can be located quickly using the index information. Because the index metadata is mapped into memory, lookups avoid disk I/O on the segment's index files;

Storing the index sparsely greatly reduces the space occupied by index metadata.

Sparse index: index entries are not created for every record, but only at certain intervals.

Advantage: fewer index entries.

Disadvantage: after the index range is found, a second step (a short scan) is needed.

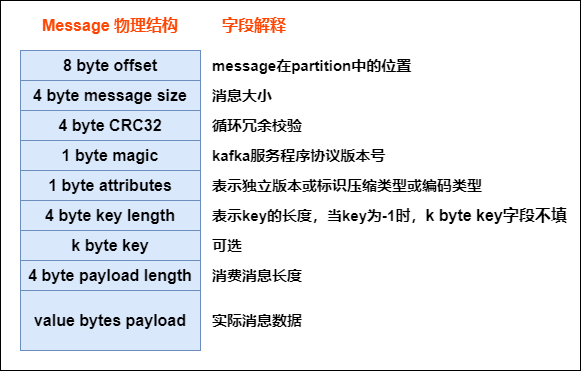

8. The physical structure of the message

Kafka wraps every message that the producer sends into its own message structure.

The physical structure of a message is shown in the figure below:


Therefore, messages sent by the producer are not stored as-is; Kafka packages them first. Each message has the structure shown in the figure above, and only the last field holds the actual data sent by the producer.
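Since the figure itself is not reproduced here, the sketch below assumes it depicts Kafka's legacy v0 message format: an 8-byte offset and 4-byte size, then a CRC32, a magic byte, an attributes byte, a length-prefixed key, and finally the length-prefixed value (the producer's payload). Newer versions added a timestamp (v1) and later switched to record batches (v2), so treat this as an illustration of the wrapping idea, not the current on-disk format. `encode_v0` and `decode_v0` are hypothetical helpers.

```python
import struct
import zlib


def _length_prefixed(data):
    # A null field is encoded as length -1 with no bytes.
    if data is None:
        return struct.pack(">i", -1)
    return struct.pack(">i", len(data)) + data


def encode_v0(offset, key, value):
    """offset(int64) size(int32) | crc(int32) magic(int8) attributes(int8) key value"""
    body = struct.pack(">bb", 0, 0) + _length_prefixed(key) + _length_prefixed(value)
    crc = zlib.crc32(body) & 0xFFFFFFFF  # CRC covers everything after the CRC field
    message = struct.pack(">I", crc) + body
    return struct.pack(">qi", offset, len(message)) + message


def decode_v0(buf):
    offset, size = struct.unpack_from(">qi", buf, 0)
    crc, _magic, _attributes = struct.unpack_from(">Ibb", buf, 12)
    if zlib.crc32(buf[16:12 + size]) & 0xFFFFFFFF != crc:
        raise ValueError("corrupt message: CRC mismatch")
    pos, fields = 18, []
    for _ in range(2):  # key first, then value
        (length,) = struct.unpack_from(">i", buf, pos)
        pos += 4
        if length < 0:
            fields.append(None)
        else:
            fields.append(buf[pos:pos + length])
            pos += length
    return offset, fields[0], fields[1]


entry = encode_v0(368772, b"user-1", b"hello")
print(len(entry))        # 37: 12 header + 6 fixed + (4 + 6) key + (4 + 5) value
print(decode_v0(entry))  # (368772, b'user-1', b'hello')
```

The CRC lets a reader detect corrupted messages; flipping any byte after the CRC field makes `decode_v0` raise.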

How Kafka prevents data loss

1. No data loss on the producer side

Sending methods

The producer can send data to Kafka synchronously or asynchronously.

Synchronous sending:

After sending a batch of data to Kafka, the producer waits for Kafka to return the result, for example:

- The producer waits up to 10 seconds; if the broker does not return an ack, the send is considered failed.

- The producer retries 3 times; if there is still no response, it reports an error.
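The synchronous behavior above (block for an ack, retry, then report an error) can be sketched as a loop. This is a hypothetical model: `send_once` stands for whatever transport call delivers a batch and either returns `True` on ack or raises `TimeoutError`; it is not a Kafka API. In the Java client, blocking on the `Future` returned by `KafkaProducer.send` behaves similarly, with `retries` and `request.timeout.ms` as related settings.

```python
class DeliveryError(Exception):
    pass


def send_sync(send_once, batch, timeout_s=10.0, retries=3):
    """Block until `batch` is acked; one attempt plus `retries` retries."""
    attempts = 0
    while True:
        attempts += 1
        try:
            if send_once(batch, timeout_s):
                return attempts          # acked: report how many tries it took
        except TimeoutError:
            pass                         # no ack within timeout_s
        if attempts > retries:
            raise DeliveryError(f"no ack after {attempts} attempts")


def make_flaky_broker(failures):
    """Hypothetical broker that times out `failures` times, then acks."""
    state = {"calls": 0}

    def send_once(batch, timeout_s):
        state["calls"] += 1
        if state["calls"] <= failures:
            raise TimeoutError(f"no ack within {timeout_s}s")
        return True

    return send_once


print(send_sync(make_flaky_broker(2), ["m1", "m2"]))  # 3 (acked on the third attempt)
```

The caller blocks for the whole retry cycle, which is the price of knowing the outcome before moving on.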

Asynchronous sending:

The producer sends a batch of data to Kafka and provides a callback function, for example:

- Data is first saved in a buffer on the producer side, with a capacity of 20,000 messages.

- Data is sent as soon as either the data-volume threshold or the message-count threshold is reached.

- Each batch sent contains 500 messages.

Note: if the broker does not return an ack for a long time and the buffer fills up, the developer can configure whether the buffered data is discarded directly.
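The asynchronous scheme can be sketched as a small buffered sender, using the example numbers above (a 20,000-message buffer and 500-message batches). This is a hypothetical model: `AsyncBatcher`, its `transport` argument, and `drop_when_full` are illustrative names, only the count threshold is modeled (a timer or size threshold would call `flush()` the same way), and a batch that is not acked stays in the buffer. The modern Java producer expresses related ideas through `buffer.memory`, `batch.size`, and `linger.ms`.

```python
class AsyncBatcher:
    """Hypothetical async sender: buffer, send in batches, report via callback."""

    def __init__(self, transport, buffer_capacity=20_000, batch_size=500,
                 drop_when_full=False):
        self.transport = transport        # called with a list of messages; True = acked
        self.buffer = []                  # (message, callback) pairs awaiting an ack
        self.buffer_capacity = buffer_capacity
        self.batch_size = batch_size
        self.drop_when_full = drop_when_full
        self.dropped = 0

    def send(self, message, callback):
        if len(self.buffer) >= self.buffer_capacity:
            if self.drop_when_full:       # developer chose to discard new data
                self.dropped += 1
                return
            raise BufferError("producer buffer is full")
        self.buffer.append((message, callback))
        if len(self.buffer) >= self.batch_size:  # count threshold reached
            self.flush()

    def flush(self):
        while self.buffer:
            batch = self.buffer[:self.batch_size]
            if not self.transport([m for m, _ in batch]):
                return                    # no ack: keep the data buffered
            del self.buffer[:self.batch_size]
            for message, callback in batch:
                callback(message)         # notify the caller asynchronously


sent_batches, acked = [], []

def transport(batch):
    sent_batches.append(len(batch))
    return True                           # this broker acks every batch

producer = AsyncBatcher(transport)
for i in range(1200):
    producer.send(f"msg-{i}", callback=acked.append)
producer.flush()                          # e.g. triggered by a timer
print(sent_batches)                       # [500, 500, 200]
print(len(acked))                         # 1200
```

With a broker that never acks, the buffer fills up and `drop_when_full` decides between discarding new messages and raising `BufferError`.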

The ack mechanism (acknowledgment mechanism)

When the producer sends data, the broker returns an acknowledgment, the ack. The producer's `acks` setting has three values: 0, 1, and -1 (also written as `all`).

0: the producer only sends the data and does not wait for an ack, so lost data is neither detected nor resent automatically.

1: the producer receives an ack as soon as the partition leader has written the data, regardless of whether the followers have finished replicating it.

-1: the producer receives an ack only after all in-sync replicas (ISR) have received the data.

If the broker does not return an ack, the producer never knows whether the send succeeded. The producer can therefore set a timeout, such as 10 s, after which the send is considered failed.
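The practical difference between the three `acks` values shows up when the leader crashes right after a write. The sketch below is a deliberately small model, assuming two followers in the ISR and that a new leader is elected from those followers; it ignores settings such as `min.insync.replicas` and `unclean.leader.election.enable`. `write_then_leader_crash` is a hypothetical helper.

```python
ISR_FOLLOWERS = 2  # followers in the in-sync replica set (assumed)


def write_then_leader_crash(acks, followers_with_copy):
    """Model one write followed by a leader crash.

    followers_with_copy: how many ISR followers had fetched the message
    before the crash. Returns (producer_got_ack, message_survived).
    """
    if acks == 0:
        got_ack = False                   # producer never waits for an ack
    elif acks == 1:
        got_ack = True                    # leader wrote it locally
    elif acks in (-1, "all"):
        got_ack = followers_with_copy == ISR_FOLLOWERS  # every ISR replica has it
    else:
        raise ValueError(f"unsupported acks value: {acks!r}")
    # The new leader is a former follower, so the message survives only
    # if at least one follower had copied it.
    survived = followers_with_copy > 0
    return got_ack, survived


for acks in (0, 1, -1):
    for copies in (0, ISR_FOLLOWERS):
        print(acks, copies, write_then_leader_crash(acks, copies))
# 0 0 (False, False)
# 0 2 (False, True)
# 1 0 (True, False)    <- acknowledged, then lost
# 1 2 (True, True)
# -1 0 (False, False)  <- not acknowledged, so the producer can resend
# -1 2 (True, True)
```

Under this model, only `acks=-1` guarantees that every acknowledged message survives the leader's failure.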

2. No data loss in the broker

On the broker side, data loss is prevented mainly through the replication factor (redundancy).

3. No data loss on the consumer side

When consuming data, as long as each consumer records its offset, no data is lost. In other words, we maintain the offsets ourselves and can store them in an external store such as Redis. (Kafka can also store committed offsets itself, in the internal `__consumer_offsets` topic.)
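The offset-tracking idea can be sketched as a consume loop that saves the next offset only after a record is processed. This is a hypothetical model: a Python dict inside `OffsetStore` stands in for Redis (a real deployment would call a Redis client instead), and `crash_at` simulates a crash after processing a record but before saving its offset.

```python
class OffsetStore:
    """Stand-in for an external store such as Redis (a plain dict here)."""

    def __init__(self):
        self._data = {}

    def get(self, group, partition):
        return self._data.get((group, partition), 0)  # start at 0 if unseen

    def set(self, group, partition, offset):
        self._data[(group, partition)] = offset


def consume(records, store, group, partition, process, crash_at=None):
    """Process records from the saved offset, saving progress after each one."""
    offset = store.get(group, partition)     # resume where we left off
    while offset < len(records):
        process(records[offset])
        if offset == crash_at:               # simulated crash before saving
            raise RuntimeError(f"crashed after processing offset {offset}")
        offset += 1
        store.set(group, partition, offset)  # save the next offset to read


records = ["r0", "r1", "r2", "r3"]
store, seen = OffsetStore(), []
try:
    consume(records, store, "g1", 0, seen.append, crash_at=2)
except RuntimeError:
    pass                                     # consumer restarts
consume(records, store, "g1", 0, seen.append)
print(seen)                                  # ['r0', 'r1', 'r2', 'r2', 'r3']
```

Saving the offset after processing means a crash can cause a record to be processed twice (r2 above) but never skipped, so no data is lost.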