Background

Recently my team lead asked me to test some features in the SIT environment. They turned out to be much slower than before and needed to be optimized.

Troubleshooting process

Open the browser developer tools (F12), check the response time of the relevant interfaces, and see which one is slow. By the usual rule of thumb for web pages, if a page has not rendered within 3 seconds the user experience is very poor.

Next, check the commit history of the relevant code. This code had not been changed since it went live. Based on my three years of experience, the slowdown was probably caused by poor design of the database tables behind these interfaces, or by tables that needed maintenance. Hence this article!

Database single table optimization steps

When designing the table:

1. Select appropriate field types

2. Create high-performance indexes

During operation and maintenance:

1. Optimize slow SQL

2. Tune MySQL parameters

3. Optimize data fragmentation and index fragmentation

I have done some database optimization before. The approach used this time is data fragmentation and index fragmentation optimization.

Q: Why do data fragmentation and index fragmentation occur?

A: When a row is deleted, it is only marked as deleted in place, and the table space it occupied is not released. Over time this leaves data fragments and index fragments that take up server storage and reduce index efficiency.
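The effect can be reproduced on a scratch table. The following is a minimal sketch, assuming an InnoDB table with its own tablespace file; the table name frag_demo and its columns are hypothetical, and the exact numbers reported will vary by server version and settings.

```sql
-- Hypothetical scratch table, used only for this demonstration.
CREATE TABLE frag_demo (
    id      INT AUTO_INCREMENT PRIMARY KEY,
    payload VARCHAR(255) NOT NULL
) ENGINE = InnoDB;

-- Seed one row, then double the row count; rerun the second
-- statement about 15 times to get a table of a few megabytes.
INSERT INTO frag_demo (payload) VALUES (REPEAT('x', 255));
INSERT INTO frag_demo (payload) SELECT payload FROM frag_demo;

-- Delete half of the rows. The rows are gone, but the space
-- they occupied stays allocated to the table.
DELETE FROM frag_demo WHERE id % 2 = 0;

-- Refresh statistics, then look at the allocated-but-unused space.
ANALYZE TABLE frag_demo;

SELECT table_name, data_length, data_free
FROM information_schema.tables
WHERE table_schema = DATABASE()
  AND table_name = 'frag_demo';
```

Comparing data_free before and after the DELETE should show it growing while the table file on disk stays the same size.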

MySQL storage engines:

MyISAM engine: data is stored in the .MYD file, and indexes are stored in the .MYI file

InnoDB engine: the .frm file stores the table structure (MySQL 5.7 and earlier), and the .ibd file stores the indexes and data
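Before choosing a cleanup method it helps to confirm which engine each table uses. A small sketch, assuming the schema is named ide as in the rest of this article:

```sql
-- Storage engine of every table in the ide schema.
SELECT table_name, engine
FROM information_schema.tables
WHERE table_schema = 'ide'
  AND table_type = 'BASE TABLE'
ORDER BY table_name;

-- ON means each InnoDB table gets its own .ibd file, so rebuilding
-- a table can return space to the operating system.
SHOW VARIABLES LIKE 'innodb_file_per_table';
```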

Data fragmentation optimization steps

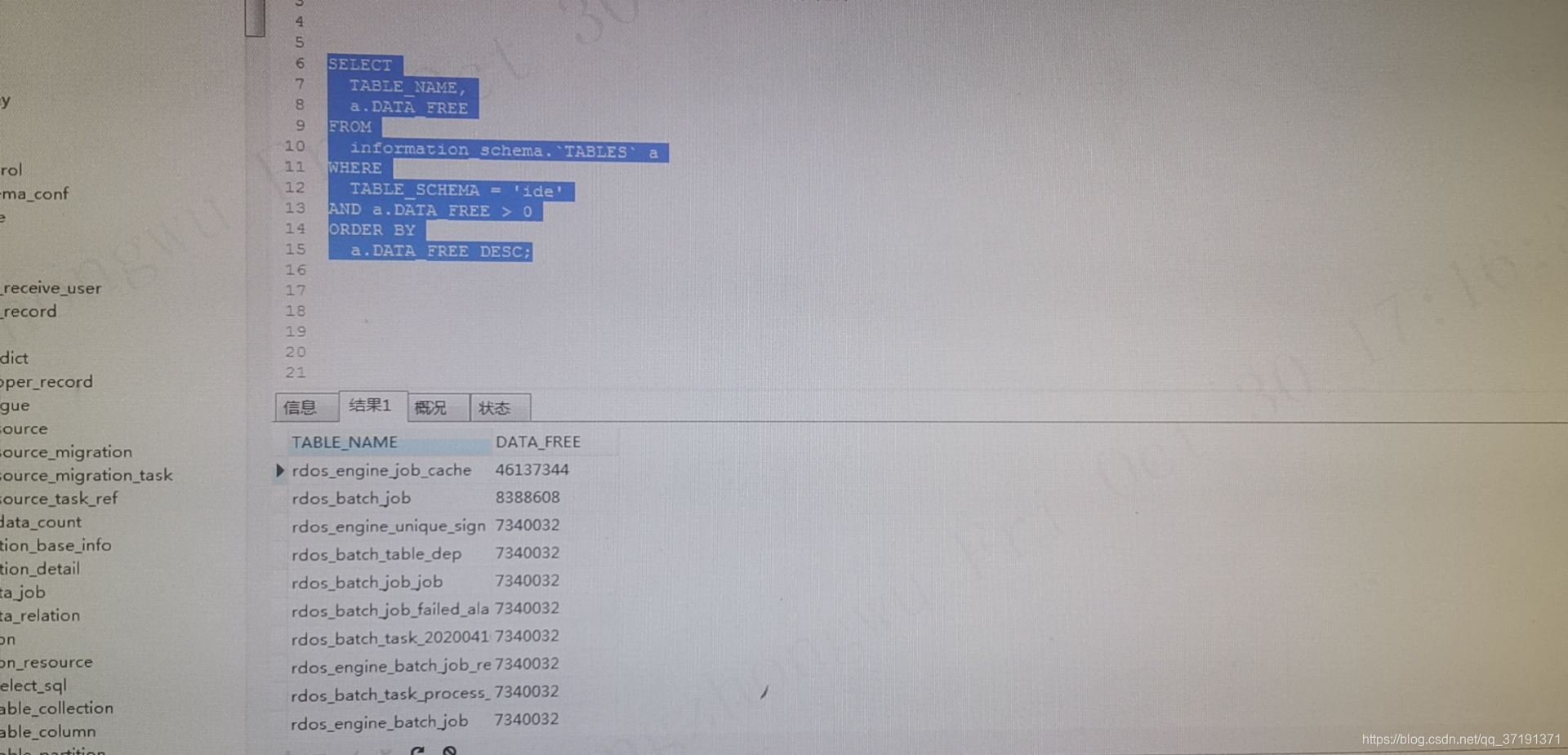

To find the tables in the ide database that need optimizing, run:

select table_name,data_free from information_schema.tables where table_schema='ide' order by data_free desc;

This shows the fragmented (free) space of each table, as shown in the figure:

We can see that the rdos_engine_job_cache table has the most data fragmentation, so it is the one to clean up.
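A slightly richer version of the same query makes the worst offenders easier to spot. This is a sketch rather than the exact query used above: the column aliases and the LIMIT of 20 are arbitrary choices, and for InnoDB tables that live in a shared tablespace data_free reports the free space of the whole tablespace, not of one table.

```sql
-- Size and free space per table in megabytes, plus the share of
-- allocated space that is currently unused.
SELECT table_name,
       engine,
       ROUND(data_length  / 1024 / 1024, 2) AS data_mb,
       ROUND(index_length / 1024 / 1024, 2) AS index_mb,
       ROUND(data_free    / 1024 / 1024, 2) AS free_mb,
       ROUND(data_free
             / NULLIF(data_length + index_length + data_free, 0)
             * 100, 1)                      AS free_pct
FROM information_schema.tables
WHERE table_schema = 'ide'
  AND table_type = 'BASE TABLE'
ORDER BY data_free DESC
LIMIT 20;
```

Sorting by data_free keeps the focus on absolute space wasted; sorting by free_pct instead would surface small tables that are mostly empty.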

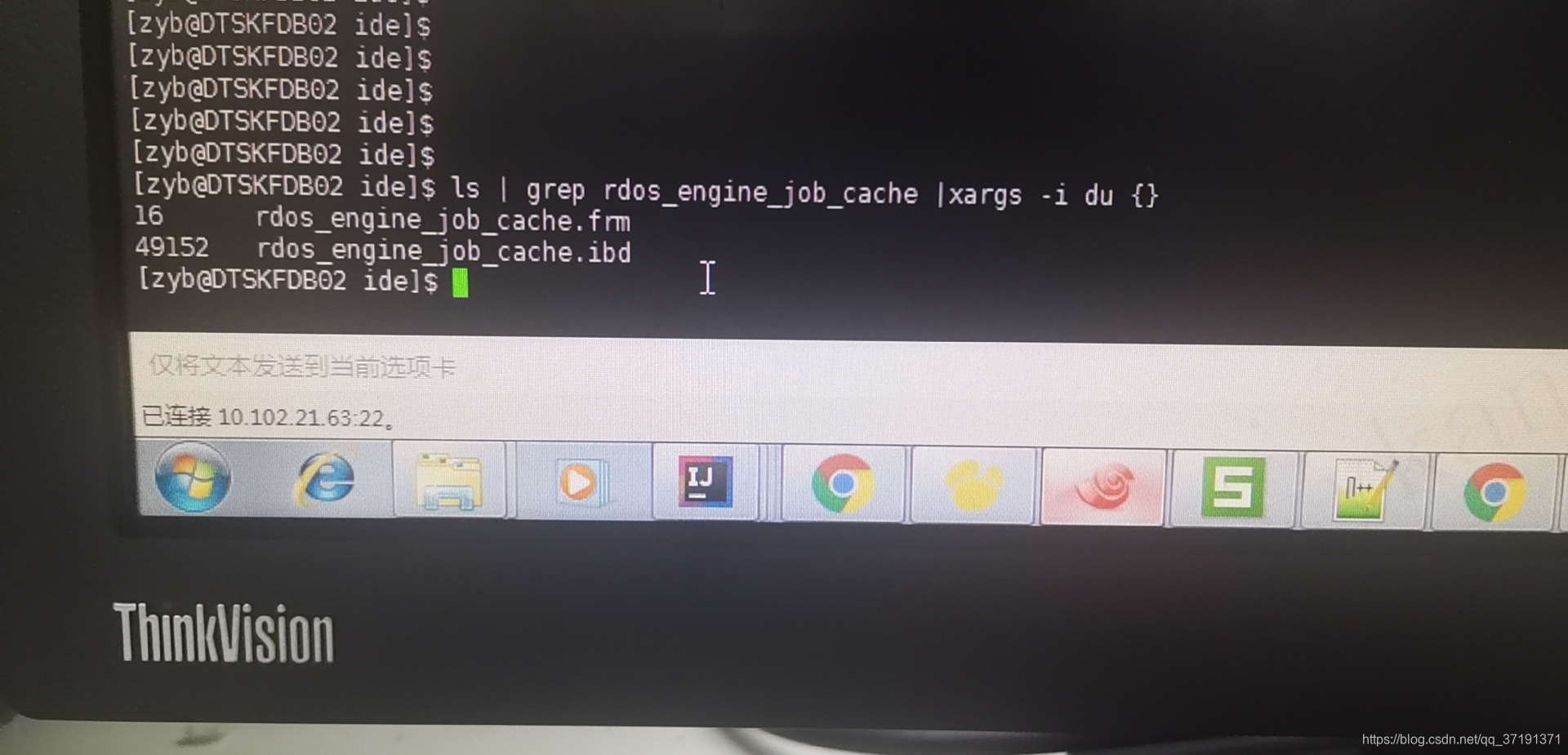

Before cleaning, check the size of the files belonging to the table. The data and index files total 49452, as shown in the figure:

You can also query this with SQL: the space taken by the data is the sum of data_length and data_free from the query result above.

Index information: show index from rdos_engine_job_cache; (the index size itself is the index_length column of information_schema.tables)

Cardinality: an estimate of the number of unique values in the index. It can be updated by running ANALYZE TABLE or, for MyISAM tables, myisamchk -a. Cardinality is counted from statistics stored as integers, so the value is not necessarily exact even for small tables. The higher the cardinality, the greater the chance that MySQL uses the index when performing joins.

You can look up the meaning of the other columns online.
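For a per-index breakdown on InnoDB, the persistent statistics table can be queried. This is a sketch under two assumptions: persistent statistics are enabled, and the account has SELECT privilege on the mysql schema. The figures are estimates from the last statistics refresh, not live file sizes.

```sql
-- Data, index and free space of one table, in bytes.
SELECT data_length, index_length, data_free
FROM information_schema.tables
WHERE table_schema = 'ide'
  AND table_name = 'rdos_engine_job_cache';

-- Estimated size of each index: the 'size' statistic is a page
-- count, so multiply by the InnoDB page size to get bytes.
SELECT index_name,
       ROUND(stat_value * @@innodb_page_size / 1024 / 1024, 2) AS size_mb
FROM mysql.innodb_index_stats
WHERE database_name = 'ide'
  AND table_name = 'rdos_engine_job_cache'
  AND stat_name = 'size';
```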

Note: MySQL locks the table while OPTIMIZE TABLE runs. For InnoDB tables on MySQL 5.6.17 and later the rebuild uses online DDL and only takes brief exclusive locks, but it is still a heavy operation.

For MyISAM, you can run optimize table table_name directly. On an InnoDB table the same statement returns the note "Table does not support optimize, doing recreate + analyze instead", which means MySQL rebuilds the table and then analyzes it. alter table table_name engine='innodb' is normally used to convert a table from MyISAM to InnoDB, but running it on a table that is already InnoDB also rebuilds the table. So for the InnoDB engine, we can use alter table table_name engine='innodb' in place of optimize.

Our table uses the InnoDB engine, so we execute: alter table rdos_engine_job_cache engine='innodb'; as shown in the figure:

The figure compares the table before and after cleaning: a lot of table space has indeed been released.
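Put together, the cleanup for this table can be sketched as the sequence below. The statements are standard MySQL; running ANALYZE TABLE afterwards is an extra step assumed here so that the size columns reflect the rebuilt table.

```sql
-- Rebuild the table in place. For a table that is already InnoDB
-- this copies the live rows into a fresh tablespace file.
ALTER TABLE rdos_engine_job_cache ENGINE = InnoDB;

-- Alternative with the same effect on InnoDB (recreate + analyze):
-- OPTIMIZE TABLE rdos_engine_job_cache;

-- Refresh statistics, then check how much space was released.
ANALYZE TABLE rdos_engine_job_cache;

SELECT data_length, index_length, data_free
FROM information_schema.tables
WHERE table_schema = 'ide'
  AND table_name = 'rdos_engine_job_cache';
```

The rebuild temporarily needs enough free disk space for a second copy of the table, so it is worth checking available space first on large tables.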

Index fragmentation optimization steps

Analyze Table

When optimizing an SQL statement, MySQL's optimizer first needs to collect some statistics, including the cardinality of each index, which indicates how many distinct values the indexed column holds. If the recorded cardinality is far lower than the real number of distinct values in the data, the optimizer may effectively stop using the index.

We can use the SHOW INDEX statement to view the cardinality of an index:

SHOW INDEX FROM PLAYERS;

TABLE KEY_NAME COLUMN_NAME CARDINALITY

------- -------- ------------ ----------

PLAYERS PRIMARY PLAYERNO 14

Because the number of distinct PLAYERNO values in the PLAYERS table is far more than 14, the index is effectively not being used.

Below we use the ANALYZE TABLE statement to refresh the index statistics:
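To judge whether the recorded cardinality is stale, it can be placed next to the real distinct count. A sketch using the PLAYERS example; the alias actual_distinct is made up for illustration, and the COUNT(DISTINCT ...) scans the index, so it may be slow on a large table.

```sql
-- Recorded cardinality versus the real number of distinct values.
SELECT s.index_name,
       s.column_name,
       s.cardinality,
       (SELECT COUNT(DISTINCT PLAYERNO) FROM PLAYERS) AS actual_distinct
FROM information_schema.statistics AS s
WHERE s.table_schema = DATABASE()
  AND s.table_name = 'PLAYERS'
  AND s.column_name = 'PLAYERNO';
```

A large gap between the two columns suggests the statistics need refreshing.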

ANALYZE TABLE PLAYERS;

SHOW INDEX FROM PLAYERS;

The result is:

TABLE KEY_NAME COLUMN_NAME CARDINALITY

------- -------- ------------ ----------

PLAYERS PRIMARY PLAYERNO 1000

The index statistics are now up to date, and query efficiency is greatly improved.

Note that if the binlog is enabled, the ANALYZE TABLE statement is also written to the binlog. To prevent this, add the keyword LOCAL between ANALYZE and TABLE.
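For the PLAYERS example, the two equivalent spellings look like this:

```sql
-- Refresh statistics without writing the statement to the binlog.
ANALYZE LOCAL TABLE PLAYERS;

-- NO_WRITE_TO_BINLOG is a synonym for LOCAL.
ANALYZE NO_WRITE_TO_BINLOG TABLE PLAYERS;
```

With the statement kept out of the binlog, replicas do not run it, so each replica needs its own ANALYZE TABLE if its statistics are stale too.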