Detailed process of configuring and deploying a multi-machine, Kafka-based Fabric network (docker mode).


The steps for building a multi-machine Fabric network with Kafka-based ordering in docker mode are as follows.

Distributed node planning:

node1: kafka1 zookeeper1 orderer1

node2: kafka2 zookeeper2 orderer2 peer1

node3: kafka3 zookeeper3 orderer3 peer2

node4: kafka4 peer3

Network IP configuration of each node (add these lines to /etc/hosts on every node, e.g. with vim /etc/hosts):

172.27.34.201 orderer1.trace.com zookeeper1 kafka1

172.27.34.202 orderer2.trace.com zookeeper2 kafka2 peer0.org1.trace.com org1.trace.com

172.27.34.203 orderer3.trace.com zookeeper3 kafka3 peer1.org2.trace.com org2.trace.com

172.27.34.204 kafka4 peer2.org3.trace.com org3.trace.com
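The same four lines must be present on every node. A minimal sketch for adding them, assuming a POSIX shell and write access to the hosts file (root for /etc/hosts); the function name add_hosts_entries is hypothetical, and lines that already exist are skipped so it can be re-run safely:

```shell
#!/bin/sh
# Hypothetical helper: append the cluster host entries to a hosts file.
# Entries that are already present (exact whole-line match) are skipped,
# so running it twice leaves the file unchanged.
add_hosts_entries() {
  hosts_file="${1:-/etc/hosts}"   # pass another path to try it on a copy
  while IFS= read -r entry; do
    [ -n "$entry" ] || continue
    # -q quiet, -x whole line, -F fixed string (dots are literal)
    if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done <<'EOF'
172.27.34.201 orderer1.trace.com zookeeper1 kafka1
172.27.34.202 orderer2.trace.com zookeeper2 kafka2 peer0.org1.trace.com org1.trace.com
172.27.34.203 orderer3.trace.com zookeeper3 kafka3 peer1.org2.trace.com org2.trace.com
172.27.34.204 kafka4 peer2.org3.trace.com org3.trace.com
EOF
}
```

On each node, source the file as root and call add_hosts_entries with no argument to update /etc/hosts.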

Environment requirements:

Fabric v1.1

Docker (17.06.2-ce or higher)

docker-compose (1.14.0 or higher)

Go (1.9.x or higher)
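Before continuing, it can help to check the installed versions against these minimums. A minimal sketch, assuming GNU sort (its -V version ordering is available on CentOS 7); version_ge and strip_suffix are hypothetical helper names:

```shell
#!/bin/sh
# Hypothetical helper: succeed when version $1 is greater than or equal to version $2.
# sort -V orders version strings numerically per component (1.9 < 1.12), so if the
# smaller of the two is the required version, the installed one is new enough.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Drop a suffix such as "-ce" before comparing, e.g. 17.06.2-ce -> 17.06.2.
strip_suffix() {
  printf '%s\n' "${1%%-*}"
}
```

For example, version_ge "$(strip_suffix "$(docker version --format '{{.Server.Version}}')")" 17.06.2 checks Docker, and version_ge "$(docker-compose version --short)" 1.14.0 checks docker-compose.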

Docker installation:

Remove any previously installed Docker packages:

sudo yum remove docker docker-common docker-selinux docker-engine

Install the required dependencies:

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Download and install the docker-ce 17.06.2 rpm package:

sudo yum install https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-17.06.2.ce-1.el7.centos.x86_64.rpm

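Update Go (remove the old installation, download Go 1.12 and unpack it into /usr/local):

rm -rf /usr/local/go/

wget https://dl.google.com/go/go1.12.linux-amd64.tar.gz

tar -C /usr/local -xzf go1.12.linux-amd64.tar.gz

export PATH=$PATH:/usr/local/go/bin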

Docker image download

docker pull registry.docker-cn.com/hyperledger/fabric-peer:x86_64-1.1.0

docker pull registry.docker-cn.com/hyperledger/fabric-orderer:x86_64-1.1.0

docker pull registry.docker-cn.com/hyperledger/fabric-tools:x86_64-1.1.0

docker pull hyperledger/fabric-couchdb

docker pull hyperledger/fabric-ca

docker pull hyperledger/fabric-ccenv

docker pull hyperledger/fabric-baseos

docker pull hyperledger/fabric-kafka

docker pull hyperledger/fabric-zookeeper

Re-tag the images as latest (the IDs are the IMAGE ID values reported by docker images):

docker tag b7bfddf508bc hyperledger/fabric-tools:latest

docker tag ce0c810df36a hyperledger/fabric-orderer:latest

docker tag b023f9be0771 hyperledger/fabric-peer:latest

Remove the now-redundant mirror tags:

docker rmi registry.docker-cn.com/hyperledger/fabric-tools:x86_64-1.1.0

docker rmi registry.docker-cn.com/hyperledger/fabric-orderer:x86_64-1.1.0

docker rmi registry.docker-cn.com/hyperledger/fabric-peer:x86_64-1.1.0
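The tag and cleanup steps can also be done by image name instead of image ID, which avoids looking up IDs with docker images first. A minimal sketch, assuming the same mirror and x86_64-1.1.0 tag as the pull commands above; pull_and_retag is a hypothetical function name:

```shell
#!/bin/sh
# Hypothetical helper: pull the three 1.1.0 images from the mirror, tag each one as
# hyperledger/<name>:latest by name, then drop the mirror tag.
MIRROR=registry.docker-cn.com/hyperledger
VERSION=x86_64-1.1.0

pull_and_retag() {
  for name in fabric-peer fabric-orderer fabric-tools; do
    src="$MIRROR/$name:$VERSION"
    docker pull "$src" || return 1
    docker tag "$src" "hyperledger/$name:latest" || return 1
    # The image still carries the :latest tag, so rmi only removes the mirror tag.
    docker rmi "$src" || return 1
  done
}
```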

Save the images and send them to the other nodes:

On node1:

mkdir -p /data/fabric-images-1.1.0-release

docker save 5b31d55f5f3a > /data/fabric-images-1.1.0-release/fabric-ccenv.tar

docker save 1a804ab74f58 > /data/fabric-images-1.1.0-release/fabric-ca.tar

docker save d36da0db87a4 > /data/fabric-images-1.1.0-release/fabric-zookeeper.tar

docker save a3b095201c66 > /data/fabric-images-1.1.0-release/fabric-kafka.tar

docker save f14f97292b4c > /data/fabric-images-1.1.0-release/fabric-couchdb.tar

docker save 75f5fb1a0e0c > /data/fabric-images-1.1.0-release/fabric-baseos.tar

docker save b7bfddf508bc > /data/fabric-images-1.1.0-release/fabric-tools.tar

docker save ce0c810df36a > /data/fabric-images-1.1.0-release/fabric-orderer.tar

docker save b023f9be0771 > /data/fabric-images-1.1.0-release/fabric-peer.tar

cd /data/fabric-images-1.1.0-release

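scp -r ./* <user>@<node-ip>:/tmp/docker/fabric-images/

(Run this once for each of node2, node3 and node4; <user> and <node-ip> are placeholders for the login user and the target node's IP address.)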
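The save step above uses image IDs. An archive written by docker save with an image ID carries no repository tag, which is why the images have to be tagged again after docker load on the other nodes. A minimal sketch that saves by name instead, assuming all nine images are tagged hyperledger/<name>:latest on node1 (as they are after the pull and tag steps above); save_images and send_images are hypothetical names:

```shell
#!/bin/sh
# Hypothetical helpers for node1: save every Fabric image by repository:tag, then copy
# the tar files to another node. DEST and the image list come from the steps above.
DEST=/data/fabric-images-1.1.0-release
IMAGES="fabric-ccenv fabric-ca fabric-zookeeper fabric-kafka fabric-couchdb fabric-baseos fabric-tools fabric-orderer fabric-peer"

save_images() {
  mkdir -p "$DEST" || return 1
  for name in $IMAGES; do
    # Saving by name records the tag in the archive, so docker load
    # restores hyperledger/<name>:latest without a separate docker tag step.
    docker save -o "$DEST/$name.tar" "hyperledger/$name:latest" || return 1
  done
}

send_images() {
  target=$1   # an scp target such as <user>@172.27.34.202
  ssh "$target" mkdir -p /tmp/docker/fabric-images || return 1
  scp "$DEST"/*.tar "$target:/tmp/docker/fabric-images/"
}
```

Run save_images once, then send_images for each of 172.27.34.202, 172.27.34.203 and 172.27.34.204, replacing <user> with the real login user.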

On node2, node3 and node4 (in /tmp/docker/fabric-images):

docker load < fabric-baseos.tar

docker tag 75f5fb1a0e0c hyperledger/fabric-baseos:latest

docker load < fabric-ca.tar

docker tag 1a804ab74f58 hyperledger/fabric-ca:latest

docker load < fabric-ccenv.tar
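Loading can be looped over every archive in the directory instead of being typed per file. A minimal sketch, assuming the tar files were copied to /tmp/docker/fabric-images; load_images is a hypothetical name. Archives saved by image ID still need the docker tag <ID> step afterwards:

```shell
#!/bin/sh
# Hypothetical helper: docker load every .tar archive found in a directory.
load_images() {
  dir="${1:-/tmp/docker/fabric-images}"
  for archive in "$dir"/*.tar; do
    [ -e "$archive" ] || continue   # no match: the pattern stays unexpanded, skip it
    echo "loading $archive"
    docker load -i "$archive" || return 1
  done
}
```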

node1:

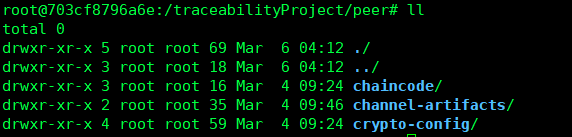

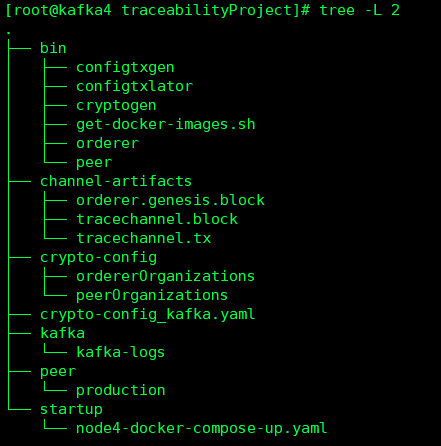

All of the following operations are performed under /traceabilityProject.

Download the Fabric binaries (v1.1); the commands below run them from ./bin:

1. Configuration file settings:

Generate the crypto material (certificates and keys) required by the nodes:

./bin/cryptogen generate --config=./crypto-config_kafka.yaml

2. Generate the founding block:

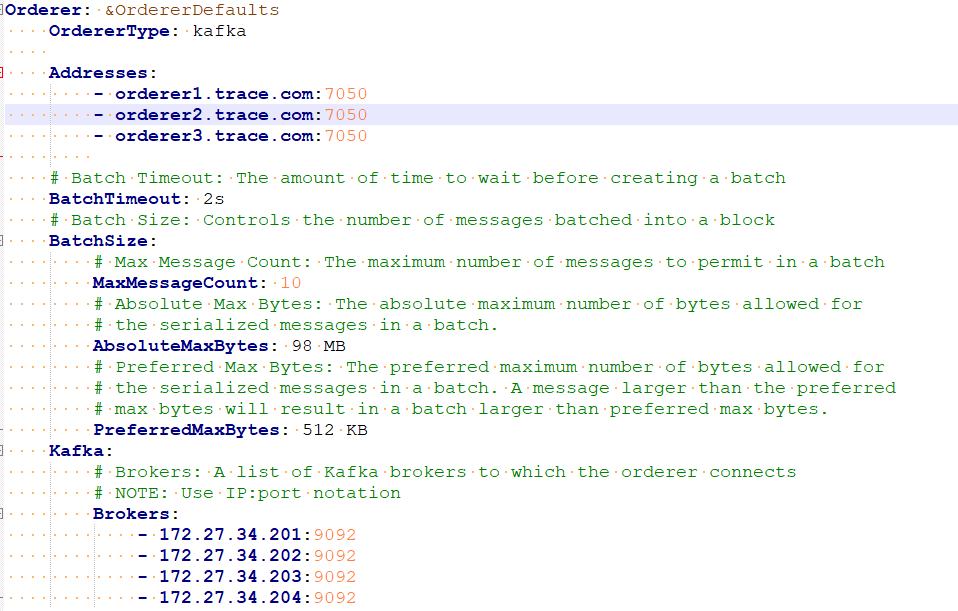

Change part (cluster address):

Put the file in /traceabilityProject, and then run it in this directory:

(Must be renamed to configtx.yaml file)

export FABRIC_CFG_PATH=$PWD

mkdir ./channel-artifacts

./bin/configtxgen -profile TestOrgsOrdererGenesis -outputBlock ./channel-artifacts/orderer.genesis.block

3. Generate the channel configuration transaction:

./bin/configtxgen -profile TestOrgsChannel -outputCreateChannelTx ./channel-artifacts/tracechannel.tx -channelID tracechannel
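Steps 2 and 3 run from the same directory and both depend on configtx.yaml being found through FABRIC_CFG_PATH, so they can be wrapped together with a check that fails early. A minimal sketch, assuming the binaries live in ./bin as above; gen_artifacts and the CONFIGTXGEN override are hypothetical:

```shell
#!/bin/sh
# Hypothetical wrapper for steps 2 and 3: generate the orderer genesis block and the
# channel creation transaction, failing early if configtx.yaml is not found.
CONFIGTXGEN=${CONFIGTXGEN:-./bin/configtxgen}
CHANNEL=tracechannel

gen_artifacts() {
  export FABRIC_CFG_PATH="$PWD"   # configtxgen reads configtx.yaml from this directory
  if [ ! -f "$FABRIC_CFG_PATH/configtx.yaml" ]; then
    echo "configtx.yaml not found in $FABRIC_CFG_PATH" >&2
    return 1
  fi
  mkdir -p ./channel-artifacts || return 1
  "$CONFIGTXGEN" -profile TestOrgsOrdererGenesis \
    -outputBlock ./channel-artifacts/orderer.genesis.block || return 1
  "$CONFIGTXGEN" -profile TestOrgsChannel \
    -outputCreateChannelTx "./channel-artifacts/$CHANNEL.tx" -channelID "$CHANNEL"
}
```

Run it from /traceabilityProject. To look at the results, configtxgen also accepts -inspectBlock and -inspectChannelCreateTx, e.g. ./bin/configtxgen -inspectBlock ./channel-artifacts/orderer.genesis.block.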

4. Start the environment and configure the files

Note: the network and its data disappear every time the containers are destroyed, which is unacceptable in a production environment, so the data must be persisted.

Components whose data must be persisted: Orderer, Peer, Kafka, ZooKeeper.

Add the following to the startup (docker-compose) files:

Orderer:

environment:
  - ORDERER_FILELEDGER_LOCATION=/traceabilityProject/orderer/fileLedger
volumes:
  - /traceabilityProject/orderer/fileLedger:/traceabilityProject/orderer/fileLedger

Peer:

environment:
  - CORE_PEER_FILESYSTEMPATH=/traceabilityProject/peer/production
volumes:
  - /traceabilityProject/peer/production:/traceabilityProject/peer/production

Kafka:

environment:
  # Kafka data must also be persisted
  - KAFKA_LOG.DIRS=/traceabilityProject/kafka/kafka-logs
# data mount path
volumes:
  - /traceabilityProject/kafka/kafka-logs:/traceabilityProject/kafka/kafka-logs

zookeeper:

volumes:
  # zookeeper stores its data in /data and /datalog by default
  - /traceabilityProject/zookeeper1/data:/data
  - /traceabilityProject/zookeeper1/datalog:/datalog

The complete configuration is shown in the following YAML file:

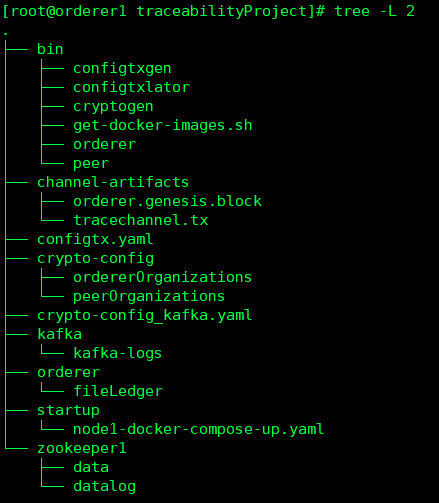

node1:

Create three folders, kafka, orderer and zookeeper, for the persistent data, then start the containers:

docker-compose -f ./node1-docker-compose-up.yaml up -d

To stop the containers:

docker-compose -f ./node1-docker-compose-up.yaml down

Note:

Copy the generated configuration files into the directories referenced in the volumes sections of the compose file (these directories must not be empty!).

(

The genesis block must be mounted as a file, not a folder. docker-compose kept creating a folder at that path (Docker does this when the host path does not exist), so change the mount manually to point at the genesis block file:

- ../channel-artifacts/orderer.genesis.block:/traceabilityProject/orderer.genesis.block

)

Copy the working directory /traceabilityProject to the other nodes (<user> is a placeholder for the login user):

scp -r /traceabilityProject/ <user>@172.27.34.202:/

scp -r /traceabilityProject/ <user>@172.27.34.203:/

scp -r /traceabilityProject/ <user>@172.27.34.204:/

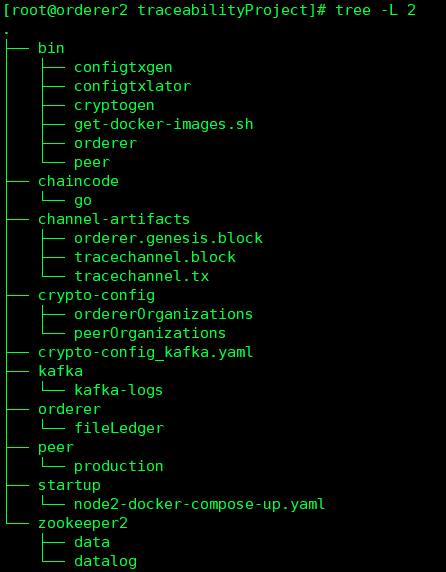

node2:

Create four folders, kafka, orderer, zookeeper and peer, for the persistent data, then start the containers:

docker-compose -f ./node2-docker-compose-up.yaml up -d

To stop the containers:

docker-compose -f ./node2-docker-compose-up.yaml down --remove-orphans

node2-docker-compose-up.yaml

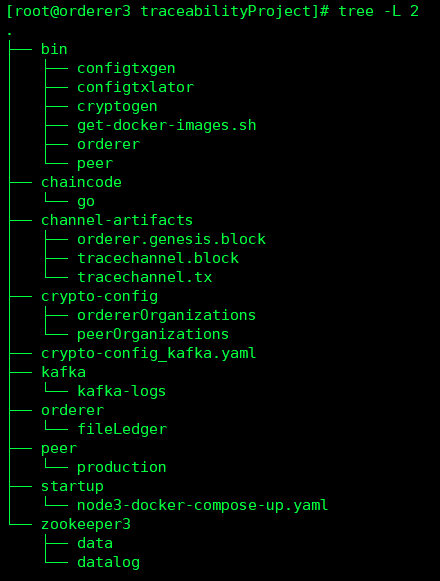

node3:

Create four folders, kafka, orderer, peer and zookeeper, for the persistent data (node3 also runs zookeeper3).

node4:

Create two folders, kafka and peer, for the persistent data.
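The folder setup above differs per node only in the list of names, so it can be captured in one function. A minimal sketch, assuming the folders live directly under /traceabilityProject and using the zookeeperN names from the volume mounts and the cleanup commands; make_data_dirs is a hypothetical name:

```shell
#!/bin/sh
# Hypothetical helper: create the persistent-data folders for one node under BASE.
BASE=${BASE:-/traceabilityProject}

make_data_dirs() {
  case "$1" in
    node1) dirs="kafka orderer zookeeper1" ;;
    node2) dirs="kafka orderer peer zookeeper2" ;;
    node3) dirs="kafka orderer peer zookeeper3" ;;
    node4) dirs="kafka peer" ;;
    *) echo "usage: make_data_dirs node1|node2|node3|node4" >&2; return 1 ;;
  esac
  for d in $dirs; do
    mkdir -p "$BASE/$d" || return 1
  done
}
```

For example, run make_data_dirs node3 on node3 before its docker-compose up.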

Note:

The orderer nodes stay up only after all ZooKeeper and Kafka containers have started. When the four compose files are started at about the same time, there is some delay before they are ready.

(Otherwise the orderer fails with the error:

Cannot post CONNECT message = circuit breaker is open)
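Rather than guessing the delay, one can poll until the ZooKeeper and Kafka ports accept connections. A minimal sketch, assuming the default ports 2181 (ZooKeeper) and 9092 (Kafka), the host names from /etc/hosts, and an nc (netcat) build that supports -z (not every build does); wait_for_port and wait_for_cluster are hypothetical names. An open port is only a coarse readiness signal, not proof that the Kafka cluster is fully formed:

```shell
#!/bin/sh
# Hypothetical helpers: block until a TCP port accepts connections, or give up
# after a timeout in seconds (default 120).
wait_for_port() {
  host=$1 port=$2 timeout=${3:-120}
  elapsed=0
  until nc -z "$host" "$port" 2>/dev/null; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out waiting for $host:$port" >&2
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
}

# Assumed default ports; use the ones from your compose files if they differ.
wait_for_cluster() {
  for zk in zookeeper1 zookeeper2 zookeeper3; do wait_for_port "$zk" 2181 || return 1; done
  for kafka in kafka1 kafka2 kafka3 kafka4; do wait_for_port "$kafka" 9092 || return 1; done
}
```

After starting the compose files, run wait_for_cluster, then check with docker ps that the orderer containers are still running and restart any that exited.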

5. Peer node operations (transactions, channel, chaincode)

peer1 (node2):

(1) Enter the cli container:

docker exec -it cli bash

Check that the container's working directory contains the required files; if it does not, copy them from the host into the container manually (for example with docker cp):

(2) Create a channel

Inside the container:

cd /traceabilityProject/peer/channel-artifacts

peer channel create -o orderer1.trace.com:7050 -c tracechannel -t 50 -f ./tracechannel.tx

(3) Send tracechannel.block to the other nodes:

Because /traceabilityProject/peer/channel-artifacts is a mounted directory, the file already exists on the host.

Copy it to the other two peer nodes (<user> is a placeholder for the login user):

Exit the container and go to the host directory /traceabilityProject/channel-artifacts:

scp -r tracechannel.block <user>@172.27.34.203:/traceabilityProject/channel-artifacts

scp -r tracechannel.block <user>@172.27.34.204:/traceabilityProject/channel-artifacts

Because /traceabilityProject/channel-artifacts is mounted as a volume in the cli container on those nodes, the block is then also available inside their containers.

(4) Join the channel

Enter the container again and go to /traceabilityProject/peer/channel-artifacts:

peer channel join -b tracechannel.block
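The join can also be driven from the host with docker exec, which makes it easy to repeat on node3 and node4; peer channel list then confirms the result. A minimal sketch, assuming the CLI container is named cli (as in the docker exec commands above) and that the block sits in ARTIFACTS inside it; join_channel is a hypothetical name, and ARTIFACTS must be adjusted where the cli working directory differs:

```shell
#!/bin/sh
# Hypothetical helper: join the peer behind the "cli" container to the channel from
# the host, then confirm with `peer channel list`.
ARTIFACTS=/traceabilityProject/peer/channel-artifacts
CHANNEL=tracechannel

join_channel() {
  docker exec cli sh -c "cd $ARTIFACTS && peer channel join -b $CHANNEL.block" || return 1
  # -w: match the channel name as a whole word anywhere in the listing
  docker exec cli peer channel list 2>&1 | grep -qw "$CHANNEL"
}
```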

(

Commands for a complete restart:

docker-compose -f node1-docker-compose-up.yaml down --volumes --remove-orphans

docker volume prune

)

(Even without anchor peers set, the whole Fabric network still operates normally.)

(5) Install the chaincode

peer chaincode install -n cc_producer -v 1.0 -p github.com/hyperledger/fabric/examples/chaincode/go/tireTraceability-Demo/main/

(6) Instantiate the chaincode

peer chaincode instantiate -o orderer1.trace.com:7050 -C tracechannel -n cc_producer -v 1.0 -c '{"Args":["init"]}' -P "OR ('Org1MSP.member','Org2MSP.member','Org3MSP.member')"

peer2 (node3):

docker exec -it cli bash

cd channel-artifacts/

peer channel join -b tracechannel.block

peer chaincode install -n cc_agency -v 1.0 -p github.com/hyperledger/fabric/chaincode/go/traceability/src/origin_agency/main/

peer chaincode instantiate -o orderer1.trace.com:7050 -C tracechannel -n cc_agency -v 1.0 -c '{"Args":["init"]}' -P "OR ('Org1MSP.member','Org2MSP.member','Org3MSP.member')"

peer3 (node4):

docker exec -it cli bash

cd channel-artifacts/

peer channel join -b tracechannel.block

peer chaincode install -n cc_producer -v 1.0 -p github.com/hyperledger/fabric/chaincode/go/traceability/src/origin_producer/main/

peer chaincode install -n cc_agency -v 1.0 -p github.com/hyperledger/fabric/chaincode/go/traceability/src/origin_agency/main/

peer chaincode install -n cc_retailer -v 1.0 -p github.com/hyperledger/fabric/chaincode/go/traceability/src/origin_retailer/main/

peer chaincode instantiate -o orderer1.trace.com:7050 -C tracechannel -n cc_retailer -v 1.0 -c '{"Args":["init","A","B","C"]}' -P "OR ('Org1MSP.member','Org2MSP.member','Org3MSP.member')"
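On peer3 the three installs differ only in the chaincode name and path, so they can be looped from the host. A minimal sketch, assuming the cli container name and the chaincode paths used in the peer3 commands above; install_all is a hypothetical name, and it instantiates only cc_retailer, matching those commands:

```shell
#!/bin/sh
# Hypothetical helper: install the three chaincodes through the "cli" container,
# then instantiate only cc_retailer.
SRC=github.com/hyperledger/fabric/chaincode/go/traceability/src
ORDERER=orderer1.trace.com:7050
POLICY="OR ('Org1MSP.member','Org2MSP.member','Org3MSP.member')"

install_all() {
  for role in producer agency retailer; do
    docker exec cli peer chaincode install -n "cc_$role" -v 1.0 \
      -p "$SRC/origin_$role/main/" || return 1
  done
  docker exec cli peer chaincode instantiate -o "$ORDERER" -C tracechannel \
    -n cc_retailer -v 1.0 -c '{"Args":["init","A","B","C"]}' -P "$POLICY"
}
```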

(Every time the containers are restarted, this process must be repeated: join the channel and install the chaincode. On the retailer peer, install all three chaincodes but instantiate only cc_retailer.)

Commands for a complete restart (run each one on its own node):

docker-compose -f node1-docker-compose-up.yaml down --volumes --remove-orphans

docker-compose -f node2-docker-compose-up.yaml down --volumes --remove-orphans

docker-compose -f node3-docker-compose-up.yaml down --volumes --remove-orphans

docker-compose -f node4-docker-compose-up.yaml down --volumes --remove-orphans

Then remove unused volumes on each node:

docker volume prune

Then delete the persistent data (run in /traceabilityProject on node1, node2, node3 and node4 respectively):

rm -rf kafka/* orderer/* zookeeper1/*

rm -rf kafka/* orderer/* peer/* zookeeper2/*

rm -rf kafka/* orderer/* peer/* zookeeper3/*

rm -rf kafka/* peer/*
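The cleanup commands above differ per node only in the compose file and the folder list, so each node's reset can be wrapped in one function. A minimal sketch, assuming the compose files live in /traceabilityProject/startup (the paths used in the shutdown commands) and the data folders in /traceabilityProject; reset_node and the COMPOSE override are hypothetical, and since it deletes data, double-check BASE before running it:

```shell
#!/bin/sh
# Hypothetical helper: full reset of one node. Stops its containers, removes their
# volumes and unused Docker volumes, then empties the persistent-data folders.
BASE=${BASE:-/traceabilityProject}
COMPOSE=${COMPOSE:-docker-compose}

reset_node() {
  case "$1" in
    node1) dirs="kafka orderer zookeeper1" ;;
    node2) dirs="kafka orderer peer zookeeper2" ;;
    node3) dirs="kafka orderer peer zookeeper3" ;;
    node4) dirs="kafka peer" ;;
    *) echo "usage: reset_node node1|node2|node3|node4" >&2; return 1 ;;
  esac
  "$COMPOSE" -f "$BASE/startup/$1-docker-compose-up.yaml" down --volumes --remove-orphans || return 1
  docker volume prune -f || return 1   # -f skips the confirmation prompt
  for d in $dirs; do
    # ${BASE:?} aborts instead of expanding to an empty string (which would target /kafka/*)
    rm -rf "${BASE:?}/$d/"*
  done
}
```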

To stop the containers before shutting down the machines (each command on its own node):

docker-compose -f /traceabilityProject/startup/node1-docker-compose-up.yaml down

docker-compose -f /traceabilityProject/startup/node2-docker-compose-up.yaml down

docker-compose -f /traceabilityProject/startup/node3-docker-compose-up.yaml down

docker-compose -f /traceabilityProject/startup/node4-docker-compose-up.yaml down

Original link: Fabric multi-machine kafka deployment (docker mode)