Tutorial: Generating triplets for training a deep model with triplet loss

I believe that many people who want to use triplet loss get stuck on generating the triplets. Of course, if your machine is powerful enough, much of the code available online will serve you well. There are many handwritten digit recognition examples on GitHub, and they are all very useful, for example:

https://github.com/charlesLucky/keras-triplet-loss-mnist

However, that repository only shows how to calculate the loss, not how to generate the triplets. Its triplets are picked from within each batch. Fortunately, MNIST has only ten classes, so it is easy to find enough positives and negatives in a batch. But for large datasets with many classes, how do you generate them?
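To put a rough number on why in-batch picking works for MNIST but not for many classes, here is a small sketch. It assumes every sample's class is drawn uniformly at random and that the batch size is 64; both are illustrative assumptions, not settings from the repository above. The 5751 is simply the class count suggested by the cycle_length used later in this post, and `prob_anchor_has_positive` is a hypothetical helper name.

```python
def prob_anchor_has_positive(num_classes, batch_size):
    # Chance that at least one of the other batch_size - 1 samples
    # shares the anchor's class, assuming uniform class sampling.
    return 1.0 - (1.0 - 1.0 / num_classes) ** (batch_size - 1)


p_mnist = prob_anchor_has_positive(num_classes=10, batch_size=64)
p_large = prob_anchor_has_positive(num_classes=5751, batch_size=64)

print(f"10 classes:   {p_mnist:.4f}")
print(f"5751 classes: {p_large:.4f}")
```

Under these assumptions an anchor almost always finds a positive in the batch with ten classes, but only about one time in a hundred with 5751 classes, so the triplets have to be built deliberately.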

The FaceNet source code shows one way to do it:

https://github.com/davidsandberg/facenet

But that TF1 code is hard to follow, so let's look at how to generate triplets in TF2!

First we need to look at tf.data.Dataset.interleave().

interleave() is a method of Dataset, so it is called on an existing Dataset.

    interleave(
        map_func,
        cycle_length=AUTOTUNE,
        block_length=1,
        num_parallel_calls=None
    )

The following explanation and example are taken from [1] and [2].

Explanation:

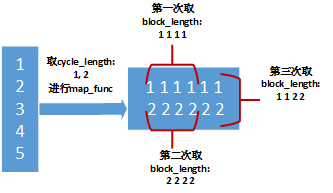

- Suppose we have a Dataset A.

- Take cycle_length elements from A and apply map_func to each of them, obtaining cycle_length new Dataset objects.

- Then fetch data from these newly generated Dataset objects in turn, taking block_length items from each one per visit.

- When one of these newly generated Dataset objects is exhausted, take the next element from the original Dataset, apply map_func to it, and let the resulting Dataset take the exhausted one's place, and so on.

For example:

    import tensorflow as tf

    a = tf.data.Dataset.range(1, 6)  # ==> [1, 2, 3, 4, 5]

    # map_func turns each element x into a Dataset that repeats x six times.
    b = a.interleave(lambda x: tf.data.Dataset.from_tensors(x).repeat(6),
                     cycle_length=2, block_length=4)

    for item in b:
        print(item.numpy(), end=', ')

The result is:

    1, 1, 1, 1, 2, 2, 2, 2, 1, 1, 2, 2, 3, 3, 3, 3, 4, 4, 4, 4, 3, 3, 4, 4, 5, 5, 5, 5, 5, 5,

The schematic diagram may make the program above clearer. In it, map_func repeats each element 6 times via repeat(6).
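The fetch order can also be reproduced without TensorFlow. The following is a plain-Python sketch of the rules described above, not the library's implementation; `simulate_interleave` is a hypothetical name, and it models only the sequential, deterministic case.

```python
def simulate_interleave(elements, map_func, cycle_length, block_length):
    source = iter(elements)
    slots = [None] * cycle_length  # at most cycle_length nested iterators are open
    end_of_input = False
    num_open = 0
    idx = 0    # slot currently being read
    taken = 0  # items already taken from the current block

    while not end_of_input or num_open > 0:
        if slots[idx] is not None:
            try:
                item = next(slots[idx])
            except StopIteration:
                # Nested iterator exhausted: free the slot, move to the next one.
                slots[idx] = None
                num_open -= 1
                idx = (idx + 1) % cycle_length
                taken = 0
                continue
            yield item
            taken += 1
            if taken == block_length:
                # Block finished: move to the next slot.
                idx = (idx + 1) % cycle_length
                taken = 0
        elif not end_of_input:
            # Empty slot: pull the next input element and open it here.
            try:
                element = next(source)
            except StopIteration:
                end_of_input = True
                continue
            slots[idx] = iter(map_func(element))
            num_open += 1
        else:
            # Empty slot and no input left: skip it.
            idx = (idx + 1) % cycle_length
            taken = 0


result = list(simulate_interleave(range(1, 6), lambda x: [x] * 6,
                                  cycle_length=2, block_length=4))
print(result)
```

For these arguments the sketch yields the same sequence as the TensorFlow example: an exhausted slot ends its block early (the short runs 1, 1 and 2, 2) and is only refilled the next time it is visited.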

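Then we can use it to generate the triplets we want:

    import os
    import tensorflow as tf

    def pair_parser(imgs):
        # imgs holds 4 paths: two from one class, then two from the next class.
        # Keep (anchor, positive, negative); [1, 1, 2] is only a placeholder.
        # Note y_true shape will be [batch, 3]
        return (imgs[0], imgs[1], imgs[2]), ([1, 1, 2])

    def processOneDir4(basedir):
        # Endless, shuffled stream of the .jpg paths in one class directory.
        list_ds = tf.data.Dataset.list_files(basedir + "/*.jpg").shuffle(100).repeat()
        return list_ds

    def path_to_label(path):
        # The label is the parent directory name, assumed to be an integer.
        # int() cannot convert a string tensor inside map(), so use to_number.
        parts = tf.strings.split(path, os.path.sep)
        return tf.strings.to_number(parts[0, -2], tf.int32)

    def generateTriplet(imgs, label):
        # Replace the placeholder labels with the real class ids.
        labels = [path_to_label(imgs[0]), path_to_label(imgs[1]), path_to_label(imgs[2])]
        return (imgs), (labels)

    dbdir = "./data"
    allsubdir = [os.path.join(dbdir, o) for o in os.listdir(dbdir)
                 if os.path.isdir(os.path.join(dbdir, o))]

    path_ds = tf.data.Dataset.from_tensor_slices(allsubdir)

    # block_length=2 yields two paths per class in turn, so each batch of 4 is
    # [a1, a2, b1, b2]. cycle_length=5751 is presumably the number of class directories.
    ds = (path_ds
          .interleave(processOneDir4, cycle_length=5751, block_length=2,
                      num_parallel_calls=4)
          .batch(4, True)        # True: drop an incomplete final batch
          .map(pair_parser, -1)  # -1 means AUTOTUNE
          .batch(1, True)
          .map(generateTriplet, -1))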
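To see which paths end up in each triplet, the pipeline above can be mimicked in plain Python. This is a sketch under assumptions: the file names and the `toy` dictionary are made up, shuffling is left out so the order is predictable, and the helper names are hypothetical. It only imitates the interleave(block_length=2) followed by batch(4) pattern; it is not the tf.data code itself.

```python
import itertools
import posixpath


def interleaved_paths(class_files, block_length=2):
    # Stand-in for interleave over per-class repeat() streams:
    # visit every class in turn and take block_length paths per visit.
    streams = [itertools.cycle(files) for files in class_files.values()]
    while True:
        for stream in streams:
            for _ in range(block_length):
                yield next(stream)


def label_of(path):
    # The label is the parent directory name, e.g. "data/7/x.jpg" -> 7.
    return int(posixpath.basename(posixpath.dirname(path)))


def triplets(class_files):
    paths = interleaved_paths(class_files)
    while True:
        # batch(4): two paths from one class, two from the next class.
        anchor, positive, negative, _unused = itertools.islice(paths, 4)
        labels = (label_of(anchor), label_of(positive), label_of(negative))
        yield (anchor, positive, negative), labels


# Hypothetical toy dataset: three classes with two images each.
toy = {
    "0": ["data/0/a.jpg", "data/0/b.jpg"],
    "1": ["data/1/c.jpg", "data/1/d.jpg"],
    "2": ["data/2/e.jpg", "data/2/f.jpg"],
}

first_three = list(itertools.islice(triplets(toy), 3))
for paths, labels in first_three:
    print(paths, labels)
```

In each triplet the anchor and positive come from the same directory and the negative from the class visited next. The fourth path of every batch is discarded, and the negative is chosen by visiting order rather than by difficulty, so this scheme does no hard-negative mining.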

OK.

References:

[1] https://tensorflow.google.cn/api_docs/python/tf/data/Dataset?version=stable#interleave

[2] https://blog.csdn.net/menghuanshen/article/details/104240189