Table of Contents

1 Basics of pre-trained networks (transfer learning)

1.2 Keras built-in pre-trained networks

1.3.2 Comparison between VGG16 and VGG19

1.3.3 VGG has two big disadvantages

2 Sharing and using the weights of classic pre-trained networks

2.1 Add a custom layer on top of the pre-trained convolutional base

2.2 Freeze all layers of the convolutional base

2.3 Train the added classification layer

2.4 Unfreeze part of the convolutional base

2.5 Jointly train the unfrozen convolutional layers and the added custom layers

3.4 Training with the convolutional layers frozen

3.5 Unfreezing Xception for fine-tuning

1 Basics of pre-trained networks (transfer learning)

1.1 Transfer learning

A pre-trained network, the basis of transfer learning, is a saved convolutional neural network that was previously trained on a large dataset (typically a large-scale image classification task).

If the original dataset is large and general enough, the spatial hierarchy of features learned by the pre-trained network can serve as an effective general-purpose model for extracting features of the visual world.

Even if the new problem and task are completely different from the original task, the learned features are portable across problems. This is an important advantage of deep learning over shallow learning methods, and it makes deep learning very effective for small-data problems.

1.2 Keras built-in pre-trained networks

The Keras library includes classic model architectures such as:

- VGG16, VGG19

- ResNet50

- Inception v3

- Xception
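As a rough illustration of how these architectures are exposed, the sketch below looks them up under `keras.applications` and builds one convolutional base. It assumes TensorFlow's bundled Keras (`from tensorflow import keras`); the `ARCHITECTURES` table and the `build_base` helper are hypothetical names used only for this example. `weights=None` is the default here so that nothing is downloaded; passing `weights='imagenet'` would load the pre-trained ImageNet weights instead.

```python
from tensorflow import keras

# Constructors for the architectures listed above.
ARCHITECTURES = {
    "VGG16": keras.applications.VGG16,
    "VGG19": keras.applications.VGG19,
    "ResNet50": keras.applications.ResNet50,
    "InceptionV3": keras.applications.InceptionV3,
    "Xception": keras.applications.Xception,
}


def build_base(name, weights=None, input_shape=(224, 224, 3)):
    """Build a convolutional base without the ImageNet classifier head.

    `build_base` is a hypothetical helper for this sketch, not a Keras API.
    """
    constructor = ARCHITECTURES[name]
    return constructor(weights=weights, include_top=False, input_shape=input_shape)


# Random weights (weights=None), so no download is triggered.
base = build_base("VGG16")
print(base.name, "parameters:", base.count_params())
```

With `include_top=False` only the convolutional part is built, which is the piece that gets reused in the rest of this article.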

1.3 ImageNet

ImageNet is a manually labeled image database built for computer vision research. It currently contains roughly 22,000 categories.

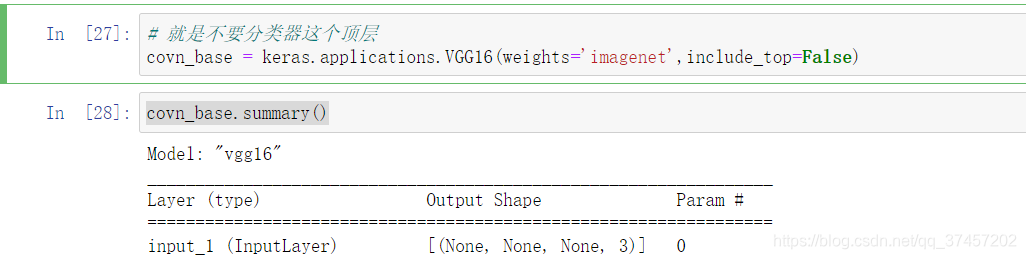

Code implementation of transfer learning network architectures

1.3.0 VGG16 and VGG19

The VGG architecture was proposed in 2014 by Simonyan and Zisserman in the paper "Very Deep Convolutional Networks for Large-Scale Image Recognition". VGG has a simple and effective structure: the convolutional layers use only 3×3 kernels, stacked to increase the depth of the network, and max pooling progressively reduces the spatial size of the feature maps. The last three layers are two fully connected layers with 4096 neurons each and one softmax layer.

In practice VGG is now outdated, as later experiments will show. Still, the VGG family closely resembles the networks covered earlier, so it is worth analyzing.

1.3.1 Implementation Principle

Schematic diagram of the VGG implementation: each stage makes the feature maps smaller in height and width.

1.3.2 Comparison between VGG16 and VGG19

1.3.3 VGG has two big disadvantages

- The network's weights are very large and take up a lot of disk space.

- Training is very slow.
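To make the comparison and the disk-space point concrete, here is a rough pure-Python parameter count. It assumes the standard configurations from the paper (13 convolutional layers for VGG16, 16 for VGG19, a 224×224 RGB input, and 1000 output classes); the names `VGG16_BLOCKS`, `VGG19_BLOCKS`, and `vgg_param_counts` are made up for this sketch.

```python
# Convolutional blocks as (number of 3x3 conv layers, output channels).
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
VGG19_BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]


def vgg_param_counts(blocks, input_size=224, num_classes=1000):
    """Return (conv_params, classifier_params) for a VGG-style network."""
    conv_params = 0
    in_channels = 3
    size = input_size
    for num_convs, out_channels in blocks:
        for _ in range(num_convs):
            # 3x3 kernel weights plus one bias per output channel.
            conv_params += 3 * 3 * in_channels * out_channels + out_channels
            in_channels = out_channels
        size //= 2  # every block ends with 2x2 max pooling

    fc_params = 0
    in_features = size * size * in_channels  # flattened feature map
    for out_features in (4096, 4096, num_classes):
        fc_params += in_features * out_features + out_features
        in_features = out_features
    return conv_params, fc_params


for name, blocks in [("VGG16", VGG16_BLOCKS), ("VGG19", VGG19_BLOCKS)]:
    conv, fc = vgg_param_counts(blocks)
    total = conv + fc
    size_mib = total * 4 / 2**20  # 4 bytes per float32 weight
    print(f"{name}: conv={conv:,} fc={fc:,} total={total:,} (~{size_mib:.0f} MiB)")
```

Under these assumptions VGG16 comes out at 138,357,544 parameters and VGG19 at 143,667,240. In both cases the three fully connected layers account for most of the total, which is why dropping the classifier head (`include_top=False`) shrinks the saved model so much.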

2 Sharing and using the weights of classic pre-trained networks

This section mainly serves as groundwork for fine-tuning.

Fine-tuning

Fine-tuning means freezing the bottom convolutional layers of the base model and jointly training the newly added classifier layers together with the top few convolutional layers. This allows us to "fine-tune" the higher-order feature representations in the base model to make them more relevant to the specific task.

2.1 Add a custom layer on top of the pre-trained convolutional base

2.2 Freeze all layers of the convolutional base

2.3 Train the added classification layer

2.4 Unfreeze part of the convolutional base

2.5 Jointly train the unfrozen convolutional layers and the added custom layers
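Steps 2.1 to 2.5 can be sketched end to end as follows. This is a minimal sketch rather than the article's original code: it assumes TensorFlow's bundled Keras, uses VGG16 as the convolutional base, and the helper names (`build_model`, `compile_model`, `fine_tune`), the classifier head, the learning rates, and the number of unfrozen layers are all illustrative choices.

```python
import numpy as np
from tensorflow import keras


def build_model(weights="imagenet", input_shape=(224, 224, 3), num_classes=2):
    # 2.1 Add a custom classifier on top of the pre-trained convolutional base.
    conv_base = keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=input_shape)
    inputs = keras.Input(shape=input_shape)
    x = conv_base(inputs)
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dense(256, activation="relu")(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs), conv_base


def compile_model(model, learning_rate):
    # Recompile after changing `trainable` so the change takes effect.
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"])


def fine_tune(model, conv_base, x, y, head_epochs=1, tune_epochs=1, unfreeze_last=3):
    # 2.2 Freeze every layer of the convolutional base.
    conv_base.trainable = False
    compile_model(model, learning_rate=1e-3)
    # 2.3 Train only the added classifier.
    model.fit(x, y, epochs=head_epochs, verbose=0)
    # 2.4 Unfreeze only the last few layers of the base.
    conv_base.trainable = True
    for layer in conv_base.layers[:-unfreeze_last]:
        layer.trainable = False
    # 2.5 Jointly train the unfrozen layers and the classifier, using a much
    # lower learning rate so the pre-trained weights change only slightly.
    compile_model(model, learning_rate=1e-5)
    model.fit(x, y, epochs=tune_epochs, verbose=0)
    return model


if __name__ == "__main__":
    # Smoke test with random weights and random data (no download). Real use
    # would pass weights="imagenet" and an actual image dataset.
    x = np.random.rand(8, 32, 32, 3).astype("float32")
    y = np.random.randint(0, 2, size=8)
    model, conv_base = build_model(weights=None, input_shape=(32, 32, 3))
    fine_tune(model, conv_base, x, y)
    print(len(model.trainable_weights), "trainable weight tensors")
```

The classifier is trained first because a randomly initialized head produces large gradients that could otherwise destroy the pre-trained features in the unfrozen layers.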

Result analysis

3 Common training models

3.1 Available training models

3.2 Model introduction

3.3 Xception

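    # Assumes TensorFlow's bundled Keras
    from tensorflow import keras

    # include_top=False leaves out the top classifier layers
    covn_base = keras.applications.xception.Xception(weights='imagenet',
                                                     include_top=False,
                                                     input_shape=(256, 256, 3),
                                                     pooling='avg')

3.4 Training with the convolutional layers frozen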

Note:

In fact, the accuracy is already very high at this point; we continue only for the sake of learning and comparison (there may be overfitting).

3.5 Unfreezing Xception for fine-tuning

Next, we continue from the previous training run and fine-tune the model.
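A minimal sketch of continuing from the frozen stage into fine-tuning is shown below. It assumes a binary classification task with a single sigmoid output, and the helper names, learning rates, and the choice of unfreezing the last 30 layers are illustrative rather than taken from the original code. Two details are specific to this stage: the base is called with `training=False` so its BatchNormalization layers stay in inference mode, and `initial_epoch` makes the second `fit` call pick up the epoch count where the first one stopped.

```python
import numpy as np
from tensorflow import keras


def build_xception_classifier(weights="imagenet", input_shape=(256, 256, 3)):
    covn_base = keras.applications.xception.Xception(
        weights=weights, include_top=False,
        input_shape=input_shape, pooling="avg")
    inputs = keras.Input(shape=input_shape)
    # training=False keeps BatchNormalization in inference mode (moving
    # statistics are not updated), even after the base is unfrozen.
    x = covn_base(inputs, training=False)
    outputs = keras.layers.Dense(1, activation="sigmoid")(x)
    return keras.Model(inputs, outputs), covn_base


def compile_binary(model, learning_rate):
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="binary_crossentropy",
        metrics=["accuracy"])


def train_then_fine_tune(model, covn_base, x, y,
                         initial_epochs=1, fine_tune_epochs=1, unfreeze_last=30):
    # Stage 1 (section 3.4): base frozen, only the classifier is trained.
    covn_base.trainable = False
    compile_binary(model, learning_rate=1e-3)
    model.fit(x, y, epochs=initial_epochs, verbose=0)

    # Stage 2 (section 3.5): unfreeze the top of the base and keep training.
    covn_base.trainable = True
    for layer in covn_base.layers[:-unfreeze_last]:
        layer.trainable = False
    compile_binary(model, learning_rate=1e-5)
    # `epochs` is the final epoch index, so this runs `fine_tune_epochs` more.
    return model.fit(x, y,
                     epochs=initial_epochs + fine_tune_epochs,
                     initial_epoch=initial_epochs,
                     verbose=0)


if __name__ == "__main__":
    # Smoke test with random weights and random data (no download).
    x = np.random.rand(4, 71, 71, 3).astype("float32")
    y = np.array([0, 1, 0, 1], dtype="float32").reshape(-1, 1)
    model, covn_base = build_xception_classifier(weights=None, input_shape=(71, 71, 3))
    history = train_then_fine_tune(model, covn_base, x, y)
    print("fine-tuning epochs run:", history.epoch)
```

With real images, the inputs would normally be scaled with `keras.applications.xception.preprocess_input` before being fed to the model.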