Table of Contents

Hadoop installation and operation

1. The environment used by the author

2. From installation to operation

2.4 Perform pseudo-distributed configuration

2.5 Start pseudo-distributed hadoop

2.7 Running Hadoop pseudo-distributed instances

Hadoop installation and operation

Hadoop installation is roughly divided into 5 steps:

- Create a hadoop user (Mac users can simply use their own account and skip this step, which avoids unnecessary trouble)

- Set up SSH login permissions

- Install the Java environment

- Stand-alone installation and configuration

- Pseudo-distributed installation and configuration

1. The environment used by the author

macOS 10.14.6

2. From installation to operation

2.1 Set SSH login permissions

SSH is short for Secure Shell, a security protocol that works at the application layer on top of the transport layer. We configure SSH so that passwordless login is possible. While a Hadoop cluster is running, the nodes are accessed continuously: processes on the NameNode must keep contacting the daemons on the other nodes so that they can communicate with each other. Passwordless SSH login must therefore be set up for those machines.

First, go to System Preferences -> Sharing, check Remote Login, and allow access for all users.

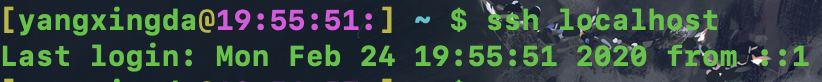

Then open the terminal and enter `ssh localhost`. After you enter your password, the SSH login succeeds.
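The steps above still ask for a password. One common way to get the passwordless login this section calls for is a key pair, sketched below. This is a minimal sketch, assuming OpenSSH's `ssh-keygen` is installed; the function name `setup_passwordless_ssh` and its optional directory argument are illustrative and not part of the original setup.

```shell
# Sketch: enable key-based (passwordless) SSH login for the current account.
# setup_passwordless_ssh is a hypothetical helper; the directory argument
# defaults to ~/.ssh and exists only so the steps can be tried elsewhere.
setup_passwordless_ssh() {
    ssh_dir="${1:-$HOME/.ssh}"
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"

    # Generate an RSA key pair with an empty passphrase, unless one exists.
    if [ ! -f "$ssh_dir/id_rsa" ]; then
        ssh-keygen -q -t rsa -N "" -f "$ssh_dir/id_rsa"
    fi

    # Authorize the public key for logins, without adding it twice.
    grep -qxF -f "$ssh_dir/id_rsa.pub" "$ssh_dir/authorized_keys" 2>/dev/null ||
        cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
    chmod 600 "$ssh_dir/authorized_keys"
}

# Usage (run once; afterwards `ssh localhost` should not ask for a password):
#   setup_passwordless_ssh
```

The function is only defined here, not called, so nothing in `~/.ssh` changes until you run it yourself.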

2.2 Install JAVA environment

Hadoop is an open-source framework written in Java that uses a simple programming model to store and process big data in a distributed environment across clusters of computers. We therefore need to prepare a Java environment.

First open the terminal and enter `java -version` to check the current Java version. Macs from recent years should come with Java, but the version may be old, and an old version can interfere with installing Hadoop. If the version is too low, go to Oracle's official website to download and install the latest Java.

If `java -version` reports that Java is not installed, install it from the official website as well.

Link: Oracle official website, Java download (dmg installer)

After the installation is complete, we configure the Java environment variables. The JDK is installed in the `/Library/Java/JavaVirtualMachines` directory. There may be several JDK versions in this directory; we write the appropriate (newest) one into the environment variable.

Enter `vim ~/.bash_profile` in the terminal to configure the Java path. Here `~` stands for the current user's home directory, and the "bash" in `.bash_profile` is short for Bourne-Again Shell, an extended version of the Bourne shell. As the name suggests, `.bash_profile` is a bash configuration file.

After opening the file in vim, switch to the English input method and press `i` to enter insert mode. Then use the `export` keyword to set the environment variable: on a blank line, enter `export JAVA_HOME="/Library/Java/JavaVirtualMachines/<your selected jdk version>.jdk/Contents/Home"`, making sure the path goes all the way down to the Home level. Then press ESC to return to vim's command mode and, still using the English input method, enter `:wq` to save and exit.

Then run `source ~/.bash_profile` to make the configuration take effect. After that, enter `java -version` in the terminal to see the Java version.

Note that if you type `which java` in the terminal, the output shows Java at `/usr/bin/java`. This is actually a stand-in file that points to `/Library/Java/JavaVirtualMachines/<jdk version>.jdk/Contents/Home/bin/java`.
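To avoid typos in the long path, the `JAVA_HOME` line can be generated rather than typed by hand. The following is a small sketch; `java_home_line` is a hypothetical helper and `jdk-13.0.2.jdk` is only an example directory name, so substitute whichever directory exists under `/Library/Java/JavaVirtualMachines` on your machine.

```shell
# Sketch: build the JAVA_HOME export line for a chosen JDK directory.
# java_home_line is a hypothetical helper; "jdk-13.0.2.jdk" is an example
# directory name, not necessarily the one installed on your machine.
java_home_line() {
    printf 'export JAVA_HOME="/Library/Java/JavaVirtualMachines/%s/Contents/Home"\n' "$1"
}

# Print the line so it can be checked before it is written anywhere.
java_home_line "jdk-13.0.2.jdk"

# To apply it, append the line to ~/.bash_profile and reload the file:
#   java_home_line "jdk-13.0.2.jdk" >> ~/.bash_profile
#   source ~/.bash_profile
#   java -version
```

On macOS, the `/usr/libexec/java_home` utility prints the Home path of the default JDK, which is another way to find the value without browsing the directory.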

2.3 Install hadoop

Here we use the Homebrew package manager to install the latest version of Hadoop. See the official website for how to install Homebrew. It is very simple, a single command in the terminal, but mind your network environment, since you may need a proxy to reach it.

Link: Homebrew official website

After Homebrew is installed, enter `brew install hadoop` in the terminal to install the latest Hadoop. Note that brew updates itself the first time it is used, and its default source is hosted abroad, so readers with restricted internet access may get stuck at this step. In that case, first switch to a domestic mirror: enter the Homebrew directory with `cd /usr/local/Homebrew`, then run `git remote set-url origin http://mirrors.ustc.edu.cn/homebrew.git` to use the USTC (University of Science and Technology of China) mirror.

After the installation is complete, enter `hadoop version` to check the version. If version information is printed, the installation succeeded.

Type `which hadoop` in the terminal to see where Hadoop is installed: `/usr/local/bin/hadoop`. At this point Hadoop runs in local mode by default.

2.4 Perform pseudo-distributed configuration

Hadoop has three operating modes:

- Local mode (default mode): no need to enable a separate process, it can be used directly for testing and development.

- Pseudo-distributed mode: equivalent to fully distributed mode, but with only one node.

- Fully distributed mode: multiple nodes run together.

Hadoop can run in pseudo-distributed mode on a single node. Each Hadoop daemon runs as a separate Java process, the node acts as both NameNode and DataNode, and files are read from HDFS. Pseudo-distributed mode requires changes to some configuration files.

As mentioned earlier, the Hadoop location reported by the terminal is `/usr/local/bin/hadoop`. Attentive readers will notice that this is also a stand-in file. If we right-click it and choose Show Original, we find that the actual installation path of Hadoop is `/usr/local/Cellar/hadoop/3.2.1_1/bin/hadoop`, and that the configuration files are located in `/usr/local/Cellar/hadoop/3.2.1_1/libexec/etc/hadoop`. We need to modify `core-site.xml` and `hdfs-site.xml`.
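The same lookup can be done from the terminal instead of the Finder. This is a minimal sketch using the standard `readlink` command; `link_target` is a hypothetical helper name, and the path it prints may be relative to the directory that contains the link.

```shell
# Sketch: follow a symbolic link one step to see what it points at.
# link_target is a hypothetical helper built on the standard readlink command.
link_target() {
    readlink "$1"
}

# On the author's machine this should print a path that ends in
# Cellar/hadoop/3.2.1_1/bin/hadoop (possibly relative to /usr/local/bin).
if [ -L /usr/local/bin/hadoop ]; then
    link_target /usr/local/bin/hadoop
fi
```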

Hadoop's configuration files are in XML format, and each setting is made by declaring the name and value of a property.

First, modify `core-site.xml`. Enter the actual Hadoop configuration directory with `cd /usr/local/Cellar/hadoop/3.2.1_1/libexec/etc/hadoop`, then open the file with `vim core-site.xml`.

The following figure shows the original `core-site.xml` file. We insert our configuration between lines 19 and 20, that is, between `<configuration>` and `</configuration>`:

Here we need to set up a tmp directory where Hadoop stores temporary files. Otherwise Hadoop uses the system's default temporary directory, which is cleared when you log out of the system, so the next time we start Hadoop we would have to initialize it again.

We also need to set a logical name for the HDFS path, so that the distributed file system can later be accessed through that name. The logical name I set here is `hdfs://localhost:9000`; the port number is my own choice. Before settling on a port, you can open another terminal window and enter `lsof -i:xxx`, where xxx is the port number you want to use. If the command prints anything, the port is already occupied; if it prints nothing, the port is free and can be used.

Note that 4 spaces are used as 1 indent.
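The two settings described above correspond to the `hadoop.tmp.dir` and `fs.defaultFS` properties. As a sketch, the snippet below writes a candidate file to a scratch location so it can be reviewed before anything is pasted into the real `core-site.xml`. The tmp directory path is an assumed example, not necessarily the one the author used.

```shell
# Sketch: write a candidate core-site.xml to a scratch file for review.
# The hadoop.tmp.dir value is an assumed example path; pick your own.
out="${TMPDIR:-/tmp}/core-site.example.xml"
cat > "$out" <<'EOF'
<configuration>
    <!-- Directory where Hadoop keeps its temporary files -->
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/usr/local/Cellar/hadoop/hdfs/tmp</value>
    </property>
    <!-- Logical name (URI) used to reach HDFS -->
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>
EOF
echo "wrote $out"
```

Only the `<property>` elements need to be copied into the existing file, since it already contains the `<configuration>` element.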

Next, modify `hdfs-site.xml`. Still in the directory `/usr/local/Cellar/hadoop/3.2.1_1/libexec/etc/hadoop`, open the file with `vim hdfs-site.xml`.

Again, enter our configuration between `<configuration>` and `</configuration>`. First, set the number of replicas. In the distributed file system HDFS, each data block is stored in several copies for redundancy, so that if one machine goes down the others can still be used normally. A pseudo-distributed setup has only one machine and one node, so the number is set to 1. Next, set one local disk directory to store the fsimage file and another to store HDFS data blocks (the block is the basic unit of file storage in HDFS). Finally, set the permissions so that non-root users can also write files to HDFS.
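The four settings above could look roughly like the following. This is a sketch that writes a candidate file to a scratch location for review. The property names are the ones from Hadoop 3.x's HDFS defaults, while the two directory paths are assumed examples.

```shell
# Sketch: write a candidate hdfs-site.xml to a scratch file for review.
# The name/data directory paths are assumed examples; pick your own.
out="${TMPDIR:-/tmp}/hdfs-site.example.xml"
cat > "$out" <<'EOF'
<configuration>
    <!-- One copy of each block: there is only one node -->
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <!-- Local directory for the fsimage file -->
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/usr/local/Cellar/hadoop/hdfs/tmp/dfs/name</value>
    </property>
    <!-- Local directory for HDFS data blocks -->
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/usr/local/Cellar/hadoop/hdfs/tmp/dfs/data</value>
    </property>
    <!-- Disable permission checks so non-root users can write -->
    <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
    </property>
</configuration>
EOF
echo "wrote $out"
```

Disabling permission checks is convenient on a single-user test machine, but it is not something to carry over to a shared cluster.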

At this point the pseudo-distributed configuration is complete. Note that we have not configured any of the YARN-related files. Next, we will run an example directly with MapReduce.

2.5 Start pseudo-distributed hadoop

First, format the NameNode. In the directory `/usr/local/Cellar/hadoop/3.2.1_1`, enter `hdfs namenode -format` in the terminal. A lot of output follows; as long as you see the message shown in the figure below, the format succeeded.

Then start the NameNode and DataNode daemons. This time, in the directory `/usr/local/Cellar/hadoop/3.2.1_1/libexec/sbin`, enter `start-dfs.sh` directly. You should see the result shown in the following figure: the namenodes, datanodes, and secondary namenodes are started.

You can also check whether the daemons have started by entering the `jps` command in the terminal.
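Reading the `jps` listing by eye works, but the check can also be scripted. The sketch below assumes the usual `jps` line format of a process id followed by a class name; `hdfs_daemons_up` is a hypothetical helper, not a Hadoop command.

```shell
# Sketch: check that jps output lists the three HDFS daemons.
# hdfs_daemons_up is a hypothetical helper; it reads jps-style lines
# ("<pid> <class name>") on standard input.
hdfs_daemons_up() {
    listing="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode; do
        # Compare against the whole second field, so that
        # "SecondaryNameNode" is not mistaken for "NameNode".
        printf '%s\n' "$listing" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' || {
            echo "missing: $daemon"
            return 1
        }
    done
    echo "all HDFS daemons are running"
}

# Usage on a live system:
#   jps | hdfs_daemons_up
```

The function stops at the first missing daemon and returns a non-zero status, so it can be used in an `if` or before the next step of a script.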

Now open a browser and visit `http://localhost:9870/`. If the following page appears, startup was successful. On this page you can view information about the NameNode and DataNode. Note that in Hadoop 3.x the port number is 9870; many older tutorials mention port 50070 instead.

Similarly, enter `stop-dfs.sh` in the terminal to stop the daemons.

2.6 Handling WARN

After the author runs `start-dfs.sh`, the namenodes, datanodes, and secondary namenodes all start normally, but a warning line is printed underneath them.

This is because the Hadoop installed through brew has no `lib/native` directory under `/usr/local/Cellar/hadoop/3.2.1_1/libexec`. After searching online, I found two ways to solve the problem: 1. download the Hadoop source code and build `lib/native` with Maven; 2. find a copy that someone else has already compiled and put it directly into Hadoop. Here we choose to download a prebuilt `lib/native` from CSDN, put it in the `/usr/local/Cellar/hadoop/3.2.1_1/libexec` directory, and then restart Hadoop.

Link to the `lib/native` download: https://pan.baidu.com/s/1JUmC5JZcVQapSzUzzZFQhA (password: lvk0)

2.7 Running Hadoop pseudo-distributed instances

In pseudo-distributed mode, Hadoop reads its data from HDFS.

In the browser, at `http://localhost:9870/`, go to Utilities -> Browse the file system. You can see that the file system currently contains no directories.

First, we create a user directory in HDFS to make management easier. Enter the `/usr/local/Cellar/hadoop/3.2.1_1/bin` directory, which holds my Hadoop executables, and run `hdfs dfs -mkdir -p /user/yang`. Here `-mkdir` creates a directory, `-p` creates any missing parent directories along the path, and the path `/user/yang` can be chosen by the reader. The `hdfs dfs` prefix means the command applies only to the HDFS file system. There are also the `hadoop fs` and `hadoop dfs` commands: the former works with any supported file system, such as the local file system and HDFS, while the latter has the same effect as `hdfs dfs` and applies only to HDFS. After we refresh the browser, the new directory appears.

Next, we run a MapReduce example in the directory we just created. We create two more levels of directories under `/user/yang` by entering `hdfs dfs -mkdir -p /user/yang/mapreduce_example/input` in the terminal. Then we go to the `/usr/local/Cellar/hadoop/3.2.1_1/libexec/etc/hadoop` directory and upload its xml files to the new input directory with `hdfs dfs -put *.xml /user/yang/mapreduce_example/input`. The `-put` command uploads a local file or directory to a path in HDFS: its first argument is the file or directory to upload, and its second is the destination path in HDFS.

After uploading, you can list the files with `hdfs dfs -ls /user/yang/mapreduce_example/input`, or view them directly in the browser.

Then enter the bin directory with `cd /usr/local/Cellar/hadoop/3.2.1_1/bin` and run `hadoop jar /usr/local/Cellar/hadoop/3.2.1_1/libexec/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep /user/yang/mapreduce_example/input /user/yang/mapreduce_example/output 'dfs[a-z.]+'`. This is the grep example. The general form of the command is `hadoop jar <path to the examples jar> grep <input path> <output path> <regular expression>`. Note that the input directory holds the files you want to process (the program's input), and the output directory receives the results produced when the program finishes. The output directory must not exist before the job runs.

When the job finishes, use `hdfs dfs -cat /user/yang/mapreduce_example/output/*` to view the results.
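For convenience, the HDFS steps of this section can be collected into one script. The sketch below uses the paths from this article (Hadoop 3.2.1_1 installed by Homebrew, user directory `/user/yang`); the variable names and the function name `run_grep_example` are illustrative. It assumes HDFS is already running, that the input files have not been uploaded yet, and that the output directory does not exist.

```shell
# Sketch: the HDFS steps of this section collected in one function.
# Paths follow the article; variable and function names are hypothetical.
HADOOP_DIR="/usr/local/Cellar/hadoop/3.2.1_1"
HDFS_BASE="/user/yang/mapreduce_example"
EXAMPLES_JAR="$HADOOP_DIR/libexec/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar"

# Create the input directory, upload the xml files, list them, run the
# grep example, and print the result. Each step runs only if the previous
# one succeeded; the job itself fails if the output directory exists.
run_grep_example() {
    hdfs dfs -mkdir -p "$HDFS_BASE/input" &&
    hdfs dfs -put "$HADOOP_DIR"/libexec/etc/hadoop/*.xml "$HDFS_BASE/input" &&
    hdfs dfs -ls "$HDFS_BASE/input" &&
    hadoop jar "$EXAMPLES_JAR" grep "$HDFS_BASE/input" "$HDFS_BASE/output" 'dfs[a-z.]+' &&
    hdfs dfs -cat "$HDFS_BASE/output/*"
}

# Only run when the Hadoop commands are available on this machine.
if command -v hdfs >/dev/null 2>&1; then
    run_grep_example
else
    echo "hdfs not found on PATH; skipping"
fi
```

To rerun the job, first remove the old output with `hdfs dfs -rm -r /user/yang/mapreduce_example/output`.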

The results can also be viewed in the browser: the `part-r-00000` file under output holds the resulting counts.

You can also copy the results back to the local machine with the `-get` command: `hadoop fs -get /user/yang/mapreduce_example/output /Users/yangxingda/Desktop/hdfs-local`. The format is `hadoop fs -get <output directory on HDFS> <local directory to copy into>`.

In the output, each string that starts with dfs and matches the regular expression is listed together with the number of times it occurs.
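To get a feel for what the job computes, the same counting can be approximated locally with standard tools. This is only a sketch of the idea, not how Hadoop implements it; `count_matches` is a hypothetical helper and the sample input is illustrative, not taken from the author's run.

```shell
# Sketch: a local approximation of what the grep example computes.
# count_matches is a hypothetical helper; it reads text on standard input
# and prints "<count> <match>" lines, most frequent first.
count_matches() {
    grep -oE 'dfs[a-z.]+' | sort | uniq -c | sort -k1,1nr -k2 | awk '{ print $1, $2 }'
}

# Illustrative input, not taken from the author's run.
printf '%s\n' \
    '<name>dfs.replication</name>' \
    '<name>dfs.namenode.name.dir</name>' \
    'dfs.replication is set to 1' | count_matches
```

For this sample input the function prints `2 dfs.replication` followed by `1 dfs.namenode.name.dir`.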