K8S Introduction

Kubernetes (k8s) is Google's open-source container cluster management system, built on the experience of Google's internal system, Borg. It is a leading solution for distributed architectures based on container technology. Building on Docker, it provides a complete set of functions for containerized applications, including deployment and operation, resource scheduling, service discovery, and dynamic scaling, which makes large-scale container clusters easier to manage.

Kubernetes is a complete platform for supporting distributed systems. It offers full cluster management capabilities, extensible multi-level security protection and access mechanisms, multi-tenant application support, transparent service registration and discovery, a built-in load balancer, fault detection and self-healing, rolling service upgrades and online scaling, an extensible automatic resource scheduling mechanism, and resource quota management at multiple granularities. Kubernetes also provides management tools that cover development, deployment, testing, and operations monitoring.

In Kubernetes, the Service is the core of the distributed cluster architecture. A Service object has the following key features:

It has a uniquely assigned name

It has a virtual IP (Cluster IP, Service IP, or VIP) and a port number

It provides some kind of remote service capability

It is mapped to the set of container applications that provide this service capability

Advantages of Kubernetes:

Container orchestration

Lightweight

Open source

Elastic scaling

Load balancing

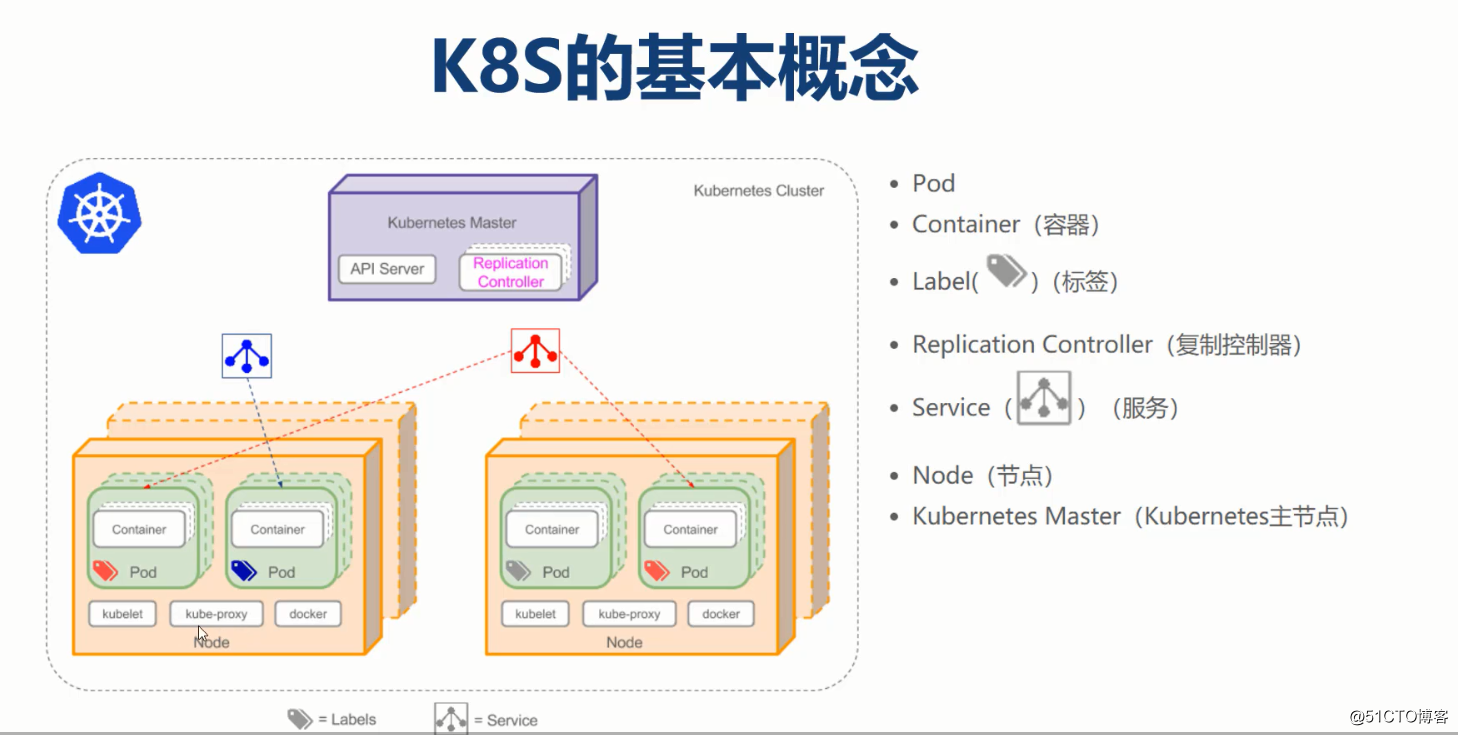

K8s concepts

cluster

A cluster is a collection of computing, storage, and network resources. K8s uses these resources to run container-based applications.

master

The master is the brain of the cluster. Its main responsibility is scheduling, that is, deciding where each application runs. The master runs the Linux operating system and can be a physical machine or a virtual machine. To achieve high availability, you can run multiple masters.

node

A node's responsibility is to run container applications. Nodes are managed by the master. Each node monitors and reports the status of its containers and manages their life cycle according to the master's requirements. A node runs the Linux operating system and can be a physical machine or a virtual machine.

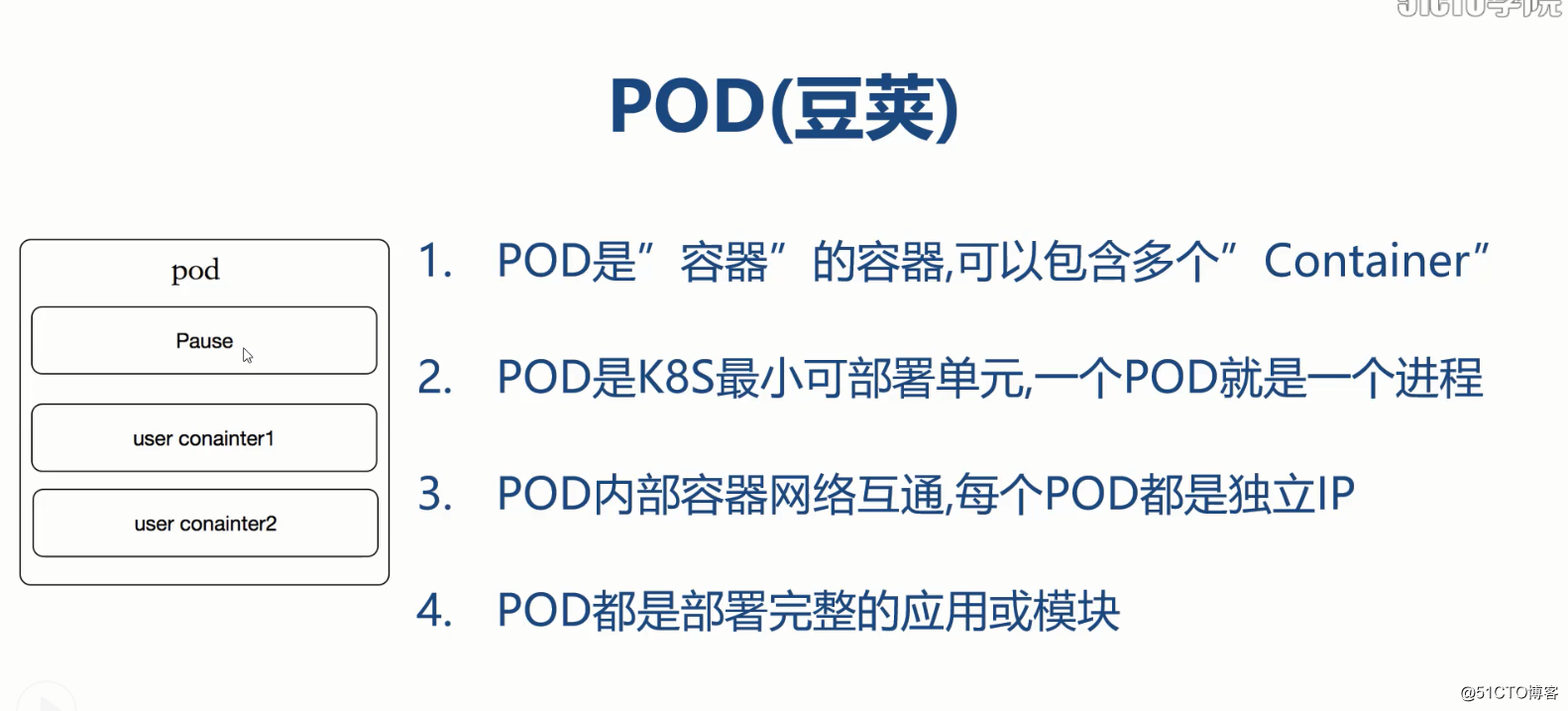

pod

A pod is the smallest unit of work in k8s. Each pod contains one or more containers. The containers in a pod are scheduled by the master as a whole to run on one node.

controller

K8s usually does not create pods directly but manages them through controllers. A controller defines the deployment characteristics of a pod, for example how many replicas there are and what kind of node they run on. To cover different business scenarios, k8s provides a variety of controllers, including deployment, replicaset, daemonset, statefulset, job, and others.

deployment

Deployment is the most commonly used controller. It manages multiple replicas of a pod and ensures that the pods run in the desired state.

replicaset

Replicaset manages multiple replicas of a pod. A replicaset is created automatically when you use a deployment, which means that the deployment manages its pod replicas through the replicaset. We usually do not need to use replicaset directly.

daemonset

Daemonset is used for scenarios where at most one replica of a pod runs on each node. As the name suggests, daemonset is usually used to run daemons.

statefulset

Statefulset ensures that each replica of the pod keeps the same name throughout its life cycle, which other controllers do not provide: with them, when a pod fails and has to be deleted and restarted, its name changes. The statefulset also ensures that the replicas are started, updated, or deleted in a fixed order.

job

Job is used for applications that exit when their run finishes, whereas pods in other controllers usually keep running for a long time.

service

A deployment can run multiple replicas, and each pod has its own IP. How does the outside world access these replicas?

The answer is the service.

A k8s service defines how the outside world accesses a specific set of pods. The service has its own IP and port, and it load-balances traffic across the pods.

In k8s, the two tasks of running containers (pods) and accessing containers are handled by the controller and the service, respectively.
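
To illustrate how a controller and a service fit together, here is a minimal sketch that writes a Deployment with three replicas and a Service that selects the same pods by label. The name `web-demo`, the `nginx:1.17` image, the port numbers, and the helper `write_demo_manifest` are assumptions chosen for illustration and do not come from the packages used later in this article.

```shell
# Write a small Deployment + Service manifest to the given path.
# All names, labels, and the image below are illustrative assumptions.
write_demo_manifest() {
  cat > "$1" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-demo
spec:
  replicas: 3                # the controller keeps three pod replicas running
  selector:
    matchLabels:
      app: web-demo
  template:
    metadata:
      labels:
        app: web-demo
    spec:
      containers:
      - name: web
        image: nginx:1.17
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web-demo
spec:
  selector:
    app: web-demo            # the service finds its pods through this label
  ports:
  - port: 80
    targetPort: 80
EOF
}
```

On a working cluster, something like `write_demo_manifest demo.yaml && kubectl apply -f demo.yaml` would create both objects. The service then gets one stable IP while the pod IPs behind it may change.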

namespace

A physical cluster can be logically divided into multiple virtual clusters, and each virtual cluster is a namespace. Resources in different namespaces are logically isolated from one another.

Kubernetes architecture:

Service grouping, small cluster, multi-cluster

Service grouping, large cluster, single cluster

Kubernetes components:

The Kubernetes Master hosts the control components, which schedule and manage the entire system (cluster). It includes the following components:

Kubernetes API Server

As the entry point to the Kubernetes system, it encapsulates the create, delete, update, and query operations on the core objects and exposes them to external clients and internal components through a RESTful API. The REST objects it maintains are persisted in etcd.

Kubernetes Scheduler

Selects a Node (that is, allocates a machine) for each newly created Pod and is responsible for cluster resource scheduling. The component is decoupled, so it can easily be replaced with another scheduler.

Controller Manager

Responsible for running the various controllers. Many controllers are provided to keep Kubernetes operating normally.

Replication Controller

Manage and maintain the Replication Controller, associate the Replication Controller and the Pod, and ensure that the number of copies defined by the Replication Controller is the same as the actual number of Pods.

Node Controller

Manage and maintain Nodes, regularly check the health status of each Node, and identify failed and healthy Nodes.

Namespace Controller

Manage and maintain Namespaces, and periodically clean up invalid Namespaces, including the API objects under the Namespace, such as Pod, Service, etc.

Service Controller

Manage and maintain Services, and provide load balancing and service proxying.

EndPoints Controller

Manage and maintain Endpoints, associate Service and Pod, create Endpoints as the backend of Service, and update Endpoints in real time when Pod changes.

Service Account Controller

Manage and maintain Service Account, create default Service Account for each Namespace, and create Service Account Secret for Service Account at the same time.

Persistent Volume Controller

Manage and maintain Persistent Volume and Persistent Volume Claim, allocate Persistent Volume for new Persistent Volume Claim to bind, and perform cleanup and recovery for the released Persistent Volume.

Daemon Set Controller

Manage and maintain the Daemon Set, responsible for creating Daemon Pods and ensuring the normal operation of Daemon Pods on the specified Node.

Deployment Controller

Manage and maintain Deployments, associate each Deployment with its ReplicaSet, and ensure that the specified number of pods is running. When the Deployment is updated, it controls the update of the ReplicaSet and Pods.

Job Controller

Manage and maintain Jobs, create one-time task Pods for Jobs, and ensure that the number of tasks specified by the Job is completed.

Pod Autoscaler Controller

Achieve automatic scaling of Pod, regularly obtain monitoring data, perform policy matching, and execute Pod's scaling action when conditions are met.

A Kubernetes Node is a worker node that runs and manages the application containers. It contains the following components:

Kubelet

Responsible for controlling containers. Kubelet receives Pod creation requests from the Kubernetes API Server, starts and stops containers, monitors their running status, and reports it to the Kubernetes API Server.

Kubernetes Proxy

Responsible for creating a proxy service for Pods. Kubernetes Proxy will obtain all Service information from the Kubernetes API Server and create a proxy service based on the Service information to implement the routing and forwarding of requests from Service to Pods, thereby implementing a Kubernetes-level virtual forwarding network.

Docker

The container runtime that each Node needs in order to run containers.

Environment configuration

| Hostname | IP address | Role | Configuration |
|---|---|---|---|
| master | 192.168.0.110 | Master node | 2 cores, 2 GB |
| node01 | 192.168.0.104 | Worker node | 2 cores, 2 GB |
| node02 | 192.168.0.106 | Worker node | 2 cores, 2 GB |

Note: each virtual machine in the test environment needs at least 2 CPU cores and 2 GB of memory.

The deployment process

First, download and upload the software packages

1. Baidu Netdisk download address

Link: https://pan.baidu.com/s/1Qzs8tcf4O-8xlTmnl2Qx5g

Extraction code: ah4y

Second, prepare the base server environment (same steps on all three machines)

1. Disable the firewall and SELinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

setenforce 0

systemctl stop firewalld

systemctl disable firewalld
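
The `sed` command above only rewrites the file when it currently says `SELINUX=enforcing`. If a machine is set to `permissive`, the line stays unchanged. As a minimal sketch, assuming you prefer to review the result before replacing the file, the hypothetical helper below prints a copy of the config with the `SELINUX=` line forced to `disabled`, whatever its current value.

```shell
# Print the SELinux config with the mode forced to "disabled".
# Only the SELINUX= line is rewritten; SELINUXTYPE= and comments are untouched.
# The helper name is an assumption for illustration.
selinux_disabled_config() {
  sed 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}
```

For example, `selinux_disabled_config /etc/selinux/config > /tmp/selinux.new` writes the proposed file to a scratch location. After checking it, copy it back over `/etc/selinux/config`.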

2. Server time synchronization

timedatectl set-timezone Asia/Shanghai

3. Modify the host name

hostnamectl set-hostname master && bash # on 192.168.0.110

hostnamectl set-hostname node01 && bash # on 192.168.0.104

hostnamectl set-hostname node02 && bash # on 192.168.0.106

4. Add the hosts file

vim /etc/hosts # add host name resolution at the end:

192.168.0.110 master

192.168.0.104 node01

192.168.0.106 node02

On the other two machines, either add the same entries or copy the file over:

scp /etc/hosts [email protected]:/etc/hosts

scp /etc/hosts [email protected]:/etc/hosts
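
If you script this step instead of editing the file by hand, running the script twice should not add duplicate entries. The following is a minimal sketch under that assumption. The helper name `add_host_entry` is hypothetical, and it takes the hosts file path as a parameter so that it can be tried on a copy first.

```shell
# Append "IP NAME" to a hosts file unless NAME is already mapped
# on a non-comment line. The helper name is illustrative.
add_host_entry() {
  # $1 = hosts file, $2 = IP address, $3 = host name
  if awk -v n="$3" '
      $1 !~ /^#/ {
        for (i = 2; i <= NF; i++) {
          if ($i ~ /^#/) break        # stop at an inline comment
          if ($i == n) found = 1
        }
      }
      END { exit !found }' "$1"; then
    return 0                          # already present, nothing to do
  fi
  printf '%s %s\n' "$2" "$3" >> "$1"
}
```

For example, `add_host_entry /etc/hosts 192.168.0.110 master` adds the master entry once, and repeated calls leave the file unchanged.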

5. Disable swap

swapoff -a # disable temporarily (until the next reboot)

sed -i '12s/^\//#\//g' /etc/fstab # disable permanently; assumes the swap entry is on line 12 of /etc/fstab
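
The hard-coded line 12 only works if the swap entry happens to be on that line of `/etc/fstab`. As a hedged alternative, the sketch below comments out every active line whose fields include `swap`, regardless of position. The helper name `comment_out_swap` is an assumption, and it prints the result instead of editing in place so that you can review it first.

```shell
# Print an fstab with all active swap entries commented out.
# Lines that are already comments are left alone.
# The helper name is illustrative.
comment_out_swap() {
  sed '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$1"
}
```

For example, `comment_out_swap /etc/fstab > /tmp/fstab.new` produces a candidate file. After checking it, copy it over `/etc/fstab`.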

6. Bridge settings

echo -e "net.bridge.bridge-nf-call-ip6tables = 1\nnet.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.d/k8s.conf

sysctl --system # apply the settings
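
Because the command above appends with `>>`, running it again adds the same two lines a second time. A minimal sketch of a repeatable variant is shown below. The helper name `write_bridge_sysctl` is an assumption, and it overwrites the target file, so it should only be pointed at a file dedicated to these settings.

```shell
# Write the two bridge settings, replacing any previous content of the file.
# The helper name is illustrative.
write_bridge_sysctl() {
  printf '%s\n' \
    'net.bridge.bridge-nf-call-ip6tables = 1' \
    'net.bridge.bridge-nf-call-iptables = 1' > "$1"
}
```

For example, `write_bridge_sysctl /etc/sysctl.d/k8s.conf && sysctl --system`. On some kernels the `net.bridge.*` keys only appear after the `br_netfilter` module is loaded, so `modprobe br_netfilter` may be needed first.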

Third, deploy Docker (same steps on all three machines)

1. Unzip and install docker

tar -zxvf docker-ce-18.09.tar.gz

cd docker && yum localinstall *.rpm -y # install with yum, which also resolves dependencies

2. Start docker

systemctl start docker

systemctl enable docker # start on boot

docker version

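3. Docker registry mirror (accelerator)

vim /etc/docker/daemon.json # add the following

{

"registry-mirrors": ["https://fskvstob.mirror.aliyuncs.com/"]

}

systemctl daemon-reload # reload the systemd configuration

systemctl restart docker # restart Docker so that the mirror setting takes effect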

Fourth, deploy the K8s cluster

Same steps on all three machines

1. Unzip and install k8s

cd ../

tar -zxvf kube114-rpm.tar.gz

cd kube114-rpm && yum localinstall *.rpm -y # install with yum

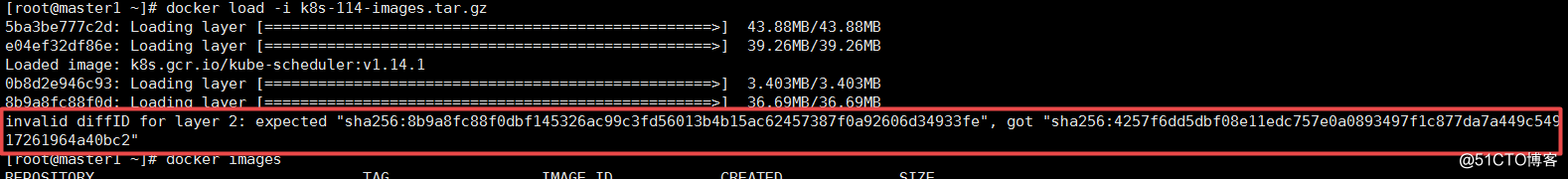

2. Import k8s image

cd ../

docker load -i k8s-114-images.tar.gz

docker load -i flannel-dashboard.tar.gz

Note: if an error occurs while importing the images (see the screenshot below):

Cause: the image archive was corrupted during download.

Solution: download the archive again or replace the image.

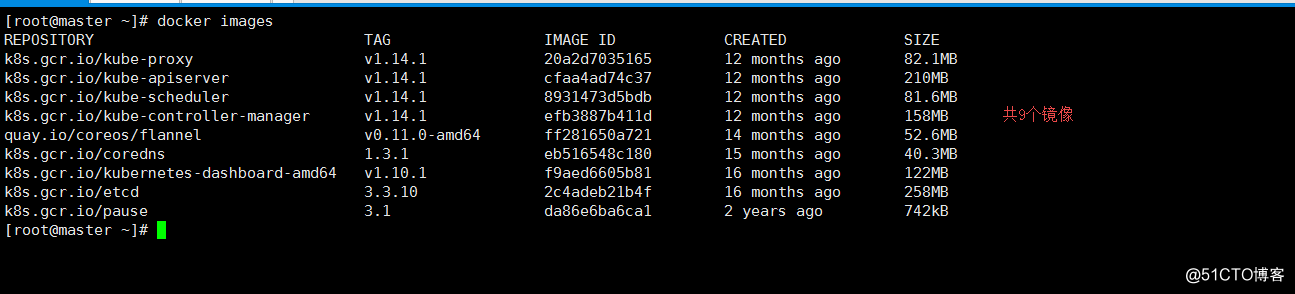

3. View k8s image

docker images # 9 images in total

Run the following steps on the master only

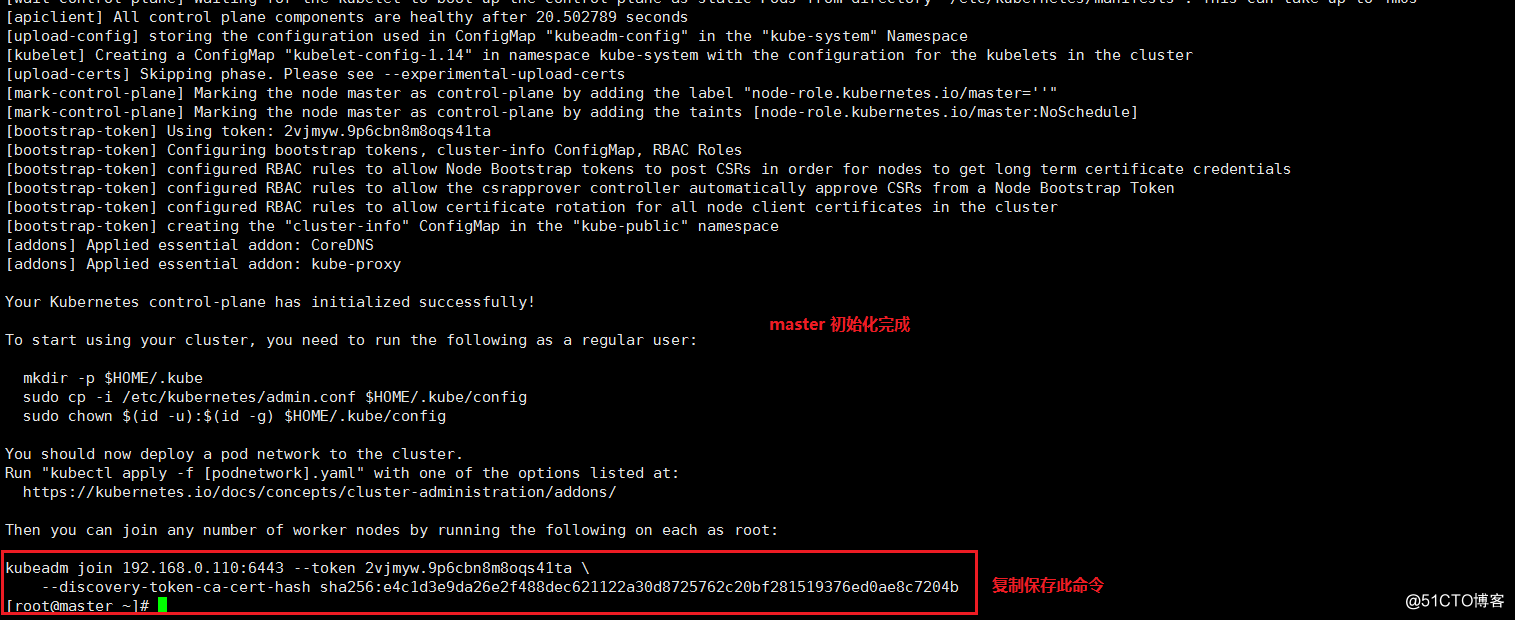

4. Initialize the master

kubeadm init --kubernetes-version=v1.14.1 --pod-network-cidr=10.244.0.0/16

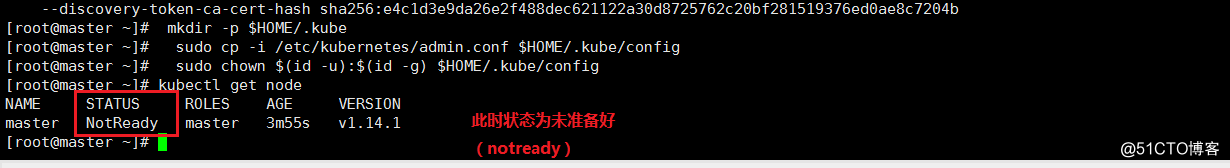

5. View Node

kubectl get node

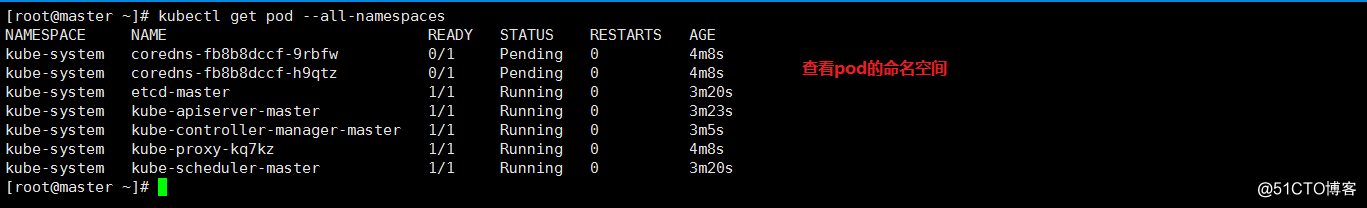

kubectl get pod --all-namespaces

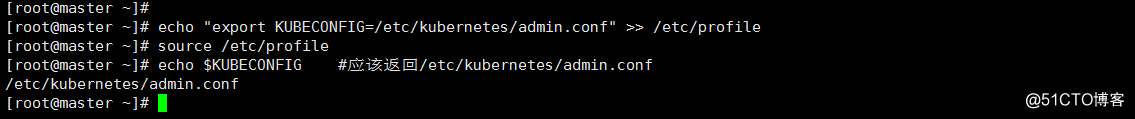

6. Configure the KUBECONFIG variable

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

source /etc/profile

echo $KUBECONFIG # should return /etc/kubernetes/admin.conf

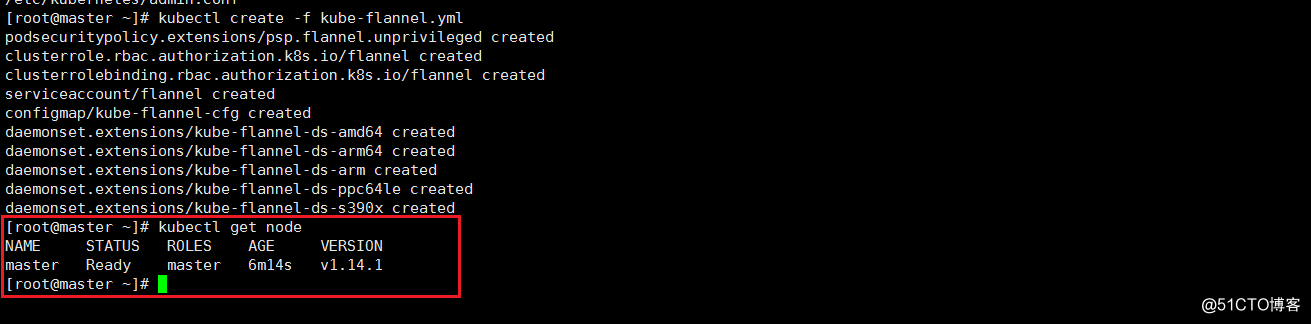
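
Exporting `KUBECONFIG` in `/etc/profile` affects every login shell on the machine. An alternative, sketched below, is to copy the admin config to `~/.kube/config`, which is the path `kubectl` reads by default when `KUBECONFIG` is not set. The helper name `install_kubeconfig` and its parameters are assumptions for illustration.

```shell
# Copy a kubeconfig into <home>/.kube/config and restrict its permissions.
# $1 = source kubeconfig, $2 = home directory of the target user.
# The helper name is illustrative.
install_kubeconfig() {
  mkdir -p "$2/.kube" || return 1
  cp "$1" "$2/.kube/config" || return 1
  chmod 600 "$2/.kube/config"
}
```

For example, `install_kubeconfig /etc/kubernetes/admin.conf "$HOME"` on the master. If the file is installed for a different user, its ownership also needs to be changed to that user.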

7. Deploy the flannel network

kubectl create -f kube-flannel.yml

kubectl get node

Run the following steps on the worker nodes

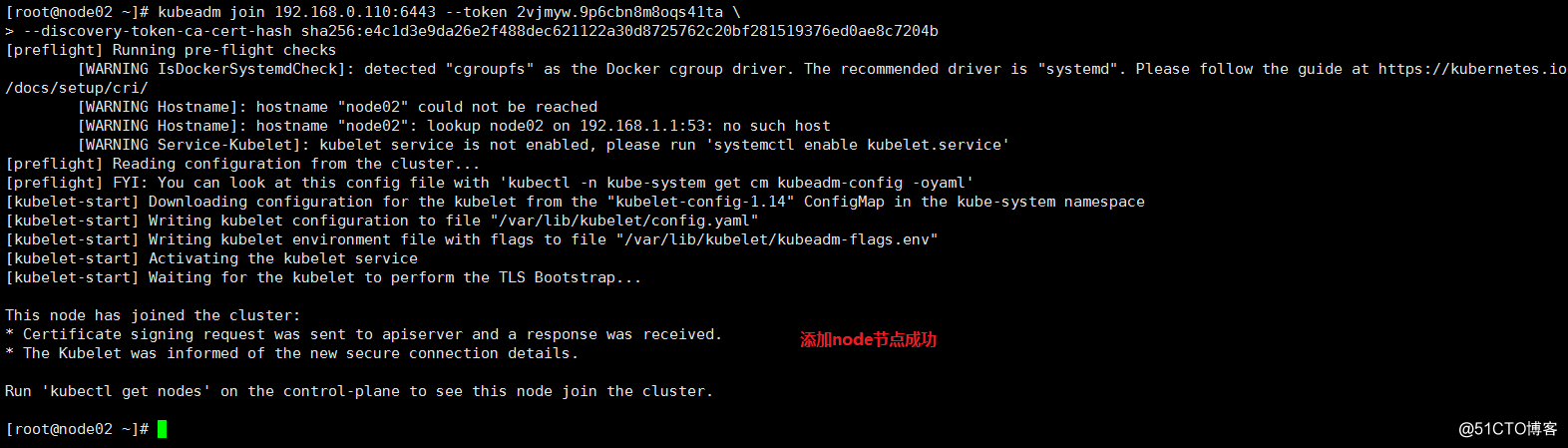

8. Join the worker nodes with kubeadm join

systemctl enable kubelet # start kubelet on boot

kubeadm join 192.168.0.110:6443 --token 2vjmyw.9p6cbn8m8oqs41ta \

--discovery-token-ca-cert-hash sha256:e4c1d3e9da26e2f488dec621122a30d8725762c20bf2815194b

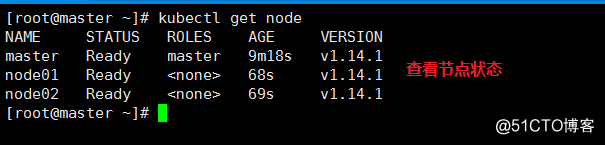
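
The token and hash in the join command are specific to each cluster and must come from your own `kubeadm init` output. A bootstrap token has the form of 6 characters, a dot, and 16 characters, and a SHA-256 hash is 64 hexadecimal digits, so a shorter hash is likely a copy-and-paste truncation. As a minimal sketch, the hypothetical helper below checks both formats before printing the command.

```shell
# Print a kubeadm join command after checking the token and hash formats.
# $1 = API server endpoint, $2 = bootstrap token, $3 = CA cert hash.
# The helper name is illustrative.
build_join_command() {
  if ! printf '%s\n' "$2" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "invalid token format" >&2
    return 1
  fi
  if ! printf '%s\n' "$3" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
    echo "invalid CA cert hash: expected sha256: plus 64 hex digits" >&2
    return 1
  fi
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' \
    "$1" "$2" "$3"
}
```

If the original output is no longer available or the token has expired, running `kubeadm token create --print-join-command` on the master prints a fresh, complete join command.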

9. Check the nodes on the master

kubectl get node

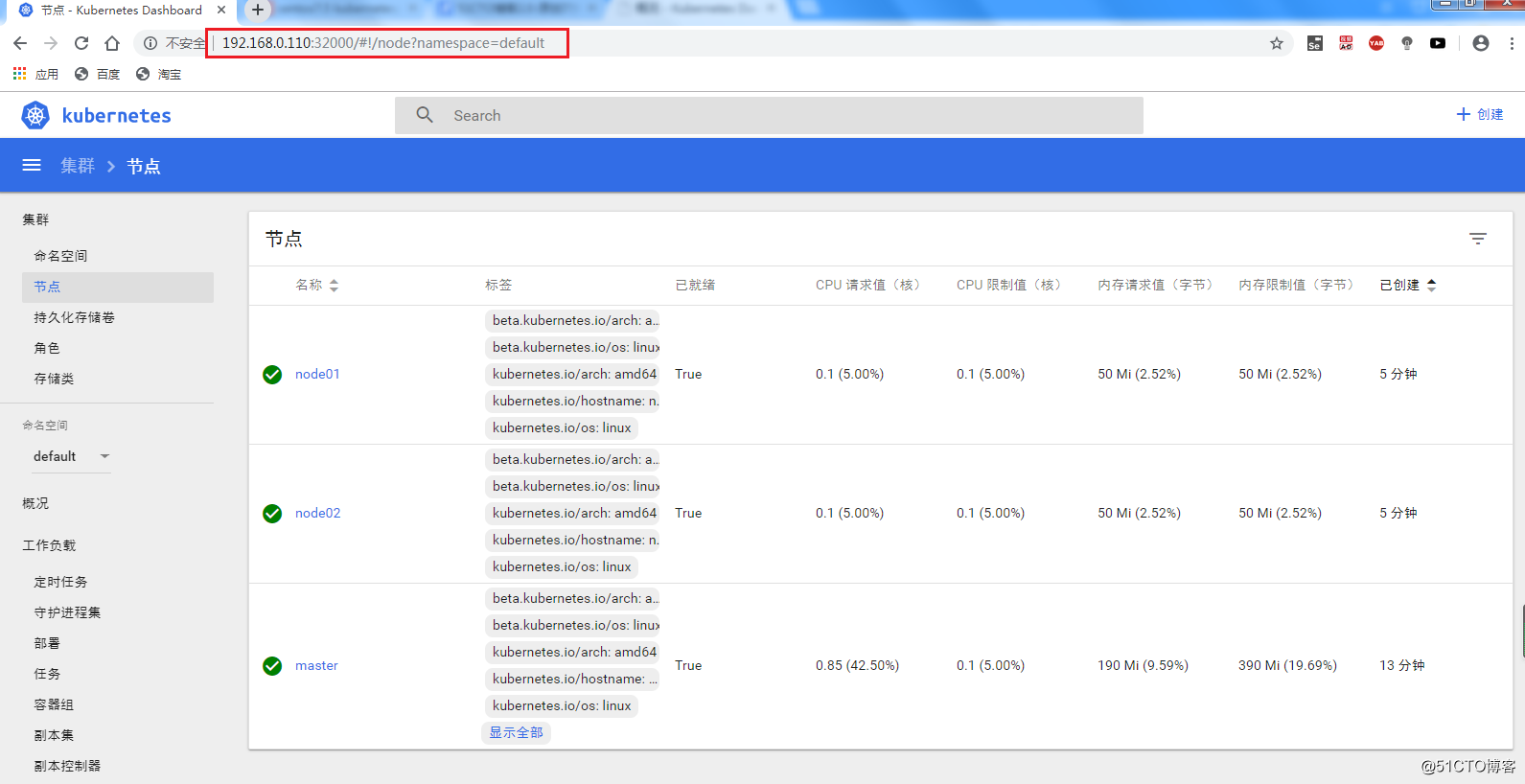

10. Deploy the k8s web UI (dashboard)

kubectl apply -f kubernetes-dashboard.yaml

kubectl apply -f admin-role.yaml

kubectl apply -f kubernetes-dashboard-admin.rbac.yaml

kubectl -n kube-system get svc

11. Web page verification

Reference materials:

1. Reference article: https://www.jianshu.com/p/0e1a3412528e

2. Lao Qi's k8s teaching videos

3. Official Kubernetes documentation in Chinese: https://kubernetes.io/zh/docs/home/