Overview

Data is stored so that it can be queried and analyzed. Data that is only stored and never queried is of little value.

This article introduces two MongoDB features:

- Aggregation query (Aggregation)

- Capped Collections

Preparation

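Prepare 10,000 orders:

```javascript
// Build 10,000 random orders and insert them into the "order" collection
var orders = [];
for (var i = 10000; i < 20000; i++) {
    orders.push({
        // order number: i followed by 3 random digits, e.g. "10000263"
        orderNo: i + Math.random().toString().substr(3, 3),
        // price between 0.00 and 100.00, rounded to 2 decimals
        price: Math.round(Math.random() * 10000) / 100,
        // quantity between 1 and 10
        qty: Math.floor(Math.random() * 10) + 1,
        // start of the current minute plus 0 to 9,999 seconds
        orderTime: new Date(new Date().setSeconds(Math.floor(Math.random() * 10000)))
    });
}
db.order.insertMany(orders);
```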

Aggregation queries

All of MongoDB's aggregation operations go through db.collection.aggregate(). You define an aggregation pipeline (a sequence of stages) to implement grouping, statistics and similar functions. Several commonly used stages are introduced below.

Group stage ($group)

Format:

```javascript
{
  $group: {
    _id: <expression>, // group-by expression
    <field1>: { <accumulator1> : <expression1> },
    ...
  }
}
```

_id is the group key. If you set _id to null or to a constant value, all input documents are grouped together into a single group.

Each statistics field has the form { <accumulator>: <expression> }.

For the available accumulators, see Accumulator Operators in the MongoDB Manual.
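Here is a minimal plain-JavaScript sketch that makes the accumulators concrete. It runs in Node.js without MongoDB and shows what a $group stage computes with $sum, $avg, $max, $min, $first and $last. The groupBy helper and the sample documents are hypothetical. The real server also handles missing fields, mixed types and BSON comparison order, which this sketch ignores. If the key function always returns null, you get the _id: null case, where everything lands in one group.

```javascript
// Hypothetical in-memory imitation of a $group stage; not MongoDB's implementation.
// keyFn plays the role of _id; every accumulator reads field `f` of each document.
function groupBy(docs, keyFn, f) {
  const groups = new Map(); // a Map keeps groups in first-seen order
  for (const doc of docs) {
    const key = keyFn(doc);
    if (!groups.has(key)) {
      groups.set(key, { _id: key, sum: 0, count: 0, max: -Infinity, min: Infinity, first: doc[f], last: doc[f] });
    }
    const g = groups.get(key);
    g.sum += doc[f];                 // $sum: "$f"
    g.count += 1;                    // $sum: 1
    g.max = Math.max(g.max, doc[f]); // $max: "$f"
    g.min = Math.min(g.min, doc[f]); // $min: "$f"
    g.last = doc[f];                 // $last: value from the last document seen
  }
  // $avg: sum divided by count
  return [...groups.values()].map(g => ({ ...g, avg: g.sum / g.count }));
}

const docs = [
  { hour: "13", price: 10 },
  { hour: "14", price: 30 },
  { hour: "13", price: 20 },
];
console.log(groupBy(docs, d => d.hour, "price"));
console.log(groupBy(docs, () => null, "price")); // _id: null -> a single group
```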

Suppose we need to compute the total order price, average price, maximum price, minimum price and number of orders for each hour of each day:

```javascript
db.order.aggregate([
  {
    $group: {
      // Group key: orderTime formatted by $dateToString, i.e. one group per hour
      _id: { $dateToString: { format: "%Y-%m-%d %H", date: "$orderTime" } },
      // Total price
      totalPrice: { $sum: "$price" },
      // Order number of the first document in the group
      // ($first/$last depend on the order in which documents reach $group)
      firstOrder: { $first: "$orderNo" },
      // Order number of the last document in the group
      lastOrder: { $last: "$orderNo" },
      // Average price
      averagePrice: { $avg: "$price" },
      // Maximum price
      maxPrice: { $max: "$price" },
      // Minimum price
      minPrice: { $min: "$price" },
      // Number of orders
      totalOrders: { $sum: 1 }
    }
  }
])
```

Result:

```json
{ "_id" : "2020-04-12 15", "totalPrice" : 172813.68, "firstOrder" : "10000263", "lastOrder" : "19999275", "averagePrice" : 49.20662870159453, "maxPrice" : 99.94, "minPrice" : 0.01, "totalOrders" : 3512 }
{ "_id" : "2020-04-12 13", "totalPrice" : 80943.98, "firstOrder" : "10004484", "lastOrder" : "19991554", "averagePrice" : 50.780414052697616, "maxPrice" : 99.81, "minPrice" : 0.08, "totalOrders" : 1594 }
{ "_id" : "2020-04-12 14", "totalPrice" : 181710.15, "firstOrder" : "10001745", "lastOrder" : "19998830", "averagePrice" : 49.76996713229252, "maxPrice" : 99.93, "minPrice" : 0.01, "totalOrders" : 3651 }
{ "_id" : "2020-04-12 16", "totalPrice" : 63356.12, "firstOrder" : "10002711", "lastOrder" : "19995793", "averagePrice" : 50.97032984714401, "maxPrice" : 99.95, "minPrice" : 0.01, "totalOrders" : 1243 }
```
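$dateToString formats dates in UTC unless its timezone option is given, so the hour buckets in the results above are UTC hours. The hypothetical helper hourKey below rebuilds the same "%Y-%m-%d %H" key in plain JavaScript. It can help when you want to check by hand which bucket an order falls into.

```javascript
// Hypothetical helper: builds the same "%Y-%m-%d %H" key that
// { $dateToString: { format: "%Y-%m-%d %H", date: "$orderTime" } } produces (UTC).
function hourKey(date) {
  const pad = n => String(n).padStart(2, "0");
  return `${date.getUTCFullYear()}-${pad(date.getUTCMonth() + 1)}-${pad(date.getUTCDate())} ${pad(date.getUTCHours())}`;
}

// Month is 0-based in Date.UTC, so 3 means April
console.log(hourKey(new Date(Date.UTC(2020, 3, 12, 15, 42, 7)))); // 2020-04-12 15
```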

Filter stage ($match)

Format:

```javascript
{ $match: { <query> } }
```

$match is simple: it filters documents using the same query syntax as find().

For example, to select the orders whose price is strictly between 10 and 15:

```javascript
db.order.aggregate([ { $match: { "price": { $gt: 10, $lt: 15 } } } ])
```
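The range query above keeps a document only if both conditions hold. Below is a minimal sketch of that logic in plain JavaScript. It supports only $gt, $gte, $lt and $lte on top-level fields. The names ops and matches are hypothetical; the real $match supports the full query language.

```javascript
// Hypothetical mini-matcher for conditions like { price: { $gt: 10, $lt: 15 } }.
// Every listed condition must hold (implicit AND), as in a MongoDB query.
const ops = {
  $gt: (a, b) => a > b,
  $gte: (a, b) => a >= b,
  $lt: (a, b) => a < b,
  $lte: (a, b) => a <= b,
};

function matches(doc, query) {
  return Object.entries(query).every(([field, conds]) =>
    Object.entries(conds).every(([op, value]) => ops[op](doc[field], value))
  );
}

const orders = [{ price: 9.99 }, { price: 10 }, { price: 12.5 }, { price: 15 }];
const result = orders.filter(o => matches(o, { price: { $gt: 10, $lt: 15 } }));
console.log(result); // [ { price: 12.5 } ]
```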

Sort stage ($sort)

Format:

```javascript
{ $sort: { <field1>: <sort order>, <field2>: <sort order> ... } }
```

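Specify the fields to sort by: 1 for ascending order, -1 for descending order. With several fields, documents are sorted by the first field, ties are broken by the next field, and so on.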
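A sort specification such as { qty: 1, price: -1 } can be read as a comparator: compare by the first field, then fall back to the next field on ties. The plain-JavaScript sketch below uses a hypothetical makeComparator helper. It only handles numbers and strings, not MongoDB's full BSON comparison order.

```javascript
// Hypothetical: turn a $sort-style spec into an Array.prototype.sort comparator.
function makeComparator(spec) {
  const keys = Object.entries(spec); // key order = sort priority
  return (a, b) => {
    for (const [field, dir] of keys) {
      if (a[field] < b[field]) return -dir; // dir 1: a first; dir -1: b first
      if (a[field] > b[field]) return dir;
    }
    return 0; // equal on every sort field
  };
}

const docs = [
  { orderNo: "A", qty: 2, price: 5 },
  { orderNo: "B", qty: 1, price: 7 },
  { orderNo: "C", qty: 2, price: 9 },
];
docs.sort(makeComparator({ qty: 1, price: -1 }));
console.log(docs.map(d => d.orderNo)); // [ 'B', 'C', 'A' ]
```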

Limit stage ($limit)

Format:

```javascript
{ $limit: <positive integer> }
```
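$limit passes only the first n documents it receives to the next stage. It is therefore usually combined with $sort: for example, [{ $sort: { price: -1 } }, { $limit: 3 }] returns the three most expensive orders. In plain JavaScript the same idea is a sort followed by slice. topN is a hypothetical helper, for illustration only.

```javascript
// Hypothetical equivalent of [{ $sort: { price: -1 } }, { $limit: n }]
function topN(docs, n) {
  return [...docs]                      // copy so the input array is not reordered
    .sort((a, b) => b.price - a.price)  // $sort: { price: -1 }
    .slice(0, n);                       // $limit: n
}

const prices = [12, 99, 45, 3, 67].map(price => ({ price }));
console.log(topN(prices, 3).map(d => d.price)); // [ 99, 67, 45 ]
```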

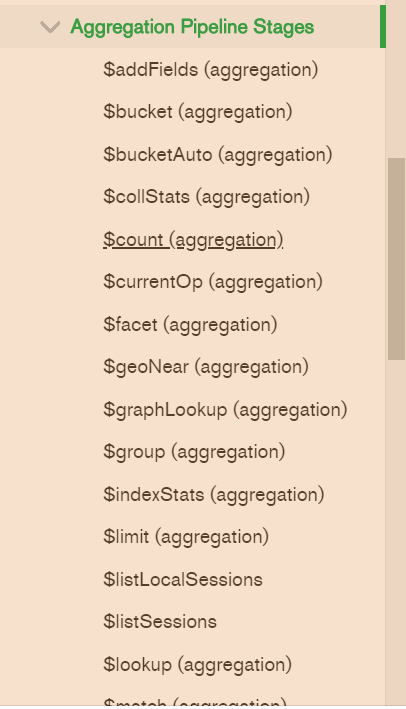

MongoDB has many more pipeline stages than can be listed here; see Aggregation Pipeline Stages — MongoDB Manual.

Combining aggregation pipeline stages

Having said all that, the most powerful feature of MongoDB aggregation is combining pipeline stages. We store data in order to query it, and MongoDB provides many pipeline stages, so combining them is very powerful.
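Conceptually, a pipeline is a chain of functions in which each stage receives the previous stage's output. The plain-JavaScript sketch below models stages as array-to-array functions; runPipeline, sortDesc and limit are hypothetical names. It also shows why stage order matters. Sorting before limiting returns the overall top two. Limiting first just sorts whichever two documents happened to come first.

```javascript
// Hypothetical model of an aggregation pipeline: each stage maps an array of documents to a new array.
const runPipeline = (docs, stages) => stages.reduce((acc, stage) => stage(acc), docs);

// Stage factories: sketches of { $sort: { field: -1 } } and { $limit: n }
const sortDesc = field => docs => [...docs].sort((a, b) => b[field] - a[field]);
const limit = n => docs => docs.slice(0, n);

const orders = [{ price: 5 }, { price: 40 }, { price: 25 }, { price: 30 }];

const sortThenLimit = runPipeline(orders, [sortDesc("price"), limit(2)]);
const limitThenSort = runPipeline(orders, [limit(2), sortDesc("price")]);

console.log(sortThenLimit.map(d => d.price)); // [ 40, 30 ]
console.log(limitThenSort.map(d => d.price)); // [ 40, 5 ]
```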

Pay attention to the order of the stages. MongoDB passes the result set of each stage to the next stage in the order you define, and the output is the result set of the last stage. If the stages are in the wrong order, you may get an unexpected result or even an error. In that case an error is actually better than a silently wrong result.

For example, suppose we need to:

- Select orders with an amount greater than 10 yuan and less than 50 yuan && a quantity less than or equal to 5, and

- Remove the 50 orders with the smallest amount && the 50 orders with the largest amount, and

- Count the orders and the total amount for each hour, and

- Output the results sorted by total amount in ascending order

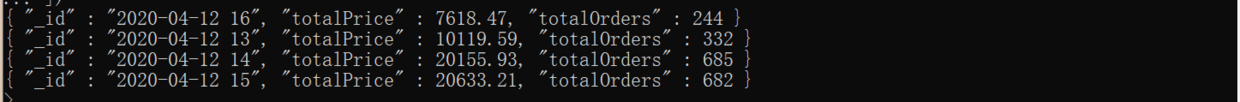

```javascript
db.order.aggregate([
  { $match: { "price": { $gt: 10, $lt: 50 }, "qty": { $lte: 5 } } },
  { $sort: { "price": -1 } },
  { $skip: 50 },
  { $sort: { "price": 1 } },
  { $skip: 50 },
  { $group: {
      _id: { $dateToString: { format: "%Y-%m-%d %H", date: "$orderTime" } },
      totalPrice: { $sum: "$price" },
      totalOrders: { $sum: 1 }
  } },
  { $sort: { "totalPrice": 1 } }
])
```

Solution

- Filter the records that meet the criteria ($match)

- Sort by amount in descending order ($sort: -1)

- Skip the 50 records with the largest amount ($skip: 50)

- Sort by amount in ascending order ($sort: 1)

- Skip the 50 records with the smallest amount ($skip: 50)

- Group by hour of each day and compute the statistics ($group)

- Sort the results by total amount in ascending order ($sort: 1)
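The same seven steps can be reproduced in memory to check the logic on a small scale. The sketch below is plain JavaScript, not MongoDB. It uses a hypothetical hourKey helper that mirrors the UTC "%Y-%m-%d %H" format. The number of documents skipped at each end is a parameter, so the sketch can be tried on a tiny sample; hourlyTotals(orders, 50) corresponds to the query above.

```javascript
// Hypothetical in-memory version of the pipeline above (plain JavaScript, no MongoDB).
const pad = n => String(n).padStart(2, "0");
const hourKey = d =>
  `${d.getUTCFullYear()}-${pad(d.getUTCMonth() + 1)}-${pad(d.getUTCDate())} ${pad(d.getUTCHours())}`;

function hourlyTotals(orders, skip) {
  let docs = orders.filter(o => o.price > 10 && o.price < 50 && o.qty <= 5); // $match
  docs = docs.sort((a, b) => b.price - a.price).slice(skip);                 // $sort -1, $skip
  docs = docs.sort((a, b) => a.price - b.price).slice(skip);                 // $sort 1, $skip
  const groups = new Map();                                                  // $group
  for (const o of docs) {
    const key = hourKey(o.orderTime);
    const g = groups.get(key) || { _id: key, totalPrice: 0, totalOrders: 0 };
    g.totalPrice += o.price;
    g.totalOrders += 1;
    groups.set(key, g);
  }
  return [...groups.values()].sort((a, b) => a.totalPrice - b.totalPrice);  // $sort totalPrice 1
}

const t = h => new Date(Date.UTC(2020, 3, 12, h)); // 2020-04-12, hour h (UTC)
const sample = [
  { price: 45, qty: 1, orderTime: t(13) }, // most expensive match -> skipped
  { price: 40, qty: 2, orderTime: t(13) },
  { price: 30, qty: 3, orderTime: t(14) },
  { price: 20, qty: 4, orderTime: t(14) },
  { price: 12, qty: 5, orderTime: t(13) }, // cheapest match -> skipped
  { price: 35, qty: 9, orderTime: t(14) }, // qty > 5 -> filtered out
  { price: 60, qty: 1, orderTime: t(13) }, // price >= 50 -> filtered out
];
// Hour 13: total 40 (1 order), then hour 14: total 50 (2 orders)
console.log(hourlyTotals(sample, 1));
```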

Capped collections

Overview

Capped collections are fixed-size collections that support high-throughput operations that insert and retrieve documents based on insertion order. Capped collections work in a way similar to circular buffers: once a collection fills its allocated space, it makes room for new documents by overwriting the oldest documents in the collection.

From this definition, capped collections have the following characteristics:

- Fixed size

- High throughput

- Retrieve documents according to insertion order

- Once the size limit is reached, the oldest documents are overwritten
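The circular-buffer behaviour can be sketched in a few lines of plain JavaScript. CappedLog is a hypothetical class that enforces only a maximum document count (like max). It is meant to show insertion-order retrieval and overwriting of the oldest document, not how the storage engine actually manages space.

```javascript
// Hypothetical count-limited capped "collection" backed by a fixed-size circular buffer.
class CappedLog {
  constructor(max) {
    this.buf = new Array(max);
    this.max = max;
    this.start = 0; // index of the oldest document
    this.count = 0;
  }

  insert(doc) {
    if (this.count < this.max) {
      this.buf[(this.start + this.count) % this.max] = doc;
      this.count++;
    } else {
      this.buf[this.start] = doc;               // overwrite the oldest document
      this.start = (this.start + 1) % this.max; // the next one becomes the oldest
    }
  }

  // Insertion order, oldest first; reversing it corresponds to $natural: -1
  find() {
    const out = [];
    for (let i = 0; i < this.count; i++) out.push(this.buf[(this.start + i) % this.max]);
    return out;
  }
}

// At most 10 documents, 200 inserts
const log = new CappedLog(10);
for (let i = 0; i < 200; i++) log.insert({ _id: i + 1 });
console.log(log.find().map(d => d._id)); // ids 191 to 200, oldest first
```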

Given these characteristics, capped collections are suitable for scenarios such as:

- Log systems that only need to keep the most recent entries

- Caching data (hot data)

Capped collection limitations

- The size of a capped collection cannot be changed after creation (starting with MongoDB 6.0, it can be resized with the collMod command)

- Documents cannot be deleted from a capped collection; to clear it you have to drop the collection and recreate it

- Capped collections cannot be sharded

- The $out aggregation stage cannot write its results to a capped collection

Using capped collections

1. Create a capped collection

```javascript
db.createCollection("log", { capped: true, size: 4096, max: 5000 })
```

| Field | Required | Description |
|---|---|---|
| capped | Yes | Whether to create a capped collection (true) |
| size | Yes | Maximum size of the capped collection, in bytes |
| max | No | Maximum number of documents in the collection |

size and max work as an OR: as soon as either limit is reached, the oldest documents are overwritten.
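The OR relationship between size and max can be expressed as a single eviction loop. The plain-JavaScript sketch below drops the oldest documents until the new one fits under both limits. insertCapped and the per-document byte counts passed in are hypothetical; real BSON sizes are not computed here.

```javascript
// Hypothetical sketch: docs is an array of { id, bytes } in insertion order.
// Oldest documents are removed while EITHER limit would be exceeded.
function insertCapped(docs, doc, { size, max = Infinity }) {
  const out = [...docs, doc];
  let used = out.reduce((sum, d) => sum + d.bytes, 0);
  while (out.length > max || used > size) {
    used -= out.shift().bytes; // evict the oldest document
  }
  return out;
}

let coll = [];
// size 256 bytes, no max: with 78-byte documents at most 3 fit (3 * 78 = 234 <= 256)
for (let i = 1; i <= 5; i++) coll = insertCapped(coll, { id: i, bytes: 78 }, { size: 256 });
console.log(coll.map(d => d.id)); // [ 3, 4, 5 ]

let small = [];
// size is ample here, but max: 2 is reached first
for (let i = 1; i <= 5; i++) small = insertCapped(small, { id: i, bytes: 78 }, { size: 4096, max: 2 });
console.log(small.map(d => d.id)); // [ 4, 5 ]
```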

2. Check whether a collection is capped

```javascript
db.collection.isCapped()
```

3. Convert a non-capped collection to a capped collection

```javascript
db.runCommand({ "convertToCapped": "mycoll", size: 100000 });
```

Testing capped collections

1. Exceeding the document-count limit

```javascript
// 0. Drop the "log" collection if it already exists (e.g. created in the previous step)
db.log.drop();
// 1. Create a capped collection: size 1 MB, at most 10 documents
db.createCollection("log", { capped: true, size: 1024 * 1024, max: 10 });
// 2. Insert 200 documents
for (var i = 0; i < 200; i++) {
    db.log.insertOne({
        "_id": i + 1,
        "userId": Math.floor(Math.random() * 1000),
        "content": "Login " + ("0000" + i).slice(-4),
        "createTime": new Date()
    });
}
```

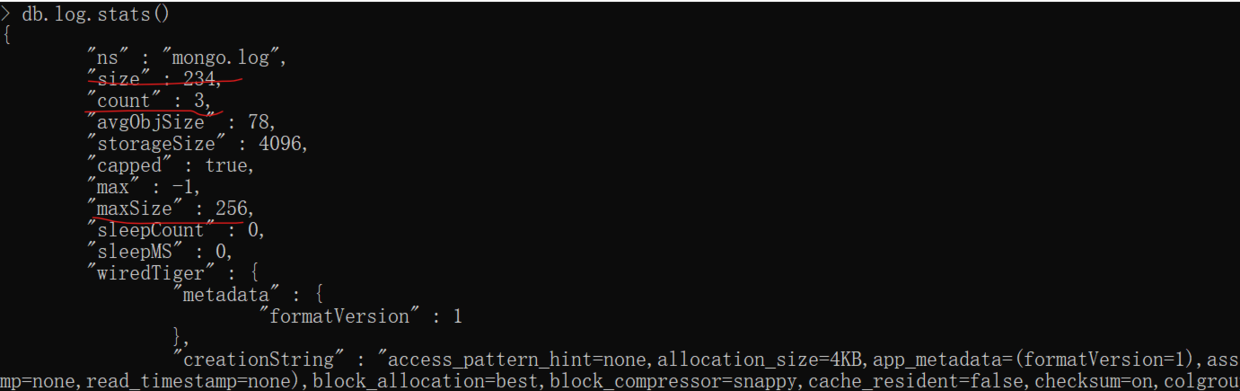

Then check the collection's storage statistics:

```javascript
db.log.stats()
```

The output shows that each document takes 78 bytes. Every document has the same fields, and their values always have the same length, so all documents are the same size.

2. Verifying the storage size limit

The manual states: "If the size field is less than or equal to 4096, then the collection will have a cap of 4096 bytes. Otherwise, MongoDB will raise the provided size to make it an integer multiple of 256."

In the test below, however, a size smaller than 4096 was not raised to 4096; it was rounded up to a multiple of 256 instead.

If the cap is rounded up to 256 bytes, then 256 / 78 ≈ 3.28, so 3 documents should fit.
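The two rounding rules described above, and the resulting capacity for 78-byte documents, can be written out as a small calculation. documentedCap follows the 4096 rule quoted from the manual, and observedCap follows what the test shows. Both function names are hypothetical; check db.log.stats() on your own server to see which rule it applies.

```javascript
// Rule as quoted from the manual: <= 4096 -> 4096, otherwise round up to a multiple of 256
const documentedCap = size => (size <= 4096 ? 4096 : Math.ceil(size / 256) * 256);
// Rule observed in this article's test: round up to a multiple of 256
const observedCap = size => Math.ceil(size / 256) * 256;

console.log(documentedCap(78), observedCap(78));     // 4096 256
console.log(documentedCap(5000), observedCap(5000)); // 5120 5120

// Capacity for 78-byte documents under the observed 256-byte cap
const docBytes = 78;
const fit = Math.floor(observedCap(78) / docBytes);
console.log(fit, fit * docBytes); // 3 234
```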

```javascript
// 1. Drop the existing capped collection first
db.log.drop();
// 2. Create a capped collection with size 78 (below 4096) to see which cap is actually applied
db.createCollection("log", { capped: true, size: 78 });
// 3. Insert 200 documents
for (var i = 0; i < 200; i++) {
    db.log.insertOne({
        "_id": i + 1,
        "userId": Math.floor(Math.random() * 1000),
        "content": "Login" + ("0000" + i).slice(-4),
        "createTime": new Date()
    });
}
```

View collection statistics

```javascript
db.log.stats()
```

The log collection now uses 234 bytes (78 * 3), the size of exactly 3 documents, and its maximum size is 256 bytes.

3. Querying a capped collection

If no sort is specified, documents are returned in insertion order. Use .sort({ $natural: -1 }) to return them in reverse insertion order (newest first):

```javascript
db.log.find({}).sort({ $natural: -1 })
```

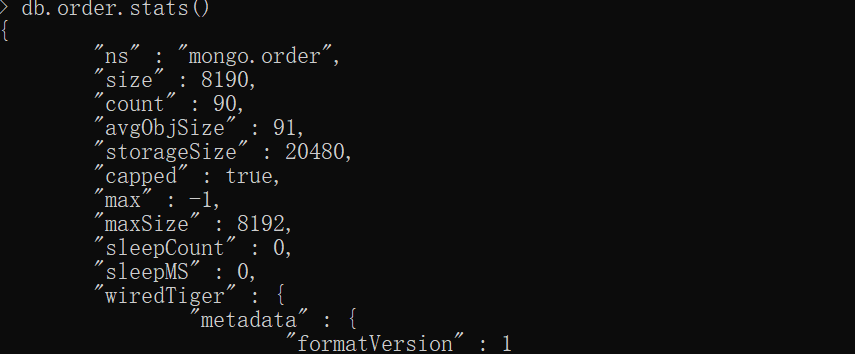

4. Converting a non-capped collection to a capped collection

Try converting the order collection:

```javascript
db.runCommand({ "convertToCapped": "order", size: 8096 });
```

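The order collection statistics show that only 90 documents are left: documents that do not fit within the size limit are discarded during the conversion.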

Please credit the source when reposting: https://www.cnblogs.com/WilsonPan/p/12692642.html

References

Aggregation — MongoDB Manual

Capped Collections — MongoDB Manual