Theoretical basis of greedy algorithms

Definition:

A greedy algorithm seeks an optimal solution by following some greedy criterion: starting from the initial state of the problem, it makes the choice that looks best at each step, and after a series of such greedy choices it arrives at a final solution to the whole problem.

A greedy algorithm does not consider the problem as a whole; each choice it makes is optimal only in a local sense. For problems with suitable properties, however, these local choices are enough to reach the globally optimal solution.

When a problem can be solved by several methods and a greedy algorithm is one of them, the greedy algorithm is usually among the best choices.

Rationale:

A greedy algorithm makes, at every step, the best choice available in the current state, in the hope that this leads to the best overall result.

A greedy algorithm proceeds in stages toward a solution that is optimal under some measure. It solves the problem through a sequence of choices, each of which is, in some sense, the best choice in the current state. The hope is that the optimal solution to the whole problem can be built from locally optimal choices.

This strategy is simple and yields the globally optimal solution for many problems, but it is not guaranteed to work in general: for some problems it does not produce the globally optimal solution.

Solving a problem with a greedy strategy requires answering two questions:

(1) Is the problem suitable for a greedy strategy?

(2) Which greedy criterion should be chosen in order to obtain the best (or at least a better) solution?
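As an illustration of why question (1) matters, the following is a minimal sketch on a problem that is not discussed in this article: making change with as few coins as possible. The function names and the coin set {1, 3, 4} are assumptions chosen for the example. With these coins, the "largest coin first" criterion uses three coins for the amount 6 (4+1+1), while two coins suffice (3+3).

```cpp
#include <algorithm>
#include <cassert>
#include <climits>
#include <functional>
#include <vector>

// Greedy criterion: always take the largest coin that still fits.
// Returns the number of coins used, or -1 if the amount is not reached.
// Assumes all coin values are positive.
int greedyCoinCount(std::vector<int> coins, int amount) {
    assert(amount >= 0);
    std::sort(coins.begin(), coins.end(), std::greater<int>());
    int count = 0;
    for (int c : coins) {
        count += amount / c;  // take as many of this coin as possible
        amount %= c;
    }
    return amount == 0 ? count : -1;
}

// Reference answer by dynamic programming:
// best[x] = minimum number of coins that sum to x.
int optimalCoinCount(const std::vector<int>& coins, int amount) {
    assert(amount >= 0);
    std::vector<int> best(amount + 1, INT_MAX);
    best[0] = 0;
    for (int x = 1; x <= amount; ++x)
        for (int c : coins)
            if (c <= x && best[x - c] != INT_MAX)
                best[x] = std::min(best[x], best[x - c] + 1);
    return best[amount] == INT_MAX ? -1 : best[amount];
}
```

For the coin set {1, 5, 10, 25} the two functions agree on the amount 30, so whether the greedy criterion is safe depends on the problem instance family, not on the method alone.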

Differences from dynamic programming:

The greedy choice property means that a globally optimal solution to the problem can be reached through a series of locally optimal choices, that is, greedy choices.

This is the first essential requirement for a greedy algorithm to be applicable, and it is the main difference between greedy algorithms and dynamic programming.

(1) In dynamic programming, the choice made at each step often depends on the solutions to related subproblems, so a choice can be made only after those subproblems have been solved.

(2) In a greedy algorithm, only the best choice in the current state is made, i.e., the locally optimal choice, and then the subproblem that results from this choice is solved.

- When an optimal solution to a problem contains optimal solutions to its subproblems, the problem is said to have optimal substructure. With a greedy strategy, every step produces a solution that is optimal for the current state. Optimal substructure is the key property that allows a problem to be solved by a greedy algorithm or by dynamic programming.

- Every greedy step directly affects the final result of the algorithm, whereas in dynamic programming this is not the case.

- A greedy algorithm commits to a choice for each subproblem and cannot roll back; dynamic programming makes the current choice based on the results of earlier choices and is able to roll back.

- Dynamic programming is mainly used for two- or three-dimensional problems, whereas greedy algorithms are generally used for one-dimensional problems.

Solving problems with a greedy algorithm:

When using a greedy algorithm to solve a problem, consider the following components:

(1) Candidate set A:

To construct a solution to the problem, there is a candidate set A of possible solution elements; the final solution is drawn entirely from A.

(2) Solution set S:

As greedy choices are made, the solution set S keeps growing until it forms a complete solution to the problem.

(3) Solution function solution:

Checks whether the solution set S constitutes a complete solution to the problem.

(4) Selection function select:

This is the greedy strategy itself and the key to the greedy method: it indicates which candidate is most promising for forming a solution. The selection function is usually related to the objective function.

(5) Feasibility function feasible:

Checks whether adding a candidate to the solution set is feasible, that is, whether the extended solution set still satisfies the constraints.
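The five components above can be sketched as one generic loop. This is only a sketch under assumptions: the template `greedy` and the helper `pickUnderLimit` are hypothetical names, and the example task (pick numbers, largest first, while the total stays within a limit) is made up to show how select, feasible, and solution plug in.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Generic greedy loop over a candidate set A, building a solution set S.
// select(A)      -> position in A of the most promising remaining candidate
// feasible(S, x) -> true if S extended by x still satisfies the constraints
// solution(S)    -> true if S already forms a complete solution
template <typename T, typename Select, typename Feasible, typename Solution>
std::vector<T> greedy(std::vector<T> A, Select select, Feasible feasible,
                      Solution solution) {
    std::vector<T> S;
    while (!A.empty() && !solution(S)) {
        std::size_t k = select(A);
        assert(k < A.size());
        T x = A[k];
        A.erase(A.begin() + static_cast<std::ptrdiff_t>(k));  // considered once, never revisited
        if (feasible(S, x)) S.push_back(x);
    }
    return S;
}

// Hypothetical task: pick numbers, largest first, keeping the total <= limit.
std::vector<int> pickUnderLimit(const std::vector<int>& numbers, int limit) {
    auto total = [](const std::vector<int>& S) {
        return std::accumulate(S.begin(), S.end(), 0);
    };
    return greedy(
        numbers,
        [](const std::vector<int>& A) {
            return static_cast<std::size_t>(
                std::max_element(A.begin(), A.end()) - A.begin());
        },
        [&](const std::vector<int>& S, const int& x) { return total(S) + x <= limit; },
        [&](const std::vector<int>& S) { return total(S) == limit; });
}
```

Calling `pickUnderLimit({5, 3, 8, 2}, 10)` selects 8, rejects 5 and 3 as infeasible, and then selects 2. Note that a rejected candidate is never reconsidered, which reflects the "no roll back" behavior of greedy algorithms.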

Typical examples

I. Activity scheduling

[Problem]

Let E = {1, 2, ..., n} be a set of n activities, each of which requires the same resource, such as a lecture hall, and only one activity can use this resource at any given time.

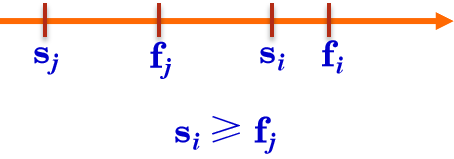

Each activity i has a start time si and an end time fi for using the resource, with si < fi. If activity i is selected, it occupies the resource during the half-open time interval [si, fi). If the intervals [si, fi) and [sj, fj) are disjoint, activities i and j are said to be compatible. In other words, activity i is compatible with activity j when si ≥ fj or sj ≥ fi.

The activity scheduling problem is to select a largest subset of mutually compatible activities from the given set.
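The compatibility condition can be written as a small predicate. This is a minimal sketch; the `Activity` struct and the function name `compatible` are assumptions made for the example.

```cpp
#include <cassert>

// An activity occupying the half-open interval [s, f).
struct Activity {
    int s;  // start time
    int f;  // end time, with s < f
};

// Activities i and j are compatible when their intervals are disjoint:
// s_i >= f_j or s_j >= f_i.
bool compatible(const Activity& i, const Activity& j) {
    assert(i.s < i.f && j.s < j.f);
    return i.s >= j.f || j.s >= i.f;
}
```

Because the intervals are half-open, an activity that starts exactly when another ends, such as [1, 3) and [3, 5), counts as compatible.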

[Analysis]

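Sort all activities by end time in ascending order, then scan from front to back, selecting each activity whose start time is no earlier than the end time of the most recently selected activity. Choosing the activity that ends earliest leaves the most time for the remaining activities.

[Code]

#include<bits/stdc++.h>

using namespace std;

struct node

{

int s; // start time

int f; // end time

int index; // activity number (1-based input order)

}a[100010];

int n;

// Order by end time, earliest first; break ties by start time.

bool cmp(node a,node b)

{

if(a.f!=b.f)

return a.f<b.f;

else

return a.s<b.s;

}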

vector<int>v;

int main()

{

cin>>n;

for(int i=1;i<=n;i++)

{

cin>>a[i].s>>a[i].f;

a[i].index=i;

}

sort(a+1,a+1+n,cmp);

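int tmp=1; // position, in sorted order, of the most recently selected activity

if(n>=1) v.push_back(a[1].index); // the first activity in sorted order is always selected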

for(int i=2;i<=n;i++)

{

if(a[i].s>=a[tmp].f)

{

v.push_back(a[i].index);

tmp=i;

}

}

for(size_t i=0;i<v.size();i++)

cout<<v[i]<<" ";

cout<<endl;

return 0;

}
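The sort order used before the scan matters. The following sketch compares two orders on a three-activity instance invented for this example; the struct and function names are assumptions as well. Scanning in end-time order finds two compatible activities, while scanning in start-time order commits to the long first activity and finds only one.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Activity {
    int s;      // start time
    int f;      // end time
    int index;  // activity number
};

// Scan activities in the given order and keep each one that starts
// no earlier than the end of the most recently kept activity.
std::vector<int> scanInOrder(const std::vector<Activity>& acts) {
    std::vector<int> chosen;
    int lastEnd = 0;
    for (const Activity& a : acts) {
        assert(a.s < a.f);
        if (chosen.empty() || a.s >= lastEnd) {
            chosen.push_back(a.index);
            lastEnd = a.f;
        }
    }
    return chosen;
}

// Greedy criterion: earliest end time first.
std::vector<int> selectByEndTime(std::vector<Activity> acts) {
    std::sort(acts.begin(), acts.end(),
              [](const Activity& a, const Activity& b) { return a.f < b.f; });
    return scanInOrder(acts);
}

// For comparison only: earliest start time first.
std::vector<int> selectByStartTime(std::vector<Activity> acts) {
    std::sort(acts.begin(), acts.end(),
              [](const Activity& a, const Activity& b) { return a.s < b.s; });
    return scanInOrder(acts);
}
```

With activities 1 = [1, 10), 2 = [2, 3), and 3 = [3, 4), `selectByEndTime` returns activities 2 and 3, whereas `selectByStartTime` returns only activity 1.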

II. The knapsack problem

[Problem]

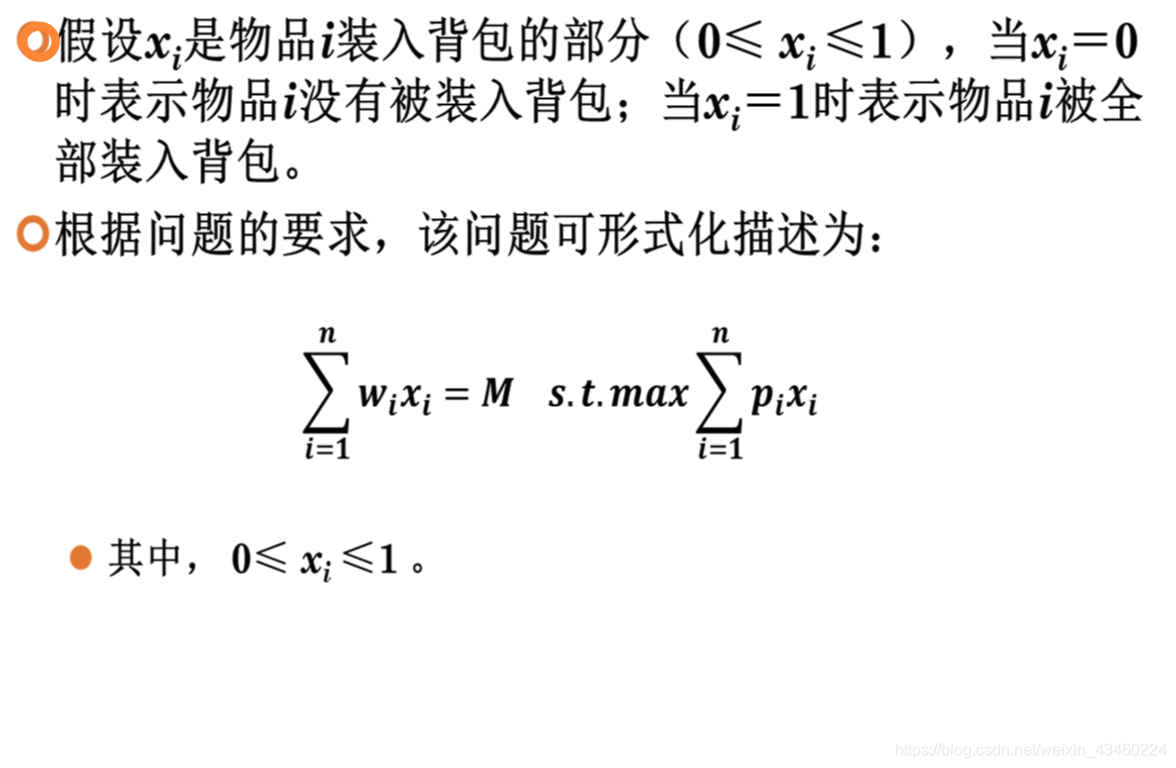

Given a knapsack with load capacity M and n items, where the i-th item (1≤i≤n) has a weight wi and a value, fill the knapsack so that the total value of the items inside it is as large as possible.

There are two types of knapsack problem, depending on whether items can be split. If items cannot be split, it is called the 0-1 knapsack problem (solved by dynamic programming); if items can be split, it is called the knapsack problem, also known as the fractional knapsack problem (solved by a greedy algorithm).

[Analysis]

There are three ways to select items:

(1) Treat it as a 0-1 knapsack problem and use dynamic programming, which obtains the optimal value 220;

(2) Treat it as a 0-1 knapsack problem and use a greedy algorithm, selecting items in descending order of value per unit weight, which obtains the value 160. Because items are indivisible, the remaining space is wasted.

(3) Treat it as a knapsack problem with divisible items and use a greedy algorithm, selecting items in descending order of value per unit weight, which obtains the optimal value 240. Because items can be split, the remaining space is filled with part of item 3, which gives a better result.
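The three approaches can be compared side by side in a short sketch. The text does not spell out the instance behind the values 220, 160, and 240, so the sketch assumes a commonly used one that reproduces them: capacity 50 and three items with weights 10, 20, 30 and values 60, 100, 120. The struct and function names are also assumptions, and weights are assumed to be positive integers.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Item {
    int weight;  // assumed positive
    int value;
};

// Higher value per unit weight first (cross-multiplied to avoid division).
bool byRatioDesc(const Item& a, const Item& b) {
    return static_cast<long long>(a.value) * b.weight >
           static_cast<long long>(b.value) * a.weight;
}

// (1) 0-1 knapsack by dynamic programming: best[c] = best value with capacity c.
int knapsack01DP(const std::vector<Item>& items, int capacity) {
    assert(capacity >= 0);
    std::vector<int> best(capacity + 1, 0);
    for (const Item& it : items)
        for (int c = capacity; c >= it.weight; --c)
            best[c] = std::max(best[c], best[c - it.weight] + it.value);
    return best[capacity];
}

// (2) 0-1 knapsack by greedy choice: take whole items in ratio order if they fit.
int knapsack01Greedy(std::vector<Item> items, int capacity) {
    std::sort(items.begin(), items.end(), byRatioDesc);
    int total = 0;
    for (const Item& it : items)
        if (it.weight <= capacity) {
            capacity -= it.weight;
            total += it.value;
        }
    return total;
}

// (3) Knapsack with divisible items: take in ratio order, splitting the last item.
double knapsackFractional(std::vector<Item> items, int capacity) {
    std::sort(items.begin(), items.end(), byRatioDesc);
    double total = 0.0;
    for (const Item& it : items) {
        if (capacity == 0) break;
        int take = std::min(it.weight, capacity);  // weight actually taken
        total += static_cast<double>(it.value) * take / it.weight;
        capacity -= take;
    }
    return total;
}
```

On the assumed instance, the greedy choice fills the knapsack with items 1 and 2 and then either wastes the remaining capacity of 20 (case 2) or fills it with two thirds of item 3 (case 3).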

[Code]

#include<bits/stdc++.h>

using namespace std;

struct node

{

double value,weight,tmp; // tmp: value per unit weight

int index;

} a[100010];

int n;

double sum; // total value placed in the knapsack

double ww; // knapsack capacity

int cmp(node a,node b)

{

return a.tmp>b.tmp;

}

vector<int>v;

int main()

{

cin>>n;

cin>>ww;

for(int i=1; i<=n; i++)

{

cin>>a[i].value>>a[i].weight;

a[i].tmp=a[i].value/a[i].weight;

a[i].index=i;

}

sort(a+1,a+1+n,cmp);

double x=0; // total weight taken so far

int cnt=1; // position, in sorted order, of the last item taken

for(int i=1; i<=n; i++)

{

if(x<ww)

{

x+=a[i].weight;

sum+=a[i].value;

v.push_back(a[i].index);

cnt=i;

}

}

// If the last item taken overshoots the capacity, remove the value of the excess part.

if(x>ww)

sum-=(x-ww)/a[cnt].weight*a[cnt].value;

cout<<sum<<endl;

return 0;

}