Hello, I'm Liu Chao.

When it comes to Java I/O, I'm sure you're no stranger to it. You may have used I/O operations to read and write files, or used Sockets to transmit information... These are the I/O-related operations we encounter most often in our systems.

As we all know, I/O is much slower than memory. In today's big data era in particular, I/O performance problems are especially prominent: in many application scenarios, I/O reads and writes have become the system's performance bottleneck, and we cannot ignore them.

Today, let's take a deep look at the performance problems that Java I/O exposes in high-concurrency and big-data business scenarios, and learn optimization methods starting from the source code.

What is I/O

I/O is the main channel through which a machine obtains and exchanges information, and streams are the main way I/O operations are carried out.

In a computer, a stream is a form into which information is converted. Streams are ordered. Relative to a given machine or application, information received from outside is usually called an input stream (InputStream). Information sent outward is called an output stream (OutputStream). Together they are called input/output streams (I/O streams).

When machines or programs exchange information or data, the information or data objects are always first converted into a stream and transmitted as a stream. On reaching the designated machine or program, the stream is converted back into the target data. A stream can therefore be regarded as a carrier of data, through which data is exchanged and transmitted.

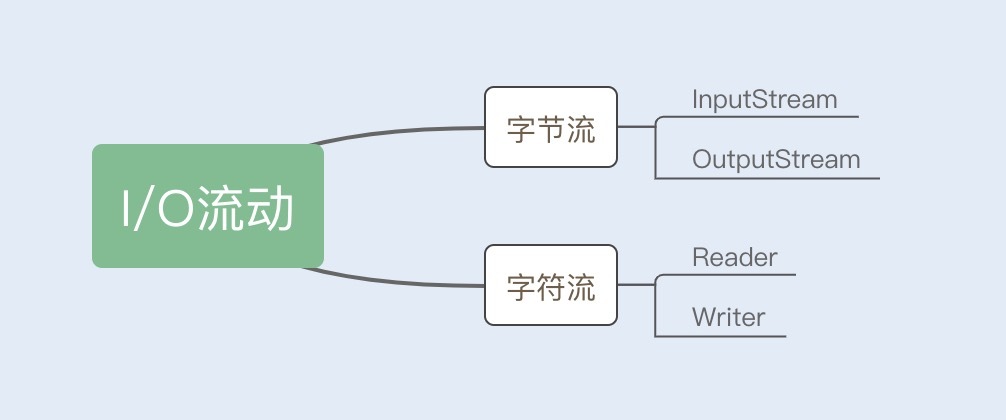

Java's I/O classes live in the java.io package. InputStream, OutputStream, Reader and Writer are the four basic classes of the package. InputStream/OutputStream handle byte streams, and Reader/Writer handle character streams. As shown below:

Looking back on my own experience, the first time I read the Java I/O stream documentation I had a question, which I'd like to share with you here: "Whether we are reading and writing files or sending and receiving over the network, the smallest unit of stored information is the byte. So why are I/O stream operations divided into byte streams and character streams?"

We know that characters must be transcoded into bytes. This process is quite time-consuming, and if we don't know the encoding type, garbled text easily results. The I/O stream API therefore provides interfaces that operate directly on characters, which makes everyday stream operations on characters convenient. Let's look at "byte streams" and "character streams" in turn.

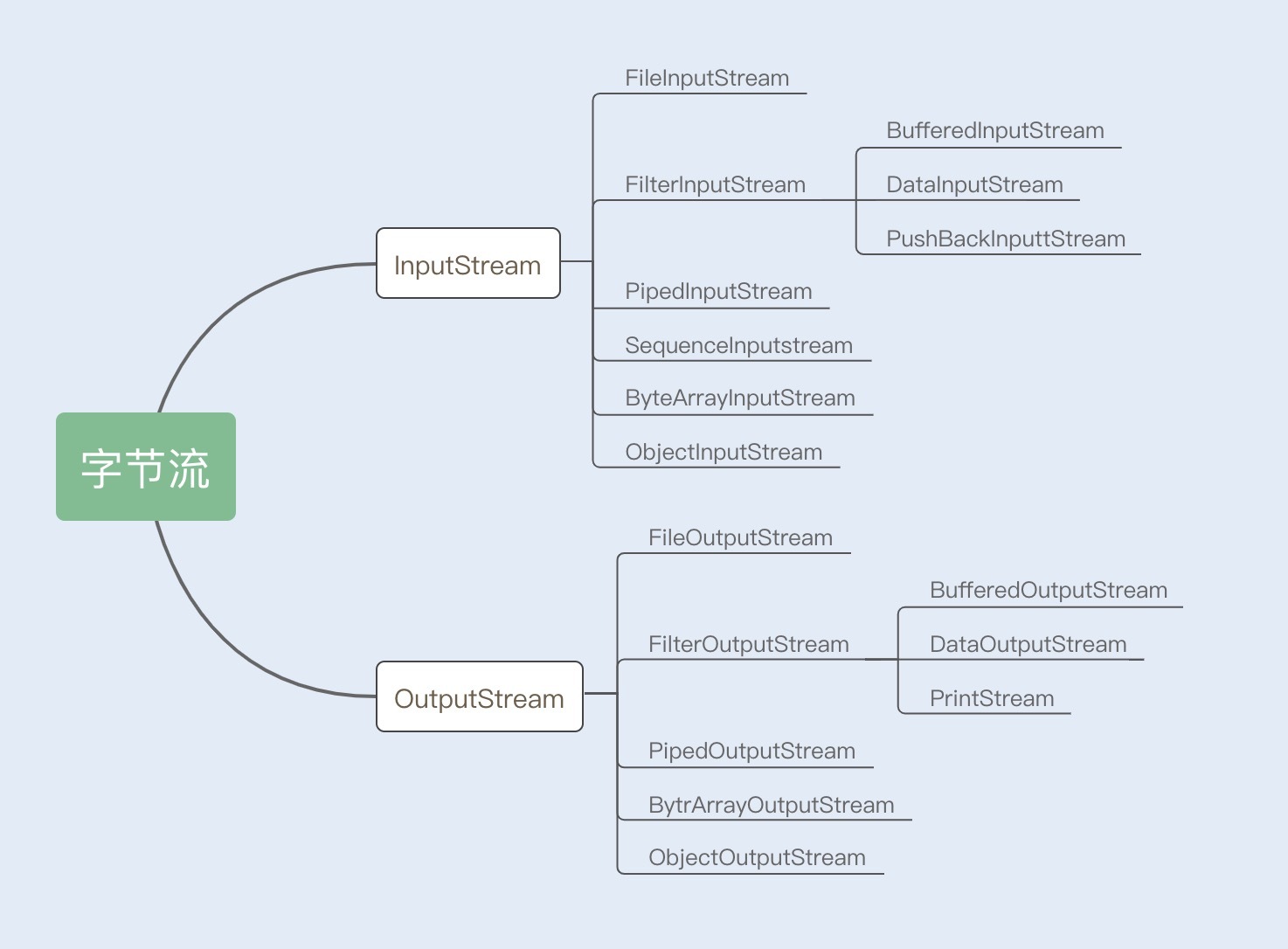
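The sketch below makes the transcoding point above concrete. The class name `CharsetDemo` and the sample text are illustrative choices, not from the original. It encodes a string as UTF-8 bytes, then decodes the same bytes with either UTF-8 or ISO-8859-1. A charset mismatch of this kind is exactly what produces garbled text.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class CharsetDemo {

    /** Encodes text as UTF-8 bytes, then decodes those bytes using the given charset. */
    static String roundTrip(String text, Charset decodeWith) {
        byte[] bytes = text.getBytes(StandardCharsets.UTF_8); // characters -> bytes
        return new String(bytes, decodeWith);                  // bytes -> characters
    }

    public static void main(String[] args) {
        // "cafe" with an accented e (U+00E9), which takes two bytes in UTF-8
        String text = "caf\u00e9";
        // Same charset for encoding and decoding: the text survives intact.
        System.out.println(roundTrip(text, StandardCharsets.UTF_8));
        // Mismatched charset: each UTF-8 byte is read as a separate Latin-1 character.
        System.out.println(roundTrip(text, StandardCharsets.ISO_8859_1));
    }
}
```

Decoding with ISO-8859-1 yields "cafÃ©" instead of "café". The two UTF-8 bytes of é are read as two separate Latin-1 characters.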

1. Byte streams

InputStream/OutputStream are the abstract classes for byte streams. They give rise to a number of subclasses, and each subclass handles a different kind of operation:

For reading and writing files, use FileInputStream/FileOutputStream.
For reading and writing byte arrays, use ByteArrayInputStream/ByteArrayOutputStream.
To add buffering to ordinary reads and writes, use BufferedInputStream/BufferedOutputStream.

Details are shown below:

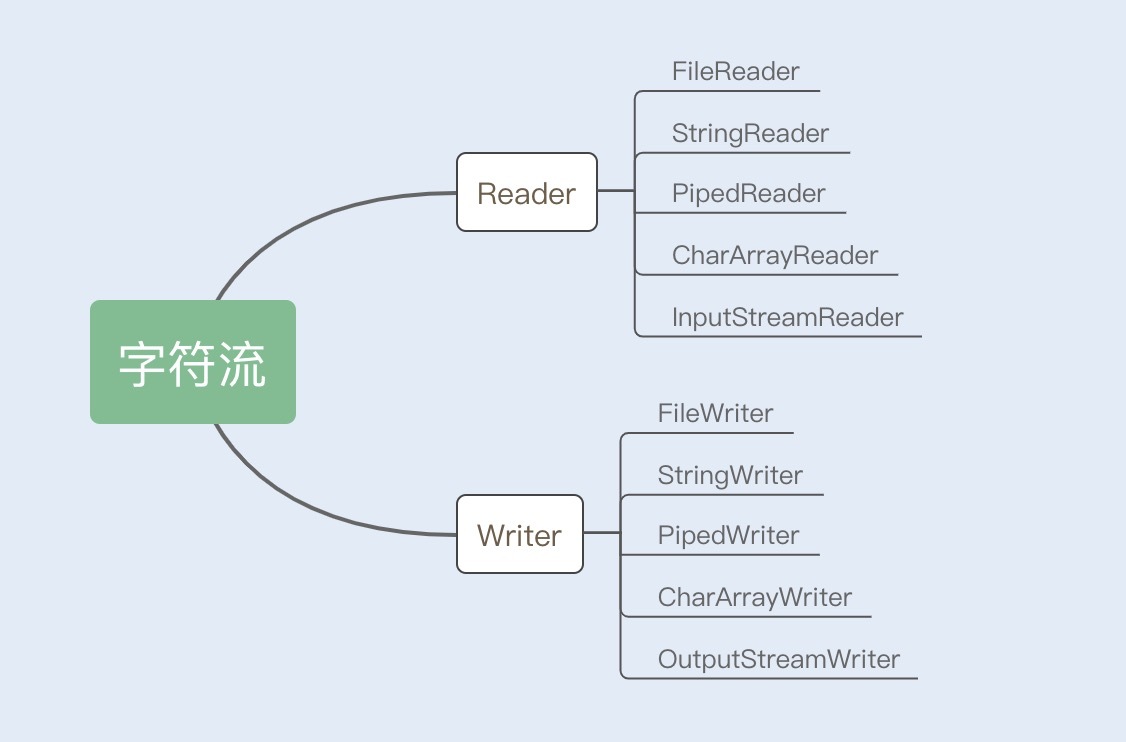
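The sketch below gives a rough illustration of how these byte-stream subclasses combine; the class name `ByteStreamCopy` is hypothetical. It wraps a ByteArrayInputStream and a ByteArrayOutputStream in BufferedInputStream/BufferedOutputStream decorators, then copies bytes through a fixed-size byte[] buffer. FileInputStream/FileOutputStream would slot into the same `copy` method unchanged.

```java
import java.io.BufferedInputStream;
import java.io.BufferedOutputStream;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

final class ByteStreamCopy {

    /** Copies all bytes from in to out using a fixed-size byte[] buffer; returns the count. */
    static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] buf = new byte[4096];
        long total = 0;
        int n;
        while ((n = in.read(buf)) != -1) { // -1 signals end of stream
            out.write(buf, 0, n);
            total += n;
        }
        return total;
    }

    /** Copies a byte array through buffered decorators around in-memory streams. */
    static byte[] copyInMemory(byte[] data) throws IOException {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        // Buffered* classes wrap another stream and add an in-memory buffer in front of it.
        try (InputStream in = new BufferedInputStream(new ByteArrayInputStream(data));
             OutputStream out = new BufferedOutputStream(sink)) {
            copy(in, out);
        } // closing out flushes its remaining buffered bytes into sink
        return sink.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        byte[] copied = copyInMemory("hello, byte streams".getBytes(StandardCharsets.UTF_8));
        System.out.println(new String(copied, StandardCharsets.UTF_8));
    }
}
```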

2. Character streams

Reader/Writer are the abstract classes for character streams. They likewise derive a number of subclasses, each handling a different kind of operation. Details are shown below:
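Here is a minimal sketch of the character-stream side. It assumes an in-memory source so it runs anywhere, and the class name `CharStreamDemo` is hypothetical. BufferedWriter writes lines into a StringWriter. InputStreamReader bridges raw bytes into characters using an explicit charset, and BufferedReader then splits the characters into lines.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.StringWriter;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class CharStreamDemo {

    /** Writes lines through a Writer chain and returns the resulting text. */
    static String writeLines(List<String> lines) throws IOException {
        StringWriter target = new StringWriter();
        try (BufferedWriter w = new BufferedWriter(target)) {
            for (String line : lines) {
                w.write(line);
                w.newLine(); // writes the platform line separator
            }
        }
        return target.toString();
    }

    /** Decodes raw UTF-8 bytes into characters and splits them into lines. */
    static List<String> readLines(byte[] utf8) throws IOException {
        List<String> result = new ArrayList<>();
        // InputStreamReader is the bridge from a byte stream to a character stream.
        try (BufferedReader r = new BufferedReader(
                new InputStreamReader(new ByteArrayInputStream(utf8), StandardCharsets.UTF_8))) {
            String line;
            while ((line = r.readLine()) != null) {
                result.add(line);
            }
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        String text = writeLines(Arrays.asList("first line", "second line"));
        System.out.println(readLines(text.getBytes(StandardCharsets.UTF_8)));
    }
}
```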

Performance problems of traditional I/O

As we know, I/O operations are divided into disk I/O and network I/O. Disk I/O reads data from a disk source into memory, then persists the output onto the physical disk. Network I/O reads information from the network into memory, then ultimately outputs information back to the network. Whether it is disk I/O or network I/O, traditional I/O suffers from serious performance problems.

1. Multiple memory copies

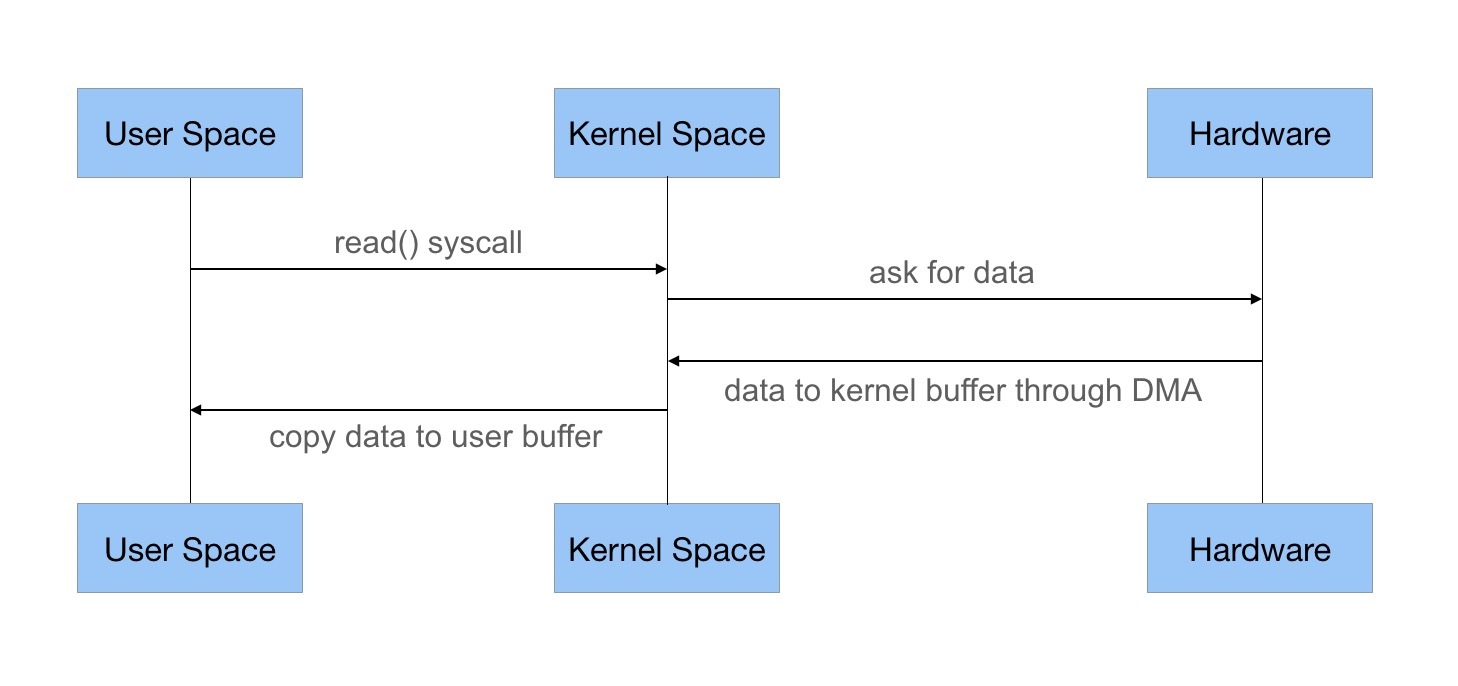

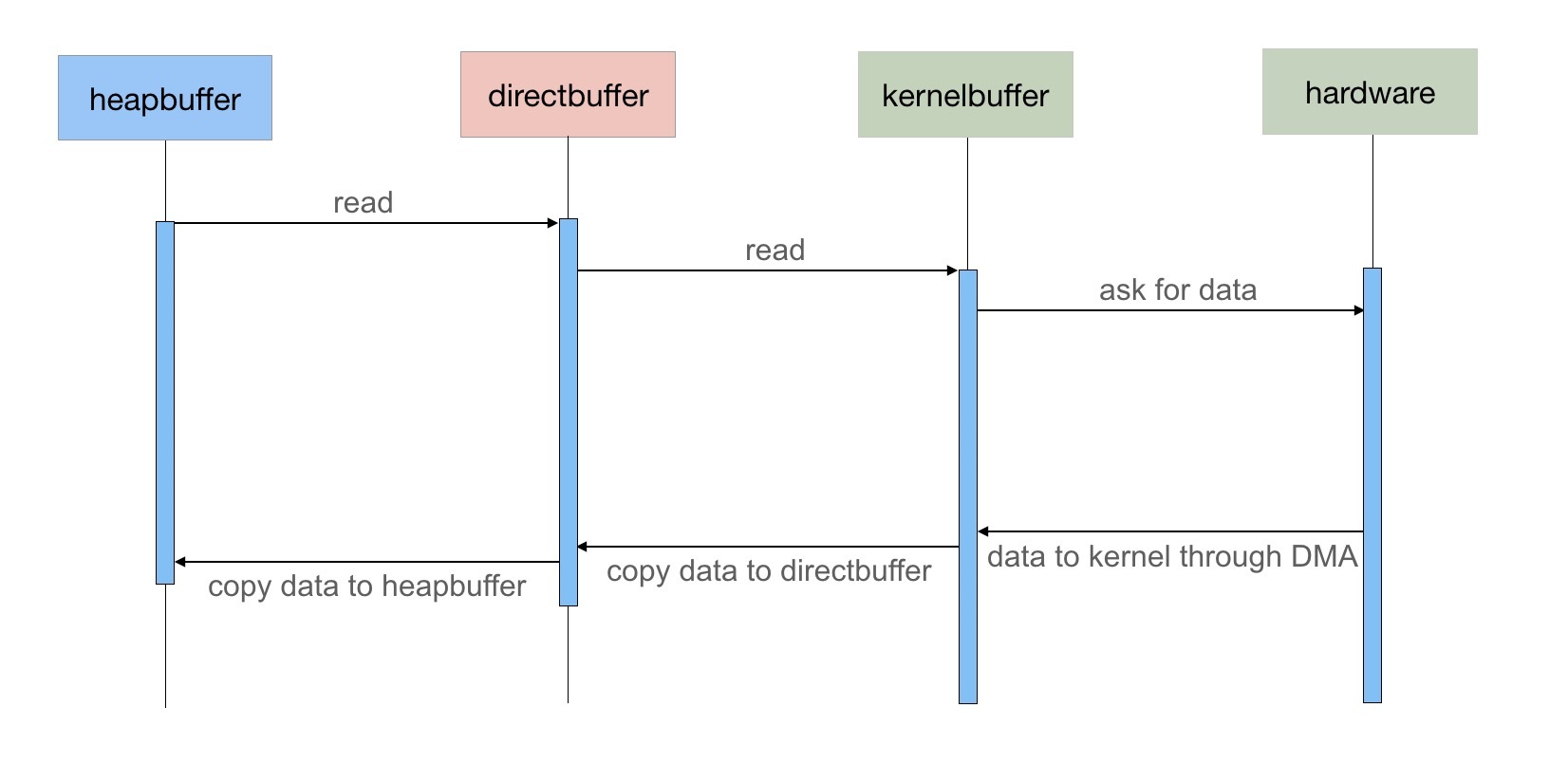

In traditional I/O, an InputStream reads data from the source into a buffer, and an OutputStream outputs data to an external device (disk or network). During an input operation, the operating system goes through the steps shown below:

The JVM issues a read() system call, which sends a read request to the kernel;

The kernel sends a read command to the hardware and waits for the data to be ready;

The kernel copies the data to be read into its own kernel buffer;

The operating system kernel copies the data into the user-space buffer, and the read system call then returns.

In this process, data is first copied from the external device into kernel space, then from kernel space into user space. That is two memory copies. These operations cause unnecessary data copying and context switching, which reduces I/O performance.
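The traditional read path can be sketched as follows; `ClassicFileRead` and its `Stats` holder are illustrative names. Each successful `read(buf)` call on a FileInputStream corresponds to the sequence above: the kernel fills its own buffer, and the data is then copied into the Java byte[]. The sketch simply counts how many such calls a file takes for a given buffer size.

```java
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;

final class ClassicFileRead {

    /** How many bytes were read and how many read(byte[]) calls it took. */
    static final class Stats {
        final long bytes;
        final int readCalls;

        Stats(long bytes, int readCalls) {
            this.bytes = bytes;
            this.readCalls = readCalls;
        }
    }

    /**
     * Reads a file with FileInputStream. Each successful read(buf) goes through the kernel:
     * the data typically lands in a kernel buffer first and is then copied into buf,
     * which lives on the Java heap (user space).
     */
    static Stats read(Path file, int bufferSize) throws IOException {
        byte[] buf = new byte[bufferSize];
        long total = 0;
        int calls = 0;
        try (InputStream in = new FileInputStream(file.toFile())) {
            int n;
            while ((n = in.read(buf)) != -1) { // -1 = end of file
                calls++;
                total += n;
            }
        }
        return new Stats(total, calls);
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("classic", ".bin");
        Files.write(file, new byte[100_000]);
        Stats s = read(file, 4096);
        System.out.println(s.bytes + " bytes in " + s.readCalls + " read calls");
        Files.delete(file);
    }
}
```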

2. Blocking

In traditional I/O, InputStream's read() behaves like a waiting loop. It keeps waiting for data to be read and does not return until the data is ready.

This means that if no data is ready, the read operation stays suspended and the user thread is blocked.
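A small experiment makes the blocking visible. This sketch (hypothetical class `BlockingReadDemo`) uses a PipedInputStream/PipedOutputStream pair in place of a real socket. A writer thread waits before sending one byte, and the reading thread stays parked inside `read()` the whole time.

```java
import java.io.IOException;
import java.io.PipedInputStream;
import java.io.PipedOutputStream;
import java.io.UncheckedIOException;

final class BlockingReadDemo {

    /**
     * Starts a writer thread that sends one byte after delayMillis, then calls read()
     * on the current thread. Returns roughly how long read() kept this thread blocked.
     */
    static long measureBlockedMillis(long delayMillis) throws IOException {
        PipedInputStream in = new PipedInputStream();
        PipedOutputStream out = new PipedOutputStream(in);
        Thread writer = new Thread(() -> {
            try {
                Thread.sleep(delayMillis); // the data is "not ready" for this long
                out.write(42);
                out.close();               // also wakes up the waiting reader
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        });
        long start = System.nanoTime();
        writer.start();
        int b = in.read(); // blocks: this thread can do nothing else until data arrives
        long blockedMillis = (System.nanoTime() - start) / 1_000_000;
        in.close();
        if (b != 42) {
            throw new IllegalStateException("unexpected byte: " + b);
        }
        return blockedMillis;
    }

    public static void main(String[] args) throws IOException {
        System.out.println("read() blocked for about " + measureBlockedMillis(500) + " ms");
    }
}
```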

With a small number of connection requests, this approach works fine and responds quickly. With a large number of connection requests, however, we need to create many listening threads. Any thread whose data is not ready is suspended and enters the blocked state. As large numbers of threads are repeatedly blocked and woken up, they keep competing for CPU resources. This causes a great deal of CPU context switching and increases the system's performance overhead.

How to optimize I/O operations

To address these two performance problems, programming languages have been optimized, and each operating system has further optimized its I/O as well. JDK 1.4 introduced the java.nio package (NIO, short for New I/O), which optimized the serious performance problems caused by memory copying and blocking. JDK 1.7 introduced NIO2, which provides asynchronous I/O implemented at the operating-system level. Let's look at how these optimizations are implemented.

1. Using buffers to optimize stream read/write operations

Traditional I/O provides a stream-based implementation, namely InputStream and OutputStream, which process data as a stream, byte by byte.

NIO differs from traditional I/O in that it is block-based: it processes data with the block as its basic unit. In NIO, the two most important components are the buffer (Buffer) and the channel (Channel). A Buffer is a contiguous block of memory that serves as NIO's staging area for reading and writing data. A Channel represents the source or destination of buffered data. It is used to read data into, or write data out of, a buffer, and it is the interface for accessing buffers.

The biggest difference between traditional I/O and NIO is that traditional I/O is stream-oriented, while NIO is Buffer-oriented. With a Buffer, a file can be read into memory in one go and then processed. The traditional approach processes data piece by piece while reading the file. Traditional I/O later added buffering as well, for example BufferedInputStream, but it still cannot match NIO. Replacing traditional I/O operations with NIO can improve overall system performance, with immediately noticeable results.
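The Buffer-oriented style looks like this in practice; `ChannelBufferRead` is a hypothetical name. In this sketch, a FileChannel fills a ByteBuffer block by block. `flip()` switches the buffer from filling to draining, and `clear()` prepares it for the next block. Summing the bytes stands in for whatever processing a real application would do.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class ChannelBufferRead {

    /** Sums all byte values (0..255) in a file, reading it block by block through a ByteBuffer. */
    static long sumBytes(Path file, int blockSize) throws IOException {
        ByteBuffer buffer = ByteBuffer.allocate(blockSize);
        long sum = 0;
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            while (channel.read(buffer) != -1) { // the channel fills the buffer
                buffer.flip();                    // limit = position, position = 0: ready to drain
                while (buffer.hasRemaining()) {
                    sum += buffer.get() & 0xFF;   // process one block
                }
                buffer.clear();                   // ready to be filled with the next block
            }
        }
        return sum;
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("nio", ".bin");
        Files.write(file, new byte[] {1, 2, 3, 4, 5});
        System.out.println(sumBytes(file, 2)); // 15
        Files.delete(file);
    }
}
```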

2. Using DirectBuffer to reduce memory copies

Besides optimizing with buffer blocks, NIO's Buffer also offers DirectBuffer, a class that accesses physical memory directly. An ordinary Buffer is allocated in JVM heap memory, while a DirectBuffer is allocated directly in physical memory (non-heap memory).
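The two kinds of buffer come from different factory methods. This minimal sketch (class name `BufferKinds` is illustrative) shows how to tell them apart at runtime. A heap buffer is backed by an accessible byte[], while a direct buffer is not.

```java
import java.nio.ByteBuffer;

final class BufferKinds {

    /** Describes where a buffer's memory lives. */
    static String describe(ByteBuffer buffer) {
        return "isDirect=" + buffer.isDirect() + ", hasArray=" + buffer.hasArray();
    }

    public static void main(String[] args) {
        ByteBuffer heap = ByteBuffer.allocate(1024);         // backed by a byte[] on the JVM heap
        ByteBuffer direct = ByteBuffer.allocateDirect(1024); // backed by native (non-heap) memory
        System.out.println("heap:   " + describe(heap));   // isDirect=false, hasArray=true
        System.out.println("direct: " + describe(direct)); // isDirect=true, hasArray=false
    }
}
```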

We know that data output to an external device must first be copied from user space into kernel space, then to the output device. Java adds one more copy within user space. The data is first copied from Java heap memory into temporary direct memory, and then from that temporary direct memory into kernel space. Both this direct memory and the heap memory belong to user space.

You may well wonder why Java has to copy data through temporary non-heap memory. One key reason is that the garbage collector can move objects around in the Java heap, while the operating system's native read/write call needs a buffer at a fixed address. Non-heap memory provides that stable address. Keeping large amounts of data in non-heap memory also reduces GC pressure compared with copying it all within the Java heap.

DirectBuffer removes the heap-to-direct-memory step by storing data directly in non-heap memory, which saves one data copy. The following is the source of the write method in the JDK class IOUtil.java (sun.nio.ch.IOUtil):

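```java
// sun.nio.ch.IOUtil.write(...) (excerpt)
if (src instanceof DirectBuffer)
    // Already off-heap: hand the native memory straight to the write call
    return writeFromNativeBuffer(fd, src, position, nd);

// Substitute a native buffer
int pos = src.position();
int lim = src.limit();
assert (pos <= lim);
int rem = (pos <= lim ? lim - pos : 0);
// Heap buffer: borrow a temporary direct buffer big enough for the remaining bytes
ByteBuffer bb = Util.getTemporaryDirectBuffer(rem);
try {
    bb.put(src);  // the extra heap -> direct memory copy
    bb.flip();
    // ...............
```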

To expand on this: a DirectBuffer requests physical memory outside the JVM heap, so it is costly to create and destroy. The memory a DirectBuffer requests is not reclaimed directly by the JVM's garbage collector. Instead, when the DirectBuffer wrapper object is collected, the memory block is released through Java's Reference mechanism.
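Because direct buffers are expensive to create and destroy, a common pattern is to allocate one up front and reuse it. The sketch below assumes fixed-size records written to a file; the class `ReusedDirectBufferWriter` and the record layout are hypothetical. Passing a direct buffer to `FileChannel.write` lets the JDK take the `instanceof DirectBuffer` branch shown in the IOUtil excerpt above, skipping the temporary copy.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class ReusedDirectBufferWriter {

    /**
     * Writes `records` fixed-size records to a file through ONE direct buffer that is
     * allocated once and reused, instead of allocating a new buffer for every write.
     */
    static long writeRecords(Path file, int records, int recordSize) throws IOException {
        ByteBuffer buffer = ByteBuffer.allocateDirect(recordSize); // allocated once
        long written = 0;
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.WRITE, StandardOpenOption.TRUNCATE_EXISTING)) {
            for (int i = 0; i < records; i++) {
                buffer.clear();                // reuse: reset position and limit
                while (buffer.hasRemaining()) {
                    buffer.put((byte) i);      // fill the record with a marker byte
                }
                buffer.flip();
                while (buffer.hasRemaining()) {
                    written += ch.write(buffer); // direct buffer: no temporary copy needed
                }
            }
        }
        return written;
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("records", ".bin");
        System.out.println(writeRecords(file, 1000, 4096) + " bytes written");
        Files.delete(file);
    }
}
```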

DirectBuffer only optimizes the copy within user space, whereas earlier we talked about optimizing the copy between user space and kernel space. Can Java's NIO also reduce copies between user space and kernel space?

The answer is yes. DirectBuffer memory is allocated through the unsafe.allocateMemory(size) method, which calls a native method of the Unsafe class to allocate memory. NIO also has another Buffer class, MappedByteBuffer. Unlike DirectBuffer, MappedByteBuffer memory-maps a file by calling the native mmap function. The map() call maps the file on disk directly into user space, so the data is copied only once. This eliminates the copy from kernel space to user space that the conventional read() method requires.
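Here is a minimal sketch of reading a file through a MappedByteBuffer; the class name `MappedRead` is hypothetical. `FileChannel.map` returns the mapped region. The bytes are then accessed through the mapping rather than copied out by repeated read() calls.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class MappedRead {

    /** Maps a whole file read-only into memory and decodes it as UTF-8 text. */
    static String readMapped(Path file) throws IOException {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.READ)) {
            // A single mapping is limited to Integer.MAX_VALUE bytes; larger files need several map() calls.
            MappedByteBuffer mapped = ch.map(FileChannel.MapMode.READ_ONLY, 0, ch.size());
            // The mapping stays valid after the channel is closed.
            return StandardCharsets.UTF_8.decode(mapped).toString();
        }
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("mmap", ".txt");
        Files.write(file, "hello, mmap".getBytes(StandardCharsets.UTF_8));
        System.out.println(readMapped(file));
        file.toFile().deleteOnExit(); // some platforms refuse to delete a file that is still mapped
    }
}
```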

3. Avoiding blocking to optimize I/O operations

Many people call NIO Non-block I/O, that is, non-blocking I/O, because the name better reflects its characteristics. Why is that?

Even with buffer blocks, traditional I/O still has the blocking problem. A thread pool has a limited number of threads. Once a large number of concurrent requests arrive, requests beyond the maximum thread count can only wait until an idle thread in the pool can be reused. When reading from a Socket's input stream, the read blocks until one of the following three things happens:

Data becomes available to read;

The connection is released;

A null pointer or I/O exception occurs.
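To see why this design runs out of threads, here is a sketch of the classic thread-per-connection echo server built on blocking sockets. It assumes a fixed-size pool, and `BlockingEchoServer` and its methods are illustrative names. Each worker thread spends its life blocked in `readLine()`. Once all `maxThreads` workers are busy, new connections simply wait in the executor's queue.

```java
import java.io.BufferedReader;
import java.io.Closeable;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Thread-per-connection echo server on blocking java.io sockets (illustrative sketch). */
final class BlockingEchoServer implements Closeable {
    private final ServerSocket server;
    private final ExecutorService workers;

    BlockingEchoServer(int maxThreads) throws IOException {
        server = new ServerSocket(0, 50, InetAddress.getLoopbackAddress()); // port 0 = any free port
        workers = Executors.newFixedThreadPool(maxThreads, r -> {
            Thread t = new Thread(r);
            t.setDaemon(true);
            return t;
        });
        Thread acceptor = new Thread(this::acceptLoop, "acceptor");
        acceptor.setDaemon(true);
        acceptor.start();
    }

    int port() {
        return server.getLocalPort();
    }

    private void acceptLoop() {
        try {
            while (true) {
                Socket client = server.accept();       // blocks until a client connects
                workers.execute(() -> handle(client)); // queued if every worker is busy
            }
        } catch (IOException e) {
            // server socket closed: stop accepting
        }
    }

    private static void handle(Socket client) {
        try (Socket s = client;
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(s.getInputStream(), StandardCharsets.UTF_8));
             Writer out = new OutputStreamWriter(s.getOutputStream(), StandardCharsets.UTF_8)) {
            String line;
            // readLine() blocks this worker until data arrives, the peer closes, or an I/O error occurs
            while ((line = in.readLine()) != null) {
                out.write(line + "\n");
                out.flush();
            }
        } catch (IOException e) {
            // connection reset or similar: drop this client
        }
    }

    @Override
    public void close() throws IOException {
        server.close();
        workers.shutdownNow();
    }

    public static void main(String[] args) throws IOException {
        try (BlockingEchoServer srv = new BlockingEchoServer(4);
             Socket c = new Socket(InetAddress.getLoopbackAddress(), srv.port())) {
            Writer w = new OutputStreamWriter(c.getOutputStream(), StandardCharsets.UTF_8);
            w.write("ping\n");
            w.flush();
            BufferedReader r = new BufferedReader(
                    new InputStreamReader(c.getInputStream(), StandardCharsets.UTF_8));
            System.out.println("echo: " + r.readLine());
        }
    }
}
```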

Blocking is the biggest drawback of traditional I/O. After NIO was released, its two basic components, Channel and Selector (the multiplexer), made non-blocking I/O possible. Let's look at how these two components optimize I/O.

Channel

Earlier we discussed how, in traditional I/O, data being read or written is copied back and forth between user space and kernel space. The data in kernel space is read from or written to disk through OS-level I/O interfaces.

Originally, when an application called the operating system's I/O interface, the CPU handled the work. The biggest problem with this approach was that "a large number of I/O requests consumes a lot of CPU". The operating system then introduced DMA (direct memory access), which takes full responsibility for transfers between kernel space and disk. However, this still requires requesting permission from the CPU, and DMA needs the data bus to perform the copy. With too many DMA transfers, bus contention occurs.

Channels emerged to solve these problems: a channel has its own processor and completes I/O operations between kernel space and disk. In NIO, we read and write data through a Channel. Because a Channel is bidirectional, reads and writes can proceed at the same time.
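Here is a minimal sketch of the bidirectional property. It assumes a plain file as the data source, and the class name `BidirectionalChannel` is hypothetical. A single FileChannel opened with both READ and WRITE first writes data and then reads the same data back. Traditional I/O would need a separate InputStream and OutputStream for this.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class BidirectionalChannel {

    /** Writes text into a file and reads it back through the SAME FileChannel. */
    static String writeThenRead(Path file, String text) throws IOException {
        try (FileChannel ch = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.READ, StandardOpenOption.WRITE,
                StandardOpenOption.TRUNCATE_EXISTING)) {
            ByteBuffer out = ByteBuffer.wrap(text.getBytes(StandardCharsets.UTF_8));
            while (out.hasRemaining()) {
                ch.write(out);              // write direction
            }
            ch.position(0);                 // rewind the channel's file position
            ByteBuffer in = ByteBuffer.allocate((int) ch.size());
            while (in.hasRemaining() && ch.read(in) != -1) {
                // read direction: keep reading until the buffer is full
            }
            in.flip();
            return StandardCharsets.UTF_8.decode(in).toString();
        }
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("channel", ".txt");
        System.out.println(writeThenRead(file, "written and read via one channel"));
        Files.delete(file);
    }
}
```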

Multiplexer (Selector)

The Selector is the foundation of Java NIO programming. It checks whether the state of one or more NIO Channels is readable or writable.

A Selector is event-driven. We register events such as accept and read on a Selector, and the Selector continuously polls the Channels registered with it. If a monitored event occurs on a Channel, that Channel is ready, and the I/O operation is then performed.

With a Selector, one thread can monitor events on multiple Channels by polling. When registering a Channel, we can set it to non-blocking. Then, when a Channel has no I/O to perform, the thread does not sit waiting; it keeps polling all the Channels, which avoids blocking.
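The polling model described above can be sketched as a single-threaded echo server. `SelectorEchoServer` is a hypothetical name, and error handling is deliberately minimal. One thread calls `select()`, which waits for events on every registered channel. It then handles whichever channels are ready: accepting new connections, and echoing data back on readable ones.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ClosedSelectorException;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

/** Single-threaded, Selector-based echo server (illustrative sketch). */
final class SelectorEchoServer implements Runnable {
    private final Selector selector;
    private final ServerSocketChannel server;

    SelectorEchoServer() throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0)); // any free port
        server.configureBlocking(false); // a channel must be non-blocking to be registered
        server.register(selector, SelectionKey.OP_ACCEPT);
    }

    int port() throws IOException {
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }

    /** Runs the event loop on a daemon thread and returns the server. */
    static SelectorEchoServer startInBackground() throws IOException {
        SelectorEchoServer s = new SelectorEchoServer();
        Thread t = new Thread(s, "selector-loop");
        t.setDaemon(true);
        t.start();
        return s;
    }

    @Override
    public void run() {
        try {
            while (selector.isOpen()) {
                selector.select(); // one thread waits for events on ALL registered channels
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove(); // selected keys are not cleared automatically
                    if (key.isAcceptable()) {
                        SocketChannel client = server.accept();
                        if (client == null) continue;
                        client.configureBlocking(false);
                        client.register(selector, SelectionKey.OP_READ, ByteBuffer.allocate(1024));
                    } else if (key.isReadable()) {
                        SocketChannel client = (SocketChannel) key.channel();
                        ByteBuffer buf = (ByteBuffer) key.attachment();
                        if (client.read(buf) == -1) { // peer closed the connection
                            client.close();           // closing also cancels the key
                            continue;
                        }
                        buf.flip();
                        client.write(buf); // unsent bytes stay in buf for the next round
                        buf.compact();
                    }
                }
            }
        } catch (IOException | ClosedSelectorException e) {
            // selector closed or I/O failure: stop the event loop
        }
    }

    void stop() throws IOException {
        selector.close(); // wakes up select(); the loop then exits
        server.close();
    }

    public static void main(String[] args) throws IOException {
        SelectorEchoServer srv = startInBackground();
        try (SocketChannel c = SocketChannel.open(
                new InetSocketAddress(InetAddress.getLoopbackAddress(), srv.port()))) {
            c.write(ByteBuffer.wrap("ping".getBytes(StandardCharsets.UTF_8)));
            ByteBuffer reply = ByteBuffer.allocate(4);
            while (reply.hasRemaining() && c.read(reply) != -1) {
                // keep reading until the 4-byte echo has arrived
            }
            System.out.println("echo: " + new String(reply.array(), StandardCharsets.UTF_8));
        } finally {
            srv.stop();
        }
    }
}
```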

Today, the OS I/O multiplexing mechanism used on Linux is epoll, and the JDK's Selector is implemented on top of it there. The traditional select mechanism limits the number of connection handles to 1024 by default, while epoll has no such maximum. In theory, then, a single Selector can poll thousands of clients.

Let me use a real-life scenario as an example. After reading it, you'll better understand the roles that Channel and Selector play in non-blocking I/O.

We can compare monitoring multiple I/O connection requests to the entrance of a railway station. In the past, ticket checking only let passengers on the next departing train enter the station early, and there was only one ticket inspector. Passengers on other trains who wanted to enter had to queue at the entrance. This corresponds to early I/O operations without a thread pool.

Later, the station was upgraded with a few more ticket gates, so passengers on different trains could enter through their corresponding gates. This corresponds to creating multiple listening threads that listen for I/O requests from clients at the same time.

Finally, the station was upgraded again to handle more passengers. Each train carries more people and the timetable is arranged sensibly, so passengers no longer crowd together in queues. They can all enter through one large ticket gate, which checks tickets for several trains at the same time. The large gate corresponds to the Selector, the trains to Channels, and the passengers to I/O streams.

Summary

Traditional Java I/O was originally implemented with two stream-based operations, InputStream and OutputStream, which work in units of bytes. In high-concurrency and big-data scenarios they easily cause blocking, so their performance is very poor. In addition, output data is copied from user space into kernel space and then to the output device, which adds system overhead.

Traditional I/O later used buffers to mitigate the "blocking" performance problem, with the buffer block as the smallest unit. However, overall performance is still unsatisfactory.

NIO was then released. It operates with the buffer block as its unit and, on top of Buffer, adds two new components, "Channel" and "Selector (multiplexer)", to achieve non-blocking I/O. NIO is suited to scenarios with large numbers of I/O requests, and these three components together improve overall I/O performance.

Questions

In JDK 1.7, Java released NIO2, an upgrade to NIO, which is AIO. AIO implements truly asynchronous I/O: it hands I/O operations directly to the operating system for asynchronous processing. It is also an optimization of I/O operations, so why do many communication frameworks and containers still use NIO?
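For readers who want to experiment with this question, here is a minimal AIO sketch using AsynchronousFileChannel from NIO2. The class name `AioRead` is hypothetical, and the file is assumed small enough to fit in one buffer. `read()` returns a Future immediately, and the calling thread only waits when it calls `get()`.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousFileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;

final class AioRead {

    /** Reads a whole (small) file with AsynchronousFileChannel and decodes it as UTF-8. */
    static String readAsync(Path file)
            throws IOException, InterruptedException, ExecutionException {
        try (AsynchronousFileChannel ch =
                     AsynchronousFileChannel.open(file, StandardOpenOption.READ)) {
            ByteBuffer buf = ByteBuffer.allocate((int) ch.size()); // assumes the file fits in memory
            long position = 0;
            while (buf.hasRemaining()) {
                Future<Integer> pending = ch.read(buf, position); // returns immediately
                // ... the calling thread could do other work here ...
                int n = pending.get(); // wait only when the result is actually needed
                if (n < 0) {
                    break; // end of file
                }
                position += n;
            }
            buf.flip();
            return StandardCharsets.UTF_8.decode(buf).toString();
        }
    }

    public static void main(String[] args) throws Exception {
        Path file = Files.createTempFile("aio", ".txt");
        Files.write(file, "hello, AIO".getBytes(StandardCharsets.UTF_8));
        System.out.println(readAsync(file));
        Files.delete(file);
    }
}
```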

We look forward to seeing your thoughts in the comments section. You are also welcome to share today's content with your friends and invite them to study together.