spark what type of data https://spark.apache.org/docs/latest/sql-reference.html

Spark Data Types

Data Types

Spark SQL and DataFrames support the following data types:

- Numeric types

ByteType: Represents 1-byte signed integer numbers. The range of numbers is from-128to127.ShortType: Represents 2-byte signed integer numbers. The range of numbers is from-32768to32767.IntegerType: Represents 4-byte signed integer numbers. The range of numbers is from-2147483648to2147483647.LongType: Represents 8-byte signed integer numbers. The range of numbers is from-9223372036854775808to9223372036854775807.FloatType: Represents 4-byte single-precision floating point numbers.DoubleType: Represents 8-byte double-precision floating point numbers.DecimalType: Represents arbitrary-precision signed decimal numbers. Backed internally byjava.math.BigDecimal. ABigDecimalconsists of an arbitrary precision integer unscaled value and a 32-bit integer scale.

- String type

StringType: Represents character string values.

- Binary type

BinaryType: Represents byte sequence values.

- Boolean type

BooleanType: Represents boolean values.

- Datetime type

TimestampType: Represents values comprising values of fields year, month, day, hour, minute, and second.DateType: Represents values comprising values of fields year, month, day.

- Complex types

ArrayType(elementType, containsNull): Represents values comprising a sequence of elements with the type ofelementType.containsNullis used to indicate if elements in aArrayTypevalue can havenullvalues.MapType(keyType, valueType, valueContainsNull): Represents values comprising a set of key-value pairs. The data type of keys are described bykeyTypeand the data type of values are described byvalueType. For aMapTypevalue, keys are not allowed to havenullvalues.valueContainsNullis used to indicate if values of aMapTypevalue can havenullvalues.StructType(fields): Represents values with the structure described by a sequence ofStructFields (fields).StructField(name, dataType, nullable): Represents a field in aStructType. The name of a field is indicated byname. The data type of a field is indicated bydataType.nullableis used to indicate if values of this fields can havenullvalues.

Corresponding to the type of data here pyspark pyspark.sql.types

Some common conversion scenarios:

1. Converts a date / timestamp / string to a value of string, the string is converted into the format specified by the second argument

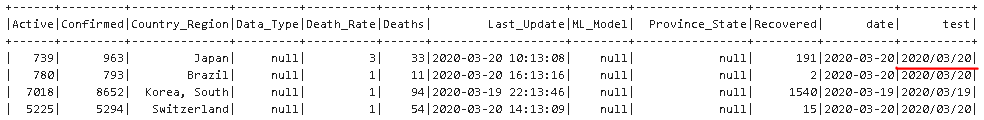

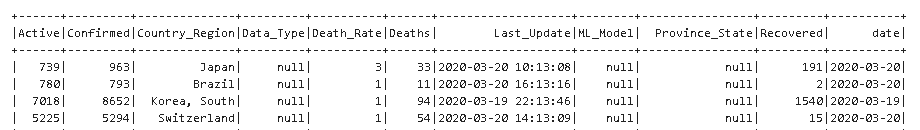

df.withColumn('test', F.date_format(col('Last_Update'),"yyyy/MM/dd")).show()

2. turn into a string, can be cast into the type you want, such as following the date type

df = df.withColumn('date', F.date_format(col('Last_Update'),"yyyy-MM-dd").alias('ts').cast("date"))

3. The timestamp number of seconds (from the beginning of 1970) turn into a date format string

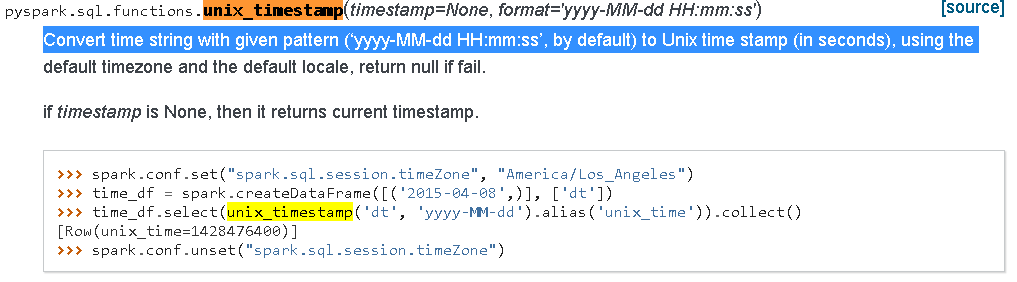

4. unix_timestamp String to timestamp the date seconds, the operation is the inverse operation of the above

Because unix_timestamp not consider ms, ms must consider if you can use the following method

df1 = df.withColumn("unix_timestamp",F.unix_timestamp(df.TIME,'dd-MMM-yyyy HH:mm:ss.SSS z') + F.substring(df.TIME,-7,3).cast('float')/1000)

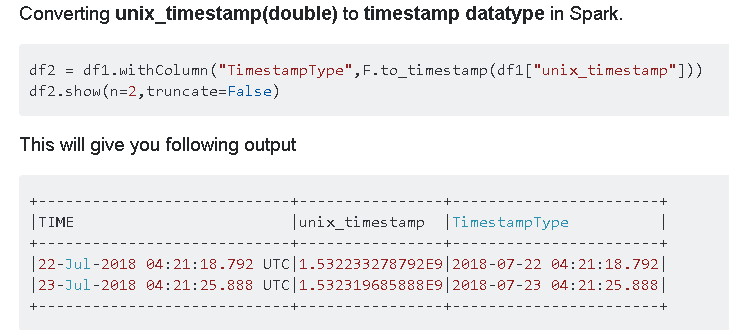

5. timestamp seconds converted timestamp type, can be used F.to_timestamp

Ref: