What is image projection

To implement image projection, we must first understand what a projection is. Image projection comes in two kinds: horizontal projection and vertical projection. Horizontal projection projects the two-dimensional image onto the y-axis row by row; vertical projection projects the image onto the x-axis column by column. The result of either projection can be viewed as a one-dimensional image.

For a two-dimensional binary image, projecting means stacking (counting) the pixels along one direction. With this principle understood, we are ready to write the projection code:

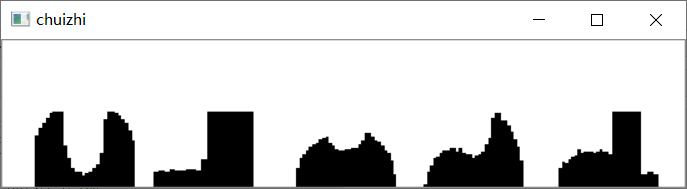
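Before moving to a real image, the stacking principle can be checked on a tiny hand-made binary image. The following is only a minimal sketch in plain Python; the 3x4 `image` and the two helper functions are made up for illustration, and black pixels (value 0) are assumed to be the ones being counted, as in the OpenCV code in this post.

```python
# A tiny binary image: 0 = black (counted), 255 = white (ignored).
image = [
    [255,   0,   0, 255],
    [255,   0, 255, 255],
    [  0,   0,   0, 255],
]

def horizontal_projection(binary):
    """Count the black pixels in each row (projection onto the y-axis)."""
    return [sum(1 for pixel in row if pixel == 0) for row in binary]

def vertical_projection(binary):
    """Count the black pixels in each column (projection onto the x-axis)."""
    # zip(*binary) iterates over the columns of the nested list.
    return [sum(1 for pixel in column if pixel == 0) for column in zip(*binary)]

row_counts = horizontal_projection(image)
column_counts = vertical_projection(image)
print(row_counts)     # [2, 1, 3]
print(column_counts)  # [1, 3, 2, 0]
```

Each list is the one-dimensional result of the projection: one count per row for the horizontal case, one count per column for the vertical case.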

Horizontal projection:

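    import cv2

    # Load the image and show the original
    img = cv2.imread('123.jpg')
    cv2.imshow('origin', img)
    cv2.waitKey(0)

    # Convert to grayscale, then binarize: pixels above 130 become 255, the rest 0
    grayimg = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    _, thres = cv2.threshold(grayimg, 130, 255, cv2.THRESH_BINARY)

    (h, w) = thres.shape
    a = [0 for i in range(0, h)]  # number of black pixels in each row

    # Count the black pixels in every row, clearing each one to white
    for i in range(0, h):
        for j in range(0, w):
            if thres[i, j] == 0:
                a[i] += 1
                thres[i, j] = 255

    # Redraw each row's count as a black bar starting at the left edge
    for i in range(0, h):
        for j in range(0, a[i]):
            thres[i, j] = 0

    # 'shuiping' is pinyin for "horizontal"
    cv2.imshow('shuiping', thres)
    cv2.waitKey(0)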

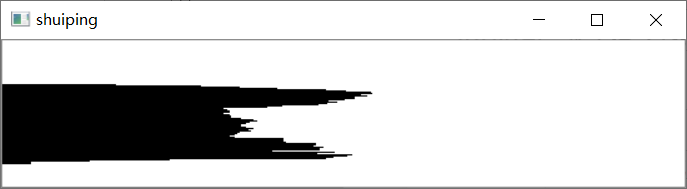
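The row-by-row counting used for horizontal projection can also be written without explicit pixel loops. The sketch below is one possible NumPy formulation, not the method used in this post; the function name `draw_horizontal_projection` and the small `demo` array are hypothetical, and it assumes a single-channel binary array in which black pixels have the value 0.

```python
import numpy as np

def draw_horizontal_projection(binary):
    """Return per-row black-pixel counts and an image showing them as bars."""
    h, w = binary.shape
    # Number of black pixels in each row, shape (h,)
    counts = np.sum(binary == 0, axis=1)
    # Start from an all-white canvas of the same size
    canvas = np.full((h, w), 255, dtype=np.uint8)
    # A pixel is black when its column index is smaller than the row's count
    mask = np.arange(w)[None, :] < counts[:, None]
    canvas[mask] = 0
    return counts, canvas

demo = np.array(
    [[255, 0, 0, 255],
     [255, 0, 255, 255],
     [0, 0, 0, 255]],
    dtype=np.uint8,
)
counts, canvas = draw_horizontal_projection(demo)
print(counts.tolist())  # [2, 1, 3]
print(canvas.tolist())  # [[0, 0, 255, 255], [0, 255, 255, 255], [0, 0, 0, 255]]
```

On large images this avoids the per-pixel Python loop, which is usually the slow part. Summing over `axis=0` instead would give the per-column counts needed for vertical projection.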

Vertical projection:

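    import cv2

    # Load the image and show the original
    img = cv2.imread('123.jpg')
    cv2.imshow('origin', img)
    cv2.waitKey(0)

    # Convert to grayscale, then binarize: pixels above 130 become 255, the rest 0
    grayimg = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    _, thres = cv2.threshold(grayimg, 130, 255, cv2.THRESH_BINARY)

    (h, w) = thres.shape
    a = [0 for i in range(0, w)]  # number of black pixels in each column

    # Count the black pixels in every column, clearing each one to white
    for j in range(0, w):
        for i in range(0, h):
            if thres[i, j] == 0:
                a[j] += 1
                thres[i, j] = 255

    # Redraw each column's count as a black bar rising from the bottom edge
    for j in range(0, w):
        for i in range((h - a[j]), h):
            thres[i, j] = 0

    # 'chuizhi' is pinyin for "vertical"
    cv2.imshow('chuizhi', thres)
    cv2.waitKey(0)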

In the code above, note these lines:

    grayimg = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    _, thres = cv2.threshold(grayimg, 130, 255, cv2.THRESH_BINARY)

They are needed because the later loops use the height and width of the image in pixels. For a color image, the `shape` attribute returns the number of channels in addition to the height and width, and we do not need that third value. Converting to grayscale and then thresholding produces a single-channel binary image, so `thres.shape` contains only the height and width. `cv2.threshold` returns the threshold value together with the binary image, which is why the first return value is discarded.
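The difference in `shape` can be seen without loading any image. In the small sketch below, the two zero-filled arrays are stand-ins chosen for illustration: `color` has the three-dimensional layout that `cv2.imread` returns for a color image, and `gray` has the two-dimensional layout of a grayscale or binary image.

```python
import numpy as np

color = np.zeros((4, 6, 3), dtype=np.uint8)  # stand-in for a color (BGR) image
gray = np.zeros((4, 6), dtype=np.uint8)      # stand-in for a grayscale/binary image

print(color.shape)  # (4, 6, 3): height, width, channels
print(gray.shape)   # (4, 6): height, width

# Unpacking two values works for the single-channel image
h, w = gray.shape

# For the color image, slicing off the channel count also works
h2, w2 = color.shape[:2]

# Unpacking the full color shape into two names fails
try:
    h3, w3 = color.shape
    unpack_failed = False
except ValueError:
    unpack_failed = True
print(unpack_failed)  # True
```

If only the dimensions are needed, `img.shape[:2]` is an alternative that avoids the error. The grayscale and threshold steps are still required here, because the projection itself operates on a binary image.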

That concludes this experiment. I hope that after reading it you will try it out yourself.