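Text sentiment analysis is an important application area of natural language processing (NLP). It is mostly applied to evaluative user feedback, such as movie reviews and post-purchase product reviews. Here, sentiment analysis means quantifying the sentiment expressed in users' response text (in Chinese). There are two existing families of methods: 1. sentiment lexicon methods; 2. machine learning methods.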

Sentiment lexicon method

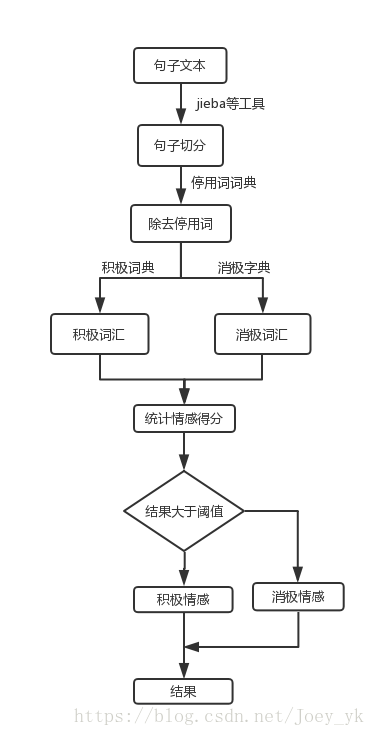

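In one sentence: segment the text into words, remove the stop words, pick out the positive and negative keywords among what remains, and compute a sentiment score.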

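First the text is segmented into words. This post uses the Chinese segmentation tool jieba; there are many other tools, such as SnowNLP, each with its own strengths, but the more accurate the segmentation, the more accurate the result. Next come the dictionaries. Three are used here: a stop-word dictionary, a positive sentiment dictionary, and a negative sentiment dictionary, sourced from HowNet (知网). The stop-word dictionary holds connective words such as “那么” ("so") and “怎么” ("how"), along with punctuation marks and other words that carry no sentiment; once these are removed, what remains is mostly the words that can carry sentiment. The more entries the dictionaries contain, the more accurate the sentiment prediction tends to be.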
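The pipeline just described can be sketched in a few lines. This is a minimal illustration, not the author's script: the three tiny word sets are hypothetical stand-ins for the HowNet-derived dictionaries, the function name `lexicon_score` is made up, and the input is assumed to be already segmented so that the sketch runs without jieba.

```python
# Hypothetical miniature dictionaries; the real ones are loaded from text files.
STOP_WORDS = {"的", "了", ",", "你们", "我"}
POSITIVE_WORDS = {"很棒", "满意"}
NEGATIVE_WORDS = {"太慢", "垃圾", "差劲"}


def lexicon_score(tokens):
    """Return (positive count, negative count, score) for a list of segmented words."""
    # Step 1: drop stop words.
    kept = [w for w in tokens if w not in STOP_WORDS]
    # Step 2: count dictionary hits.
    pos = sum(1 for w in kept if w in POSITIVE_WORDS)
    neg = sum(1 for w in kept if w in NEGATIVE_WORDS)
    # Step 3: the score is the difference of the two counts.
    return pos, neg, pos - neg


# Pre-segmented input, roughly as a segmenter such as jieba might produce it.
tokens = ["以前", "你们", "很棒", "的", ",", "现在", "进度", "太慢", "了", ",", "垃圾"]
print(lexicon_score(tokens))  # (1, 2, -1)
```

A score below zero is read as negative sentiment, above zero as positive. Using sets for the dictionaries keeps each membership test cheap even when the word lists are large.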

Code:

    # -*- coding: utf-8 -*-
    __author__ = 'JoeyYK'

    import jieba


    def load_words(path):
        # Read a one-word-per-line text file into a set, dropping the line endings.
        with open(path, encoding='utf-8') as f:
            return {line.rstrip('\r\n') for line in f}


    print('loading dictionary dataset...')
    # Load the negative sentiment dictionary
    negitive_word = load_words('negitive_word.txt')
    # Load the positive sentiment dictionary
    posstive_word = load_words('posstive_word.txt')
    # Load the stop-word dictionary once, instead of re-reading it on every call
    stop_word = load_words('stop_word.txt')


    # Word segmentation
    def segmentation(sentence):
        return list(jieba.cut(sentence))


    # Remove stop words
    def del_stopWord(seg_result):
        return [word for word in seg_result if word not in stop_word]


    # Score a sentence: number of positive words minus number of negative words
    def sentence_score(sentences):
        seg_result = segmentation(sentences)
        seg_result = del_stopWord(seg_result)
        print(seg_result)
        poscount = 0
        negcount = 0
        for word in seg_result:
            if word in negitive_word:
                negcount += 1
            elif word in posstive_word:
                poscount += 1
        final_score = poscount - negcount
        print('poscount:', poscount)
        print('negcount:', negcount)
        print('final_score:', final_score)
        return final_score


    if __name__ == '__main__':
        # "You used to be great, but now your repair progress is far too slow. I have
        # been waiting for days. It really is rubbish, terrible. I want to file a complaint."
        sentences = '以前你们很棒的,现在你们这个维修的进度也太慢了吧,我都等了几天了,真的是垃圾,太差劲了,我要投诉你们'
        print(sentences)
        print(segmentation(sentences))
        sentence_score(sentences)

Output:

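    /home/joey/anaconda3/bin/python /home/joey/PycharmProjects/workspace/emotion/emotion_test.py
    loading dictionary dataset...
    Building prefix dict from the default dictionary ...
    Loading model from cache /tmp/jieba.cache
    以前你们很棒的,现在你们这个维修的进度也太慢了吧,我都等了几天了,真的是垃圾,太差劲了,我要投诉你们
    ['以前', '你们', '很棒', '的', ',', '现在', '你们', '这个', '维修', '的', '进度', '也', '太慢', '了', '吧', ',', '我', '都', '等', '了', '几天', '了', ',', '真的', '是', '垃圾', ',', '太', '差劲', '了', ',', '我要', '投诉', '你们']
    ['很棒', '维修', '进度', '太慢', '几天', '真的', '垃圾', '太', '差劲', '我要', '投诉']
    Loading model cost 1.298 seconds.
    Prefix dict has been built succesfully.
    poscount: 1
    negcount: 3
    final_score: -2

    Process finished with exit code 0

The lexicon method has several drawbacks. First, its accuracy depends heavily on the dictionaries: only when they are large enough and contain enough domain-specific vocabulary can sentiment be predicted accurately. Second, it is nothing more than keyword matching, and judging sentiment by counting positive and negative words is very limiting. Take “我开始觉得你们非常的差劲,进度慢,效率垃圾,但是我接触后才发现非常的好” ("At first I thought you were terrible, slow and hopelessly inefficient, but after dealing with you I found you are very good"). The sentence is actually praise, so its sentiment is positive, yet the lexicon method scores it as negative. This motivates the second approach, machine learning.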
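To make this limitation concrete, the sketch below counts dictionary hits for the contrastive sentence quoted above. The segmentation and the two word sets are assumptions made for illustration; they are not jieba output and have not been checked against HowNet.

```python
# Hypothetical segmentation of:
# "我开始觉得你们非常的差劲,进度慢,效率垃圾,但是我接触后才发现非常的好"
tokens = ["我", "开始", "觉得", "你们", "非常", "的", "差劲", ",",
          "进度", "慢", ",", "效率", "垃圾", ",",
          "但是", "我", "接触", "后", "才", "发现", "非常", "的", "好"]

# Hypothetical dictionary entries.
positive_words = {"好"}
negative_words = {"差劲", "慢", "垃圾"}

poscount = sum(1 for w in tokens if w in positive_words)
negcount = sum(1 for w in tokens if w in negative_words)
final_score = poscount - negcount

print(poscount, negcount, final_score)  # 1 3 -2
```

Counting treats every matched word equally, so the three complaints before 但是 ("but") outweigh the single word of praise after it, and a sentence that ends in praise is scored as negative.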

Machine learning method

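The machine learning approach relies on the word2vec word-embedding model, which maps each word to a numeric vector so that the distance between two vectors measures how similar the words are. A model is then trained with supervision on sequences of word vectors taken from positive and negative reviews, and its predictions give the result. The model used here is an LSTM, a recurrent neural network whose ability to carry information across time steps suits sequences of word vectors. The deep learning framework is TensorFlow.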
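As a rough illustration of measuring word similarity through vector distance, the sketch below computes cosine similarity in plain Python. The three-dimensional vectors are made up for the example; real word2vec vectors come from a trained model and have many more dimensions, and cosine similarity is only one common choice of measure.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional vectors standing in for trained word embeddings.
toy_vectors = {
    "good": [0.9, 0.1, 0.0],
    "great": [0.8, 0.2, 0.1],
    "bad": [-0.7, 0.3, 0.1],
}

sim_close = cosine_similarity(toy_vectors["good"], toy_vectors["great"])
sim_far = cosine_similarity(toy_vectors["good"], toy_vectors["bad"])
print(sim_close > sim_far)  # True
```

Words used in similar contexts end up with vectors pointing in similar directions, which is what lets a classifier generalize from words seen in training to related words it has not seen with a label.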

The code is borrowed from an expert's repository: https://github.com/vortexJCH/-LSTM-

Code:

    import warnings
    warnings.filterwarnings(action='ignore', category=UserWarning, module='gensim')
    import gensim
    from gensim.models import word2vec
    import jieba
    import tensorflow as tf  # this listing uses the TensorFlow 1.x API (tf.placeholder, tf.Session)
    import numpy as np
    import time
    from random import shuffle

    #----------------------------------
    # Load the stop words
    def makeStopWord():
        with open('停用词.txt', 'r', encoding='utf-8') as f:
            lines = f.readlines()
        stopWord = []
        for line in lines:
            words = jieba.lcut(line, cut_all=False)
            for word in words:
                stopWord.append(word)
        return stopWord

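    # Convert each segmented line into a MAX_SIZE x dimsh array of word vectors
    def words2Array(lineList):
        linesArray = []
        wordsArray = []
        steps = []
        for line in lineList:
            t = 0  # words missing from the word2vec vocabulary
            p = 0  # words successfully converted to vectors
            for i in range(MAX_SIZE):
                if i < len(line):
                    try:
                        # Indexing model.wv raises KeyError for out-of-vocabulary words
                        wordsArray.append(model.wv[line[i]])
                        p = p + 1
                    except KeyError:
                        t = t + 1
                        continue
                else:
                    # Line shorter than MAX_SIZE: pad with zero vectors
                    wordsArray.append(np.array([0.0] * dimsh))
            # Add one zero vector per skipped word so every line has MAX_SIZE rows
            for i in range(t):
                wordsArray.append(np.array([0.0] * dimsh))
            steps.append(p)  # true sequence length, passed to the LSTM later
            linesArray.append(wordsArray)
            wordsArray = []
        linesArray = np.array(linesArray)
        steps = np.array(steps)
        return linesArray, steps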

    # Merge the positive and negative samples, shuffle them, and split them
    # back into data, sequence lengths, and one-hot labels
    def convert2Data(posArray, negArray, posStep, negStep):
        randIt = []
        data = []
        steps = []
        labels = []
        for i in range(len(posArray)):
            randIt.append([posArray[i], posStep[i], [1, 0]])  # [1, 0] marks positive
        for i in range(len(negArray)):
            randIt.append([negArray[i], negStep[i], [0, 1]])  # [0, 1] marks negative
        shuffle(randIt)
        for i in range(len(randIt)):
            data.append(randIt[i][0])
            steps.append(randIt[i][1])
            labels.append(randIt[i][2])
        data = np.array(data)
        steps = np.array(steps)
        return data, steps, labels

    # Read a file, segment each line into words, and remove the stop words
    def getWords(file):
        wordList = []
        trans = []
        lineList = []
        with open(file, 'r', encoding='utf-8') as f:
            lines = f.readlines()
        for line in lines:
            trans = jieba.lcut(line.replace('\n', ''), cut_all=False)
            for word in trans:
                if word not in stopWord:
                    wordList.append(word)
            lineList.append(wordList)
            wordList = []
        return lineList

    # Build the training and test data sets
    def makeData(posPath, negPath):
        # Get the words; the return type is [[word1,word2...],[word1,word2...],...]
        pos = getWords(posPath)
        print("The positive data's length is :", len(pos))
        neg = getWords(negPath)
        print("The negative data's length is :", len(neg))
        # Convert the review data into matrices; the return type is array
        posArray, posSteps = words2Array(pos)
        negArray, negSteps = words2Array(neg)
        # Mix the positive and negative data, shuffle, and build the data set
        Data, Steps, Labels = convert2Data(posArray, negArray, posSteps, negSteps)
        return Data, Steps, Labels

    #----------------------------------------------
    # Word60.model: 60 dimensions
    # word2vec.model: 200 dimensions
    timeA = time.time()
    word2vec_path = 'word2vec/word2vec.model'
    model = gensim.models.Word2Vec.load(word2vec_path)
    dimsh = model.vector_size
    MAX_SIZE = 25
    stopWord = makeStopWord()
    print("In train data:")
    trainData, trainSteps, trainLabels = makeData('data/A/Pos-train.txt',
                                                  'data/A/Neg-train.txt')
    print("In test data:")
    testData, testSteps, testLabels = makeData('data/A/Pos-test.txt',
                                               'data/A/Neg-test.txt')
    trainLabels = np.array(trainLabels)
    # Free the word2vec model; the name `model` is reused below for the network function
    del model
    # print("-"*30)
    # print("The trainData's shape is:",trainData.shape)
    # print("The testData's shape is:",testData.shape)
    # print("The trainSteps's shape is:",trainSteps.shape)
    # print("The testSteps's shape is:",testSteps.shape)
    # print("The trainLabels's shape is:",trainLabels.shape)
    # print("The testLabels's shape is:",np.array(testLabels).shape)
    num_nodes = 128
    batch_size = 16
    output_size = 2

    # Build the model with TensorFlow
    graph = tf.Graph()  # define a computation graph
    with graph.as_default():  # build the graph
        tf_train_dataset = tf.placeholder(tf.float32, shape=(batch_size, MAX_SIZE, dimsh))
        tf_train_steps = tf.placeholder(tf.int32, shape=(batch_size,))
        tf_train_labels = tf.placeholder(tf.float32, shape=(batch_size, output_size))
        tf_test_dataset = tf.constant(testData, tf.float32)
        tf_test_steps = tf.constant(testSteps, tf.int32)
        # Use an LSTM recurrent neural network
        lstm_cell = tf.nn.rnn_cell.BasicLSTMCell(num_units=num_nodes, state_is_tuple=True)
        w1 = tf.Variable(tf.truncated_normal([num_nodes, num_nodes // 2], stddev=0.1))
        b1 = tf.Variable(tf.truncated_normal([num_nodes // 2], stddev=0.1))
        w2 = tf.Variable(tf.truncated_normal([num_nodes // 2, 2], stddev=0.1))
        b2 = tf.Variable(tf.truncated_normal([2], stddev=0.1))

        def model(dataset, steps):
            outputs, last_states = tf.nn.dynamic_rnn(cell=lstm_cell,
                                                     dtype=tf.float32,
                                                     sequence_length=steps,
                                                     inputs=dataset)
            hidden = last_states[-1]  # final hidden state h of the LSTM
            hidden = tf.matmul(hidden, w1) + b1
            logits = tf.matmul(hidden, w2) + b2
            return logits

        train_logits = model(tf_train_dataset, tf_train_steps)
        loss = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(labels=tf_train_labels, logits=train_logits))
        optimizer = tf.train.GradientDescentOptimizer(0.01).minimize(loss)
        # Prediction on the test set
        test_prediction = tf.nn.softmax(model(tf_test_dataset, tf_test_steps))

    num_steps = 20001
    summary_frequency = 500
    with tf.Session(graph=graph) as session:
        tf.global_variables_initializer().run()
        print('Initialized')
        mean_loss = 0
        for step in range(num_steps):
            offset = (step * batch_size) % (len(trainLabels) - batch_size)
            feed_dict = {tf_train_dataset: trainData[offset:offset + batch_size],
                         tf_train_labels: trainLabels[offset:offset + batch_size],
                         tf_train_steps: trainSteps[offset:offset + batch_size]}
            _, l = session.run([optimizer, loss],
                               feed_dict=feed_dict)
            mean_loss += l
            if step > 0 and step % summary_frequency == 0:
                mean_loss = mean_loss / summary_frequency
                print("The step is: %d" % (step))
                print("In train data,the loss is:%.4f" % (mean_loss))
                mean_loss = 0
                acrc = 0
                prediction = session.run(test_prediction)
                for i in range(len(prediction)):
                    if prediction[i][testLabels[i].index(1)] > 0.5:
                        acrc = acrc + 1
                print("In test data,the accuracy is:%.2f%%" % ((acrc / len(testLabels)) * 100))
    #####################################
    timeB = time.time()
    print("time cost:", int(timeB - timeA))
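The padding logic in `words2Array` is easy to miss in the listing, so here is a simplified sketch of the same idea in plain Python. The names `pad_sentence` and `toy_vectors`, the tiny sizes, and the vector values are all hypothetical; the listing itself looks words up in the trained word2vec model and uses `MAX_SIZE = 25`.

```python
MAX_SIZE = 5   # the listing uses 25
DIM = 3        # the listing uses the word2vec vector size

# Hypothetical lookup table standing in for the trained word2vec model.
toy_vectors = {"进度": [0.1, 0.2, 0.3], "太慢": [-0.5, 0.0, 0.4]}


def pad_sentence(words):
    """Return (MAX_SIZE x DIM list of vectors, number of real vectors)."""
    # Keep vectors for known words among the first MAX_SIZE words; skip unknown ones.
    known = [toy_vectors[w] for w in words[:MAX_SIZE] if w in toy_vectors]
    # Fill the remaining rows with zero vectors so every sentence has the same shape.
    padding = [[0.0] * DIM for _ in range(MAX_SIZE - len(known))]
    return known + padding, len(known)


vectors, steps = pad_sentence(["进度", "未知词", "太慢"])
print(len(vectors), steps)  # 5 2
```

The second return value plays the role of `steps` in the listing: it is passed to `tf.nn.dynamic_rnn` as `sequence_length`, so the LSTM stops at the last real word instead of reading the zero padding.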

Results:

After 20,000 iterations the results are:

    ...
    The step is: 18000
    In train data,the loss is:0.0230
    In test data,the accuracy is:97.04%
    The step is: 18500
    In train data,the loss is:0.0210
    In test data,the accuracy is:96.94%
    The step is: 19000
    In train data,the loss is:0.0180
    In test data,the accuracy is:96.89%
    The step is: 19500
    In train data,the loss is:0.0157
    In test data,the accuracy is:97.49%
    The step is: 20000
    In train data,the loss is:0.0171
    In test data,the accuracy is:96.64%
    time cost: 809

Given a sufficiently large corpus, the machine learning method performs somewhat better.
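For reference, the accuracy printed in the log counts a test review as correct when the softmax probability assigned to its true class exceeds 0.5. The sketch below reproduces that rule on made-up numbers; the probabilities and labels are invented for illustration and are not taken from the actual run.

```python
# Made-up softmax outputs, one [p_positive, p_negative] pair per test review.
predictions = [[0.92, 0.08], [0.30, 0.70], [0.45, 0.55], [0.10, 0.90]]
# One-hot labels in the listing's encoding: [1, 0] is positive, [0, 1] is negative.
labels = [[1, 0], [0, 1], [1, 0], [0, 1]]

# A prediction is correct when the probability of the true class is above 0.5.
correct = sum(1 for p, y in zip(predictions, labels) if p[y.index(1)] > 0.5)
accuracy = correct / len(labels) * 100
print("%.2f%%" % accuracy)  # 75.00%
```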