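Preface

This article implements the ShuffleNet_V1 network structure in Chainer, following the layout of a PyTorch implementation, and computes the number of parameters of ShuffleNet_V1.

Code implementation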

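import math

import numpy as np
import chainer
import chainer.functions as F
import chainer.links as L


def conv3x3(in_channels, out_channels, stride=1, padding=1, bias=True, groups=1):
    """3x3 convolution with padding; depthwise when groups == in_channels."""
    return L.Convolution2D(
        in_channels,
        out_channels,
        ksize=3,
        stride=stride,
        pad=padding,
        nobias=not bias,
        groups=groups)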

def conv1x1(in_channels, out_channels, groups=1):
    """1x1 convolution (no padding)
    - Normal pointwise convolution when groups == 1
    - Grouped pointwise convolution when groups > 1
    """
    return L.Convolution2D(
        in_channels,
        out_channels,
        ksize=1,
        groups=groups,
        stride=1)
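Because the goal of this article includes counting parameters, it may help to see how `groups` changes the size of a convolution. The following is a minimal sketch in plain Python, independent of Chainer; the helper name `conv_param_count` is hypothetical and not part of the original code.

```python
def conv_param_count(in_channels, out_channels, ksize, groups=1, bias=True):
    """Parameter count of a 2D convolution.

    Each output channel only sees in_channels // groups input channels,
    so the weight tensor shrinks by a factor of `groups`.
    """
    if in_channels % groups or out_channels % groups:
        raise ValueError("channels must be divisible by groups")
    weights = out_channels * (in_channels // groups) * ksize * ksize
    return weights + (out_channels if bias else 0)


# 1x1 pointwise convolution, 240 -> 60 channels
print(conv_param_count(240, 60, 1, groups=1))   # 240*60 + 60 = 14460
print(conv_param_count(240, 60, 1, groups=3))   # 80*60 + 60 = 4860
# 3x3 depthwise convolution on 60 channels (groups == channels)
print(conv_param_count(60, 60, 3, groups=60))   # 1*60*9 + 60 = 600
```

With three groups the pointwise weights drop to one third, which is where most of the savings in a grouped bottleneck come from.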

def channel_shuffle(x, groups=2):
    n, c, h, w = x.shape
    # split channels into (groups, channels_per_group)
    x = x.reshape(n, groups, c // groups, h, w)
    # swap the two channel axes so channels from different groups interleave
    x = x.transpose(0, 2, 1, 3, 4)
    x = x.reshape(n, c, h, w)
    return x
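To see what the reshape/transpose/reshape sequence does to the channel order, here is a small NumPy sketch of the same steps. The function name `channel_shuffle_np` is an illustrative stand-in that works on plain arrays rather than Chainer variables.

```python
import numpy as np


def channel_shuffle_np(x, groups):
    """Same reshape -> transpose -> reshape steps, on a NumPy array."""
    n, c, h, w = x.shape
    x = x.reshape(n, groups, c // groups, h, w)
    x = x.transpose(0, 2, 1, 3, 4)
    return x.reshape(n, c, h, w)


# One 1x1 "image" with 6 channels; each value equals its channel index.
x = np.arange(6, dtype=np.float32).reshape(1, 6, 1, 1)
shuffled = channel_shuffle_np(x, groups=2)
print(shuffled.ravel().tolist())  # [0.0, 3.0, 1.0, 4.0, 2.0, 5.0]
```

Channels 0-2 belong to the first group and 3-5 to the second; after the shuffle they alternate, so the next grouped convolution receives inputs from both groups.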

class ShuffleUnit(chainer.Chain):

    def _make_grouped_conv1x1(self, group_num, in_channels, out_channels, groups, batch_norm=True, relu=False):
        self.layers += [('conv1x1_{0}'.format(group_num), conv1x1(in_channels, out_channels, groups=groups))]
        if batch_norm:
            self.layers += [('batch_norm_{0}'.format(group_num), L.BatchNormalization(out_channels))]
        if relu:
            # names starting with '_' are parameter-free functions called directly in forward
            self.layers += [('_relu_{0}'.format(group_num), F.relu)]

    def __init__(self, in_channels, out_channels, groups=3, grouped_conv=True, combine='add'):
        super(ShuffleUnit, self).__init__()

        self.in_channels = in_channels
        self.out_channels = out_channels
        self.grouped_conv = grouped_conv
        self.combine = combine
        self.groups = groups
        self.bottleneck_channels = self.out_channels // 4

        if self.combine == 'add':
            # identity shortcut: spatial size and channel count are unchanged
            self.depthwise_stride = 1
            self._combine_func = self._add
        elif self.combine == 'concat':
            # downsampling unit: the branch only produces the channels
            # that the pooled shortcut does not already provide
            self.depthwise_stride = 2
            self._combine_func = self._concat
            self.out_channels -= self.in_channels
        else:
            raise ValueError("Cannot combine tensors with \"{}\". "
                             "Only \"add\" and \"concat\" are "
                             "supported".format(self.combine))

        self.first_1x1_groups = self.groups if grouped_conv else 1

        self.layers = []
        # g_conv_1x1_compress
        self._make_grouped_conv1x1(1, self.in_channels, self.bottleneck_channels, self.first_1x1_groups, batch_norm=True, relu=True)
        # '@' marks the channel shuffle step, which has no parameters
        self.layers += [('@channel_shuffle', None)]
        # 3x3 depthwise convolution followed by batch normalization
        self.layers += [('depthwise_conv3x3', conv3x3(self.bottleneck_channels, self.bottleneck_channels, stride=self.depthwise_stride, groups=self.bottleneck_channels))]
        self.layers += [('bn_after_depthwise', L.BatchNormalization(self.bottleneck_channels))]
        # Use 1x1 grouped convolution to expand from
        # bottleneck_channels to out_channels
        # g_conv_1x1_expand
        self._make_grouped_conv1x1(2, self.bottleneck_channels, self.out_channels, self.groups, batch_norm=True, relu=False)

        # register only the links (entries with parameters) as children
        with self.init_scope():
            for n in self.layers:
                if not n[0].startswith('_') and not n[0].startswith('@'):
                    setattr(self, n[0], n[1])

    def _add(self, x, out):
        return x + out

    def _concat(self, x, out):
        return F.concat((x, out))

    def forward(self, x):
        # save for combining later with output
        residual = x
        if self.combine == 'concat':
            residual = F.average_pooling_2d(residual, ksize=3, stride=2, pad=1)

        for n, f in self.layers:
            # print(n, x.shape)
            if n.startswith('_'):
                x = f(x)
            elif n.startswith('@'):
                x = channel_shuffle(x, self.groups)
            else:
                x = getattr(self, n)(x)

        x = self._combine_func(residual, x)
        return F.relu(x)
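The channel bookkeeping in `ShuffleUnit.__init__` is easy to misread, so here is a small sketch that reproduces only that arithmetic. The helper `shuffle_unit_channels` is hypothetical; the example numbers (24 and 240) are taken from the `shufflenetv1_groups_3` configuration used by the network class.

```python
def shuffle_unit_channels(in_channels, out_channels, combine):
    """Return (bottleneck_channels, branch_out_channels) for one unit."""
    bottleneck = out_channels // 4
    if combine == 'concat':
        # The pooled shortcut contributes in_channels, so the branch
        # only needs to produce the remaining channels.
        branch_out = out_channels - in_channels
    elif combine == 'add':
        # The shortcut is added element-wise, so shapes must match.
        branch_out = out_channels
    else:
        raise ValueError("combine must be 'add' or 'concat'")
    return bottleneck, branch_out


print(shuffle_unit_channels(24, 240, 'concat'))   # (60, 216)
print(shuffle_unit_channels(240, 240, 'add'))     # (60, 240)
```

In the `concat` case, 216 branch channels plus the 24 shortcut channels give the 240 channels the stage is configured for.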

class ShuffleNet_V1(chainer.Chain):
    # index 0 of stage_out_channels is a placeholder so that stage numbers index directly
    cfgs = {
        'shufflenetv1_groups_1': {
            'groups': 1, 'stages_repeats': [3, 7, 3], 'stage_out_channels': [-1, 24, 144, 288, 576]},
        'shufflenetv1_groups_2': {
            'groups': 2, 'stages_repeats': [3, 7, 3], 'stage_out_channels': [-1, 24, 200, 400, 800]},
        'shufflenetv1_groups_3': {
            'groups': 3, 'stages_repeats': [3, 7, 3], 'stage_out_channels': [-1, 24, 240, 480, 960]},
        'shufflenetv1_groups_4': {
            'groups': 4, 'stages_repeats': [3, 7, 3], 'stage_out_channels': [-1, 24, 272, 544, 1088]},
        'shufflenetv1_groups_8': {
            'groups': 8, 'stages_repeats': [3, 7, 3], 'stage_out_channels': [-1, 24, 384, 768, 1536]}
    }

    def _make_stage(self, stage):
        stage_name = "ShuffleUnit_Stage{}".format(stage)
        # Stage 2 starts from only 24 channels, so its first 1x1 convolution is not grouped
        grouped_conv = stage > 2
        # the first unit of each stage downsamples and concatenates
        self.layers += [(stage_name + "_0", ShuffleUnit(self.stage_out_channels[stage - 1], self.stage_out_channels[stage], groups=self.groups, grouped_conv=grouped_conv, combine='concat'))]
        # add more ShuffleUnits depending on pre-defined number of repeats
        for i in range(self.stage_repeats[stage - 2]):
            name = stage_name + "_{}".format(i + 1)
            self.layers += [(name, ShuffleUnit(self.stage_out_channels[stage], self.stage_out_channels[stage], groups=self.groups, grouped_conv=True, combine='add'))]

    def __init__(self, model_name='shufflenetv1_groups_1', channels=3, num_classes=1000, batch_size=4, image_size=224, **kwargs):
        super(ShuffleNet_V1, self).__init__()
        self.groups = self.cfgs[model_name]['groups']
        self.stage_repeats = self.cfgs[model_name]['stages_repeats']
        self.in_channels = channels
        self.num_classes = num_classes
        self.image_size = image_size

        # index 0 is invalid and should never be called.
        # only used for indexing convenience.
        self.stage_out_channels = self.cfgs[model_name]['stage_out_channels']

        self.layers = []
        # Stage 1 always has 24 output channels
        self.layers += [('conv1', conv3x3(self.in_channels, self.stage_out_channels[1], stride=2))]
        output_size = int((self.image_size - 3 + 2 * 1) / 2 + 1)

        # names starting with '_' are parameter-free functions called directly in forward
        self.layers += [('_maxpool', lambda x: F.max_pooling_2d(x, ksize=3, stride=2, pad=1))]
        # max pooling rounds the output size up, hence math.ceil
        output_size = math.ceil((output_size - 3 + 2 * 1) / 2 + 1)

        # Stage 2
        self._make_stage(2)
        output_size = int((output_size - 3 + 2 * 1) / 2 + 1)
        # Stage 3
        self._make_stage(3)
        output_size = int((output_size - 3 + 2 * 1) / 2 + 1)
        # Stage 4
        self._make_stage(4)
        output_size = int((output_size - 3 + 2 * 1) / 2 + 1)

        num_inputs = self.stage_out_channels[-1]
        # global average pooling over the remaining spatial extent
        self.layers += [('_avgpool', lambda x: F.average_pooling_2d(x, ksize=output_size, stride=1, pad=0))]
        self.layers += [('_reshape', lambda x: F.reshape(x, (batch_size, num_inputs)))]
        self.layers += [('fc', L.Linear(num_inputs, self.num_classes))]

        # register only the links (entries with parameters) as children
        with self.init_scope():
            for n in self.layers:
                if not n[0].startswith('_'):
                    setattr(self, n[0], n[1])

    def forward(self, x):
        for n, f in self.layers:
            origin_size = x.shape
            if not n.startswith('_'):
                x = getattr(self, n)(x)
            else:
                x = f(x)
            # log the shape before and after each layer
            print(n, origin_size, x.shape)
        if chainer.config.train:
            return x
        return F.softmax(x)

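Note that this class is the ShuffleNet_V1 implementation. Its forward pass distinguishes training from testing: during training it returns x (the raw class scores) directly, and during testing it passes x through softmax to obtain class probabilities.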
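Returning raw scores during training fits the usage shown later, where the output is passed to `F.softmax_cross_entropy`, a loss that takes unnormalized scores and applies the softmax internally. The NumPy sketch below illustrates the relationship between the two outputs; the `softmax` helper and the sample scores are made up for illustration.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


# Two samples, three classes (arbitrary example scores).
logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 0.5, 0.5]], dtype=np.float32)
probs = softmax(logits)

print(probs.sum(axis=1))                              # each row sums to 1
print(logits.argmax(axis=1) == probs.argmax(axis=1))  # predicted class is unchanged
```

Softmax only rescales the scores into probabilities; the predicted class is the same either way, so applying it at test time changes the interpretation of the output but not the ranking.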

Usage

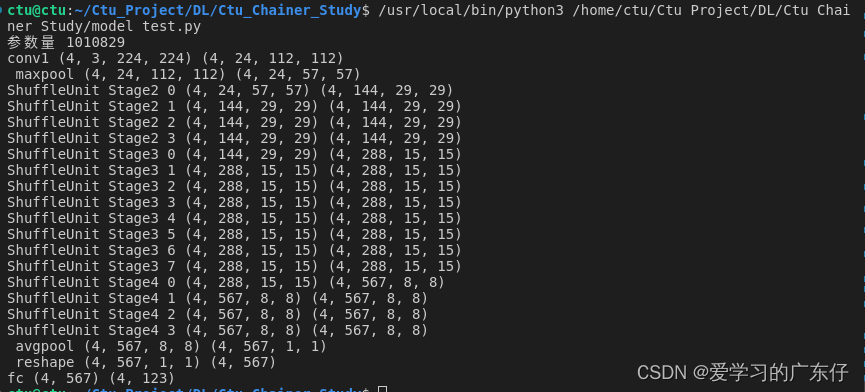

if __name__ == '__main__':
    batch_size = 4
    n_channels = 3
    image_size = 224
    num_classes = 123

    model = ShuffleNet_V1(num_classes=num_classes, channels=n_channels, image_size=image_size, batch_size=batch_size)
    print("Number of parameters", model.count_params())

    # random input batch and random integer labels
    x = np.random.rand(batch_size, n_channels, image_size, image_size).astype(np.float32)
    t = np.random.randint(0, num_classes, size=(batch_size,)).astype(np.int32)

    with chainer.using_config('train', True):
        y1 = model(x)
        loss1 = F.softmax_cross_entropy(y1, t)
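The kernel size of the final average pooling depends on the spatial size tracked in the constructor, so it can be useful to check that arithmetic by hand for a 224x224 input. The following is a plain-Python sketch; the helper `conv_out` and its `cover_all` flag are illustrative names that mirror the `int(...)` and `math.ceil(...)` expressions in the constructor.

```python
import math


def conv_out(size, ksize=3, stride=2, pad=1, cover_all=False):
    """Output length of a strided 3x3 window along one spatial axis."""
    span = size + 2 * pad - ksize
    if cover_all:
        # round up, as the constructor does for the max pooling layer
        return math.ceil(span / stride) + 1
    return span // stride + 1


size = 224
size = conv_out(size)                    # conv1: 224 -> 112
size = conv_out(size, cover_all=True)    # max pooling: 112 -> 57
sizes = [size]
for stage in (2, 3, 4):
    size = conv_out(size)                # first (stride-2) unit of each stage
    sizes.append(size)

print(sizes)  # [57, 29, 15, 8]
```

Under these assumptions the feature map entering the final average pooling is 8x8, so pooling with `ksize=8` leaves a single value per channel before the fully connected layer.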