Simulation environment setup

The simulation environment here is for simulating development and debugging for the development board on a PC. It has nothing to do with the board itself. My operating system is Ubuntu 22.04. You need an NVIDIA graphics card (mine is a 3090); otherwise an error is reported when installing the Python components.

First install Anaconda. For the specific steps, see "Installing PyTorch and TensorFlow CUDA environments on Ubuntu". Then create a Python 3.10 environment:

```bash
conda create -n rknn python=3.10.0
source activate
conda activate rknn
```

Download the NPU SDK for RK35XX development boards from https://github.com/rockchip-linux/rknn-toolkit2

There are many versions available there; I am using 1.5.2.

Install the related dependencies:

```bash
sudo apt-get install libxslt1-dev zlib1g-dev libglib2.0-0 libsm6 libgl1-mesa-glx libprotobuf-dev gcc
```

Install the Python dependencies:

```bash
cd RK_NPU_SDK/RK_NPU_SDK_1.5.2/release
unzip rknn-toolkit2-1.5.2.zip
cd rknn-toolkit2-1.5.2/doc
pip install -r requirements_cp310-1.5.2.txt -i https://mirror.baidu.com/pypi/simple
```

Install the NPU SDK Python package:

```bash
cd ../packages
pip install rknn_toolkit2-1.5.2+b642f30c-cp310-cp310-linux_x86_64.whl
```

Then install PyCharm.

Hello World

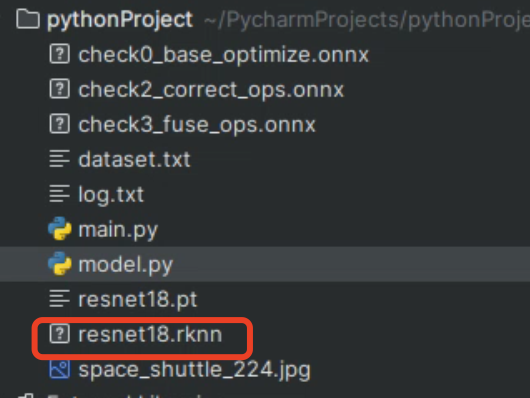

Here we convert the parameters of a PyTorch model into an RKNN-specific model. First, copy dataset.txt and space_shuttle_224.jpg from examples/pytorch/resnet18 into the PyCharm project.
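dataset.txt is the list of calibration images that rknn.build(dataset=...) uses for INT8 quantization. My understanding is that it is a plain text file with one image path per line, like the copy in the example folder. If you want to calibrate with your own pictures, a minimal sketch that generates such a list could look like this. The helper name `write_dataset_list` and the folder you pass to it are hypothetical.

```python
from pathlib import Path


def write_dataset_list(image_dir, out_file="dataset.txt", exts=(".jpg", ".jpeg", ".png")):
    """Write the images in image_dir to out_file, one path per line.

    Assumption: rknn.build(dataset=...) reads one image path per line,
    as in the example dataset.txt shipped with rknn-toolkit2.
    """
    paths = sorted(p for p in Path(image_dir).iterdir() if p.suffix.lower() in exts)
    Path(out_file).write_text("".join(f"{p}\n" for p in paths))
    return paths

# Usage (hypothetical folder): write_dataset_list("calib_images")
```

A few dozen images that resemble the real inputs are usually a better calibration set than a single picture.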

Export the model

```python
import torch
from torchvision import models

if __name__ == '__main__':
    net = models.resnet18(pretrained=True)  # ImageNet weights (newer torchvision prefers weights=...)
    net.eval()
    # Trace with a dummy input of the model's input shape; torch.rand avoids uninitialized memory
    trace_model = torch.jit.trace(net, torch.rand(1, 3, 224, 224))
    trace_model.save('./resnet18.pt')
```

Convert

```python
from rknn.api import RKNN

if __name__ == '__main__':
    rknn = RKNN(verbose=True, verbose_file='log.txt')
    # Normalization applied to the raw RGB input, and the target chip
    rknn.config(mean_values=[[123.675, 116.28, 103.53]],
                std_values=[[58.395, 58.395, 58.395]],
                target_platform='rk3568')
    rknn.load_pytorch(model='./resnet18.pt', input_size_list=[[1, 3, 224, 224]])
    # INT8 quantization calibrated on the images listed in dataset.txt
    rknn.build(do_quantization=True, dataset='dataset.txt', rknn_batch_size=1)
    rknn.export_rknn('resnet18.rknn')
    rknn.release()
```
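The mean_values and std_values passed to rknn.config() are the ImageNet channel means (0.485, 0.456, 0.406) and the red-channel standard deviation (0.229), each multiplied by 255. This puts them in the 0-255 pixel range. The sketch below assumes the toolkit normalizes each input channel as (x - mean) / std before the first layer, and shows that arithmetic. `normalize_hwc` is an illustrative helper, not part of the RKNN API.

```python
import numpy as np

# Values passed to rknn.config() in the conversion script
MEAN = np.array([123.675, 116.28, 103.53], dtype=np.float32)
STD = np.array([58.395, 58.395, 58.395], dtype=np.float32)


def normalize_hwc(img_u8):
    """Per-channel (x - mean) / std on an HxWx3 uint8 RGB image.

    Assumption: this mirrors what the toolkit does internally, so the
    inference scripts can feed raw RGB pixels.
    """
    return (img_u8.astype(np.float32) - MEAN) / STD
```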


Model inference

```python
import cv2
import torch
from rknn.api import RKNN

if __name__ == '__main__':
    rknn = RKNN(verbose=True, verbose_file='log.txt')
    rknn.config(mean_values=[[123.675, 116.28, 103.53]],
                std_values=[[58.395, 58.395, 58.395]],
                target_platform='rk3568')
    rknn.load_pytorch(model='./resnet18.pt', input_size_list=[[1, 3, 224, 224]])
    rknn.build(do_quantization=True, dataset='dataset.txt', rknn_batch_size=1)
    rknn.export_rknn('resnet18.rknn')
    # target=None runs the model in the PC simulator instead of on a connected board
    rknn.init_runtime(target=None, target_sub_class=None, device_id=None, perf_debug=False,
                      eval_mem=False, async_mode=False, core_mask=RKNN.NPU_CORE_AUTO)
    img = cv2.imread('./space_shuttle_224.jpg')
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    outputs = rknn.inference(inputs=[img], data_format='nhwc')
    # Index of the most probable ImageNet class
    print(torch.argmax(torch.softmax(torch.Tensor(outputs[0][0]), dim=0)))
    rknn.release()
```

Output:

```
tensor(812)
```

The image we ran inference on is space_shuttle_224.jpg.

The ResNet18 parameters were trained on ImageNet, a 1000-class dataset, and index 812 corresponds to the label "space shuttle".

So the inference result is correct. Note that in the simulation environment we cannot run inference with resnet18.rknn directly: that model file can only be used for inference on the actual development board. That is why the script above rebuilds the model from resnet18.pt before calling init_runtime().
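The script prints torch.argmax(torch.softmax(...)). Softmax is monotonic, so the arg-max of the probabilities equals the arg-max of the raw logits. The softmax only matters when you want to report probabilities. Below is a NumPy sketch of that post-processing, with a numerically stable softmax and a simple top-k. Both function names are illustrative.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax: subtracting the max leaves the result unchanged."""
    z = np.asarray(logits, dtype=np.float64)
    e = np.exp(z - z.max())
    return e / e.sum()


def top_k(probs, k=5):
    """Return (class_index, probability) pairs, highest probability first."""
    idx = np.argsort(probs)[::-1][:k]
    return [(int(i), float(probs[i])) for i in idx]
```

Subtracting the maximum matters for large logits: np.exp(1000.0) overflows to inf, while np.exp(0.0) does not.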

Accuracy analysis

```python
from rknn.api import RKNN

if __name__ == '__main__':
    rknn = RKNN(verbose=True, verbose_file='log.txt')
    rknn.config(mean_values=[[123.675, 116.28, 103.53]],
                std_values=[[58.395, 58.395, 58.395]],
                target_platform='rk3568')
    rknn.load_pytorch(model='./resnet18.pt', input_size_list=[[1, 3, 224, 224]])
    rknn.build(do_quantization=True, dataset='dataset.txt', rknn_batch_size=1)
    rknn.export_rknn('resnet18.rknn')
    # Compare float and quantized results layer by layer; intermediate dumps go to ./snapshot
    rknn.accuracy_analysis(inputs=["space_shuttle_224.jpg"], output_dir="snapshot", target=None, device_id=None)
    rknn.release()
```
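The simulator_error values in the report are all very close to 1.0. That is consistent with a cosine similarity between each layer's float reference output and its quantized output. As I understand it, "entire" measures the error accumulated through the whole quantized network up to that layer, while "single" feeds the layer float inputs so that only its own quantization error counts. The sketch below computes that metric. The symmetric int8 round trip is a toy stand-in for the toolkit's quantizer (an assumption), and both helper names are hypothetical.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two tensors, flattened to vectors."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def fake_int8_roundtrip(x, scale):
    """Hypothetical symmetric int8 quantize/dequantize, only to produce a 'quantized' copy."""
    return np.clip(np.round(x / scale), -128, 127) * scale
```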

Running the accuracy-analysis script prints the following per-layer report:

```
layer_name                    simulator_error
                              entire    single
------------------------------------------------------
[Input] x.3                   1.000000  1.000000
[exDataConvert] x.3_int8      0.999987  0.999987
[Conv] input.11
[Relu] 82                     0.999908  0.999908
[MaxPool] input.13            0.999946  0.999982
[Conv] input.19
[Relu] 120                    0.999657  0.999785
[Conv] out.3                  0.999644  0.999964
[Add] input.25
[Relu] 142                    0.999784  0.999965
[Conv] input.29
[Relu] 169                    0.999179  0.999821
[Conv] out.5                  0.998712  0.999934
[Add] input.33
[Relu] 191                    0.999385  0.999959
[Conv] input.37
[Relu] 222                    0.998591  0.999908
[Conv] out.7                  0.998813  0.999929
[Conv] identity.2             0.998559  0.999720
[Add] input.43
[Relu] 267                    0.998864  0.999918
[Conv] input.47
[Relu] 294                    0.998188  0.999869
[Conv] out.9                  0.998970  0.999969
[Add] input.51
[Relu] 316                    0.998615  0.999916
[Conv] input.55
[Relu] 347                    0.998649  0.999901
[Conv] out.11                 0.998943  0.999943
[Conv] identity.4             0.999071  0.999812
[Add] input.61
[Relu] 392                    0.998877  0.999931
[Conv] input.65
[Relu] 419                    0.998469  0.999886
[Conv] out.13                 0.999253  0.999970
[Add] input.69
[Relu] 441                    0.998728  0.999915
[Conv] input.8
[Relu] 472                    0.998261  0.999917
[Conv] out.2                  0.999045  0.999963
[Conv] identity.1             0.998884  0.999855
[Add] input.12
[Relu] 517                    0.998270  0.999901
[Conv] input.7
[Relu] 544                    0.998046  0.999916
[Conv] out.1                  0.998175  0.999964
[Add] input.3
[Relu] 566                    0.998509  0.999976
[Conv] x.1                    0.999332  0.999990
[Conv] 572_conv               0.999125  0.999914
[Reshape] 572_int8            0.999125  0.999933
[exDataConvert] 572           0.999125  0.999933
```

Development board environment setup

The development board I use is an RK3568 running Ubuntu 20.04.

First, connect the development board to the PC from before with a USB data cable and run

```bash
adb devices
```

Output:

```
List of devices attached
62295353cf78c61d	device
```

This indicates a successful connection.

Check the board's IP address:

```bash
adb shell
```

This opens a shell on the board. Inside it, run

```bash
ifconfig
```

With the IP address, you can connect directly over ssh and no longer need adb.

Check the board's architecture:

```bash
uname -a
```

which returns

```
Linux ubuntu2004 5.10.160 #8 SMP Thu Oct 19 14:04:52 CST 2023 aarch64 aarch64 aarch64 GNU/Linux
```

Note that the board is based on the Arm architecture (aarch64). Download the matching Anaconda installer from https://repo.anaconda.com/archive/Anaconda3-2023.09-0-Linux-aarch64.sh

After the installation finishes, create a Python 3.10 environment here as well:

```bash
conda create -n py310 python=3.10.0
source activate
conda activate py310
```

Install the dependencies:

```bash
sudo apt-get install gcc g++
```

If the installation fails, you can do the following instead:

```bash
sudo apt-get install aptitude
sudo aptitude install gcc g++
```

Install the Python dependencies:

```bash
pip install numpy==1.23.4 -i https://mirror.baidu.com/pypi/simple
pip install opencv-python==4.5.5.64 -i https://mirror.baidu.com/pypi/simple
```

Copy the rknn_toolkit_lite2 folder from the rknn-toolkit2-1.5.2 folder to the development board and install the NPU-lite Python package:

```bash
cd rknn_toolkit_lite2/packages
pip install rknn_toolkit_lite2-1.5.2-cp310-cp310-linux_aarch64.whl -i https://mirror.baidu.com/pypi/simple
```

To run inference with the RKNN model, put the space shuttle image from before and the resnet18.rknn file exported on the PC into the project folder, and create a new file:

```bash
vim main.py
```

with the following contents:

```python
import cv2
import numpy as np
from rknnlite.api import RKNNLite


def show_outputs(output):
    # Print the five highest probabilities with their class indices
    output_sorted = sorted(output, reverse=True)
    top5_str = '\n-----TOP 5-----\n'
    for i in range(5):
        value = output_sorted[i]
        index = np.where(output == value)
        for j in range(len(index)):
            if (i + j) >= 5:
                break
            if value > 0:
                topi = '{}: {}\n'.format(index[j], value)
            else:
                topi = '-1: 0.0\n'
            top5_str += topi
    print(top5_str)


def softmax(x):
    return np.exp(x) / np.sum(np.exp(x))


if __name__ == '__main__':
    rknn = RKNNLite()
    rknn.load_rknn('./resnet18.rknn')
    rknn.init_runtime()
    img = cv2.imread('./space_shuttle_224.jpg')
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    # mean/std normalization was configured with rknn.config() when the model was converted
    outputs = rknn.inference(inputs=[img], data_format=None)
    show_outputs(softmax(np.array(outputs[0][0])))
    rknn.release()
```

Run:

```bash
python main.py
```

The results are as follows:

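```
-----TOP 5-----
[812]: 0.9996760487556458
[404]: 0.00024927023332566023
[657]: 1.449744013370946e-05
[466 833]: 9.023910024552606e-06
[466 833]: 9.023910024552606e-06
```

Classes 466 and 833 have exactly the same score, so show_outputs prints that pair for both the fourth and the fifth place.

YOLOv5 inference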

We can first download a ready-made YOLOv5 RKNN model file, so that we don't have to generate it ourselves. If you train the model parameters yourself, you need to convert them into an RKNN model file on your PC.

Download address: https://github.com/airockchip/rknn_model_zoo

Upload rknn_model_zoo/models/CV/object_detection/yolo/yolov5/deploy_models/toolkit2/model_cvt/RK356X/yolov5s_tk2_RK356X_i8.rknn to the project folder on the development board. Then upload bus.jpg from the SDK (RK_NPU_SDK/RK_NPU_SDK_1.5.2/release/rknn-toolkit2-1.5.2/examples/onnx/yolov5/bus.jpg) to the same folder.

Create a new main.py with the following contents:

```python
import cv2
import numpy as np
from rknnlite.api import RKNNLite

OBJ_THRESH = 0.25  # minimum box confidence and class score
NMS_THRESH = 0.45  # IoU threshold used by NMS
IMG_SIZE = 640     # the model takes a 640x640 RGB image

CLASSES = ("person", "bicycle", "car", "motorbike ", "aeroplane ", "bus ", "train", "truck ", "boat", "traffic light",
           "fire hydrant", "stop sign ", "parking meter", "bench", "bird", "cat", "dog ", "horse ", "sheep", "cow", "elephant",
           "bear", "zebra ", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite",
           "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife ",
           "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza ", "donut", "cake", "chair", "sofa",
           "pottedplant", "bed", "diningtable", "toilet ", "tvmonitor", "laptop ", "mouse ", "remote ", "keyboard ", "cell phone", "microwave ",
           "oven ", "toaster", "sink", "refrigerator ", "book", "clock", "vase", "scissors ", "teddy bear ", "hair drier", "toothbrush ")


def xywh2xyxy(x):
    # Convert [x, y, w, h] to [x1, y1, x2, y2]
    y = np.copy(x)
    y[:, 0] = x[:, 0] - x[:, 2] / 2  # top left x
    y[:, 1] = x[:, 1] - x[:, 3] / 2  # top left y
    y[:, 2] = x[:, 0] + x[:, 2] / 2  # bottom right x
    y[:, 3] = x[:, 1] + x[:, 3] / 2  # bottom right y
    return y


def process(input, mask, anchors):
    # Decode one head of shape [grid_h, grid_w, 3, 85] into boxes, confidences and class scores
    anchors = [anchors[i] for i in mask]
    grid_h, grid_w = map(int, input.shape[0:2])

    box_confidence = input[..., 4]
    box_confidence = np.expand_dims(box_confidence, axis=-1)
    box_class_probs = input[..., 5:]

    box_xy = input[..., :2] * 2 - 0.5

    col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)
    row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)
    col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)
    grid = np.concatenate((col, row), axis=-1)

    box_xy += grid
    box_xy *= int(IMG_SIZE / grid_h)  # grid cells -> pixels (stride)

    box_wh = pow(input[..., 2:4] * 2, 2)
    box_wh = box_wh * anchors

    box = np.concatenate((box_xy, box_wh), axis=-1)
    return box, box_confidence, box_class_probs


def filter_boxes(boxes, box_confidences, box_class_probs):
    # Keep boxes whose objectness and best class score both reach OBJ_THRESH
    boxes = boxes.reshape(-1, 4)
    box_confidences = box_confidences.reshape(-1)
    box_class_probs = box_class_probs.reshape(-1, box_class_probs.shape[-1])

    _box_pos = np.where(box_confidences >= OBJ_THRESH)
    boxes = boxes[_box_pos]
    box_confidences = box_confidences[_box_pos]
    box_class_probs = box_class_probs[_box_pos]

    class_max_score = np.max(box_class_probs, axis=-1)
    classes = np.argmax(box_class_probs, axis=-1)
    _class_pos = np.where(class_max_score >= OBJ_THRESH)

    boxes = boxes[_class_pos]
    classes = classes[_class_pos]
    scores = (class_max_score * box_confidences)[_class_pos]
    return boxes, classes, scores


def nms_boxes(boxes, scores):
    # Greedy NMS on [x1, y1, x2, y2] boxes; returns the indices to keep
    x = boxes[:, 0]
    y = boxes[:, 1]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]

    areas = w * h
    order = scores.argsort()[::-1]

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)

        xx1 = np.maximum(x[i], x[order[1:]])
        yy1 = np.maximum(y[i], y[order[1:]])
        xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
        yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

        w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
        h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
        inter = w1 * h1

        ovr = inter / (areas[i] + areas[order[1:]] - inter)
        inds = np.where(ovr <= NMS_THRESH)[0]
        order = order[inds + 1]
    keep = np.array(keep)
    return keep


def draw(image, boxes, scores, classes):
    for box, score, cl in zip(boxes, scores, classes):
        left, top, right, bottom = box  # boxes are [x1, y1, x2, y2]
        print('class: {}, score: {}'.format(CLASSES[cl], score))
        print('box coordinate left,top,right,down: [{}, {}, {}, {}]'.format(left, top, right, bottom))
        left = int(left)
        top = int(top)
        right = int(right)
        bottom = int(bottom)

        cv2.rectangle(image, (left, top), (right, bottom), (255, 0, 0), 2)
        cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),
                    (left, top - 6),
                    cv2.FONT_HERSHEY_SIMPLEX,
                    0.6, (0, 0, 255), 2)


def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):
    # Resize and pad image while meeting stride-multiple constraints (not used below)
    shape = im.shape[:2]  # current shape [height, width]
    if isinstance(new_shape, int):
        new_shape = (new_shape, new_shape)

    r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])

    # Compute padding
    ratio = r, r  # width, height ratios
    new_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))
    dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1]  # wh padding

    dw /= 2  # divide padding into 2 sides
    dh /= 2

    if shape[::-1] != new_unpad:  # resize
        im = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)
    top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))
    left, right = int(round(dw - 0.1)), int(round(dw + 0.1))
    im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color)  # add border
    return im, ratio, (dw, dh)


def yolov5_post_process(input_data):
    # Anchor (w, h) pairs; masks select the three anchors used by each head
    masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
    anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],
               [59, 119], [116, 90], [156, 198], [373, 326]]

    boxes, classes, scores = [], [], []
    for input, mask in zip(input_data, masks):
        b, c, s = process(input, mask, anchors)
        b, c, s = filter_boxes(b, c, s)
        boxes.append(b)
        classes.append(c)
        scores.append(s)

    boxes = np.concatenate(boxes)
    boxes = xywh2xyxy(boxes)
    classes = np.concatenate(classes)
    scores = np.concatenate(scores)

    # Run NMS separately for each class
    nboxes, nclasses, nscores = [], [], []
    for c in set(classes):
        inds = np.where(classes == c)
        b = boxes[inds]
        c = classes[inds]
        s = scores[inds]

        keep = nms_boxes(b, s)

        nboxes.append(b[keep])
        nclasses.append(c[keep])
        nscores.append(s[keep])

    if not nclasses and not nscores:
        return None, None, None

    boxes = np.concatenate(nboxes)
    classes = np.concatenate(nclasses)
    scores = np.concatenate(nscores)
    return boxes, classes, scores


if __name__ == '__main__':
    rknn = RKNNLite()
    rknn.load_rknn('./yolov5s_tk2_RK356X_i8.rknn')
    rknn.init_runtime()

    img = cv2.imread('./bus.jpg')
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = cv2.resize(img, (IMG_SIZE, IMG_SIZE))

    outputs = rknn.inference(inputs=[img])

    # Each output is [1, 255, H, W]: reshape to [3, 85, H, W], then transpose to [H, W, 3, 85]
    input0_data = outputs[0]
    input1_data = outputs[1]
    input2_data = outputs[2]

    input0_data = input0_data.reshape([3, -1] + list(input0_data.shape[-2:]))
    input1_data = input1_data.reshape([3, -1] + list(input1_data.shape[-2:]))
    input2_data = input2_data.reshape([3, -1] + list(input2_data.shape[-2:]))

    input_data = list()
    input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))
    input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))
    input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))

    boxes, classes, scores = yolov5_post_process(input_data)

    img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)
    if boxes is not None:
        draw(img_1, boxes, scores, classes)
    cv2.imwrite('result.jpg', img_1)
    rknn.release()
```

Run:

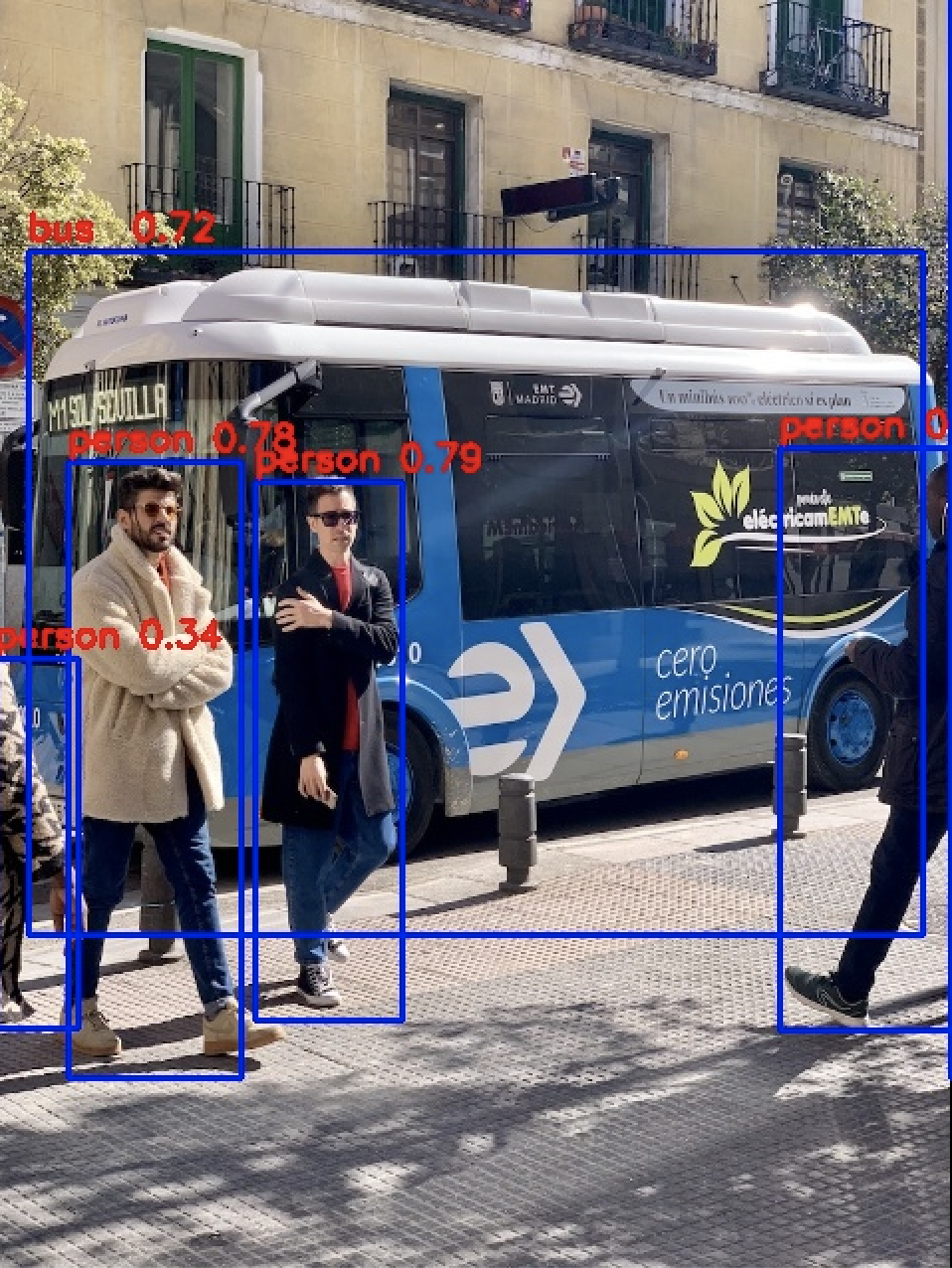

python main.pyresultado da operação

class: person, score: 0.786016583442688

box coordinate left,top,right,down: [210.50113344192505, 244.7387936115265, 284.80147886276245, 515.0964114665985]

class: person, score: 0.7838758230209351

box coordinate left,top,right,down: [116.20471256971359, 234.06367230415344, 203.35171443223953, 544.5328242778778]

class: person, score: 0.7641521096229553

box coordinate left,top,right,down: [474.45237481594086, 227.86068081855774, 564.7276788949966, 520.4304568767548]

class: person, score: 0.339653879404068

box coordinate left,top,right,down: [79.4285237789154, 333.9151940345764, 121.72851967811584, 519.2878088951111]

class: person, score: 0.20468413829803467

box coordinate left,top,right,down: [560.677864074707, -2.5390124320983887, 640.130485534668, 544.7765002250671]

class: bus , score: 0.7226678729057312

box coordinate left,top,right,down: [96.8691902756691, 128.06663250923157, 546.5100211501122, 472.1479060649872]A imagem do nosso raciocínio é a seguinte

Resultados de inferência
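Most of the work in main.py goes into turning the three raw output heads into boxes. For a 640×640 input, YOLOv5s produces heads of shape [1, 255, H, W] with H = W = 80, 40 and 20 (strides 8, 16 and 32), where 255 = 3 anchors × (4 box values + 1 objectness + 80 class scores). The sketch below isolates two steps: the reshape/transpose to [H, W, 3, 85], and the decoding of one anchor in one grid cell with the same formulas as process(). Like the script, it applies no sigmoid, which assumes the model's outputs are already activated. `to_grid_layout` and `decode_cell` are illustrative names.

```python
import numpy as np

NUM_CLASSES = 80
NUM_ANCHORS = 3


def to_grid_layout(head):
    """[1, 3*(5+C), H, W] -> [H, W, 3, 5+C], as done in main.py."""
    h, w = head.shape[-2:]
    return head.reshape(NUM_ANCHORS, 5 + NUM_CLASSES, h, w).transpose(2, 3, 0, 1)


def decode_cell(t, row, col, stride, anchor_wh):
    """Decode one anchor's x, y, w, h values into a center-size box in pixels.

    Assumption: t already went through a sigmoid inside the model.
    """
    cx = (t[0] * 2 - 0.5 + col) * stride
    cy = (t[1] * 2 - 0.5 + row) * stride
    w = (t[2] * 2) ** 2 * anchor_wh[0]
    h = (t[3] * 2) ** 2 * anchor_wh[1]
    return cx, cy, w, h
```

Because of the (2t)² term, a predicted box can be at most four times its anchor in each dimension.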

C++ YOLOv5 inference

- Use the framework directly

The framework can be downloaded from https://github.com/rockchip-linux/rknpu2

Put the downloaded rknpu2-master.zip on the development board, unzip it, and run

```bash
cd rknpu2-master/examples/rknn_yolov5_demo
chmod +x build-linux_RK3566_RK3568.sh
./build-linux_RK3566_RK3568.sh
```

This generates a build folder. Copy the yolov5s_tk2_RK356X_i8.rknn and bus.jpg files from before into the current folder and run

```bash
./build/build_linux_aarch64/rknn_yolov5_demo ./yolov5s_tk2_RK356X_i8.rknn ./bus.jpg
```

Output:

```
post process config: box_conf_threshold = 0.25, nms_threshold = 0.45
Loading mode...
sdk version: 1.4.0 (a10f100eb@2022-09-09T09:07:14) driver version: 0.8.8
model input num: 1, output num: 3
index=0, name=x.1, n_dims=4, dims=[1, 640, 640, 3], n_elems=1228800, size=1228800, w_stride = 640, size_with_stride=1228800, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003922
index=0, name=172, n_dims=4, dims=[1, 255, 80, 80], n_elems=1632000, size=1632000, w_stride = 0, size_with_stride=1632000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003921
index=1, name=173, n_dims=4, dims=[1, 255, 40, 40], n_elems=408000, size=408000, w_stride = 0, size_with_stride=408000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003920
index=2, name=174, n_dims=4, dims=[1, 255, 20, 20], n_elems=102000, size=102000, w_stride = 0, size_with_stride=102000, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003921
model is NHWC input fmt
model input height=640, width=640, channel=3
Read ./bus.jpg ...
img width = 640, img height = 640
once run use 119.293000 ms
loadLabelName ./model/coco_80_labels_list.txt
person @ (210 244 284 515) 0.786017
person @ (115 238 202 537) 0.783876
person @ (474 227 564 520) 0.764152
bus @ (96 128 546 472) 0.722668
person @ (79 333 121 519) 0.339654
person @ (560 0 640 544) 0.204684
save detect result to ./out.jpg
loop count = 10 , average run 115.777300 ms
```
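The tensor attributes printed by the demo use qnt_type=AFFINE with zp=-128 and scale≈0.003922 (about 1/255). This maps the int8 range -128..127 onto roughly 0..1. The postprocess.cpp file used in the next section converts between the two domains with qnt_f32_to_affine() and deqnt_affine_to_f32(). The sketch below mirrors those two functions in NumPy under the same formulas: real = (q - zp) * scale, and q = x / scale + zp, clipped to the int8 range and truncated toward zero as the C cast does. The function names are illustrative.

```python
import numpy as np


def quantize_affine(x, zp, scale):
    """float -> int8, mirroring qnt_f32_to_affine(): x / scale + zp, clipped, truncated toward zero."""
    q = np.clip(np.asarray(x, dtype=np.float32) / scale + zp, -128, 127)
    return np.trunc(q).astype(np.int8)


def dequantize_affine(q, zp, scale):
    """int8 -> float, mirroring deqnt_affine_to_f32(): (q - zp) * scale."""
    return (np.asarray(q, dtype=np.float32) - zp) * scale
```

Quantizing the 0.25 threshold once up front lets the C code compare raw int8 confidences against it, without dequantizing every grid cell.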

- Simple code development

Here we create two folders to hold the rknpu2 library files. Their location is arbitrary; I use

```
/home/user/rknn/include
/home/user/rknn/lib
```

Put all the .so files from rknpu2-master/runtime/RK356X/Linux/librknn_api/aarch64 into /home/user/rknn/lib, and all the .h files from rknpu2-master/runtime/RK356X/Linux/librknn_api/include into /home/user/rknn/include.

Run

```bash
sudo vim /etc/ld.so.conf
```

and append the library directory at the end as a plain path. An `include` line expects configuration files, not a library directory:

```
/home/user/rknn/lib
```

Then run

```bash
sudo ldconfig
```

Install the C++ version of OpenCV. For this, you can refer to the tensorrtx/yolov5 build steps in the model deployment article.

Copy rknpu2-master/examples/rknn_yolov5_demo/model/coco_80_labels_list.txt into the current project folder, and copy the yolov5s_tk2_RK356X_i8.rknn and bus.jpg files from before into it as well. Here we only keep person detections and ignore the other classes.
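In main.cpp, this filtering is done by comparing each detection's label string with "person". The labels come from coco_80_labels_list.txt, which post_process() reads one line per class, so the line number is the class id. Below is a Python sketch of the same logic. The (class_id, score, box) tuple layout and both helper names are assumptions made for illustration.

```python
from pathlib import Path


def load_labels(path):
    """One class name per line, as in coco_80_labels_list.txt; list index = class id."""
    return [line.strip() for line in Path(path).read_text().splitlines()]


def keep_class(detections, labels, wanted="person"):
    """Keep hypothetical (class_id, score, box) tuples whose label equals `wanted`."""
    return [d for d in detections if labels[d[0]] == wanted]
```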

Create main.cpp with the following contents:

```cpp
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <iostream>
#include <string>
#include <vector>

#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc.hpp>

#include "rknn_api.h"
#include "postprocess.h"

using namespace std;
using namespace cv;

// Print the attributes (shape, layout, quantization) of a model tensor
static void dump_tensor_attr(rknn_tensor_attr *attr)
{
    string shape_str = attr->n_dims < 1 ? "" : to_string(attr->dims[0]);
    for (int i = 1; i < attr->n_dims; ++i)
    {
        shape_str += ", " + to_string(attr->dims[i]);
    }

    printf("  index=%d, name=%s, n_dims=%d, dims=[%s], n_elems=%d, size=%d, w_stride = %d, size_with_stride=%d, fmt=%s, "
           "type=%s, qnt_type=%s, "
           "zp=%d, scale=%f\n",
           attr->index, attr->name, attr->n_dims, shape_str.c_str(), attr->n_elems, attr->size, attr->w_stride,
           attr->size_with_stride, get_format_string(attr->fmt), get_type_string(attr->type),
           get_qnt_type_string(attr->qnt_type), attr->zp, attr->scale);
}

// Read sz bytes starting at offset ofst into a newly allocated buffer
static unsigned char *load_data(FILE *fp, size_t ofst, size_t sz)
{
    if (NULL == fp)
    {
        return NULL;
    }
    if (fseek(fp, ofst, SEEK_SET) != 0)
    {
        printf("blob seek failure.\n");
        return NULL;
    }
    unsigned char *data = (unsigned char *)malloc(sz);
    if (data == NULL)
    {
        printf("buffer malloc failure.\n");
        return NULL;
    }
    if (fread(data, 1, sz, fp) != sz)
    {
        printf("blob read failure.\n");
        free(data);
        return NULL;
    }
    return data;
}

// Load the whole .rknn file into memory
static unsigned char *load_model(const char *filename, int *model_size)
{
    FILE *fp = fopen(filename, "rb");
    if (NULL == fp)
    {
        printf("Open file %s failed.\n", filename);
        return NULL;
    }
    fseek(fp, 0, SEEK_END);
    int size = ftell(fp);
    unsigned char *data = load_data(fp, 0, size);
    fclose(fp);
    *model_size = size;
    return data;
}

int main()
{
    const float nms_threshold = 0.45;
    const float box_conf_threshold = 0.25;
    const int width = 640;  // model input size
    const int height = 640;
    const int channel = 3;

    const char *model_name = "./yolov5s_tk2_RK356X_i8.rknn";
    int model_data_size = 0;
    unsigned char *model_data = load_model(model_name, &model_data_size);
    if (model_data == NULL)
    {
        return -1;
    }

    rknn_context ctx;
    if (rknn_init(&ctx, model_data, model_data_size, 0, NULL) < 0)
    {
        printf("rknn_init failed.\n");
        free(model_data);
        return -1;
    }

    // BGR -> RGB, then resize to the model input size
    Mat src = imread("./bus.jpg");
    if (src.empty())
    {
        printf("Read ./bus.jpg failed.\n");
        rknn_destroy(ctx);
        free(model_data);
        return -1;
    }
    Mat dst, img;
    cvtColor(src, dst, COLOR_BGR2RGB);
    resize(dst, img, Size(width, height));

    rknn_input inputs[1];
    memset(inputs, 0, sizeof(inputs));
    inputs[0].index = 0;
    inputs[0].type = RKNN_TENSOR_UINT8;
    inputs[0].size = width * height * channel;
    inputs[0].fmt = RKNN_TENSOR_NHWC;
    inputs[0].pass_through = 0;
    inputs[0].buf = img.data;
    rknn_inputs_set(ctx, 1, inputs);

    // Keep the three outputs as raw int8; post_process() dequantizes them itself
    rknn_output outputs[3];
    memset(outputs, 0, sizeof(outputs));
    for (int i = 0; i < 3; i++)
    {
        outputs[i].want_float = 0;
    }
    rknn_run(ctx, NULL);
    rknn_outputs_get(ctx, 3, outputs, NULL);

    // Query each output's zero point and scale
    rknn_tensor_attr output_attrs[3];
    memset(output_attrs, 0, sizeof(output_attrs));
    for (int i = 0; i < 3; i++)
    {
        output_attrs[i].index = i;
        rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(output_attrs[i]), sizeof(rknn_tensor_attr));
        dump_tensor_attr(&(output_attrs[i]));
    }

    detect_result_group_t detect_result_group;
    memset(&detect_result_group, 0, sizeof(detect_result_group));
    vector<float> out_scales;
    vector<int32_t> out_zps;
    for (int i = 0; i < 3; ++i)
    {
        out_scales.push_back(output_attrs[i].scale);
        out_zps.push_back(output_attrs[i].zp);
    }

    BOX_RECT pads;
    memset(&pads, 0, sizeof(BOX_RECT));
    // Ratio between the model input and the original image, used to map boxes back onto src
    float scale_w = (float)width / src.cols;
    float scale_h = (float)height / src.rows;
    post_process((int8_t *)outputs[0].buf, (int8_t *)outputs[1].buf, (int8_t *)outputs[2].buf, height, width,
                 box_conf_threshold, nms_threshold, pads, scale_w, scale_h, out_zps, out_scales, &detect_result_group);

    char text[256];
    for (int i = 0; i < detect_result_group.count; i++)
    {
        detect_result_t *det_result = &(detect_result_group.results[i]);
        // Only keep the "person" class
        if (strcmp(det_result->name, "person") == 0)
        {
            snprintf(text, sizeof(text), "%s %.1f%%", det_result->name, det_result->prop * 100);
            printf("%s @ (%d %d %d %d) %f\n", det_result->name, det_result->box.left, det_result->box.top,
                   det_result->box.right, det_result->box.bottom, det_result->prop);
            int x1 = det_result->box.left;
            int y1 = det_result->box.top;
            int x2 = det_result->box.right;
            int y2 = det_result->box.bottom;
            rectangle(src, Point(x1, y1), Point(x2, y2), Scalar(255, 0, 0), 3);
            putText(src, text, Point(x1, y1 + 12), FONT_HERSHEY_SIMPLEX, 0.4, Scalar(255, 255, 255));
        }
    }
    imwrite("./out.jpg", src);

    // Release the outputs, the context, the model buffer and the label strings
    rknn_outputs_release(ctx, 3, outputs);
    rknn_destroy(ctx);
    free(model_data);
    deinitPostProcess();
    return 0;
}
```

postprocess.h:

```cpp
#ifndef _RKNN_YOLOV5_DEMO_POSTPROCESS_H_
#define _RKNN_YOLOV5_DEMO_POSTPROCESS_H_

#include <stdint.h>
#include <vector>

#define OBJ_NAME_MAX_SIZE 16
#define OBJ_NUMB_MAX_SIZE 64
#define OBJ_CLASS_NUM 80
#define NMS_THRESH 0.45
#define BOX_THRESH 0.25
#define PROP_BOX_SIZE (5 + OBJ_CLASS_NUM)  // x, y, w, h, objectness + class scores

typedef struct _BOX_RECT
{
    int left;
    int right;
    int top;
    int bottom;
} BOX_RECT;

typedef struct __detect_result_t
{
    char name[OBJ_NAME_MAX_SIZE];
    BOX_RECT box;
    float prop;
} detect_result_t;

typedef struct _detect_result_group_t
{
    int id;
    int count;
    detect_result_t results[OBJ_NUMB_MAX_SIZE];
} detect_result_group_t;

int post_process(int8_t *input0, int8_t *input1, int8_t *input2, int model_in_h, int model_in_w,
                 float conf_threshold, float nms_threshold, BOX_RECT pads, float scale_w, float scale_h,
                 std::vector<int32_t> &qnt_zps, std::vector<float> &qnt_scales,
                 detect_result_group_t *group);

void deinitPostProcess();

#endif //_RKNN_YOLOV5_DEMO_POSTPROCESS_H_
```

postprocess.cpp:

```cpp
#include "postprocess.h"

#include <math.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/time.h>

#include <set>
#include <vector>

#define LABEL_NALE_TXT_PATH "./coco_80_labels_list.txt"

static char *labels[OBJ_CLASS_NUM];

// Anchor (w, h) pairs for the stride-8, stride-16 and stride-32 heads
const int anchor0[6] = {10, 13, 16, 30, 33, 23};
const int anchor1[6] = {30, 61, 62, 45, 59, 119};
const int anchor2[6] = {116, 90, 156, 198, 373, 326};

inline static int clamp(float val, int min, int max) { return val > min ? (val < max ? val : max) : min; }

// Read one line (without the trailing '\n') into a newly malloc'ed buffer
char *readLine(FILE *fp, char *buffer, int *len)
{
    int ch;
    int i = 0;
    size_t buff_len = 0;

    buffer = (char *)malloc(buff_len + 1);
    if (!buffer)
        return NULL; // Out of memory

    while ((ch = fgetc(fp)) != '\n' && ch != EOF)
    {
        buff_len++;
        void *tmp = realloc(buffer, buff_len + 1);
        if (tmp == NULL)
        {
            free(buffer);
            return NULL; // Out of memory
        }
        buffer = (char *)tmp;
        buffer[i] = (char)ch;
        i++;
    }
    buffer[i] = '\0';
    *len = buff_len;

    // Detect end of file
    if (ch == EOF && (i == 0 || ferror(fp)))
    {
        free(buffer);
        return NULL;
    }
    return buffer;
}

int readLines(const char *fileName, char *lines[], int max_line)
{
    FILE *file = fopen(fileName, "r");
    char *s = NULL;
    int i = 0;
    int n = 0;

    if (file == NULL)
    {
        printf("Open %s fail!\n", fileName);
        return -1;
    }

    while ((s = readLine(file, s, &n)) != NULL)
    {
        lines[i++] = s;
        if (i >= max_line)
            break;
    }
    fclose(file);
    return i;
}

int loadLabelName(const char *locationFilename, char *label[])
{
    printf("loadLabelName %s\n", locationFilename);
    // Report a missing label file to the caller instead of always returning 0
    return readLines(locationFilename, label, OBJ_CLASS_NUM) < 0 ? -1 : 0;
}

// IoU of two boxes given as corners (uses the +1 pixel convention)
static float CalculateOverlap(float xmin0, float ymin0, float xmax0, float ymax0, float xmin1, float ymin1, float xmax1,
                              float ymax1)
{
    float w = fmax(0.f, fmin(xmax0, xmax1) - fmax(xmin0, xmin1) + 1.0);
    float h = fmax(0.f, fmin(ymax0, ymax1) - fmax(ymin0, ymin1) + 1.0);
    float i = w * h;
    float u = (xmax0 - xmin0 + 1.0) * (ymax0 - ymin0 + 1.0) + (xmax1 - xmin1 + 1.0) * (ymax1 - ymin1 + 1.0) - i;
    return u <= 0.f ? 0.f : (i / u);
}

// Greedy NMS for one class: order[] holds box indices sorted by descending score,
// suppressed entries are set to -1
static int nms(int validCount, std::vector<float> &outputLocations, std::vector<int> classIds, std::vector<int> &order,
               int filterId, float threshold)
{
    for (int i = 0; i < validCount; ++i)
    {
        int n = order[i];
        // classIds is indexed by box index, not by sorted position
        if (n == -1 || classIds[n] != filterId)
        {
            continue;
        }
        for (int j = i + 1; j < validCount; ++j)
        {
            int m = order[j];
            if (m == -1 || classIds[m] != filterId)
            {
                continue;
            }
            float xmin0 = outputLocations[n * 4 + 0];
            float ymin0 = outputLocations[n * 4 + 1];
            float xmax0 = outputLocations[n * 4 + 0] + outputLocations[n * 4 + 2];
            float ymax0 = outputLocations[n * 4 + 1] + outputLocations[n * 4 + 3];

            float xmin1 = outputLocations[m * 4 + 0];
            float ymin1 = outputLocations[m * 4 + 1];
            float xmax1 = outputLocations[m * 4 + 0] + outputLocations[m * 4 + 2];
            float ymax1 = outputLocations[m * 4 + 1] + outputLocations[m * 4 + 3];

            float iou = CalculateOverlap(xmin0, ymin0, xmax0, ymax0, xmin1, ymin1, xmax1, ymax1);
            if (iou > threshold)
            {
                order[j] = -1;
            }
        }
    }
    return 0;
}

// Sort input in descending order, applying the same permutation to indices
static int quick_sort_indice_inverse(std::vector<float> &input, int left, int right, std::vector<int> &indices)
{
    float key;
    int key_index;
    int low = left;
    int high = right;
    if (left < right)
    {
        key_index = indices[left];
        key = input[left];
        while (low < high)
        {
            while (low < high && input[high] <= key)
            {
                high--;
            }
            input[low] = input[high];
            indices[low] = indices[high];
            while (low < high && input[low] >= key)
            {
                low++;
            }
            input[high] = input[low];
            indices[high] = indices[low];
        }
        input[low] = key;
        indices[low] = key_index;
        quick_sort_indice_inverse(input, left, low - 1, indices);
        quick_sort_indice_inverse(input, low + 1, right, indices);
    }
    return low;
}

static float sigmoid(float x) { return 1.0 / (1.0 + expf(-x)); }

static float unsigmoid(float y) { return -1.0 * logf((1.0 / y) - 1.0); }

inline static int32_t __clip(float val, float min, float max)
{
    float f = val <= min ? min : (val >= max ? max : val);
    return f;
}

// float -> int8 with affine quantization parameters
static int8_t qnt_f32_to_affine(float f32, int32_t zp, float scale)
{
    float dst_val = (f32 / scale) + zp;
    int8_t res = (int8_t)__clip(dst_val, -128, 127);
    return res;
}

// int8 -> float with affine quantization parameters
static float deqnt_affine_to_f32(int8_t qnt, int32_t zp, float scale) { return ((float)qnt - (float)zp) * scale; }

// Decode one NCHW int8 output head; appends [x, y, w, h] boxes, scores and class ids
static int process(int8_t *input, int *anchor, int grid_h, int grid_w, int height, int width, int stride,
                   std::vector<float> &boxes, std::vector<float> &objProbs, std::vector<int> &classId, float threshold,
                   int32_t zp, float scale)
{
    int validCount = 0;
    int grid_len = grid_h * grid_w;
    // Compare confidences in the int8 domain to avoid dequantizing every cell
    int8_t thres_i8 = qnt_f32_to_affine(threshold, zp, scale);
    for (int a = 0; a < 3; a++)
    {
        for (int i = 0; i < grid_h; i++)
        {
            for (int j = 0; j < grid_w; j++)
            {
                int8_t box_confidence = input[(PROP_BOX_SIZE * a + 4) * grid_len + i * grid_w + j];
                if (box_confidence >= thres_i8)
                {
                    int offset = (PROP_BOX_SIZE * a) * grid_len + i * grid_w + j;
                    int8_t *in_ptr = input + offset;
                    float box_x = (deqnt_affine_to_f32(*in_ptr, zp, scale)) * 2.0 - 0.5;
                    float box_y = (deqnt_affine_to_f32(in_ptr[grid_len], zp, scale)) * 2.0 - 0.5;
                    float box_w = (deqnt_affine_to_f32(in_ptr[2 * grid_len], zp, scale)) * 2.0;
                    float box_h = (deqnt_affine_to_f32(in_ptr[3 * grid_len], zp, scale)) * 2.0;
                    box_x = (box_x + j) * (float)stride;
                    box_y = (box_y + i) * (float)stride;
                    box_w = box_w * box_w * (float)anchor[a * 2];
                    box_h = box_h * box_h * (float)anchor[a * 2 + 1];
                    box_x -= (box_w / 2.0);
                    box_y -= (box_h / 2.0);

                    // Find the best class for this anchor
                    int8_t maxClassProbs = in_ptr[5 * grid_len];
                    int maxClassId = 0;
                    for (int k = 1; k < OBJ_CLASS_NUM; ++k)
                    {
                        int8_t prob = in_ptr[(5 + k) * grid_len];
                        if (prob > maxClassProbs)
                        {
                            maxClassId = k;
                            maxClassProbs = prob;
                        }
                    }
                    if (maxClassProbs > thres_i8)
                    {
                        objProbs.push_back((deqnt_affine_to_f32(maxClassProbs, zp, scale)) * (deqnt_affine_to_f32(box_confidence, zp, scale)));
                        classId.push_back(maxClassId);
                        validCount++;
                        boxes.push_back(box_x);
                        boxes.push_back(box_y);
                        boxes.push_back(box_w);
                        boxes.push_back(box_h);
                    }
                }
            }
        }
    }
    return validCount;
}

int post_process(int8_t *input0, int8_t *input1, int8_t *input2, int model_in_h, int model_in_w, float conf_threshold,
                 float nms_threshold, BOX_RECT pads, float scale_w, float scale_h, std::vector<int32_t> &qnt_zps,
                 std::vector<float> &qnt_scales, detect_result_group_t *group)
{
    // Load the label names once
    static int init = -1;
    if (init == -1)
    {
        int ret = 0;
        ret = loadLabelName(LABEL_NALE_TXT_PATH, labels);
        if (ret < 0)
        {
            return -1;
        }
        init = 0;
    }
    memset(group, 0, sizeof(detect_result_group_t));

    std::vector<float> filterBoxes;
    std::vector<float> objProbs;
    std::vector<int> classId;

    // stride 8
    int stride0 = 8;
    int grid_h0 = model_in_h / stride0;
    int grid_w0 = model_in_w / stride0;
    int validCount0 = 0;
    validCount0 = process(input0, (int *)anchor0, grid_h0, grid_w0, model_in_h, model_in_w, stride0, filterBoxes, objProbs,
                          classId, conf_threshold, qnt_zps[0], qnt_scales[0]);

    // stride 16
    int stride1 = 16;
    int grid_h1 = model_in_h / stride1;
    int grid_w1 = model_in_w / stride1;
    int validCount1 = 0;
    validCount1 = process(input1, (int *)anchor1, grid_h1, grid_w1, model_in_h, model_in_w, stride1, filterBoxes, objProbs,
                          classId, conf_threshold, qnt_zps[1], qnt_scales[1]);

    // stride 32
    int stride2 = 32;
    int grid_h2 = model_in_h / stride2;
    int grid_w2 = model_in_w / stride2;
    int validCount2 = 0;
    validCount2 = process(input2, (int *)anchor2, grid_h2, grid_w2, model_in_h, model_in_w, stride2, filterBoxes, objProbs,
                          classId, conf_threshold, qnt_zps[2], qnt_scales[2]);

    int validCount = validCount0 + validCount1 + validCount2;
    // no object detected
    if (validCount <= 0)
    {
        return 0;
    }

    std::vector<int> indexArray;
    for (int i = 0; i < validCount; ++i)
    {
        indexArray.push_back(i);
    }

    // Sort scores in descending order (indexArray follows the same permutation)
    quick_sort_indice_inverse(objProbs, 0, validCount - 1, indexArray);

    std::set<int> class_set(std::begin(classId), std::end(classId));
    for (auto c : class_set)
    {
        nms(validCount, filterBoxes, classId, indexArray, c, nms_threshold);
    }

    int last_count = 0;
    group->count = 0;
    /* box valid detect target */
    for (int i = 0; i < validCount; ++i)
    {
        if (indexArray[i] == -1 || last_count >= OBJ_NUMB_MAX_SIZE)
        {
            continue;
        }
        int n = indexArray[i];

        float x1 = filterBoxes[n * 4 + 0] - pads.left;
        float y1 = filterBoxes[n * 4 + 1] - pads.top;
        float x2 = x1 + filterBoxes[n * 4 + 2];
        float y2 = y1 + filterBoxes[n * 4 + 3];
        int id = classId[n];
        float obj_conf = objProbs[i];

        // Clamp to the model input and scale back to the original image
        group->results[last_count].box.left = (int)(clamp(x1, 0, model_in_w) / scale_w);
        group->results[last_count].box.top = (int)(clamp(y1, 0, model_in_h) / scale_h);
        group->results[last_count].box.right = (int)(clamp(x2, 0, model_in_w) / scale_w);
        group->results[last_count].box.bottom = (int)(clamp(y2, 0, model_in_h) / scale_h);
        group->results[last_count].prop = obj_conf;
        char *label = labels[id];
        strncpy(group->results[last_count].name, label, OBJ_NAME_MAX_SIZE);

        // printf("result %2d: (%4d, %4d, %4d, %4d), %s\n", i, group->results[last_count].box.left,
        // group->results[last_count].box.top,
        // group->results[last_count].box.right, group->results[last_count].box.bottom, label);
        last_count++;
    }
    group->count = last_count;

    return 0;
}

void deinitPostProcess()
{
    for (int i = 0; i < OBJ_CLASS_NUM; i++)
    {
        if (labels[i] != nullptr)
        {
            free(labels[i]);
            labels[i] = nullptr;
        }
    }
}
```

Makefile:

EXE=main

INCLUDE=/home/user/rknn/include/

LIBPATH=/home/user/rknn/lib/

PKGS=opencv4

CFLAGS= -I$(INCLUDE)

CFLAGS+= `pkg-config --cflags $(PKGS)`

LIBS= `pkg-config --libs $(PKGS)`

LIBS+= -lrknn_api

LIBS+= -L$(LIBPATH)

CXX_OBJECTS := $(patsubst %.cpp,%.o,$(shell find . -name "*.cpp"))

DEP_FILES =$(patsubst %.o, %.d, $(CXX_OBJECTS))

$(EXE): $(CXX_OBJECTS)

$(CXX) $(CXX_OBJECTS) -o $(EXE) $(LIBS)

%.o: %.cpp

$(CXX) -c -o $@ $(CFLAGS) $(LIBS) $<

clean:

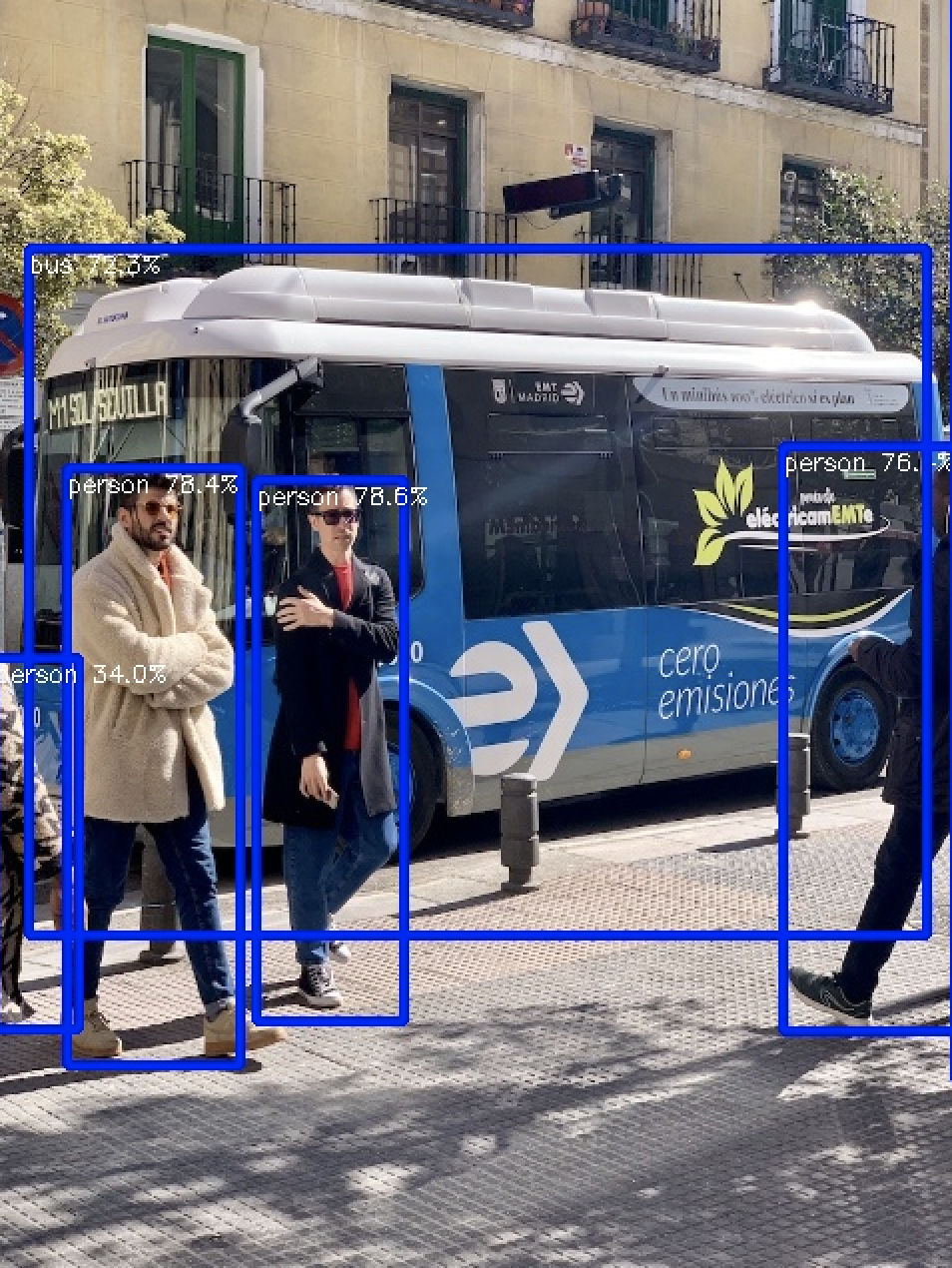

rm -rf $(CXX_OBJECTS) $(DEP_FILES) $(EXE)Resultado dos testes

Você pode ver aqui que o barramento não foi detectado.
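Both main.py and postprocess.cpp end with the same step. They sort the candidates by score and run greedy non-maximum suppression separately for each class. CalculateOverlap adds 1 to widths and heights, while nms_boxes adds a tiny epsilon. Below is a minimal pure-Python sketch of that step on [x1, y1, x2, y2] boxes, without either pixel convention. `iou_xyxy` and `nms` are illustrative names. The single-class `nms` is meant to be called once per class, as both implementations do.

```python
def iou_xyxy(a, b):
    """IoU of two [x1, y1, x2, y2] boxes (continuous coordinates, no +1 pixel convention)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.45):
    """Greedy NMS for one class: keep the best box, drop boxes that overlap it above the threshold."""
    order = sorted(range(len(scores)), key=lambda k: scores[k], reverse=True)
    keep = []
    while order:
        i = order.pop(0)
        keep.append(i)
        order = [j for j in order if iou_xyxy(boxes[i], boxes[j]) <= iou_thresh]
    return keep
```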