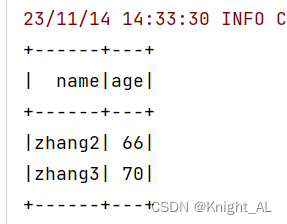

シンプルなケース

import org.apache.spark.sql.SparkSession

import org.junit.Test

case class Person(id:Int,name:String,sex:String,age:Int)

class DataSetCreate {

val spark = SparkSession

.builder()

.appName("test")

.master("local[4]")

.getOrCreate()

import spark.implicits._

@Test

def createData():Unit={

val list = List(Person(1,"zhangsan","man",10),

Person(2,"zhang2","woman",66),

Person(3,"zhang3","man",70),

Person(4,"zhang4","man",22))

val df = list.toDF()

//TODO 1.将dataFrame/dataSet数据集注册成表

df.createOrReplaceTempView("person")

spark.sql(

"""

|select

|name,age

|from person

|where age >=30

|""".stripMargin).show()

}

}

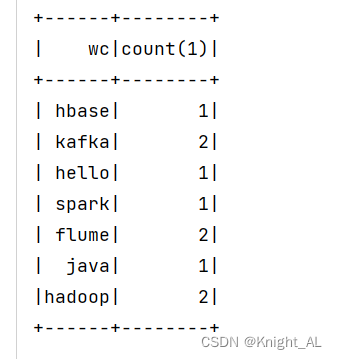

小さなケースを書く

- 各単語の番号を取得する

情報元

hello java

spark hadoop flume kafka

hbase kafka flume hadoop

横方向ビューのexplode(split(value," "))は列を行に変換します。

import org.apache.spark.sql.SparkSession

import org.junit.Test

class DataSetCreate {

val spark = SparkSession

.builder()

.appName("test")

.master("local[4]")

.getOrCreate()

import spark.implicits._

@Test

def createData():Unit={

val ds = spark.read.textFile("src/main/resources/wc.txt")

ds.createOrReplaceTempView("wordCount")

spark.sql(

"""

|select

| wc,

| count(1)

|from wordCount Lateral View explode(split(value," ")) as wc

|group by wc;

|""".stripMargin).show()

}

}