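Preface

Cold start time is one of the most important metrics of the App experience, and in e-commerce Apps it has a decisive influence on user retention. It usually refers to the time from App process start to the first frame of the home page, but from the user's point of view it should run from the moment the user taps the App icon until the home page content is fully displayed.

Splitting startup work into tasks and organizing them into a directed acyclic graph (DAG) is now standard for the startup framework of componentized Apps. However, given the performance limits of mobile devices, a highly concurrent design that is used carelessly often causes frequent lock contention, blocked disk IO, and similar delays. How to go one step further, making maximum use of limited resources within the limited startup window and compressing main-thread time as far as possible while keeping business functions stable, is the topic of this article.

This article describes how we reduced Dewu App's online startup metric by 10% and its offline metric by 34%, moving it into the Top 3 among e-commerce Apps of the same type, through unified control of system resources during startup, on-demand allocation, and staggered loading.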

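I. Metric Selection

Traditional performance monitoring usually takes the Application's attachBaseContext callback as the start point and the execution of the home page's decorView.postDraw task as the end point, but this does not capture the time spent loading dex files or initializing ContentProviders.

Therefore, to get closer to the real user experience, we added an offline user-perceived metric on top of the startup speed monitoring metric. By analyzing a screen recording frame by frame, we take the frame where the App icon's tap animation starts playing (the icon darkens) as the start frame and the first frame where home page content appears as the end frame, and use the difference as the startup time.

Example: the startup runs from 03:00 to 03:88, so the startup time is 880ms.

II. Application Optimization

Depending on the business scenario, the App may land on different home pages (community / trade / H5), but the Application flow is basically fixed and rarely changes, so Application optimization is our first choice.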

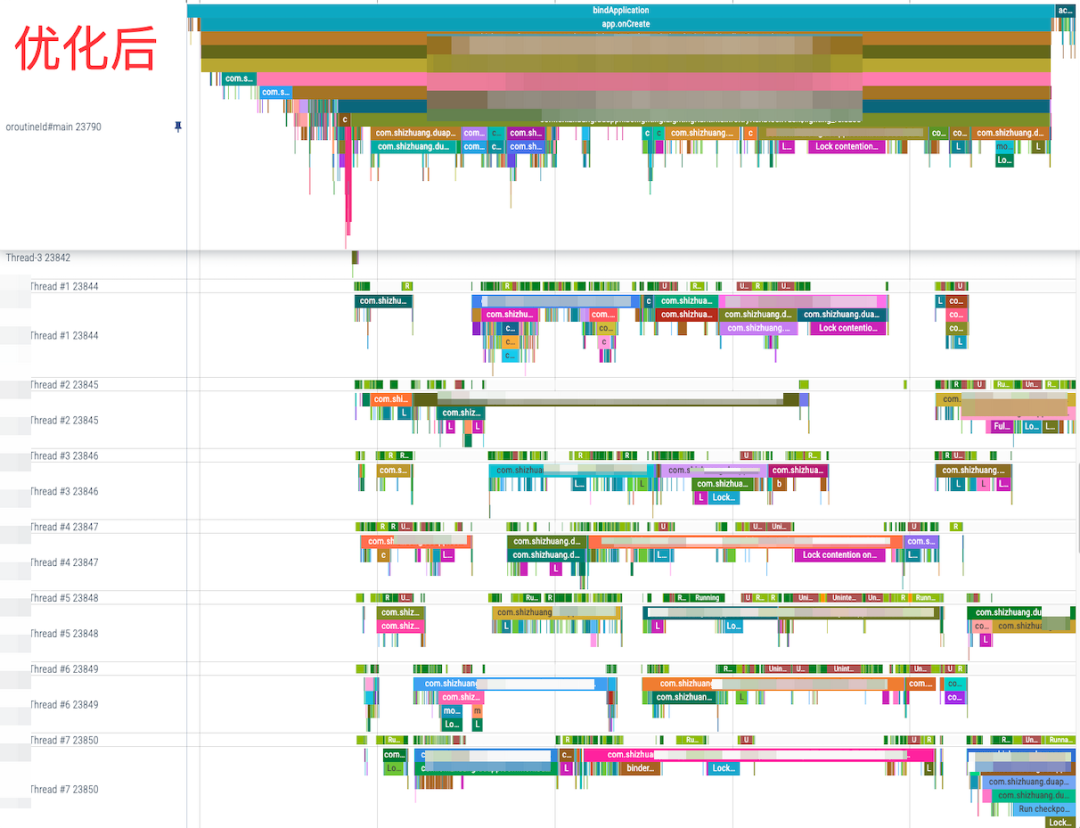

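The startup framework tasks of Dewu App have gone through several rounds of optimization in recent years. The usual approach of capturing a trace, finding slow spots, and making them asynchronous no longer brings noticeable gains, so we have to look for optimization points from the angle of lock contention and CPU utilization. Such optimizations may not pay off dramatically in the short term, but in the long run they avoid many regressions in advance.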

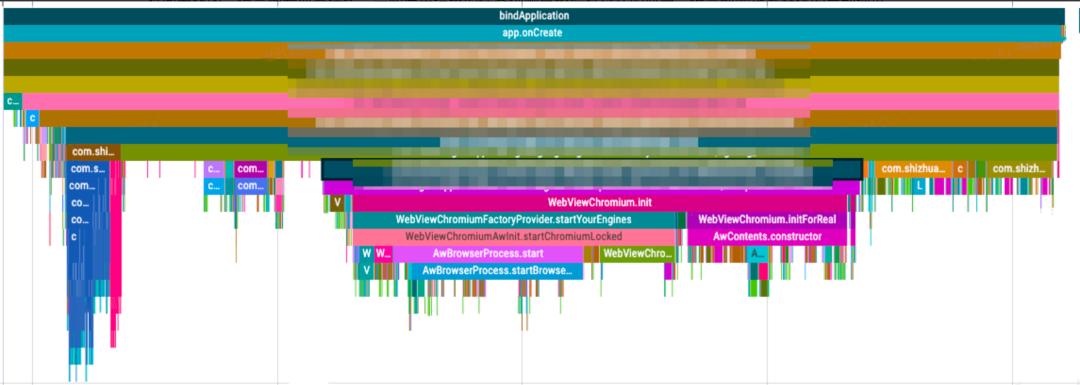

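1. WebView optimization

The first time an App calls the WebView constructor, it triggers the system's WebView initialization, which typically takes 200+ms. The usual approach for a task this slow is to move it to a worker thread, but the Chrome kernel contains a large number of thread checks, so a WebView can only be used on the thread that constructed it.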

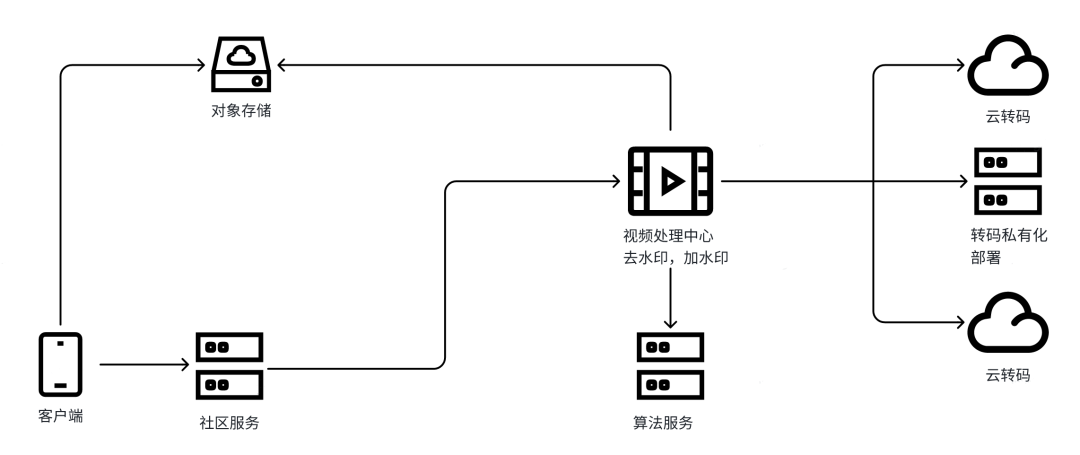

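To speed up the launch of H5 pages, Apps usually initialize and cache a WebView during the Application stage. However, WebView initialization involves cross-process interaction and file reads, so a shortage of CPU time slices, disk resources, or binder thread pool capacity will inflate its duration, and the Application stage, with its many tasks, is exactly where such shortages tend to occur.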

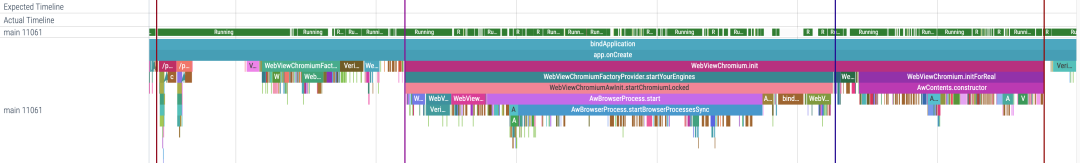

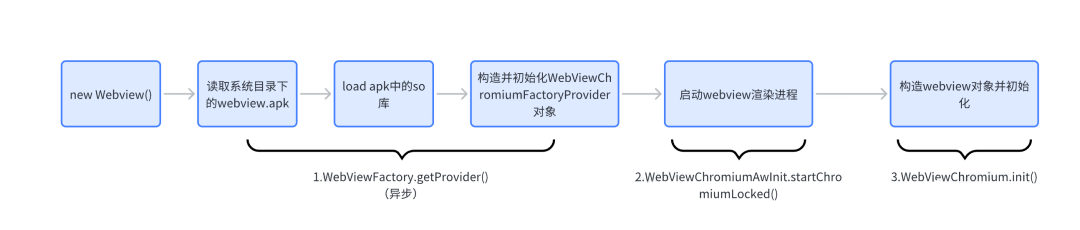

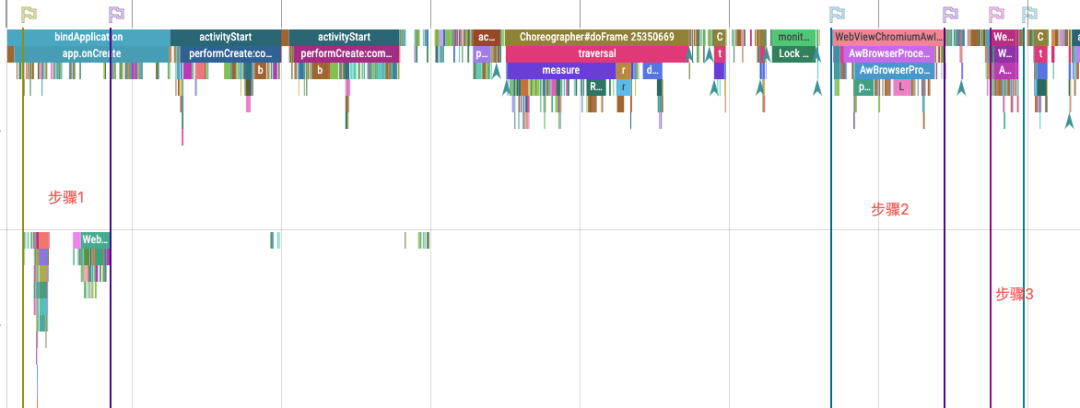

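We therefore split WebView initialization into three steps and spread them across different stages of startup. This reduces the time inflation caused by resource contention and also greatly lowers the probability of ANRs.

1.1 Task splitting

a. Provider preloading

WebViewFactoryProvider is the interface class used to interact with the WebView rendering process. The first step of WebView initialization is to load the system WebView apk, build a classloader, and reflectively create the static WebViewFactoryProvider instance. This operation involves no thread checks, so we can hand it directly to a worker thread.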

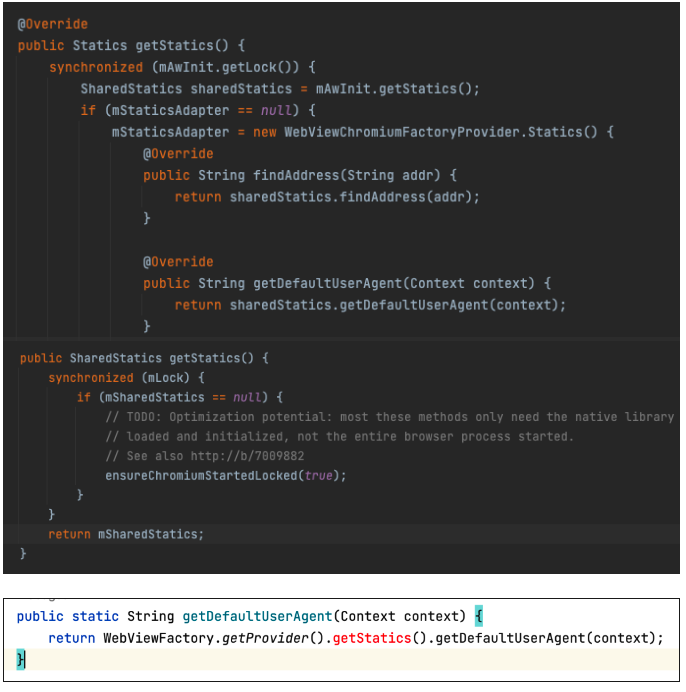

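b. Initializing the WebView rendering process

This step corresponds to the WebViewChromiumAwInit.ensureChromiumStartedLocked() method in the Chrome kernel. It is the most time-consuming part of WebView initialization, but it normally runs back to back with the third step. Reading the code, we found that among the interfaces WebViewFactoryProvider exposes to applications, the getStatics method happens to trigger ensureChromiumStartedLocked.

So we can initialize only the WebView rendering process by calling WebSettings.getDefaultUserAgent().

c. Constructing the WebView

That is, new WebView().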

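1.2 Task allocation

To shorten main-thread time as much as possible, we schedule the tasks as follows:

- a. Provider preloading can run asynchronously and has no prerequisites, so it is placed at the earliest point of the Application stage.

- b. Initializing the WebView rendering process must run on the main thread, so it is placed after the first frame of the home page has finished.

- c. Constructing the WebView must run on the main thread; it is posted to the main thread when the second step completes. This ensures it does not run in the same message as the second step, lowering the probability of an ANR.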
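
The schedule above can be modelled in plain Java. This is only a sketch under stated assumptions: a single-thread executor stands in for the Android main looper, the three `Runnable` parameters stand in for the real provider preload, `WebSettings.getDefaultUserAgent()`, and `new WebView()` calls, and the class name `StagedWebViewInit` is hypothetical.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

/** Models the three-step schedule; the Runnables are placeholders for the real WebView calls. */
final class StagedWebViewInit {
    // Assumption: a single-thread executor plays the role of the main looper.
    private final ExecutorService mainThread = Executors.newSingleThreadExecutor();
    private final ExecutorService worker = Executors.newSingleThreadExecutor();
    private final CountDownLatch providerReady = new CountDownLatch(1);
    private final CountDownLatch done = new CountDownLatch(1);
    final List<String> log = new CopyOnWriteArrayList<>();

    /** Step a: no thread check, so it runs on a worker as early as possible. */
    void onApplicationStart(Runnable preloadProvider) {
        worker.execute(() -> {
            preloadProvider.run();
            log.add("a:provider");
            providerReady.countDown();
        });
    }

    /** Steps b and c: both on the "main thread", but as two separate messages. */
    void onFirstFrameDrawn(Runnable startChromium, Runnable constructWebView) {
        mainThread.execute(() -> {
            // Simplification: step b is modelled as waiting for step a to finish.
            awaitQuietly(providerReady, 5_000);
            startChromium.run();
            log.add("b:chromium");
            // Step c is posted as its own message instead of running inline.
            mainThread.execute(() -> {
                constructWebView.run();
                log.add("c:construct");
                done.countDown();
            });
        });
    }

    /** Waits for step c, then stops both executors. Returns false on timeout. */
    boolean awaitDone(long timeoutMs) {
        boolean finished = awaitQuietly(done, timeoutMs);
        worker.shutdownNow();
        mainThread.shutdownNow();
        return finished;
    }

    private static boolean awaitQuietly(CountDownLatch latch, long timeoutMs) {
        try {
            return latch.await(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

Posting step c as a second message, rather than calling it at the end of step b, is what lets other main-thread messages run between the two slow steps.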

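1.3 Summary

Although we have split WebView initialization into three parts, the second step, which accounts for the largest share of the time, may still hit the ANR threshold on low-end devices or in extreme cases. We therefore added some restrictions: for example, each device measures and records the duration of a complete WebView initialization, and the staged execution above is enabled only when that duration is below a threshold delivered by remote configuration.

If the App is opened through channels such as push notifications or ad campaigns, the page opened is very likely an H5 marketing page, so staged loading does not suit these scenarios. We therefore hook the main thread's messageQueue, parse the intent of the page being launched, and then decide.

Because of the splash ad feature, we can currently enable this optimization only for launches without a splash ad. We plan to use the gap during the ad countdown to run step 2, in order to cover launches with splash ads.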
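
The three restrictions above can be combined into a single gate. The sketch below is illustrative only: the class, method, and parameter names are hypothetical, and treating a device with no recorded measurement as "not eligible" is an assumption, not something the text specifies.

```java
/** Decides whether the staged WebView initialization should be used for this launch. */
final class StagedInitGate {
    /**
     * @param lastFullInitMs  recorded duration of a full WebView init on this device, or -1 if none yet
     * @param thresholdMs     threshold delivered by remote configuration
     * @param landingPageIsH5 true when the launch intent points at an H5 page (push / ad campaign)
     * @param hasSplashAd     true when this launch shows a splash ad
     */
    static boolean shouldUseStagedInit(long lastFullInitMs, long thresholdMs,
                                       boolean landingPageIsH5, boolean hasSplashAd) {
        if (lastFullInitMs < 0) {
            return false; // assumption: without a measurement, stay on the original path
        }
        if (landingPageIsH5 || hasSplashAd) {
            return false; // H5 landing pages need WebView at once; splash-ad launches are not covered yet
        }
        return lastFullInitMs < thresholdMs;
    }
}
```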

2. ARouter optimization

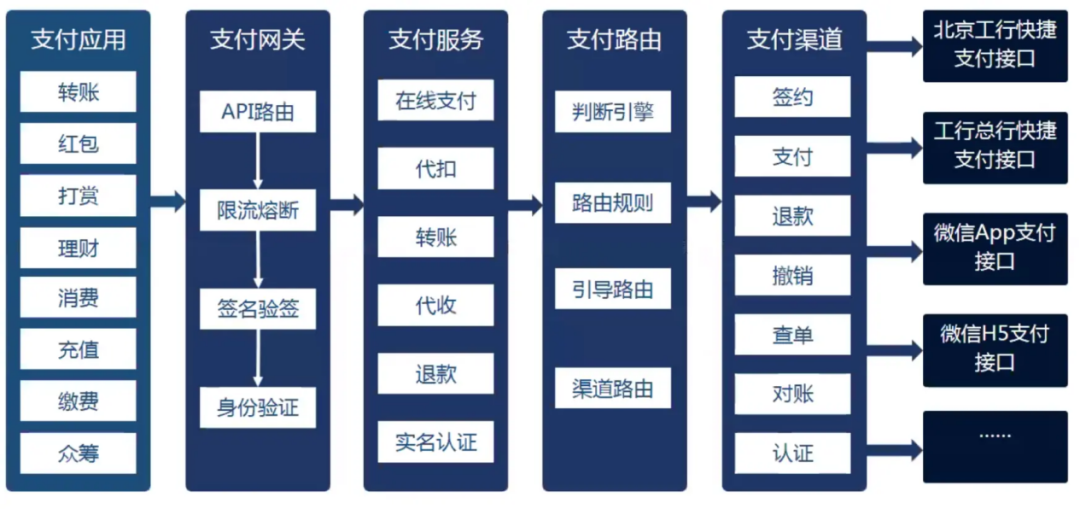

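In today's era of componentization, a routing component is a basic component of almost every large Android App. Dewu currently uses the open-source ARouter framework.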

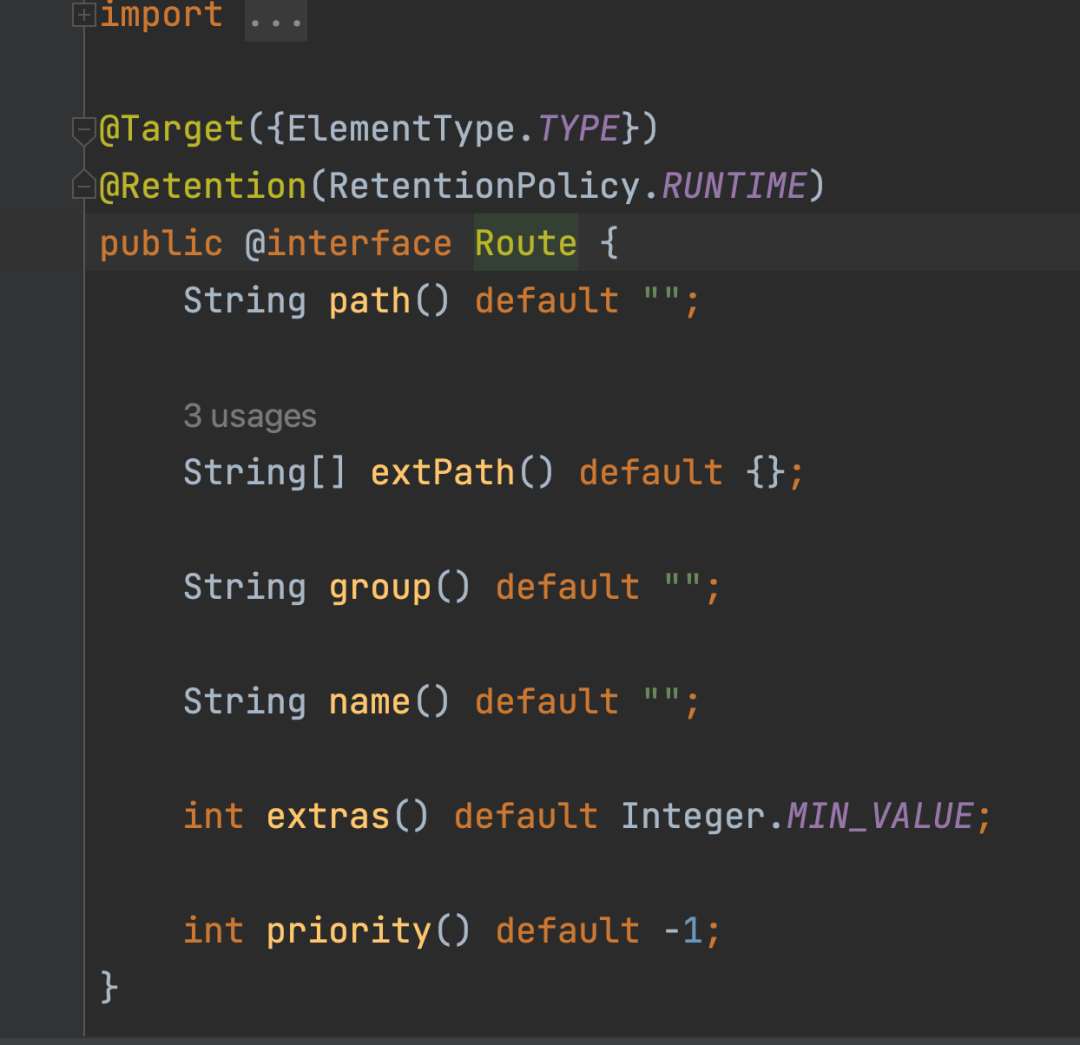

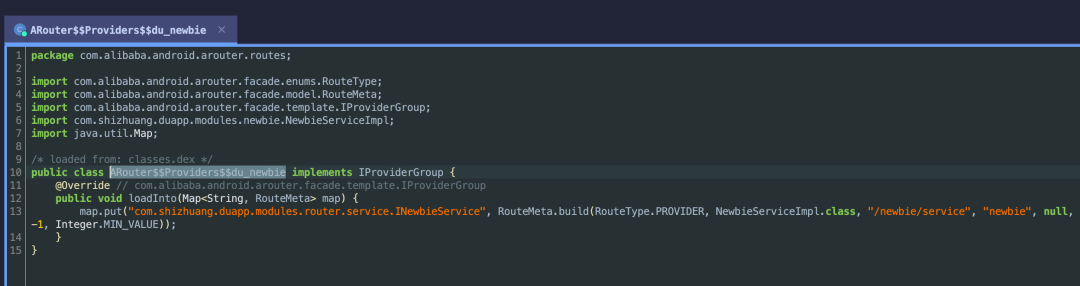

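By design, ARouter takes the first level of the path registered in the annotation (for example, trade in "/trade/homePage") as the Group of that route. Routes with the same Group are merged into the registration function of a single generated class and registered synchronously. In large projects, one Group of a complex business line may contain hundreds of registrations, and the registration logic takes a long time to run. In Dewu's case, the business line with the most routes spends 150+ms initializing routes.

Route registration itself is lazy: it is triggered when the first route component under a Group is invoked. However, ARouter also uses an SPI (service discovery) mechanism to let business components expose interfaces, so that business-layer implementations can be called without depending on the business component. When developing these services, developers habitually set the route path according to the component the service belongs to, so constructing such a service for the first time also triggers route loading for the whole Group.

The Application stage inevitably needs some interfaces from business module services, which triggers route registration early. Although this operation can run on a background thread, most work in the Application stage needs to access these services, so when their first construction becomes slower, overall startup time inevitably grows with it.

2.1 ARouter Service route separation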

ARouter uses the SPI design for decoupling, and a Service should only provide interfaces. We therefore add a dedicated Service with an empty implementation whose only job is to trigger route loading, and move the original Service to a different Group so that from then on it only provides interfaces. Other tasks in the Application stage then no longer need to wait for the route loading task to complete.

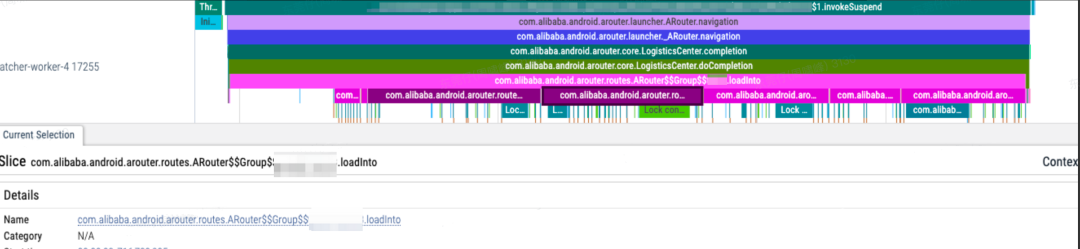

2.2 Concurrent route loading in ARouter

After implementing route separation, we found that the total time to load the existing hot routes was greater than the time spent in Application. To ensure that route loading finished before entering the splash page, the main thread had to sleep and wait for it.

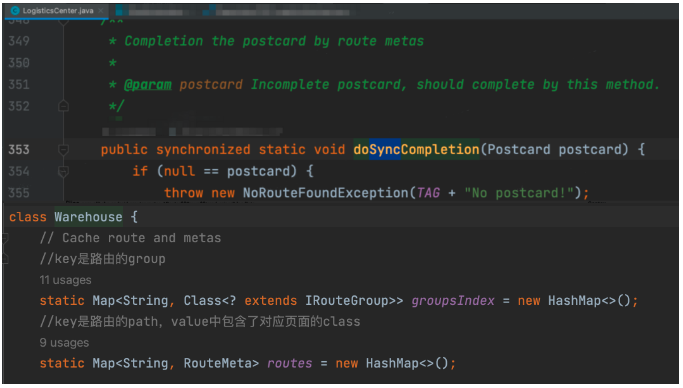

Analysis showed that ARouter's route loading method holds a class lock because it loads routes into maps in the warehouse class, and these maps are thread-unsafe HashMaps. As a result, all route loading operations actually execute serially and contend for the lock, which is why the cumulative time exceeds the Application time.

The trace shows that the time is mainly spent in frequent calls to loadInto, the operation that loads routes. The main purpose of the class lock here is to make the map operations in WareHouse thread-safe.

Therefore, we can downgrade the class lock to a lock on the GroupMeta class object (the class generated by ARouter's apt, corresponding to the ARouter$$Provider$$xxx classes in the apk), which keeps each route loading process thread-safe. The thread-safety issue with the map operations can be solved by replacing these maps with ConcurrentHashMap. Under extreme concurrency some thread-safety issues remain, which can be handled by adding null checks as shown in the figure.

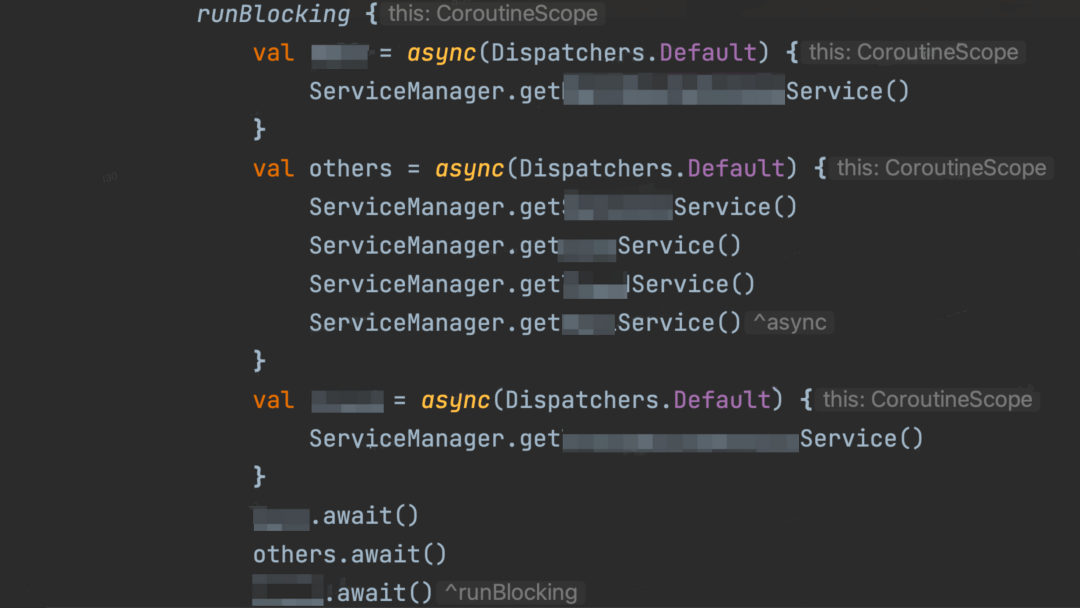
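
The idea of the lock downgrade can be illustrated outside ARouter. The following is a minimal sketch, not ARouter's code: `RouteRegistrySketch` and `GroupLoader` are hypothetical names, routes are plain strings, and one lock object per group stands in for locking the generated group class.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Per-group locking plus ConcurrentHashMap, instead of one lock shared by all groups. */
final class RouteRegistrySketch {
    /** Hypothetical stand-in for a generated group class with a loadInto method. */
    interface GroupLoader { void loadInto(Map<String, String> routes); }

    private final Map<String, String> routes = new ConcurrentHashMap<>();
    private final Map<String, GroupLoader> pendingGroups = new ConcurrentHashMap<>();
    private final Map<String, Object> groupLocks = new ConcurrentHashMap<>();
    final AtomicInteger loadCount = new AtomicInteger();

    void registerGroup(String group, GroupLoader loader) {
        pendingGroups.put(group, loader);
    }

    /** Loads a group at most once; different groups can load in parallel. */
    void ensureGroupLoaded(String group) {
        if (!pendingGroups.containsKey(group)) {
            return; // already loaded, or never registered
        }
        Object lock = groupLocks.computeIfAbsent(group, g -> new Object());
        synchronized (lock) { // only callers of the same group wait here
            GroupLoader loader = pendingGroups.get(group);
            if (loader == null) {
                return; // null check: another thread finished loading first
            }
            loader.loadInto(routes);
            loadCount.incrementAndGet();
            pendingGroups.remove(group); // removed last, so nobody sees a half-loaded group
        }
    }

    /** Assumes paths of the form "/group/name". */
    String find(String path) {
        ensureGroupLoaded(path.substring(1, path.indexOf('/', 1)));
        return routes.get(path);
    }
}
```

The group is removed from the pending map only after loading completes, so a thread that skips the lock because the group is no longer pending is guaranteed to see the fully loaded routes.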

At this point we have concurrent route loading. We then group the services to be preloaded according to the bucket effect (the slowest group determines the total time) and run the groups concurrently in coroutines to minimize overall time.
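
One simple way to balance such groups is the greedy "longest processing time first" heuristic. This is an assumption chosen for illustration, not necessarily the grouping rule used in Dewu App, and the group names and costs in the usage note are made up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Greedy LPT partitioning: the slowest remaining task goes into the currently lightest bucket. */
final class PreloadBuckets {
    static List<List<String>> partition(Map<String, Long> costMs, int bucketCount) {
        List<Map.Entry<String, Long>> tasks = new ArrayList<>(costMs.entrySet());
        // Slowest first; ties broken by name so the result is deterministic.
        tasks.sort((a, b) -> {
            int byCost = Long.compare(b.getValue(), a.getValue());
            return byCost != 0 ? byCost : a.getKey().compareTo(b.getKey());
        });
        long[] load = new long[bucketCount];
        List<List<String>> buckets = new ArrayList<>();
        for (int i = 0; i < bucketCount; i++) {
            buckets.add(new ArrayList<>());
        }
        for (Map.Entry<String, Long> task : tasks) {
            int lightest = 0;
            for (int i = 1; i < bucketCount; i++) {
                if (load[i] < load[lightest]) lightest = i;
            }
            buckets.get(lightest).add(task.getKey());
            load[lightest] += task.getValue();
        }
        return buckets;
    }

    /** Total time is set by the slowest bucket (the "bucket effect"). */
    static long makespan(Map<String, Long> costMs, List<List<String>> buckets) {
        long worst = 0;
        for (List<String> bucket : buckets) {
            long sum = 0;
            for (String name : bucket) sum += costMs.get(name);
            worst = Math.max(worst, sum);
        }
        return worst;
    }
}
```

For example, six hypothetical groups costing 150, 80, 70, 40, 30, and 20 ms split into two buckets give a slowest bucket of 200 ms, against 390 ms when loaded serially.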

3. Lock optimization

Most tasks executed in the Application stage initialize basic SDKs. Their logic is usually fairly independent, but there are dependencies between SDKs (for example, the tracking library depends on the network library), and most of them read files, load so libraries, and so on. To compress main-thread time in the Application stage, time-consuming operations are moved to worker threads and run concurrently wherever possible to make full use of CPU time slices, but this inevitably introduces lock contention.

3.1 The so loading lock

The System.loadLibrary() method loads a so library from the current apk. It locks the Runtime object, which is effectively a class lock.

Basic SDKs usually put the so loading call in a static initializer of a class, so that the so library is ready before any SDK initialization code runs. If the SDK is a foundational library, such as the network library, that many other SDKs call, multiple threads will compete for this lock at the same time. In the worst case, IO resources are tight, reading the so file is slow, and the main thread is last in the lock's wait queue, so startup takes far longer than expected.

To avoid this, we converge all so loading operations into a single thread and force them to run serially. Note that the so files in webview.apk are also loaded during the WebView provider preloading described earlier, so the preloadProvider operation must be placed on this thread too.

Loading a so triggers the JNI_OnLoad method in the native layer, and some so libraries do initialization work there. So we cannot simply call System.loadLibrary() ourselves ahead of time, or the initialization might run twice.

We finally adopted a class-loading approach: the code that loads each so lives in the static initializer of its related class, and we trigger the loading of those classes. The class loading mechanism guarantees that the so loading does not run twice, and the classes should be loaded in the order in which the so libraries are used.
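
A minimal plain-Java sketch of this approach follows. The nested classes are hypothetical placeholders for SDK entry classes (a real one would call `System.loadLibrary` in its static block), the thread name `so-loader` is made up, and `preload` blocks on the result only to keep the sketch easy to check.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Triggers class initialization, one class at a time, on a single dedicated thread. */
final class SerialSoLoader {
    static final List<String> loaded = new CopyOnWriteArrayList<>();

    /** Hypothetical SDK classes; the static block is where System.loadLibrary would live. */
    static final class NetworkLib { static { loaded.add("network"); } }
    static final class TrackingLib { static { loaded.add("tracking"); } }

    private static final ExecutorService SO_THREAD = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "so-loader");
        t.setDaemon(true);
        return t;
    });

    /** Initializes the named classes in the given order; the JVM runs each static block only once. */
    static void preload(List<String> classNames) {
        Future<?> task = SO_THREAD.submit(() -> {
            for (String name : classNames) {
                try {
                    Class.forName(name, true, SerialSoLoader.class.getClassLoader());
                } catch (ClassNotFoundException e) {
                    throw new IllegalStateException(e);
                }
            }
        });
        try {
            task.get(); // blocking here is for the sketch only
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Calling `preload` twice with the same class names leaves `loaded` with one entry per class, because class initialization is performed at most once per class loader.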

In addition, we do not recommend running so loading concurrently with other tasks that need IO resources. In measurements on Dewu App, the time difference between the two cases is large.

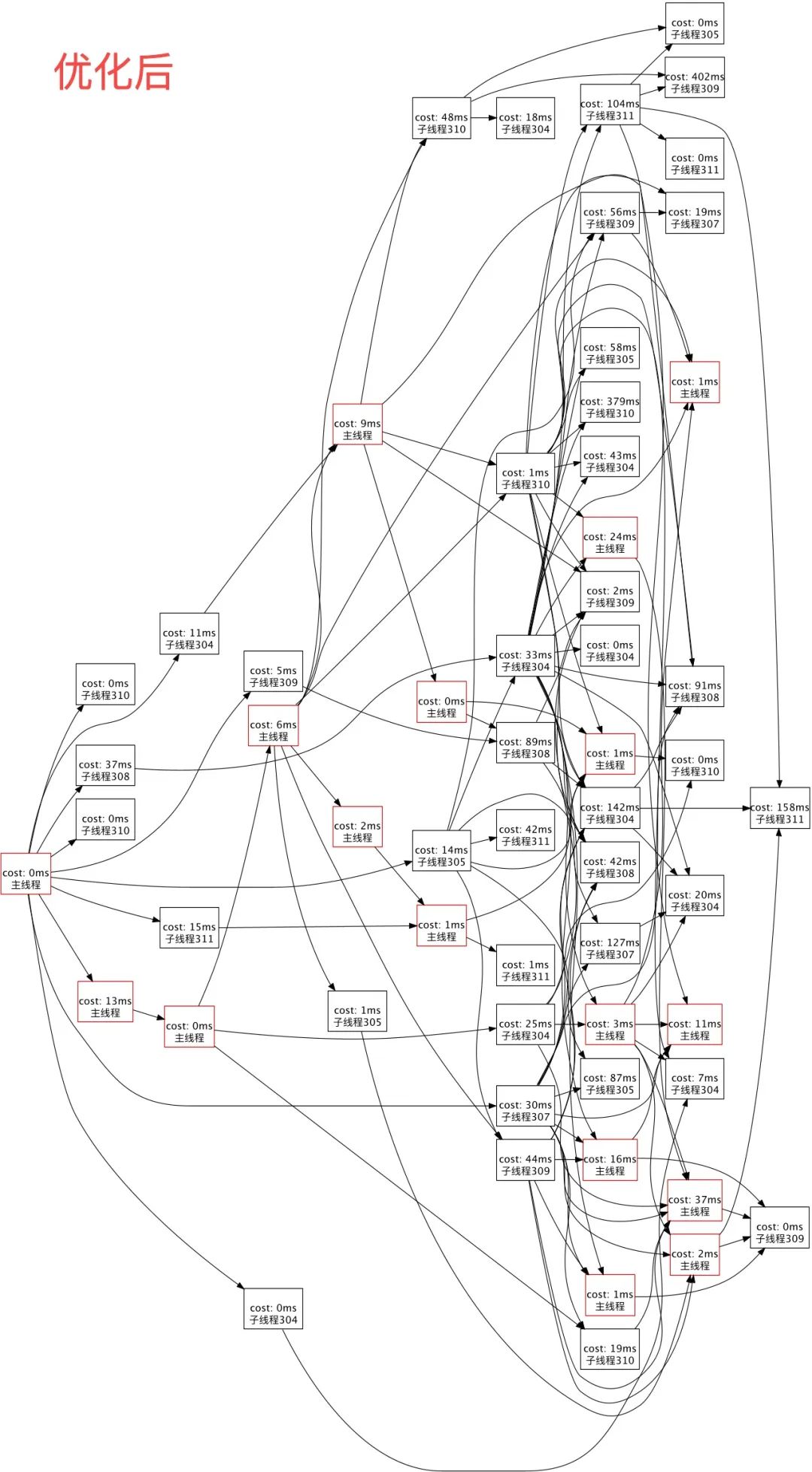

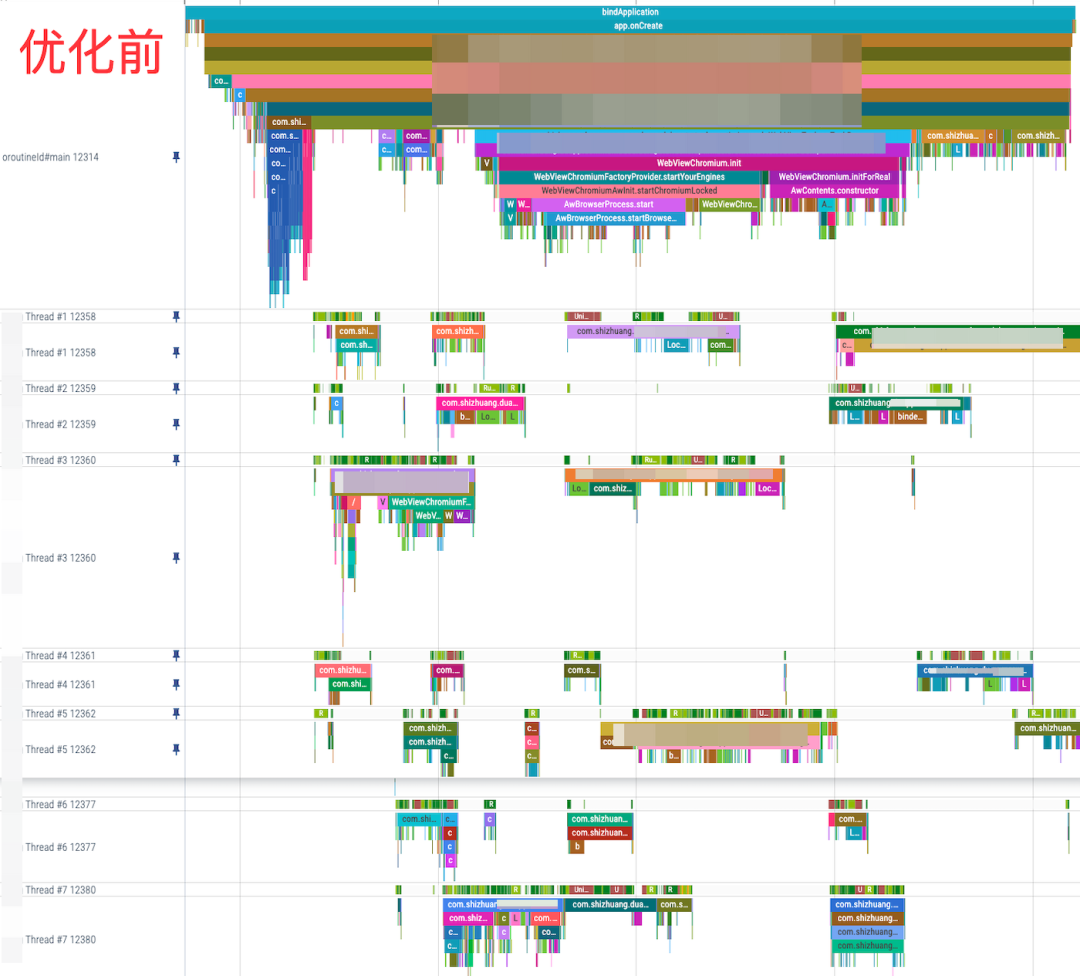

4. Startup framework optimization

The common startup framework design today assigns startup work to a set of task nodes and builds a directed acyclic graph from the dependencies between them. As the business iterates, however, some historical task dependencies are no longer necessary, yet they still slow down overall startup.

Most startup work is basic SDK initialization, and the SDKs often have complex dependencies on one another. When optimizing startup, we usually find the main thread's slow tasks and move them to worker threads to compress main-thread time. But in an Application stage with complex dependencies, simply making a task asynchronous may not deliver the expected gain.

After finishing the WebView optimization, we found that startup time did not drop by the full WebView initialization time as expected; the reduction was only about half of that. Analysis showed that our main-thread tasks depend on worker-thread tasks, so when a worker-thread task has not finished, the main thread sleeps and waits.

Moreover, WebView was initialized at that point in time not because of a dependency constraint, but because the main thread happened to have a fairly long idle wait there that could be put to use. The workload of the asynchronous tasks is far larger than that of the main thread: even with seven worker threads running concurrently, they take longer than the main-thread tasks.

So to gain more, we have to optimize the task dependencies in the startup framework.

The first figure above is the directed acyclic graph of Dewu App's startup tasks before optimization. A red box indicates a task executed on the main thread. We focus on the tasks that block main-thread tasks.

We can see several tasks on the main-thread dependency chain that have especially many exits (outgoing edges) and entrances (incoming edges). Many exits mean the task is usually a very important basic library (such as the network library in the figure). Many entrances mean the task has too many prerequisites, so the time at which it starts fluctuates widely. Together, these mean that the task's finish time is very unstable and directly affects the main-thread tasks that follow.
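
Such tasks can also be found mechanically from the dependency table. The sketch below is illustrative: the class name, the thresholds, and the task names in the usage note are hypothetical.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Flags tasks that have both many prerequisites (entrances) and many dependents (exits). */
final class DagHotspots {
    /** deps maps each task to the list of tasks it must wait for. */
    static Set<String> hotspots(Map<String, List<String>> deps, int minFanIn, int minFanOut) {
        // Count how many tasks wait on each task (its exits).
        Map<String, Integer> fanOut = new HashMap<>();
        for (List<String> prerequisites : deps.values()) {
            for (String p : prerequisites) {
                fanOut.merge(p, 1, Integer::sum);
            }
        }
        Set<String> result = new TreeSet<>();
        for (Map.Entry<String, List<String>> e : deps.entrySet()) {
            int in = e.getValue().size();                 // entrances
            int out = fanOut.getOrDefault(e.getKey(), 0); // exits
            if (in >= minFanIn && out >= minFanOut) {
                result.add(e.getKey());
            }
        }
        return result;
    }
}
```

With a table in which a `network` task waits on three tasks and three other tasks wait on `network`, `hotspots(deps, 3, 3)` returns only `network`.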

The main ideas for optimizing this type of task are:

- Split the task itself and carve out operations that can run earlier or later. Before doing so, check whether there are spare time slices in the target time window and whether the move would worsen contention for IO resources;

- Optimize the task's predecessors so that it finishes as early as possible, reducing the time later tasks spend waiting for it;

- Remove unnecessary dependencies (recommended). For example, initializing the tracking library only needs to register a listener with the network library; it does not issue a network request.

In the second directed acyclic graph, after optimization, the dependency depth of the tasks is clearly reduced, and tasks with especially many entrances and exits have essentially disappeared.

Comparing the traces before and after optimization, we can also see that worker-thread task concurrency has clearly increased. But higher concurrency is not always better. On low-end devices, where time slices are already scarce, higher concurrency performs worse because lock contention and IO waits become more likely. So we need to leave some headroom and run thorough performance tests on mid- and low-end devices before release, or use a different task arrangement for those devices.
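
The effect of removing a dependency can be estimated by computing each task's earliest finish time along the graph. The sketch below assumes an acyclic graph and enough threads that no task waits for a free worker; the task names and durations in the usage note are invented.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Earliest finish time of a task = its own cost + the latest finish among its prerequisites. */
final class CriticalPath {
    static long earliestFinish(String task, Map<String, Long> costMs,
                               Map<String, List<String>> deps) {
        return finish(task, costMs, deps, new HashMap<>());
    }

    private static long finish(String task, Map<String, Long> costMs,
                               Map<String, List<String>> deps, Map<String, Long> memo) {
        Long cached = memo.get(task);
        if (cached != null) {
            return cached;
        }
        long start = 0;
        for (String prerequisite : deps.getOrDefault(task, List.of())) {
            start = Math.max(start, finish(prerequisite, costMs, deps, memo));
        }
        long end = start + costMs.get(task);
        memo.put(task, end);
        return end;
    }
}
```

Suppose `storage` (50 ms) precedes `network` (100 ms), which precedes `tracking` (30 ms), which precedes a main-thread task `homePreload` (20 ms): `homePreload` cannot finish before 200 ms. If `tracking` no longer depends on `network`, the same task can finish at 50 ms.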

III. Home Page Optimization

1. General layout inflation optimization

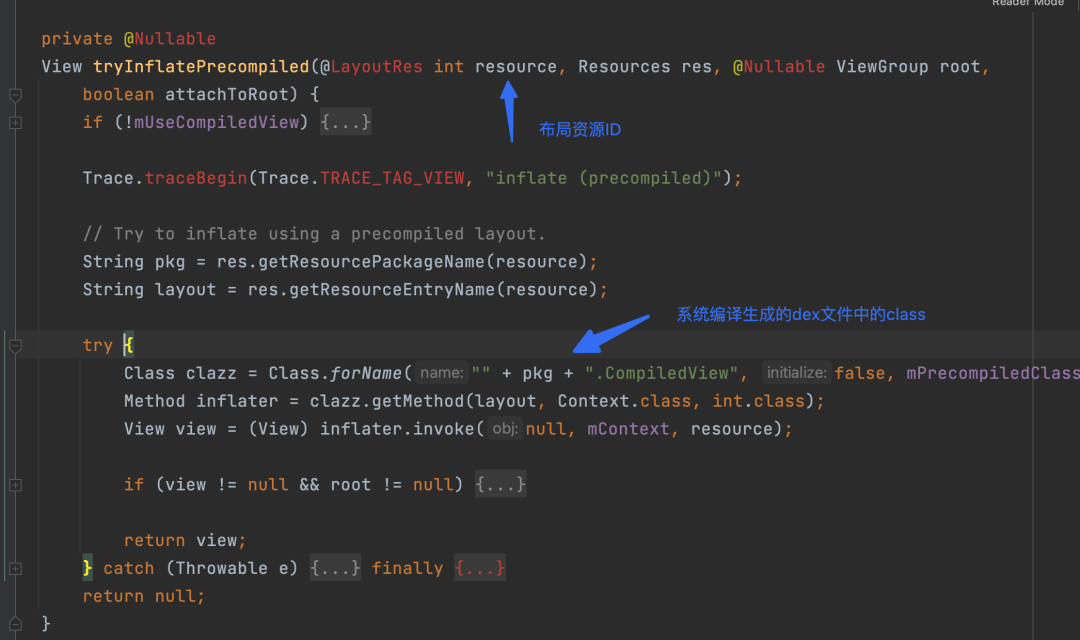

The system parses a layout by reading the layout xml file in the inflate method and building the view tree from it. This involves IO and is easily affected by device state. We can therefore parse the layout files with apt at compile time and generate corresponding view construction classes, then run the methods of these classes asynchronously ahead of time at runtime to build and assemble the view tree, which directly cuts the time spent in page inflate.
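
The runtime half of this idea (build ahead of time, fall back to normal inflation) can be sketched in plain Java. Everything here is a stand-in: `ViewPrebuilder` is a hypothetical name, `V` represents a view type, and the two suppliers represent the generated construction class and the regular inflate path.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/** Builds views on a background pool ahead of time and hands each one out at most once. */
final class ViewPrebuilder<V> {
    private final Map<String, CompletableFuture<V>> prebuilt = new ConcurrentHashMap<>();

    /** Starts building the view tree asynchronously, before the page asks for it. */
    void prebuild(String layoutName, Supplier<V> generatedBuilder) {
        prebuilt.put(layoutName, CompletableFuture.supplyAsync(generatedBuilder));
    }

    /** Returns the prebuilt view if there is one, otherwise builds it the normal way. */
    V obtain(String layoutName, Supplier<V> inflateFallback) {
        CompletableFuture<V> future = prebuilt.remove(layoutName); // a view must not be reused
        if (future == null) {
            return inflateFallback.get();
        }
        try {
            return future.join();
        } catch (CompletionException e) {
            return inflateFallback.get(); // the prebuild failed, so fall back to inflation
        }
    }
}
```

Removing the entry on `obtain` matters because a view instance can be attached to only one parent, so a second request for the same layout has to take the fallback path.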

2. Message scheduling optimization

During startup we usually register some ActivityLifecycleListener instances to monitor page lifecycles, or post delayed tasks to the main thread. If these tasks contain slow operations, they affect startup speed. We can hook the main thread's message queue and move page lifecycle callbacks and page drawing messages to the head of the queue, speeding up the display of the first frame of the home page.
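
The reordering itself amounts to a stable partition of the pending messages. The sketch below works on a plain list rather than the Android message queue, and the message names in the usage note are made up; how the real queue is hooked is not covered here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

/** Moves startup-critical messages to the head while keeping relative order within each group. */
final class MessageReorder {
    static <T> List<T> prioritize(List<T> queue, Predicate<T> isStartupCritical) {
        List<T> head = new ArrayList<>();
        List<T> tail = new ArrayList<>();
        for (T message : queue) {
            (isStartupCritical.test(message) ? head : tail).add(message);
        }
        head.addAll(tail);
        return head;
    }
}
```

For a queue of `delayedTask, launchActivity, sdkCallback, doFrame` with `launchActivity` and `doFrame` marked critical, the result is `launchActivity, doFrame, delayedTask, sdkCallback`.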

Details will follow in later articles in this series.

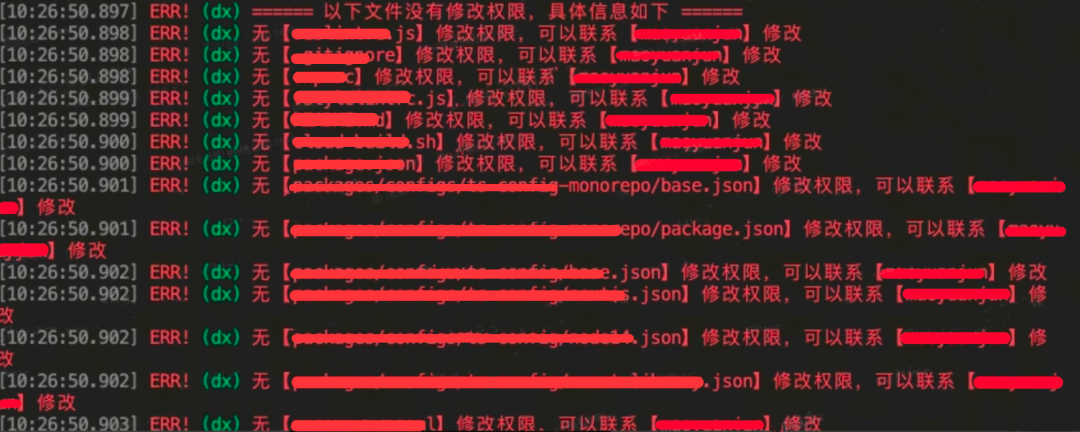

IV. Stability

Performance optimization is only icing on the cake for an App; stability is the red line. Startup optimization changes the Application stage, which runs very early, so the stability risk is high and crash protection must be in place before optimizing. Even when a stability issue is unavoidable, its negative impact must be minimized.

1. Crash protection

Because the tasks executed during startup initialize important basic libraries, catching and swallowing an exception when a crash occurs is of little value: it will very likely cause later crashes or functional failures. Our protection work therefore focuses on stopping the damage after a problem occurs.

A configuration center SDK is usually designed to read the cached configuration from a local file first and refresh it after the network request succeeds. So if a cached configuration takes effect during startup and causes a crash, the new configuration can never be pulled. The only remedy is then to clear the App's cache or uninstall and reinstall, which leads to very serious user loss.

Crash fallback

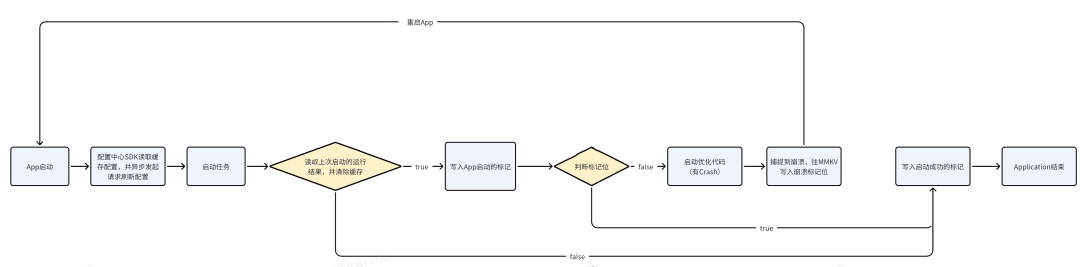

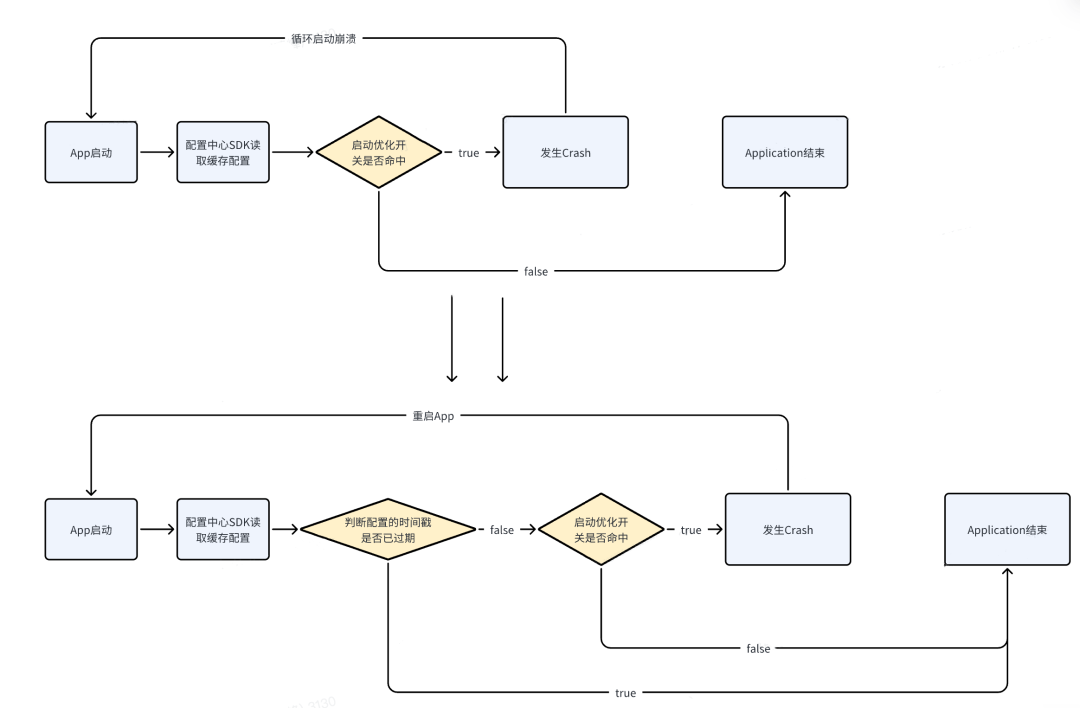

Wrap every change in try-catch protection. After catching an exception, report a tracking event and write a crash flag to MMKV, so that this device no longer enables startup optimization changes in the current version, then rethrow the original exception and let the App crash. For native crashes, the same operation can be done in the native crash callback of the crash monitoring SDK.
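
A minimal sketch of this fallback, under stated assumptions: a plain `Map` stands in for MMKV, the key prefix and class name are hypothetical, and event reporting is left as a comment.

```java
import java.util.Map;

/** Runs an optimized path once; after a failure, the same app version stays on the original path. */
final class GuardedOptimization {
    private static final String KEY_PREFIX = "startup_opt_disabled_"; // hypothetical key

    static void run(Map<String, Boolean> store, String appVersion,
                    Runnable optimized, Runnable original) {
        String key = KEY_PREFIX + appVersion; // per version, so a fixed release can re-enable it
        if (store.getOrDefault(key, false)) {
            original.run();
            return;
        }
        try {
            optimized.run();
        } catch (RuntimeException e) {
            // A real implementation would also report a tracking event here.
            store.put(key, true); // crash flag: disable the optimization on this device
            throw e;              // rethrow the original exception; it is not swallowed
        }
    }
}
```

The first failing launch still crashes, but the flag guarantees that the next launch of the same version takes the original path.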

Running status detection

We can catch Java crashes by registering an unCaughtExceptionHandler, but native crashes have to be captured with the help of the crash monitoring SDK. However, crash monitoring may not be initialized at the earliest point of startup. For example, WebView provider preloading and so library preloading both run earlier than crash monitoring, and both involve native-layer code.

To cover crashes in this scenario, we write an MMKV flag at the start point of the Application and change it to another state at the end point. Code that runs before the configuration center can then read this flag to determine whether the last run was normal. If an unknown crash occurred during the last startup (such as a native crash before crash monitoring was initialized), this flag lets us turn off the startup optimization changes in time.

Combined with the automatic restart after a crash, the user does not actually observe the crash; startup just feels about 1-2 times longer than usual.
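
The flag logic can be sketched as a two-state marker. A plain `Map` again stands in for MMKV, and the key and state names are hypothetical.

```java
import java.util.Map;

/** Writes STARTED at the beginning of startup and FINISHED at the end; a leftover STARTED means trouble. */
final class LaunchMarker {
    private static final String KEY = "launch_state"; // hypothetical key

    /** Call first in Application. Returns true if the previous launch ended normally (or never happened). */
    static boolean onApplicationStart(Map<String, String> store) {
        String previous = store.put(KEY, "STARTED");
        return previous == null || previous.equals("FINISHED");
    }

    /** Call at the chosen end point of startup. */
    static void onStartupFinished(Map<String, String> store) {
        store.put(KEY, "FINISHED");
    }
}
```

If `onApplicationStart` returns false, the previous launch died somewhere between the two calls, so the optimizations that run before crash monitoring can be switched off for this launch.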

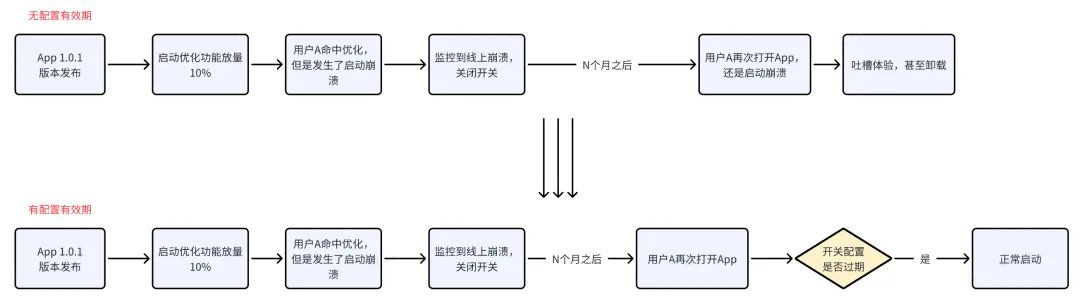

Configuration validity period

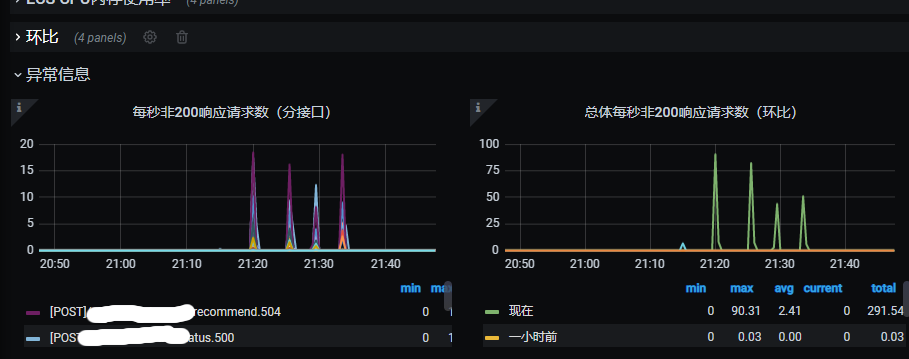

Online technical changes are usually rolled out gradually by configuring a sampling rate and combining it with a random number. However, configuration SDKs are usually designed to use the last local cache by default. When an online crash or other fault occurs, the configuration can be rolled back promptly, but because of the cache the user still experiences at least one crash.

To address this, we can attach an expiration timestamp to each switch configuration and let the rollout switch take effect only before that timestamp. This ensures the damage can be stopped in time when online crashes or other faults occur, and the precise timestamp also avoids crashes caused by the lag before an online configuration takes effect.
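
The check itself is small. In this sketch the class and parameter names are hypothetical, and timestamps are assumed to be epoch milliseconds.

```java
/** A cached rollout switch only counts while its expiration timestamp lies in the future. */
final class ExpiringSwitch {
    static boolean isEnabled(boolean cachedSwitchOn, long expiresAtEpochMs, long nowEpochMs) {
        return cachedSwitchOn && nowEpochMs < expiresAtEpochMs;
    }
}
```

A device that still holds a stale "on" value in its cache therefore falls back to "off" once the timestamp passes, even if it never manages to pull the rolled-back configuration.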

From the user's perspective, comparison before and after adding the configuration validity period:

V. Summary

So far we have analyzed common cold-start time costs in Android Apps. But the biggest pain point in startup optimization is often the App's own business code, and tasks should be allocated reasonably according to business needs. Blindly relying on preloading, delayed loading, and asynchronous loading does not fundamentally solve the problem, because the time does not disappear; it just moves elsewhere, and low-end device startup regressions or functional failures follow.

Performance optimization needs not only the user's perspective but also a global view. If every slow task is pushed to after the first frame because the startup metric ends at the first frame of the home page, the user's subsequent experience will inevitably suffer from jank or even ANRs. So when splitting tasks, consider not only whether a task will compete for resources with concurrent tasks, but also whether functional stability and performance are affected at each stage of startup and for a period afterwards. Verify all of this, and at the very least make sure there is no performance regression.

1. Preventing regressions

Startup optimization is never a one-off job; it needs long-term maintenance and polishing. A single technical change to a basic library can send the metrics back to square one overnight, so regression prevention must be put in place as early as possible.

By adding tracking points at key locations, when online metrics are found to have regressed, we can quickly locate the approximate position of the responsible code (such as xxActivity's onCreate) and raise an alert. This helps developers locate problems quickly, and it also covers the case where a regression in a specific online scenario cannot be reproduced offline. The duration of a single startup can fluctuate by up to 20%, so with direct trace analysis alone it can be hard to find even the approximate range of a regression.

For example, when comparing traces of two startups, a file read in one of them may be clearly slowed by IO blocking while IO in the other is normal. This misleads developers into analyzing code that is actually fine, while the code that really caused the regression is masked by the fluctuation.
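
One way to keep single-run fluctuation from hiding or faking a regression is to compare per-stage medians over several launches. This is an illustrative approach, not the alerting rule described above; the stage names, sample values, and tolerance are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

/** Reports stages whose median duration grew by more than the given tolerance. */
final class StageRegression {
    static long median(long[] samples) {
        long[] sorted = samples.clone();
        Arrays.sort(sorted);
        int n = sorted.length;
        return n % 2 == 1 ? sorted[n / 2] : (sorted[n / 2 - 1] + sorted[n / 2]) / 2;
    }

    /** tolerance = 0.2 means "ignore changes of up to 20%". */
    static List<String> regressedStages(Map<String, long[]> baseline,
                                        Map<String, long[]> current, double tolerance) {
        List<String> regressed = new ArrayList<>();
        for (String stage : new TreeSet<>(baseline.keySet())) {
            long[] now = current.get(stage);
            if (now == null) {
                continue; // stage no longer tracked
            }
            if (median(now) > median(baseline.get(stage)) * (1 + tolerance)) {
                regressed.add(stage);
            }
        }
        return regressed;
    }
}
```

A single slow sample in an otherwise stable stage does not trip the check, while a stage whose typical duration has shifted does.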

2. Outlook

In ordinary launches started by tapping the icon, the full initialization runs in the Application by default. But some deeper features, such as the customer service center or editing a delivery address, take at least 1s of user operation to reach even if the user heads straight to them as fast as possible. The initialization related to these features can therefore be postponed until after the Application stage, or even made lazy, depending on how important the feature is.

Launches for user recall or acquisition via ad campaigns and push usually account for a small share, but their business value is far greater than that of ordinary launches. Current startup time comes mainly from WebView initialization and some tasks related to home page preloading. If the landing page being launched does not need all the basic libraries (for example, an H5 page), we can delay every task it does not need. Startup speed would then improve greatly, achieving a truly instant launch.

*Text/Jordas

This article is an original work of Dewu Technology. For more articles, see the Dewu Technology official website.

Reprinting without permission from Dewu Technology is strictly prohibited; violators will be held legally liable.
