1. Description

OpenAI recently released a new speech recognition model called Whisper in 2022. Unlike DALLE-2 and GPT-3, Whisper is a free and open-source model. Its main function is to translate speech into text. This article describes how to use this important application library.

2. Whisper concept

2.1 What is Whisper?

Whisper is an automatic speech recognition model trained on 680,000 hours of multilingual data collected from the Web. According to OpenAI, the model is robust to accents, background noise, and technical language. In addition, it supports transcription and translation into English from 99 different languages.

This article explains how to convert speech to text using the Whisper model and Python. Also, it won't cover how the model works or the model architecture. You can check out more about Whisper here .

2.2 Basic concepts of Whisper library

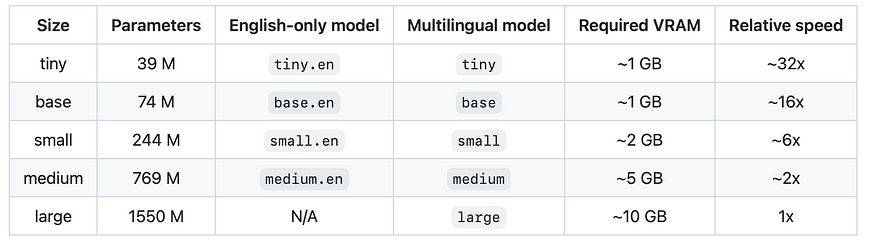

Whisper comes in five models (see table below). Below is the table available on OpenAI's GitHub page. According to OpenAI, four models for English-only applications, denoted as . The model performs better, however, the difference becomes less significant for the and models..entiny.enbase.ensmall.enmedium.en

Reference: OpenAI's GitHub page

In this article, I convert a Youtube video to audio and pass the audio into a Whisper model to convert it to text.

I use Google Colab with GPU to execute the following code.

3. How to use Whisper

3.1 Import the Pytube library

!pip install -— upgrade pytube Read a Youtube video and download it as an MP4 file for transcription

In the first example, I am reading the famous Taken movie dialogue as shown in the YouTube video below

#Importing Pytube library

import pytube

# Reading the above Taken movie Youtube link

video = ‘https://www.youtube.com/watch?v=-LIIf7E-qFI'

data = pytube.YouTube(video)

# Converting and downloading as 'MP4' file

audio = data.streams.get_audio_only()

audio.download()output

![]()

The YouTube link above has been downloaded as an "MP4" file and stored under Content. Now, the next step is to convert the audio to text. We can do this in three lines of code using whisper.

3.2 Import Whisper library

# Installing Whisper libary

!pip install git+https://github.com/openai/whisper.git -q

import whisper3.3 Loading the model

I am using multilingual model here and passing the above audio file and storing as text objectmediumI will find YouI will Kill You Taken Movie best scene ever liam neeson.mp4

model = whisper.load_model(“large”)

text = model1.transcribe(“I will find YouI will Kill You Taken Movie best scene ever liam neeson.mp4”)

#printing the transcribe

text['text']output

Below is the text from the audio. It matches the audio exactly.

I don’t know who you are. I don’t know what you want. If you are looking for ransom, I can tell you I don’t have money. But what I do have are a very particular set of skills. Skills I have acquired over a very long career. Skills that make me a nightmare for people like you. If you let my daughter go now, that will be the end of it. I will not look for you. I will not pursue you. But if you don’t, I will look for you. I will find you. And I will kill you. Good luck.

4. How about switching between different audio languages?

Whisper is known to support 99 languages; I'm trying to use Hindi and convert the movie clip video below to text.Tamil

In this example, I used the modellarge

#Importing Pytube library

import pytube

# Reading the above tamil movie clip from Youtube link

video = ‘https://www.youtube.com/watch?v=H1HPYH2uMfQ'

data = pytube.YouTube(video)

# Converting and downloading as ‘MP4’ file

audio = data.streams.get_audio_only()

audio.download()output

4.1 Loading large models

#Loading large model

model = whisper.load_model(“large”)

text = model1.transcribe(“Petta mass dialogue with WhatsApp status 30 Seconds.mp4”)

#printing the transcribe

text['text']output

The model converts Tamil audio clips to text. The model transcribed the audio well; however, I could see some small changes in the language.

I am going to see more quality events from now on. Hey.. hey.. hey.. I'm telling the truth. I will leave it to the end. See that honor does not come back. Hey.. If someone has a baby boy who wants to go and drink, then run away.

I mainly try medium and large models. It's powerful and transcribes audio with precision. Also, I used Azure Synapse Notebook with GPU to transcribe long audio up to 10 minutes and it worked fine.

This is completely open source and free; we can use it directly for speech recognition applications in your projects. We can also translate other languages into English. I will introduce it in my next article with long audio and different English language.

You can check out more information on the Whisper model; visit Whisper's Github page .

Thanks for reading. Keep learning and stay tuned for more!