It has been half a year since ChatGPT, built on a large language model, appeared. Research has gradually shifted from the evolution and capabilities of the models themselves to how to use large models more effectively, combine them with other tools, and apply them to solve real-world problems in specific domains.

Augmentation here mainly refers to combining a large language model (LM) with external modules so that it gains abilities beyond pure natural language modeling, specifically reasoning, tool use, and acting. With external modules connected, the model can not only solve more types of problems but also achieve breakthroughs on natural language processing tasks themselves.

This article presents a survey of augmented language models, followed by several recently published papers on specific application methods and frameworks.

A Survey of Augmented Language Models

English Title: Augmented Language Models: a Survey

Chinese Title: A Review of Augmented Language Models

Paper Address: http://arxiv.org/abs/2302.07842

Interpretation: https://blog.csdn.net/xieyan0811/article/details/130910473?spm=1001.2014.3001.5501

(The interpretation runs to nearly 5,000 words, too long to reproduce here.)

A survey paper from Meta, published on 2023-02-15.

The paper is organized around methodology. It is divided into six parts (introduction, reasoning, using tools and acting, learning methods, discussion, and conclusion), with 22 pages of main text.

For readers already familiar with LMs, the paper introduces no surprising new methods. However, it offers a comprehensive and meticulous collation of existing methods, giving a panoramic overview with detailed references to the relevant literature and software examples. It is a good survey of the field and works well as introductory reading.

Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models

This article is from the University of California & Microsoft, published on 2023-04-19.

English title: Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models

Chinese title: Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models

Paper address: http://arxiv.org/abs/2304.09842

Interpretation:

- Goal: Combine an LLM with other tools to solve domain-specific problems. The framework builds a bridge between different types of data and various modeling tools, and uses the LLM to decide the order and manner of tool calls, which previously had to be designed by hand.

- Current problem: Because of their inherent limitations, LLMs cannot access up-to-date information, cannot use external tools, and cannot perform precise mathematical reasoning.

- Effect: Combined with GPT-4, it achieves significant improvements on ScienceQA (86.54% accuracy) and TabMWP (98.78% accuracy).

- Method:

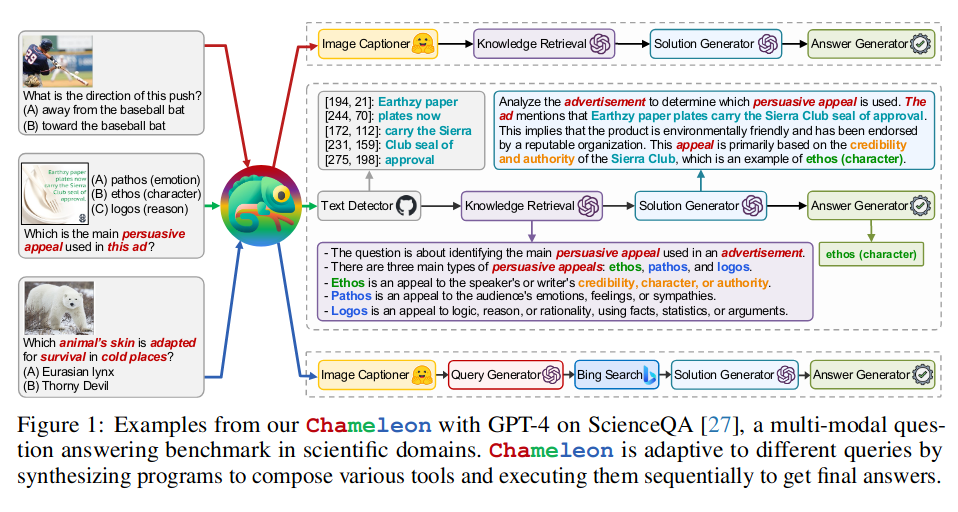

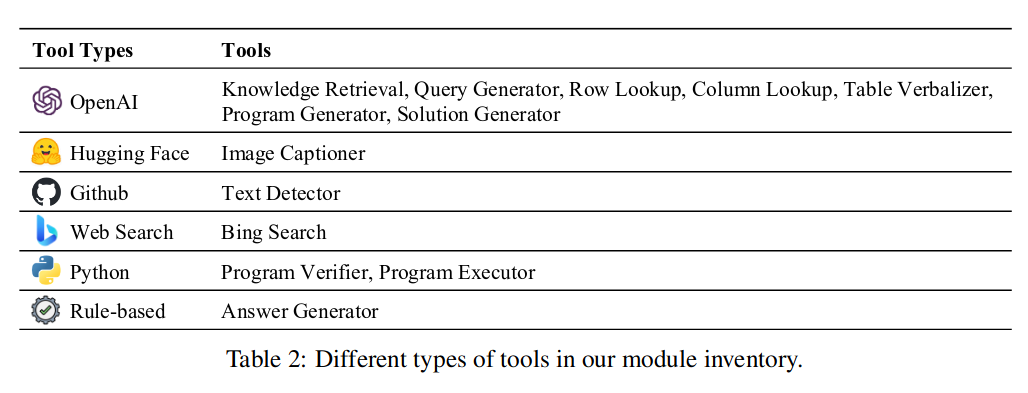

The paper proposes Chameleon, a plug-and-play compositional reasoning framework that can combine multiple tools, including LLMs, off-the-shelf vision models, web search engines, Python functions, and rule-based modules. An LLM acts as a natural-language planner: it decomposes the problem into a chain of tool calls (in effect, designing the workflow), the tools are then executed to solve the problem collaboratively, and an answer generator produces the final answer.

Figure-1 shows three examples of answering questions about images. For the second question, it shows the full process: text recognition, information retrieval, solution generation, and finally answer generation.
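The plan-then-execute loop described above can be sketched in a few lines of Python. This is a minimal illustration, not Chameleon's code: the tool names, their placeholder outputs, and the hard-coded `plan` function are all hypothetical stand-ins (in Chameleon the planner is a prompted LLM and the tools are real models, search engines, and programs). Each tool reads from and writes to a shared cache, so later tools can build on earlier results.

```python
from typing import Callable, Dict, List

Cache = Dict[str, str]

# Hypothetical tools: each reads from and writes to a shared cache.
def text_detector(cache: Cache) -> None:
    # Stand-in for an OCR model run on the question's image.
    cache["detected_text"] = "<text found in the image>"

def knowledge_retrieval(cache: Cache) -> None:
    # Stand-in for an LLM or search call that fetches background knowledge.
    cache["knowledge"] = f"<facts related to {cache['detected_text']}>"

def solution_generator(cache: Cache) -> None:
    # Stand-in for an LLM that writes a step-by-step solution.
    cache["solution"] = f"<reasoning over {cache['knowledge']}>"

def answer_generator(cache: Cache) -> None:
    # Stand-in for the module that produces the final answer.
    cache["answer"] = f"<answer derived from {cache['solution']}>"

TOOLS: Dict[str, Callable[[Cache], None]] = {
    "Text_Detector": text_detector,
    "Knowledge_Retrieval": knowledge_retrieval,
    "Solution_Generator": solution_generator,
    "Answer_Generator": answer_generator,
}

def plan(question: str) -> List[str]:
    # In Chameleon the planner is an LLM; here the tool chain is hard-coded.
    return ["Text_Detector", "Knowledge_Retrieval",
            "Solution_Generator", "Answer_Generator"]

def run(question: str) -> Cache:
    cache: Cache = {"question": question}
    program = plan(question)
    # Reject plans that reference tools we do not have.
    unknown = [name for name in program if name not in TOOLS]
    if unknown:
        raise ValueError(f"planner proposed unknown tools: {unknown}")
    for name in program:  # execute the tools in the planned order
        TOOLS[name](cache)
    cache["program"] = " -> ".join(program)
    return cache

result = run("What does the sign in the picture say?")
print(result["program"])
print(result["answer"])
```

Keeping the planner separate from the executor is what makes the design plug-and-play: adding a tool means registering one more entry in the tool table, while the planner only needs to know its name and purpose.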

Among the available tools are:

SuperICL: Small Models as Plug-ins for Large Language Models

This article is from the University of California & Microsoft, published on 2023-05-15.

English Title: Small Models are Valuable Plug-ins for Large Language Models

Chinese Title: Small Models are Valuable Plug-ins for Large Language Models

Paper Address: http://arxiv.org/abs/2305.08848

Interpretation:

- Goal: Use large language models (LLMs) to improve prediction performance on tasks with large-scale supervised data.

- Current problem: Because the context length is limited, only a few in-context learning (ICL) examples can be provided to the LLM in a prompt.

- Effect: It shows advantages in prediction performance, stability, multilingual tasks, and interpretability.

- Method:

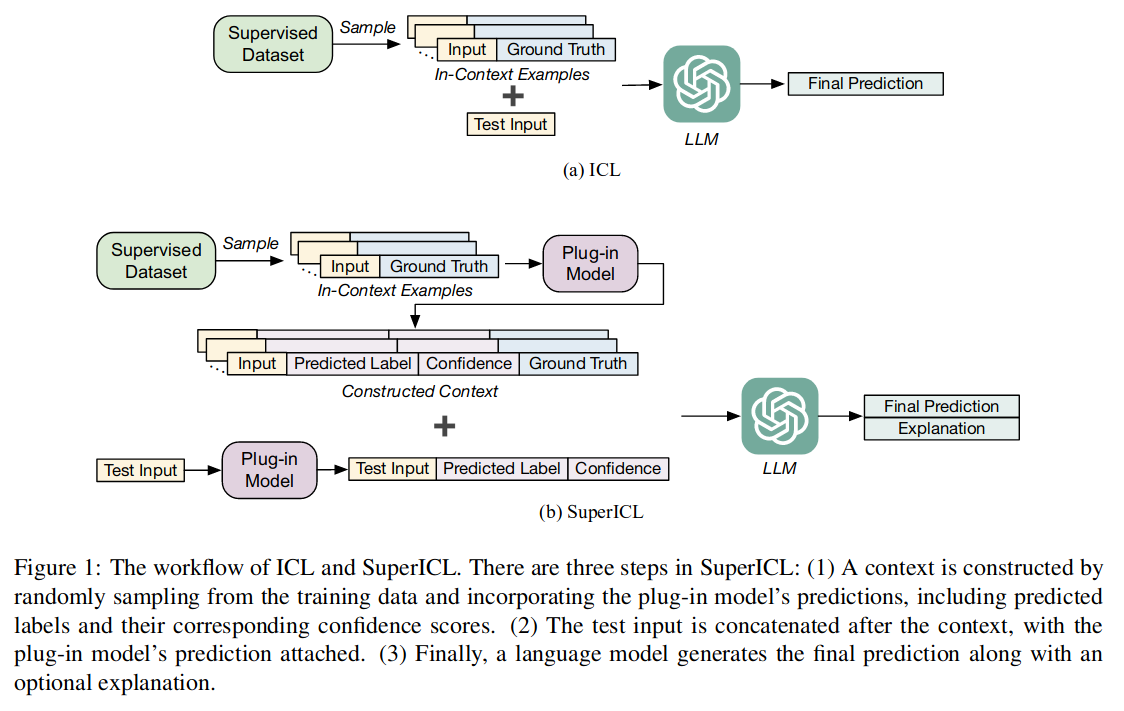

The paper proposes SuperICL, which treats the LLM as a black box and combines it with a locally fine-tuned small model to improve performance on supervised tasks.

Previously, supervised examples and the test input were passed directly to the LLM to obtain the answer. SuperICL instead first fine-tunes a small local model on the training set and uses it to predict a label and confidence score for each in-context example and for the test input. These predictions are passed to the LLM along with the test input, so the LLM sees not only the small model's predictions but also how confident it is in them, which leads to better reasoning and interpretability.

Figure-1(a) shows how standard ICL works: it samples in-context examples from the training set and passes them to the LLM together with the test input to get the output. Figure-1(b) shows the workflow of SuperICL, which consists of three steps:

- The context is constructed by randomly sampling examples from the training data and attaching the local model's predictions to each, namely the predicted label and its confidence score.

- The test input is appended after the context, together with the local model's prediction on it.

- The language model generates the final predictions as well as explanations.
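The three steps above amount to a prompt-construction procedure, sketched below in Python. The prompt template, the `Example` type, and the keyword-based `toy_local_model` are hypothetical; in the paper the local model is a fine-tuned classifier and the exact wording of the prompt may differ. Step 3 happens inside the LLM, which completes the final `Label:` line.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Example:
    text: str
    label: str

# Hypothetical interface for the fine-tuned small model: text -> (label, confidence).
LocalModel = Callable[[str], Tuple[str, float]]

def format_item(text: str, model: LocalModel) -> str:
    pred, conf = model(text)
    return (f"Input: {text}\n"
            f"Local model prediction: {pred} (confidence: {conf:.2f})\n")

def build_supericl_prompt(train: List[Example], test_input: str,
                          local_model: LocalModel, k: int = 4,
                          seed: int = 0) -> str:
    rng = random.Random(seed)
    # Step 1: randomly sample demonstrations and annotate each with the
    # local model's predicted label and confidence, plus the gold label.
    demos = rng.sample(train, min(k, len(train)))
    parts = [format_item(ex.text, local_model) + f"Label: {ex.label}\n"
             for ex in demos]
    # Step 2: append the test input with the local model's prediction on it.
    # Step 3 happens in the LLM, which completes the final "Label:" line
    # (and, if asked, an explanation).
    parts.append(format_item(test_input, local_model) + "Label:")
    return "\n".join(parts)

def toy_local_model(text: str) -> Tuple[str, float]:
    # Hypothetical keyword classifier standing in for a fine-tuned small model.
    return ("positive", 0.90) if "good" in text else ("negative", 0.60)

train_set = [Example("a good film", "positive"), Example("dull and slow", "negative"),
             Example("good acting", "positive"), Example("not worth it", "negative")]
print(build_supericl_prompt(train_set, "a good movie", toy_local_model, k=2))
```

Showing the gold label next to the small model's prediction in each demonstration lets the LLM see where the small model is right or wrong, so it can learn when to follow the local prediction and when to override a low-confidence one.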

PKG: Augmenting Large Language Models with Parametric Knowledge Guiding

This article is from the University of Hong Kong & Microsoft, published on 2023-05-18.

English title: Augmented Large Language Models with Parametric Knowledge Guiding

Chinese title: Parametric Knowledge Guidance Enhanced Large Language Model

Paper Address: http://arxiv.org/abs/2305.04757

Interpretation:

- Goal: Make LLMs more effective on domain-specific, knowledge-intensive tasks.

- Current problem: Solving specific problems often requires domain knowledge, up-to-date knowledge, and private data that the LLM may not have learned.

- Effect: Improves model performance on a range of domain-knowledge-intensive tasks, including factual (+7.9%), tabular (+11.9%), medical (+3.0%), and multimodal (+8.1%) knowledge.

- Method:

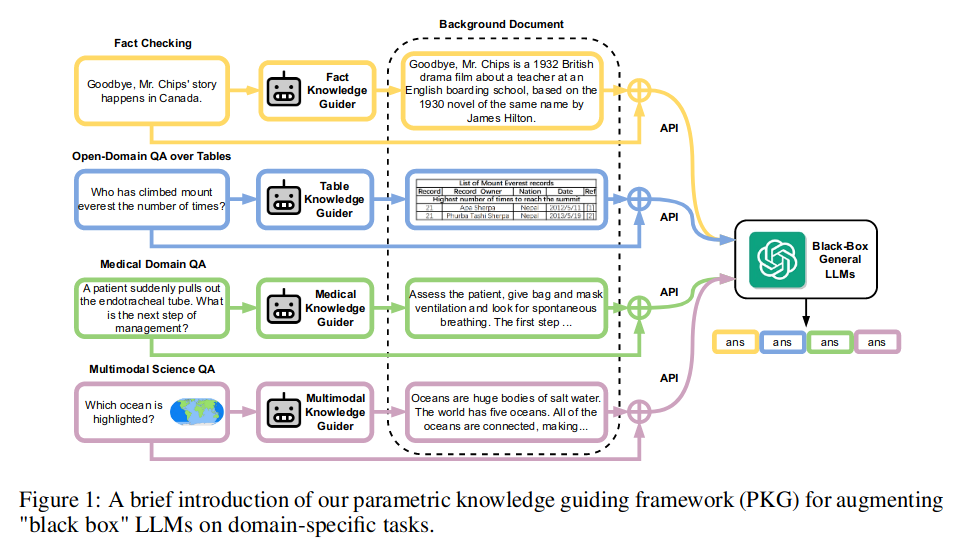

The paper proposes PKG (Parametric Knowledge Guiding), a framework that pairs a local model with the LLM. The local model is built on the open-source LLaMA model; it stores offline domain knowledge in its parameters and, given a question, outputs the relevant background knowledge as text. This background is then passed to the large model together with the question.
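The two-stage flow just described can be sketched as follows. This is an illustration under stated assumptions, not the paper's implementation: `knowledge_model` and `llm` are hypothetical callables, the prompt template is invented, and the toy stand-ins (a keyword lookup instead of a LLaMA-based module, and an `echo_llm` that simply returns its prompt instead of calling an API) exist only so the sketch runs.

```python
from typing import Callable, Dict

def pkg_answer(question: str,
               knowledge_model: Callable[[str], str],
               llm: Callable[[str], str]) -> str:
    # Stage 1: the local model turns knowledge stored in its parameters
    # into explicit background text for this question.
    background = knowledge_model(question)
    # Stage 2: the background is passed to the black-box LLM with the question.
    prompt = f"Background: {background}\nQuestion: {question}\nAnswer:"
    return llm(prompt)

# Hypothetical toy stand-ins so the sketch runs end to end.
TOY_KNOWLEDGE: Dict[str, str] = {
    "aspirin": "Aspirin is a nonsteroidal anti-inflammatory drug (NSAID).",
}

def toy_knowledge_model(question: str) -> str:
    # A real PKG module generates this text; here we do a keyword lookup.
    for key, fact in TOY_KNOWLEDGE.items():
        if key in question.lower():
            return fact
    return "No relevant background found."

def echo_llm(prompt: str) -> str:
    # Placeholder for an API call; simply returns the prompt it would send.
    return prompt

print(pkg_answer("What class of drug is aspirin?", toy_knowledge_model, echo_llm))
```

Because the LLM is only ever called through a prompt, this design works with API-only models whose weights cannot be updated; all domain adaptation happens in the small local model.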

Figure-1 in the paper shows the workflow of PKG: