1. A simple example

Consider the following example sentence: A dog ate the food because it was hungry.

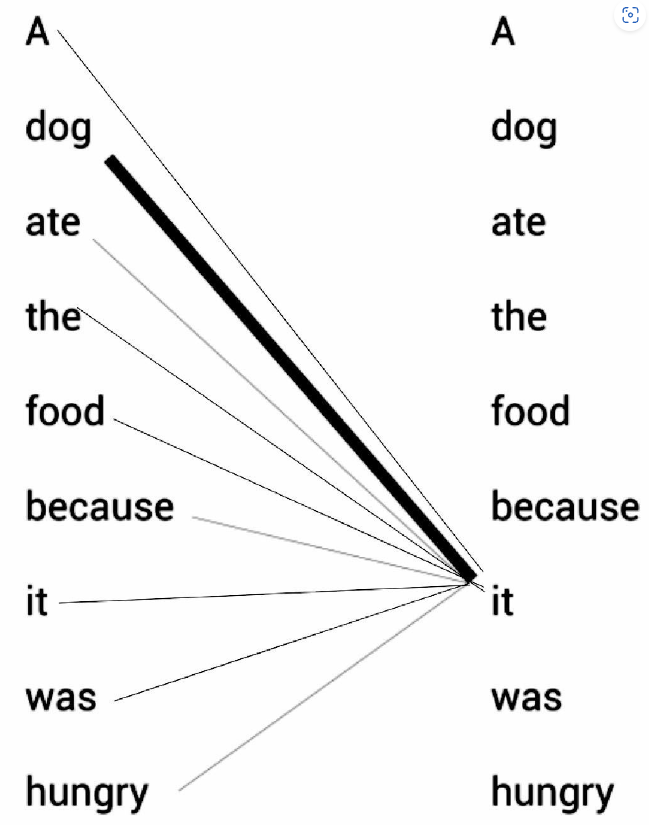

The pronoun "it" in this sentence could refer to either "dog" or "food". As readers, we naturally understand that "it" refers to the dog, not the food. But how should a computer model decide between these two candidates? This is where the self-attention mechanism helps.

Taking the sentence above as an example, the model first computes the feature value (the vector representation) of the word "A", then that of "dog", then that of "ate", and so on. When computing the feature value of each word, the model considers the relationship between that word and every other word in the sentence. These word-to-word relationships let the model better understand the meaning of the current word.
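This traversal can be written compactly. The following is only a simplified sketch: the symbols $x_j$ (input vector of word $j$), $z_i$ (feature value of word $i$), $\alpha_{ij}$ (relationship weight), and the scoring function $s$ are notation assumed here for illustration, and a full Transformer layer adds further learned projections. For a sentence of $n$ words, the feature value of word $i$ is a weighted sum over all words:

$$
z_i = \sum_{j=1}^{n} \alpha_{ij}\, x_j,
\qquad
\alpha_{ij} = \frac{\exp\big(s(x_i, x_j)\big)}{\sum_{k=1}^{n} \exp\big(s(x_i, x_k)\big)}
$$

The weights $\alpha_{ij}$ are non-negative and sum to 1 over $j$, so a larger $\alpha_{ij}$ means word $j$ contributes more to the feature value of word $i$.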

For example, when computing the feature value of "it", the model relates "it" to every other word in the sentence to better understand its meaning.

As the figure shows, the feature value of "it" is computed from its relationships with the other words in the sentence. From these connections, the model can tell that "it" refers to "dog" rather than "food" in the original sentence: the relationship between "it" and "dog" is the strongest, which is why the line connecting them is drawn thicker than the lines to the other words.
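To make the idea concrete, here is a minimal sketch in plain Python. The 2-D word vectors are hypothetical values picked by hand so that "it" scores highest against "dog"; a real model learns its vectors from data. The helper names (`softmax`, `dot`, `attend`) are also assumptions for illustration, and the score is a plain dot product rather than the full mechanism a trained model would use.

```python
import math

words = ["A", "dog", "ate", "the", "food", "because", "it", "was", "hungry"]

# Hypothetical, hand-picked 2-D vectors (NOT learned, NOT from a real model).
vectors = {
    "A": [0.1, 0.1],
    "dog": [2.0, 0.5],
    "ate": [0.3, 0.4],
    "the": [0.1, 0.0],
    "food": [0.5, 1.5],
    "because": [0.0, 0.2],
    "it": [1.0, 0.1],
    "was": [0.2, 0.1],
    "hungry": [1.0, 0.0],
}


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product, used here as the relationship score between two words."""
    return sum(a * b for a, b in zip(u, v))


def attend(query_word, words, vectors):
    """Return (weights per word, feature vector) for query_word."""
    q = vectors[query_word]
    scores = [dot(q, vectors[w]) for w in words]
    weights = softmax(scores)
    # The feature value is the weighted sum of all word vectors.
    feature = [
        sum(wt * vectors[w][d] for wt, w in zip(weights, words))
        for d in range(len(q))
    ]
    return dict(zip(words, weights)), feature


weights, feature = attend("it", words, vectors)
for w in words:
    print(f"{w:>8}: {weights[w]:.3f}")
print("feature value of 'it':", [round(x, 3) for x in feature])
```

With these toy vectors, the largest weight falls on "dog", which corresponds to the thickest connection line described above; the feature value of "it" is therefore pulled mostly toward the vector of "dog".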

We now have a preliminary understanding of what the self-attention mechanism is; next