Title: InternImage: Exploring Large-Scale Vision Foundation Models with Deformable Convolutions

Paper: https://arxiv.org/abs/2211.05778

Code: https://github.com/OpenGVLab/InternImage

Introduction

InternImage achieved 65.4 mAP on the COCO dataset, the highest record at the time of writing, and the result has already drawn wide media attention. The paper has been accepted to CVPR 2023, and this article presents an interpretation from the research team. The related models, code, and TensorRT deployment have recently been open-sourced, and an inference service API for the InternImage model is available. Everyone is welcome to try it out!

InternImage is a new convolution-based foundation model. Unlike traditional CNNs, it uses deformable convolution as its core operator. This gives the model the large effective receptive field that downstream tasks such as detection and segmentation need. It also lets the model perform adaptive spatial aggregation conditioned on the input and the task.

Motivation

Limitations of Traditional Convolutional Neural Networks

Scaling up models is an important strategy for improving the quality of feature representations. In computer vision, increasing the number of parameters enhances the representation learning ability of deep models. It also enables them to learn and acquire knowledge from massive data. ViT and Swin Transformer were the first to scale deep models to the 2-billion and 3-billion parameter levels. Their single-model classification accuracy on ImageNet exceeded 90%, far surpassing traditional CNNs and small-scale models and breaking through a long-standing technical bottleneck. However, traditional CNNs lack long-range dependency and spatial relationship modeling, so they have not been able to scale the way Transformers do. The researchers summarize the differences between traditional CNNs and vision Transformers at two levels:

Operator level

At the operator level, the multi-head attention mechanism of vision Transformers provides long-range dependencies and adaptive spatial aggregation. Thanks to this, vision Transformers can learn more powerful and robust representations than CNNs from massive data.

Model architecture

At the architecture level, vision Transformers include advanced components beyond multi-head attention that CNNs typically lack. Examples are layer normalization (LN), feed-forward networks (FFN), and GELU activations.

Some recent works use large-kernel convolutions to capture long-range dependencies. Even so, they still lag far behind state-of-the-art vision Transformers in model scale and accuracy.

Further extending deformable convolutional networks

InternImage improves the scalability of convolutional models and alleviates their strong inductive bias by redesigning both the operator and the model architecture:

- DCNv3 operator: built on DCNv2, it introduces shared projection weights, a multi-group mechanism, and normalization of the sampling-point modulation scalars.
- Basic module: integrates advanced components into the basic unit used to build the model.
- Module stacking rules: standardize the model's hyperparameters, such as width, depth, and number of groups, when scaling up the model.

This work aims to build a CNN that scales efficiently to a large number of parameters. First, the researchers redesign the deformable convolution operator DCNv2 to capture long-range dependencies and weaken the inductive bias. Next, they combine the adjusted operator with advanced components to form the basic unit module. Finally, they explore and implement stacking and scaling rules for these modules. The result is a foundation model with a large number of parameters that can learn powerful representations from massive data.

Method

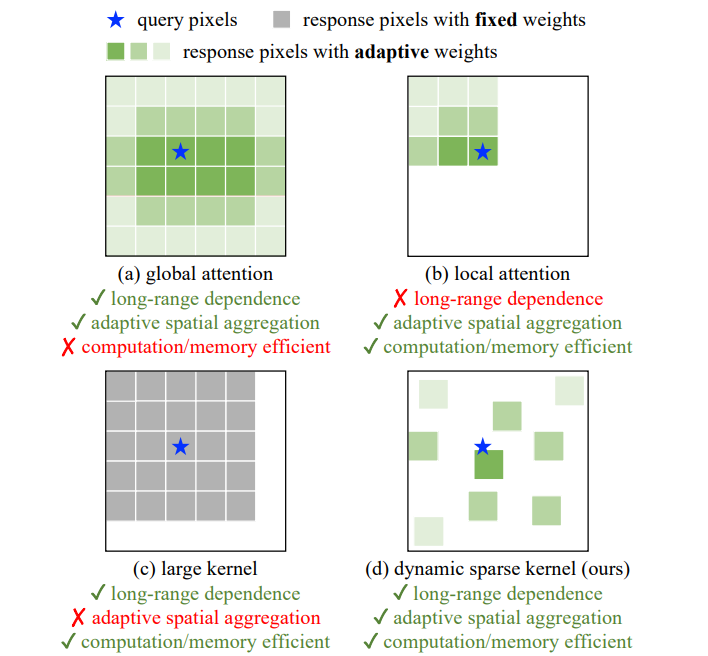

At the operator level, the researchers first summarize the main differences between convolution and other mainstream operators. Current Transformer-based models rely mainly on multi-head self-attention to scale up. This operator captures long-range dependencies, which lets it relate distant features. It also performs spatially adaptive aggregation, which lets it model pixel-level relationships.

However, global attention requires enormous computation and memory, which makes efficient training and fast convergence difficult. Local attention, in turn, cannot capture dependencies on distant features. Large-kernel dense convolution lacks adaptive spatial aggregation, so it struggles to overcome the inherent inductive bias of convolution, which hinders scaling. InternImage therefore designs a dynamic sparse convolution operator. It achieves a global-attention-like effect without excessive computation and memory, which enables efficient training.
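The cost argument can be made concrete with a back-of-envelope sketch. This is our own simplification, not taken from the paper, and it counts multiply-accumulates for the spatial-aggregation step only. Global attention over N = H·W tokens costs on the order of N²·C. A sparse sampling operator that reads K points per location costs about N·K·C. The sketch ignores projections, softmax, and bilinear-interpolation overhead, and it assumes K = 9 (a 3×3 grid).

```python
# Rough multiply-accumulate (MAC) counts for spatial aggregation only.
# Illustrative simplification: projections and interpolation are ignored.


def global_attention_macs(h: int, w: int, c: int) -> int:
    """QK^T (N*N*C) plus the attention-weighted sum of V (N*N*C)."""
    n = h * w
    return 2 * n * n * c


def sparse_sampling_macs(h: int, w: int, c: int, k: int = 9) -> int:
    """Each of the N output locations aggregates only K sampled points."""
    n = h * w
    return n * k * c


if __name__ == "__main__":
    for side in (56, 112, 224):
        att = global_attention_macs(side, side, 64)
        dcn = sparse_sampling_macs(side, side, 64)
        print(f"{side}x{side}: attention/sparse ~ {att / dcn:.0f}x")
```

The ratio is 2N/K, so it grows quadratically with the image side length. That is why dense global attention becomes impractical at the high resolutions used in detection and segmentation.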

Building on DCNv2, the researchers redesigned and adjusted the operator and proposed DCNv3. The specific improvements are as follows.

Shared projection weights

As in regular convolution, each sampling point in DCNv2 has its own projection weights, so the parameter count grows linearly with the total number of sampling points. To reduce parameter and memory complexity, the researchers borrow the idea of separable convolution. The projection weights become position-independent and are shared among all sampling points. Position dependence is preserved through the per-point modulation scalars.
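The parameter saving can be checked with simple arithmetic. The sketch below counts only the projection weights applied to sampled features and leaves out the offset and modulation branches. It assumes K = 9 sampling points and equal input and output channels C. The function names are hypothetical.

```python
def dcnv2_projection_params(channels: int, kernel_points: int) -> int:
    # Every sampling point k owns its own C x C projection matrix w_k.
    return kernel_points * channels * channels


def dcnv3_projection_params(channels: int) -> int:
    # One C x C projection shared by all sampling points; position-specific
    # behavior comes from the per-point modulation scalars instead.
    return channels * channels


if __name__ == "__main__":
    c, k = 256, 9
    print(dcnv2_projection_params(c, k), dcnv3_projection_params(c))
```

For C = 256 and K = 9, the per-point weights of DCNv2 need 589,824 parameters, versus 65,536 for a single shared matrix. That is a K-fold reduction.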

Multi-group mechanism

The multi-group design was first introduced in group convolution and is widely used in the multi-head self-attention of Transformers. Combined with adaptive spatial aggregation, it effectively improves feature diversity. Inspired by this, the researchers divide the spatial aggregation process into several groups, each with its own sampling offsets. As a result, different groups within a single DCNv3 layer have different spatial aggregation patterns, which yields rich feature diversity.
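Shared projection weights and the multi-group mechanism can be combined into a single expression for the aggregation at an output location $p_0$. The following is our transcription of the operator, using G groups and K sampling points per group:

- $\mathbf{w}_g$ is the position-independent projection of group g.
- $m_{gk}$ is the modulation scalar of the k-th point in group g.
- $\mathbf{x}_g$ is the slice of input channels belonging to group g.
- $p_k$ is the k-th location of the regular sampling grid.
- $\Delta p_{gk}$ is the learned offset.

$$
\mathbf{y}(p_0) = \sum_{g=1}^{G} \sum_{k=1}^{K} \mathbf{w}_g \, m_{gk} \, \mathbf{x}_g\!\left(p_0 + p_k + \Delta p_{gk}\right)
$$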

Normalization of sampling-point modulation scalars

DCNv2 normalizes its modulation scalars element-wise with a sigmoid. To mitigate instability as model capacity grows, DCNv3 applies softmax normalization along the sampling points instead. The modulation scalars of each group now sum to 1, rather than summing to anywhere between 0 and K. This makes training of large-scale models more stable. It also establishes connections among all sampling points.
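The three changes can be put together in a minimal pure-Python sketch that evaluates DCNv3 at a single output location. It is illustrative only, and several parts are assumptions:

- The offsets and modulation logits are passed in, rather than predicted from the input by a lightweight convolution.
- The sampling grid is 3×3 (K = 9).
- Samples outside the feature map read as zero.
- One shared matrix `proj` stands in for the operator's projection layers.

`dcnv3_at` and the other names are hypothetical, not the official API.

```python
import math
from typing import List, Sequence, Tuple

Feature = List[List[List[float]]]  # indexed as x[row][col][channel]

# Regular 3x3 sampling grid p_k (K = 9) as (dy, dx) pairs; index 4 is (0, 0).
GRID: List[Tuple[int, int]] = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]


def softmax(logits: Sequence[float]) -> List[float]:
    """Normalize over the K sampling points so the weights sum to 1."""
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def bilinear(x: Feature, py: float, px: float, c0: int, c1: int) -> List[float]:
    """Bilinearly sample channels [c0, c1) at fractional (py, px); zero outside."""
    h, w = len(x), len(x[0])
    y0, x0 = math.floor(py), math.floor(px)
    out = [0.0] * (c1 - c0)
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                wt = (1.0 - abs(py - yy)) * (1.0 - abs(px - xx))
                for i in range(c1 - c0):
                    out[i] += wt * x[yy][xx][c0 + i]
    return out


def dcnv3_at(x: Feature, p0: Tuple[int, int], groups: int,
             offsets: Sequence[Sequence[Tuple[float, float]]],  # [G][K] (dy, dx)
             mod_logits: Sequence[Sequence[float]],             # [G][K]
             proj: Sequence[Sequence[float]]) -> List[float]:   # [C_out][C]
    c = len(x[0][0])
    cg = c // groups  # channels per group
    aggregated: List[float] = []
    for g in range(groups):
        m = softmax(mod_logits[g])  # m_gk: one softmax per group, over k
        acc = [0.0] * cg
        for k, (dy, dx) in enumerate(GRID):
            ody, odx = offsets[g][k]  # per-group offset (given, not predicted)
            s = bilinear(x, p0[0] + dy + ody, p0[1] + dx + odx,
                         g * cg, (g + 1) * cg)
            for i in range(cg):
                acc[i] += m[k] * s[i]
        aggregated.extend(acc)
    # Shared projection: identical for every k, so apply it once after summing.
    return [sum(wij * aj for wij, aj in zip(row, aggregated)) for row in proj]
```

The projection is shared, so it can be applied once to the softmax-weighted sum instead of once per sampling point. This is where the K-fold parameter saving shows up in the computation.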

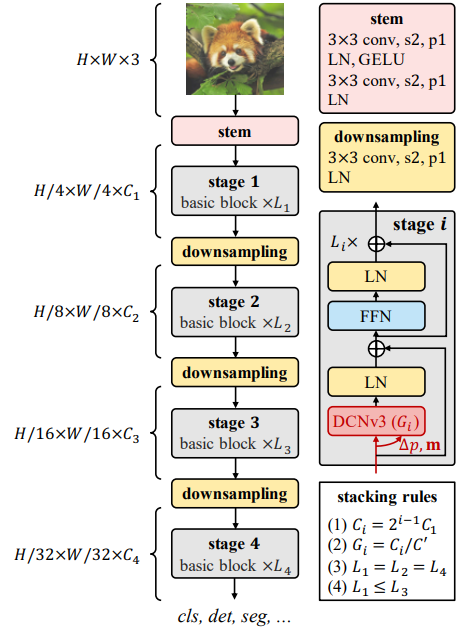

With the DCNv3 operator in place, the researchers first standardize the details of the basic module and the other layers of the model. They then build InternImage by exploring stacking strategies for these basic modules. Finally, following the proposed scaling rules, they construct models with different parameter counts.

Basic module

Traditional CNNs widely use a bottleneck structure. InternImage instead adopts a basic module closer to ViTs, equipped with more advanced components: GELU, layer normalization (LN), and a feed-forward network (FFN). These components have proven effective across a wide range of vision tasks. The figure above shows the details of the basic module. Its core operator is DCNv3, which predicts the sampling offsets and modulation scalars by passing the input features through a lightweight separable convolution. The other components follow the standard Transformer design.
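The block structure can be sketched in a few lines of pure Python operating on a list of per-location channel vectors. This is a simplified illustration with several assumptions:

- `spatial_op` is a hypothetical stand-in for DCNv3.
- LayerNorm has no learnable affine parameters.
- Biases, dropout, and drop-path are omitted.

The sketch does not assert where normalization sits in InternImage's official blocks. Both pre-norm and post-norm residual arrangements are exposed through a `post_norm` flag.

```python
import math
from typing import Callable, List, Sequence

Tokens = List[List[float]]  # [N][C]: one C-dim vector per spatial location
Matrix = Sequence[Sequence[float]]


def layer_norm(v: Sequence[float], eps: float = 1e-6) -> List[float]:
    """LayerNorm over channels, without the learnable scale/shift."""
    mu = sum(v) / len(v)
    var = sum((a - mu) ** 2 for a in v) / len(v)
    return [(a - mu) / math.sqrt(var + eps) for a in v]


def gelu(a: float) -> float:
    """Exact GELU: a * Phi(a), with Phi the standard normal CDF."""
    return 0.5 * a * (1.0 + math.erf(a / math.sqrt(2.0)))


def matvec(m: Matrix, v: Sequence[float]) -> List[float]:
    return [sum(r * e for r, e in zip(row, v)) for row in m]


def ffn(v: Sequence[float], w1: Matrix, w2: Matrix) -> List[float]:
    """Two-layer MLP with GELU in between (biases omitted)."""
    return matvec(w2, [gelu(a) for a in matvec(w1, v)])


def add(a: Sequence[float], b: Sequence[float]) -> List[float]:
    return [p + q for p, q in zip(a, b)]


def basic_block(x: Tokens, spatial_op: Callable[[Tokens], Tokens],
                w1: Matrix, w2: Matrix, post_norm: bool = False) -> Tokens:
    if post_norm:
        # Post-norm: y = x + LN(Op(x)), then out = y + LN(FFN(y)).
        y = [add(xi, layer_norm(si)) for xi, si in zip(x, spatial_op(x))]
        return [add(yi, layer_norm(ffn(yi, w1, w2))) for yi in y]
    # Pre-norm: y = x + Op(LN(x)), then out = y + FFN(LN(y)).
    normed = [layer_norm(xi) for xi in x]
    y = [add(xi, si) for xi, si in zip(x, spatial_op(normed))]
    return [add(yi, ffn(layer_norm(yi), w1, w2)) for yi in y]
```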

Stacking rules

To make the block stacking process explicit, three stacking rules are proposed:

- First, the number of channels in the last three stages is determined by the number of channels in the first stage.
- Second, the number of groups in each module corresponds to the number of channels in its stage.
- Third, the stacking pattern is fixed to "AABA". Stages 1, 2, and 4 stack the same number of modules, which is no greater than the number in stage 3.

On this basis, a model with about 30M parameters is chosen as the base model. Its specific settings are:

- The stem outputs 64 channels.
- The number of groups in each stage is 1/16 of that stage's input channels.
- Stages 1, 2, and 4 each stack 4 modules.
- Stage 3 stacks 18 modules.
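The stacking rules and the base configuration can be expressed as a small config generator. The text only says that later stages are determined by the first. We assume rule 1 means doubling the channels from stage to stage ($C_i = 2^{i-1} C_1$). `group_channels` is the fixed per-group width: 16 here, matching "1/16 of the channels". The function and class names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StageConfig:
    channels: List[int]  # C_1..C_4
    groups: List[int]    # G_1..G_4
    depths: List[int]    # L_1..L_4


def stack_config(c1: int, group_channels: int, l1: int, l3: int) -> StageConfig:
    """Build a 4-stage config from the three stacking rules (hypothetical helper)."""
    if l1 > l3:
        raise ValueError('"AABA" requires L1 = L2 = L4 <= L3')
    channels = [c1 * 2 ** i for i in range(4)]        # rule 1 (assumed doubling)
    groups = [c // group_channels for c in channels]  # rule 2: groups track width
    depths = [l1, l1, l3, l1]                         # rule 3: "AABA"
    return StageConfig(channels, groups, depths)


base = stack_config(c1=64, group_channels=16, l1=4, l3=18)
print(base)
# StageConfig(channels=[64, 128, 256, 512], groups=[4, 8, 16, 32], depths=[4, 4, 18, 4])
```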

Model scaling rules

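Starting from the optimal base model under the above constraints, the researchers standardize two scaling dimensions. The first is depth $D$, the total number of stacked modules, $D = 3L_1 + L_3$. The second is width, the number of channels $C_1$. Depth and width are scaled by factors $\alpha$ and $\beta$ along a composite coefficient $\phi$:

$D' = \alpha^{\phi} D$ and $C_1' = \beta^{\phi} C_1$, subject to $\alpha \ge 1$, $\beta \ge 1$, and $\alpha\beta^{1.99} \approx 2$.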
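Here is a minimal sketch of this compound scaling rule. It assumes depth is counted as $D = 3L_1 + L_3$, so the 30M base model has $D = 30$, and that scaled values are rounded to the nearest integer. The sketch does not cover how the scaled depth is redistributed over the four stages. The values of α and β in the example call only approximately satisfy the constraint; they are not presented as the paper's tuned values. All function names are hypothetical.

```python
from typing import Tuple


def total_depth(l1: int, l3: int) -> int:
    """Total stacked modules under the "AABA" pattern: L1 + L1 + L3 + L1."""
    return 3 * l1 + l3


def satisfies_budget(alpha: float, beta: float, tol: float = 0.05) -> bool:
    """Check alpha >= 1, beta >= 1 and alpha * beta**1.99 ~= 2 within tol."""
    return alpha >= 1 and beta >= 1 and abs(alpha * beta ** 1.99 - 2.0) <= tol


def scale_depth_width(d: int, c1: int, alpha: float, beta: float,
                      phi: float) -> Tuple[int, int]:
    """Compound scaling D' = alpha**phi * D and C1' = beta**phi * C1, rounded."""
    if alpha < 1 or beta < 1:
        raise ValueError("alpha and beta must both be >= 1")
    return round(alpha ** phi * d), round(beta ** phi * c1)


if __name__ == "__main__":
    d0 = total_depth(l1=4, l3=18)  # base model: 3 * 4 + 18 = 30
    alpha, beta = 1.09, 1.36       # example values; alpha * beta**1.99 is about 2.01
    print(satisfies_budget(alpha, beta))
    for phi in range(4):
        print(phi, scale_depth_width(d0, 64, alpha, beta, phi))
```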

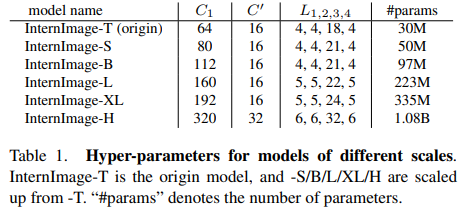

Following these rules, models at different scales were constructed, namely InternImage-T, S, B, L, XL, and H. Their specific parameters are:

Experimental results

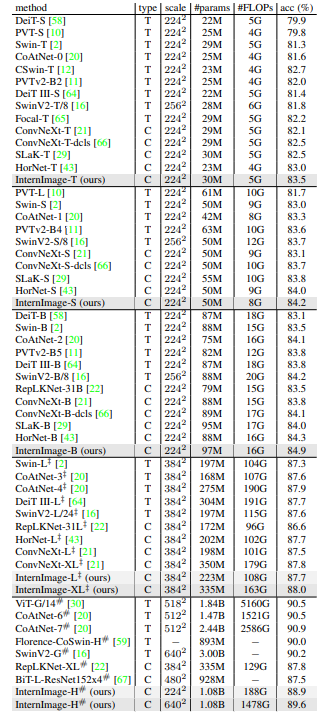

Image classification

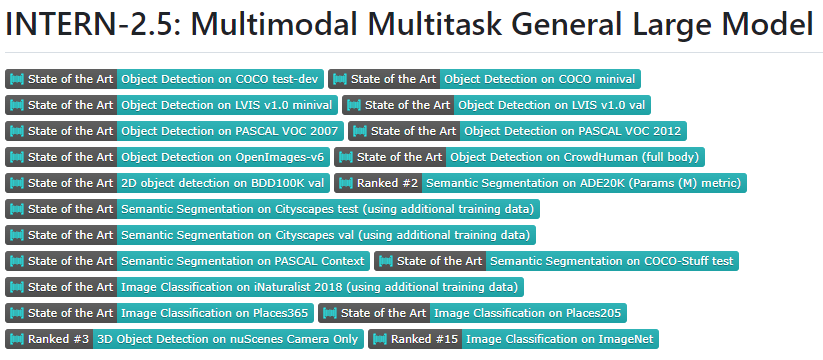

Using 427M samples from the public datasets LAION-400M, YFCC15M, and CC12M, InternImage-H achieved 89.6% top-1 accuracy on ImageNet-1K. With further recent development, the InternImage classification model has reached 90.1% on ImageNet-1K.

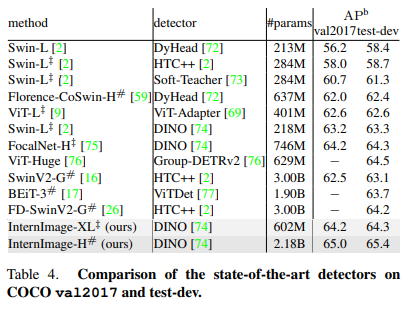

Object detection

The researchers used the largest model, InternImage-H, as the backbone and DINO as the detection framework. They pre-trained on Objects365 and then fine-tuned on COCO. The model achieved a record 65.4 mAP on COCO object detection, pushing the performance boundary of the benchmark.

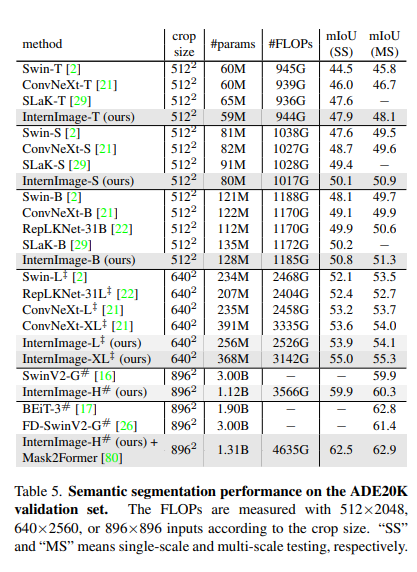

Semantic segmentation

For semantic segmentation, InternImage-H also performs very well. Combined with Mask2Former, it reaches 62.9 mIoU on ADE20K, the highest result at the time.

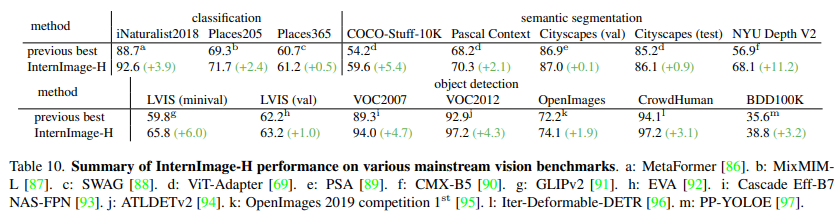

Other SOTA results: InternImage-H also achieved the best results on 15 other public datasets covering different scenarios and tasks, generally widening the gap with previous methods.

Summary

The researchers propose InternImage, a new CNN-based large-scale foundation model. It provides powerful representations for versatile vision tasks such as image classification, object detection, and semantic segmentation. The researchers adapted the flexible DCNv2 operator to meet the requirements of foundation models. Around this core operator, they developed a series of block, stacking, and scaling rules. Extensive experiments on object detection and semantic segmentation benchmarks show that InternImage matches or outperforms carefully designed large-scale vision Transformers trained on massive data. This indicates that CNNs are also a viable choice for large-scale vision foundation model research. Nevertheless, large-scale CNNs are still at an early stage of development, and the researchers hope InternImage can serve as a good starting point.