TOGETHER announced that RedPajama 7B has finished training and is fully open source under the Apache 2.0 license.

RedPajama is an open-source large model project whose outputs can be used commercially, jointly initiated by TOGETHER, the AAI CERC lab at the University of Montreal, EleutherAI, and LAION. It currently includes the RedPajama base dataset (5 TB), built following the LLaMA paper, which has been downloaded thousands of times since its release in April and has been used to train more than 100 models; RedPajama 3B; and the newly announced RedPajama 7B, which has now finished training.

- RedPajama-INCITE-7B-Base was trained on 1T tokens of the RedPajama-1T dataset and is released with 10 training checkpoints and open data generation scripts, allowing full reproducibility of the model. It is 4 points behind LLaMA-7B on HELM and 1.3 points behind Falcon-7B/MPT-7B.

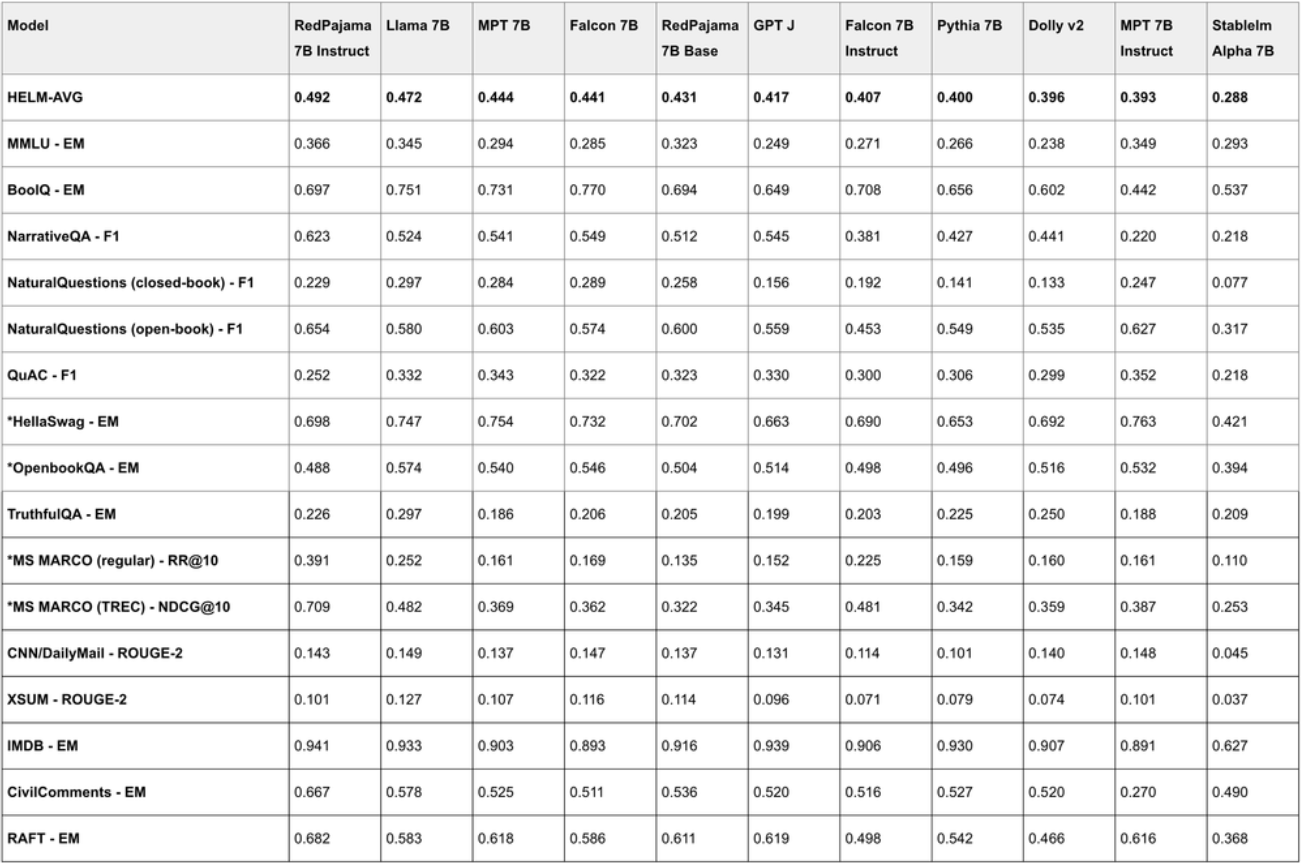

- RedPajama-INCITE-7B-Instruct is the highest-scoring open model on the HELM benchmark, making it well suited to a variety of tasks. On HELM it scores 2-9 points higher than LLaMA-7B and current state-of-the-art open models such as Falcon-7B (Base and Instruct) and MPT-7B (Base and Instruct).

- RedPajama-INCITE-7B-Chat is available in OpenChatKit, which includes a training script for easy fine-tuning of the model, and can be tried out now. The chat model is built entirely on open-source data and does not use distilled data from closed models such as OpenAI's, so it can be used in open or commercial applications.

Of these, the Base model is a foundation language model trained on the RedPajama dataset, using the same architecture as the Pythia models. LM Harness results:

The Instruct model is the result of fine-tuning the Base model for few-shot prompts. It is optimized for few-shot performance by training on a variety of NLP tasks from P3 (BigScience) and Natural Instructions (AI2). The Instruct version shows excellent performance on few-shot tasks, surpassing leading open models of similar scale; RedPajama-INCITE-7B-Instruct appears to be the best open instruction model at this scale. HELM benchmark results:

In addition, the team announced that a new version, RedPajama2, is under development, with the goal of training on a dataset of 2-3T tokens. The main plans are as follows:

- Try DoReMi-like techniques to automatically learn the mixture of different data sources.

- Include datasets such as Pile v1 (from Eleuther.ai) and Pile v2 (CarperAI) to enrich the diversity and scale of the current dataset.

- Process more CommonCrawl data.

- Explore more data deduplication strategies.

- Introduce a code dataset of at least 150 billion tokens to help improve quality on coding and reasoning tasks.
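
To make the deduplication item in the plan above more concrete, here is a minimal Python sketch of two common ideas: dropping exact duplicates by hashing normalized text, and dropping near-duplicates by Jaccard similarity over word shingles. This is only an illustration and not the RedPajama pipeline; the function names, the 3-word shingle size, and the 0.8 threshold are all assumptions chosen for the example.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text.strip().lower())


def shingles(text: str, n: int = 3) -> set:
    """Return the set of n-word shingles of the normalized text."""
    words = normalize(text).split()
    if len(words) < n:
        return {" ".join(words)} if words else set()
    return {" ".join(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity of two sets (defined as 1.0 when both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deduplicate(docs, near_threshold: float = 0.8):
    """Keep the first copy of each document, dropping exact and near duplicates.

    Hypothetical helper for illustration only: the pairwise comparison is
    quadratic in the number of kept documents.
    """
    seen_hashes = set()
    kept = []
    kept_shingles = []
    for doc in docs:
        # Exact duplicates: identical hash of the normalized text.
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue
        # Near duplicates: high shingle overlap with any kept document.
        sh = shingles(doc)
        if any(jaccard(sh, other) >= near_threshold for other in kept_shingles):
            continue
        seen_hashes.add(digest)
        kept.append(doc)
        kept_shingles.append(sh)
    return kept
```

Because every new document is compared against every kept one, this sketch only suits small collections; at web scale, approximate methods such as MinHash with locality-sensitive hashing are typically used instead.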

More details can be found on the official blog.