Continued from 04_PyTorch model training [Finetune weight initialization]: code

Code and output:

(1) Exclude the fc3 layer's parameters from the rest of the network's parameters

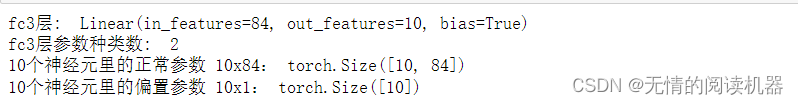

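```python
# net is the network built earlier in this series; its fc3 layer maps 84 features to 10 classes
print("fc3 layer: ", net.fc3)
print("Number of parameter tensors in fc3: ", len(list(net.fc3.parameters())))
print("Weights of the 10 neurons, 10x84: ", list(net.fc3.parameters())[0].shape)
print("Biases of the 10 neurons, one per neuron (10): ", list(net.fc3.parameters())[1].shape)

# Exclude the fc3 parameters from the original network parameters
# id() returns an object's unique identifier, an integer;
# in CPython it is the object's memory address.
# map() applies the given function to every element of a sequence.
# Result: the identifiers (memory addresses) of fc3's weight and bias tensors.
ignored_params = list(map(id, net.fc3.parameters()))

# filter() keeps only the elements for which the condition is true.
# In Python 3 it returns a lazy, single-use iterator rather than a list.
base_params = filter(lambda p: id(p) not in ignored_params, net.parameters())
```

(2) Set a separate learning rate for the fc3 layer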
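To see the id()-based split and the per-group learning rate in isolation, here is a minimal sketch on a hypothetical stand-in model (`TinyNet`, not the network from this post; only the attribute name `fc3` mirrors it). It uses a list comprehension in place of `filter()`, which gives the same selection but produces a reusable list.

```python
import torch
import torch.nn as nn
import torch.optim as optim


class TinyNet(nn.Module):
    """Hypothetical stand-in model; only the layer name fc3 mirrors the post."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(20, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        return self.fc3(torch.relu(self.fc1(x)))


net = TinyNet()

# Step (1): identities of fc3's weight and bias, then every other parameter
ignored_params = list(map(id, net.fc3.parameters()))
base_params = [p for p in net.parameters() if id(p) not in ignored_params]

# Step (2): two parameter groups; fc3 gets 10x the base learning rate
lr_init = 0.001
optimizer = optim.SGD(
    [{'params': base_params},
     {'params': net.fc3.parameters(), 'lr': lr_init * 10}],
    lr=lr_init, momentum=0.9, weight_decay=1e-4)

group_lrs = [g['lr'] for g in optimizer.param_groups]
group_sizes = [len(g['params']) for g in optimizer.param_groups]
print(group_lrs)    # [0.001, 0.01]
print(group_sizes)  # [2, 2]: fc1 weight + bias, fc3 weight + bias
```

If the split were wrong and a tensor ended up in both groups, `optim.SGD` would refuse to build the optimizer, because a parameter may belong to only one group.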

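```python
import torch.optim as optim

lr_init = 0.001  # base learning rate for all layers except fc3

# Two parameter groups: the base group uses the default lr (lr_init),
# while fc3 gets its own, 10x larger learning rate.
# base_params is a single-use filter iterator; the optimizer consumes it here.
optimizer = optim.SGD([
    {'params': base_params},
    {'params': net.fc3.parameters(), 'lr': lr_init * 10}],
    lr=lr_init, momentum=0.9, weight_decay=1e-4)
```

(3) Choose the loss function and the learning-rate schedule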
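`StepLR` multiplies every group's learning rate by `gamma` once every `step_size` epochs, so in epoch $e$ the rate is $\text{lr}_0 \cdot \gamma^{\lfloor e / \text{step\_size} \rfloor}$. A quick sketch of that behaviour, assuming one dummy parameter per group in place of the real network and `step_size=2` (instead of 50) so the decay shows up within six epochs:

```python
import torch
import torch.optim as optim

# Hypothetical setup: one dummy parameter per group stands in for the network
w_base = torch.nn.Parameter(torch.zeros(1))
w_fc3 = torch.nn.Parameter(torch.zeros(1))
optimizer = optim.SGD([{'params': [w_base]},
                       {'params': [w_fc3], 'lr': 0.01}], lr=0.001, momentum=0.9)

# step_size=2 (not 50) so the decay is visible within 6 epochs
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.1)

history = []
for epoch in range(6):
    history.append(list(scheduler.get_last_lr()))  # learning rates used in this epoch
    optimizer.step()   # one epoch of training would happen here (no grads, so no update)
    scheduler.step()   # decay once per epoch, after optimizer.step()

for epoch, lrs in enumerate(history):
    print(epoch, lrs)
```

Both groups decay by the same factor, so fc3 keeps its 10x ratio to the base group throughout training.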

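```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()  # loss function

# Learning-rate schedule: multiply every group's lr by gamma=0.1 every 50 epochs
# (with max_epoch = 5 below, the decay is never actually triggered)
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=50, gamma=0.1)
```

(4) Start training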
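Inside the training loop, each batch's logits are turned into predicted class indices with `torch.max(..., 1)` and compared with the labels. On a hand-made batch of hypothetical logits (4 samples, 3 classes), that bookkeeping looks like this:

```python
import torch

# Hypothetical logits for a batch of 4 samples and 3 classes
outputs = torch.tensor([[2.0, 0.1, -1.0],
                        [0.3, 1.5, 0.2],
                        [0.1, 0.2, 3.0],
                        [1.0, 2.0, 0.5]])
labels = torch.tensor([0, 1, 2, 0])

# torch.max along dim=1 returns (max value, index of max) for every row;
# the index is the predicted class
_, predicted = torch.max(outputs, 1)

correct = (predicted == labels).sum().item()
total = labels.size(0)
print(predicted.tolist(), correct / total)  # [0, 1, 2, 1] 0.75
```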

```python
max_epoch = 5

for epoch in range(max_epoch):
    net.train()        # training mode (matters for dropout / batch norm)
    loss_sigma = 0.0   # running loss sum, reset after every 10 iterations
    correct = 0.0
    total = 0.0

    for i, data in enumerate(train_loader):  # read batch by batch until the loader is exhausted
        # Get images and labels
        inputs, labels = data  # inputs: torch.Size([16, 3, 32, 32]), labels: torch.Size([16])
        # Older code wraps tensors in torch.autograd.Variable (the "basket" holding the tensor
        # plus a requires_grad flag, False by default). Since PyTorch 0.4, Variable is merged
        # into Tensor, so plain tensors are passed directly.

        # forward, backward, update weights
        optimizer.zero_grad()
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # Collect prediction statistics
        # torch.max(..., 1) returns the max value and its index for every row (dim=1)
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
        loss_sigma += loss.item()

        # Print training info every 10 iterations; the loss is the mean over those 10 iterations
        if i % 10 == 9:
            loss_avg = loss_sigma / 10
            loss_sigma = 0.0
            print("Training: Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                epoch + 1, max_epoch, i + 1, len(train_loader), loss_avg, correct / total))

    print('Learning rate of param group 1: {}, param group 2: {}'.format(
        scheduler.get_last_lr()[0], scheduler.get_last_lr()[1]))
    # Since PyTorch 1.1, scheduler.step() must come after optimizer.step(): once per epoch, here
    scheduler.step()  # update the learning rate
```

(5) Evaluate on the validation set

```python
import numpy as np

classes_name = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

# ------------------------------------ Model performance on the validation set ------------------------------------
loss_sigma = 0.0
cls_num = len(classes_name)
conf_mat = np.zeros([cls_num, cls_num])  # confusion matrix: row = true class, column = predicted class

net.eval()
with torch.no_grad():  # no gradients are needed for evaluation
    for i, data in enumerate(valid_loader):
        # Get images and labels
        images, labels = data

        # forward
        outputs = net(images)

        # Compute the loss
        loss = criterion(outputs, labels)
        loss_sigma += loss.item()

        # Statistics
        _, predicted = torch.max(outputs, 1)

        # Accumulate the confusion matrix
        for j in range(len(labels)):
            cate_i = labels[j].item()
            pre_i = predicted[j].item()
            conf_mat[cate_i, pre_i] += 1.0

# Accuracy = correctly classified samples (diagonal) / all samples
print('{} set Accuracy:{:.2%}'.format('Valid', conf_mat.trace() / conf_mat.sum()))
print('Finished Training')
```
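The overall accuracy printed above is the diagonal of the confusion matrix divided by its total. The same matrix also gives per-class accuracy (each diagonal entry divided by its row sum). A small sketch, assuming a hypothetical 3-class subset and made-up labels/predictions, and using `np.add.at` as a vectorized equivalent of the per-sample loop:

```python
import numpy as np

classes_name = ['plane', 'car', 'bird']  # hypothetical 3-class subset for illustration
labels = np.array([0, 0, 1, 1, 2, 2, 2])
predicted = np.array([0, 1, 1, 1, 2, 0, 2])

cls_num = len(classes_name)
conf_mat = np.zeros([cls_num, cls_num])
# Vectorized equivalent of: for each sample, conf_mat[label, prediction] += 1
np.add.at(conf_mat, (labels, predicted), 1.0)

accuracy = conf_mat.trace() / conf_mat.sum()
per_class_acc = conf_mat.diagonal() / conf_mat.sum(axis=1)  # row = true class

print(conf_mat)
print('Accuracy: {:.2%}'.format(accuracy))  # Accuracy: 71.43%
for name, acc in zip(classes_name, per_class_acc):
    print('{}: {:.2%}'.format(name, acc))
```

`np.add.at` is used instead of `conf_mat[labels, predicted] += 1` because the latter would count repeated (label, prediction) pairs only once.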