1. SDN related concepts

1. Large Layer 2 network

In the Internet era, traffic between users and a data center is called north-south traffic, while traffic between servers, including data transmission between data centers, is called east-west traffic.

Data access and data synchronization between different data centers are often required, and carrying this traffic poses challenges for security and stability. The technology that joins these data center networks into what appears to be one large logical Layer 2 network is called virtual network technology. Large Layer 2 networks are currently closely tied to data center virtualization, cloud computing, and other major networking technologies.

Within a site, Layer 2 communication relies on VLANs. Extending Layer 2 between data centers is essentially done by tunneling: data is carried through a tunnel and forwarded along it, somewhat like MPLS tunnel forwarding. Before tunnels can be established, however, the data centers must be reachable from each other by routing. Once Layer 3 connectivity is ensured, tunnels are built on top of it to provide fast and secure data access.

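MPLS has many applications, including MPLS L2VPN, MPLS L3VPN, MPLS 6PE/6VPE, and MPLS TE. What they have in common is that they forward on labels that must first be distributed among the routers. LDP (Label Distribution Protocol) is the main MPLS label distribution protocol, although some applications also use others: MPLS TE uses RSVP-TE, and MPLS L3VPN uses MP-BGP to distribute VPN labels.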

- MPLS 6PE/6VPE: a transition technology that lets isolated IPv6 networks reach the IPv6 network at the other end across an IPv4 MPLS backbone.

- MPLS L2VPN: provides point-to-point or multipoint Layer 2 services and forwards user packets based on the MAC addresses of the private network (a Layer 2 VPN).

- MPLS L3VPN: a classic PE-based Layer 3 VPN technology (PE, provider edge: the routers at both ends of the LSP that connect to customer sites).

- MPLS TE: MPLS traffic engineering, which places traffic on explicitly routed LSPs with reserved bandwidth; through preemption, a higher-priority LSP can take bandwidth from a lower-priority LSP.
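The label-based forwarding that all of these MPLS applications share can be made concrete at the bit level. The sketch below packs and unpacks MPLS label stack entries using the 32-bit layout from RFC 3032 (20-bit label, 3-bit Traffic Class, 1-bit bottom-of-stack flag, 8-bit TTL). The label values 16001 and 24005 are arbitrary examples; in a real network they would be assigned by a label distribution protocol such as LDP.

```python
import struct
from typing import Dict, List

def encode_label_entry(label: int, tc: int, bottom: bool, ttl: int) -> bytes:
    """Pack one MPLS label stack entry (RFC 3032 layout):
    20-bit label | 3-bit Traffic Class | 1-bit bottom-of-stack (S) | 8-bit TTL."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= tc < 8:
        raise ValueError("traffic class must fit in 3 bits")
    if not 0 <= ttl < 256:
        raise ValueError("TTL must fit in 8 bits")
    word = (label << 12) | (tc << 9) | (int(bottom) << 8) | ttl
    return struct.pack("!I", word)  # 4 bytes, network byte order

def encode_label_stack(labels: List[int], tc: int = 0, ttl: int = 64) -> bytes:
    """Encode a label stack, outermost label first; only the last entry has S=1."""
    return b"".join(
        encode_label_entry(label, tc, i == len(labels) - 1, ttl)
        for i, label in enumerate(labels)
    )

def decode_label_entry(data: bytes) -> Dict[str, int]:
    """Unpack the first 4 bytes of data as one label stack entry."""
    (word,) = struct.unpack("!I", data[:4])
    return {
        "label": word >> 12,
        "tc": (word >> 9) & 0x7,
        "bottom": (word >> 8) & 0x1,
        "ttl": word & 0xFF,
    }

# Hypothetical L3VPN-style stack: outer transport label, inner VPN label.
stack = encode_label_stack([16001, 24005])
print(decode_label_entry(stack[:4]))  # transport label, bottom = 0
print(decode_label_entry(stack[4:]))  # VPN label, bottom = 1
```

Only the innermost entry carries the bottom-of-stack bit, which is how a router knows where the label stack ends and the payload begins.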

2. SDN related terms

The following are terms related to SDN technology.

- Software-Defined Networking (SDN): builds an open, programmable network environment that virtualizes the underlying network resources and controls and manages the network centrally.

- Software-Defined Local Area Network (SD-LAN): Local area network based on software-defined networking, which can create a flexible and cost-effective wireless and wired access network.

- Software-defined wide-area network (SD-WAN): a wide-area network based on software-defined networking, often used to connect geographically dispersed enterprise sites with their data centers.

- OpenFlow: a protocol that lets a controller program the flows and flow tables of switches (in hardware, flow tables are often implemented in TCAM).

- OpenDaylight: an open-source SDN controller project hosted by the Linux Foundation.

- OpenStack: OpenStack is an open source cloud operating system used to create and manage cloud resources.

- CloudStack: CloudStack is an open source cloud computing software used to create, manage and deploy cloud service infrastructure.

- Orchestration: Orchestration is a system that automatically creates, initializes, coordinates, and manages the physical and virtual resources required for cloud service delivery.

- OSS (Operations Support System): a system that helps service operators monitor, analyze, and manage telephone or computer networks.

- SDN Controller: the application at the center of an SDN network that manages traffic control to make the network intelligent. It uses protocols such as OpenFlow, which let the controller (running on servers) tell switches where to send packets.

- White box switch: commodity switch hardware that runs a third-party network operating system instead of the vendor's own software; white box switches are often used as OpenFlow switches.

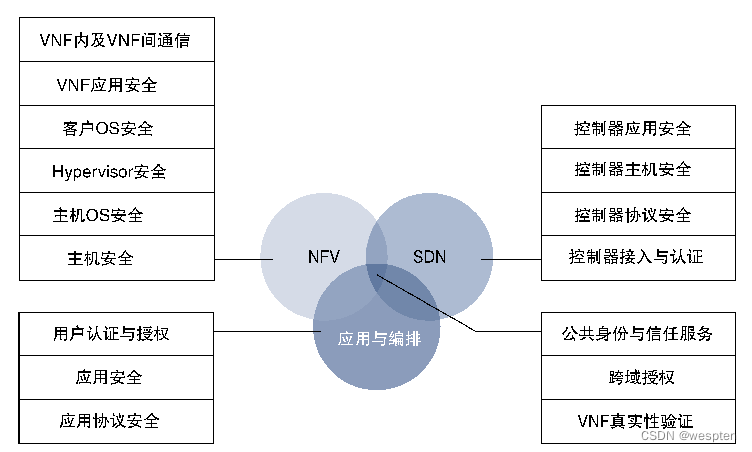

- NFV (Network Functions Virtualization): a network architecture concept that uses virtualization to divide network node functions into functional blocks implemented in software, so that they are no longer tied to dedicated hardware.

3. SDN-related protocols

The following are the protocols associated with SDN technology.

Data center: BGP EVPN VXLAN, VXLAN

SDN technology: PCEP, BGP-LS, OpenFlow, OVSDB, SR/SRv6, NETCONF, BGP FlowSpec

The most common SDN protocol is OpenFlow, but other protocols can also be used for SDN, as listed below.

- OpenFlow protocol: OpenFlow is the first-generation standard protocol for software-defined networking (SDN). It defines an open protocol that enables SDN controllers to interact with the forwarding planes of network devices.

- NETCONF protocol: Defined in RFC 6241, NETCONF is intended to replace the CLI (command-line interface), SNMP (Simple Network Management Protocol), and other proprietary configuration mechanisms. Management software can use NETCONF to write configuration data to a device and to retrieve data from it.

- OF-Config protocol: a protocol for configuring OpenFlow switches. Its main functions include configuring the multiple controllers a switch connects to, configuring and allocating resources such as ports and queues, and modifying the state of resources such as ports.

- XMPP protocol: XMPP is a protocol based on XML (a subset of SGML, the Standard Generalized Markup Language) and inherits the flexibility and extensibility of XML.

- OpFlex protocol: an alternative to OpenFlow introduced by Cisco. OpFlex is designed to keep the network infrastructure hardware as the fundamental control element of a programmable network.
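NETCONF operations are XML-encoded RPCs. As a minimal illustration using only Python's standard library, the sketch below builds the `<get-config>` request defined in RFC 6241 for reading a device's running configuration. It only constructs the message: sending it requires a NETCONF session, normally over SSH (RFC 6242), which is not shown, and the message-id value "101" is arbitrary.

```python
import xml.etree.ElementTree as ET

NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"  # base namespace from RFC 6241
ET.register_namespace("", NETCONF_NS)  # serialize it as the default namespace

def build_get_config(message_id: str, datastore: str = "running") -> str:
    """Build a NETCONF <get-config> RPC that asks for the contents of a datastore."""
    rpc = ET.Element(f"{{{NETCONF_NS}}}rpc", {"message-id": message_id})
    get_config = ET.SubElement(rpc, f"{{{NETCONF_NS}}}get-config")
    source = ET.SubElement(get_config, f"{{{NETCONF_NS}}}source")
    ET.SubElement(source, f"{{{NETCONF_NS}}}{datastore}")  # e.g. <running/>
    return ET.tostring(rpc, encoding="unicode")

print(build_get_config("101"))
```

The device answers with an `<rpc-reply>` carrying the same message-id, which is how a management application matches replies to requests.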

SR (SR-MPLS) and SRv6 work on different principles. SR-MPLS forwards using labels, with the segments distributed through IGP protocol extensions, while SRv6 uses an IPv6 extension header, the Segment Routing Header (SRH), and forwards based on the segment information carried in it.
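The SRv6 forwarding step can be sketched in a few lines. Following the SRH encoding in RFC 8754, the segment list is stored in reverse order and the Segments Left field points at the active segment; at each segment endpoint, the node decrements Segments Left and copies the next segment into the IPv6 destination address. This is a simplified model of the basic End behavior only: hop limits, SRH flags and TLVs, and other SRv6 behaviors are ignored, and the addresses are taken from the 2001:db8::/32 documentation range.

```python
import ipaddress
from dataclasses import dataclass
from typing import List

@dataclass
class SRv6Packet:
    """Simplified IPv6 packet carrying a Segment Routing Header (SRH)."""
    destination: ipaddress.IPv6Address          # IPv6 destination address (DA)
    segment_list: List[ipaddress.IPv6Address]   # reverse order: index 0 is the LAST segment
    segments_left: int                          # index of the active segment

def build_packet(path: List[str]) -> SRv6Packet:
    """Encode a path (first segment first) the way the SRH stores it."""
    segments = [ipaddress.IPv6Address(s) for s in reversed(path)]
    segments_left = len(segments) - 1
    return SRv6Packet(segments[segments_left], segments, segments_left)

def endpoint_process(packet: SRv6Packet) -> bool:
    """Simplified End behavior at the node that owns the current DA.
    Returns False when the packet is already at its final segment."""
    if packet.segments_left == 0:
        return False  # final segment reached: hand the payload up (not modeled)
    packet.segments_left -= 1
    packet.destination = packet.segment_list[packet.segments_left]  # next segment becomes the DA
    return True

path = ["2001:db8:1::1", "2001:db8:2::1", "2001:db8:3::1"]
pkt = build_packet(path)
visited = [pkt.destination]
while endpoint_process(pkt):
    visited.append(pkt.destination)
print([str(a) for a in visited])
```

Transit routers that are not segment endpoints simply route on the current destination address, which is why SRv6 can cross ordinary IPv6 networks.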

VXLAN is classified as a data center technology: it is used for tunneling and is related to SDN. The other eight protocols (counting SR and SRv6 separately) are classified as protocols with SDN attributes. The goal of SDN is to make the network programmable, so that network resources can be adjusted and allocated more dynamically by software; this has become a mainstream trend in networking.

Among these, OpenFlow and OVSDB mainly work between the controller (the "brain" responsible for computation and programming) and the switches. The controller communicates with many switches, and the switches receive path (LSP) information from the controller, so that multiple different tunnels are formed across the switches. Data is then forwarded quickly through these tunnels to improve network quality and capacity. SR and SRv6 aim at the same goal.

2. Overview of SDN

1. Background of SDN

A drawback of current routing protocols such as RIP, OSPF, and IS-IS is that the routing algorithm itself makes up only a small part of each protocol; most of the protocol is devoted to maintaining topology and neighbor relationships, which is dictated by their nature as distributed systems. SDN was proposed to address this problem.

Software-defined networking (SDN) provides high programmability, making network expansion, system design, and management easier. In effect, SDN takes the part that different protocols have in common, the distributed system, and moves it into a network OS, while the routing algorithm becomes an application (app). The routing protocol has therefore not disappeared; it has taken another form.
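As an illustration of the routing algorithm becoming an app, the sketch below assumes the controller already holds a global topology with link costs (a hypothetical four-switch example) and runs Dijkstra's shortest-path algorithm, the kind of SPF computation OSPF and IS-IS perform on every router, once and centrally to derive each switch's next hops. It is a sketch of the idea rather than any controller's API; installing the resulting entries on the switches (for example with OpenFlow) is not shown.

```python
import heapq
from typing import Dict, List, Tuple

# Global view held by the controller: switch -> {neighbor: link cost}.
Topology = Dict[str, Dict[str, int]]

def shortest_paths(topo: Topology, source: str) -> Dict[str, Tuple[int, List[str]]]:
    """Dijkstra's algorithm: map each reachable destination to (cost, path from source)."""
    dist: Dict[str, int] = {source: 0}
    prev: Dict[str, str] = {}
    heap: List[Tuple[int, str]] = [(0, source)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue  # stale heap entry
        done.add(node)
        for nbr, cost in topo.get(node, {}).items():
            if d + cost < dist.get(nbr, float("inf")):
                dist[nbr] = d + cost
                prev[nbr] = node
                heapq.heappush(heap, (d + cost, nbr))
    result = {}
    for dst, cost in dist.items():
        path = [dst]
        while path[-1] != source:
            path.append(prev[path[-1]])
        result[dst] = (cost, path[::-1])
    return result

def next_hops(topo: Topology, switch: str) -> Dict[str, str]:
    """Per-switch forwarding entries the controller could push: destination -> next hop."""
    return {dst: path[1] for dst, (_, path) in shortest_paths(topo, switch).items() if len(path) > 1}

topo: Topology = {
    "s1": {"s2": 1, "s3": 4},
    "s2": {"s1": 1, "s3": 1, "s4": 5},
    "s3": {"s1": 4, "s2": 1, "s4": 1},
    "s4": {"s2": 5, "s3": 1},
}
print(next_hops(topo, "s1"))  # {'s2': 's2', 's3': 's2', 's4': 's2'}
```

Because the controller sees the whole topology, no neighbor or link-state flooding logic is needed inside the "app"; only the graph algorithm remains.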

SDN is a new type of network architecture with logically centralized control. Its main feature is the separation of the data plane and the control plane, with information exchanged between the two through standard open interfaces such as OpenFlow.

SDN draws on the way computing evolved from closed, integrated, dedicated systems to open systems. By separating the data plane and control plane of traditional closed network equipment, it decouples the network hardware from the control software and defines open standard interfaces. This allows network software developers and network administrators to control the network through programming, turning traditional dedicated network devices into standardized, general-purpose devices whose behavior can be defined by programs.

SDN can implement any protocol, or do without protocols altogether, because SDN does not operate at the same level as control protocols.

The problem that SDN solves is to separate the implementation of the protocol from the hardware layer, describe the hardware with a unified model, and turn the parts other than the unified model into flow tables and controllers. Arbitrary network protocols can be implemented through flow tables and controllers, including traditional control protocols. Upgrading the controller allows the SDN network to support more protocols.

Realistically, no matter how far SDN develops, it is impossible to control the entire Internet with a single controller. Routing and switching protocols are the foundation of the modern Internet, so whether a network is implemented with SDN or built traditionally, routing and switching protocols will remain indispensable for the foreseeable future.

2. Abstract structure of SDN network

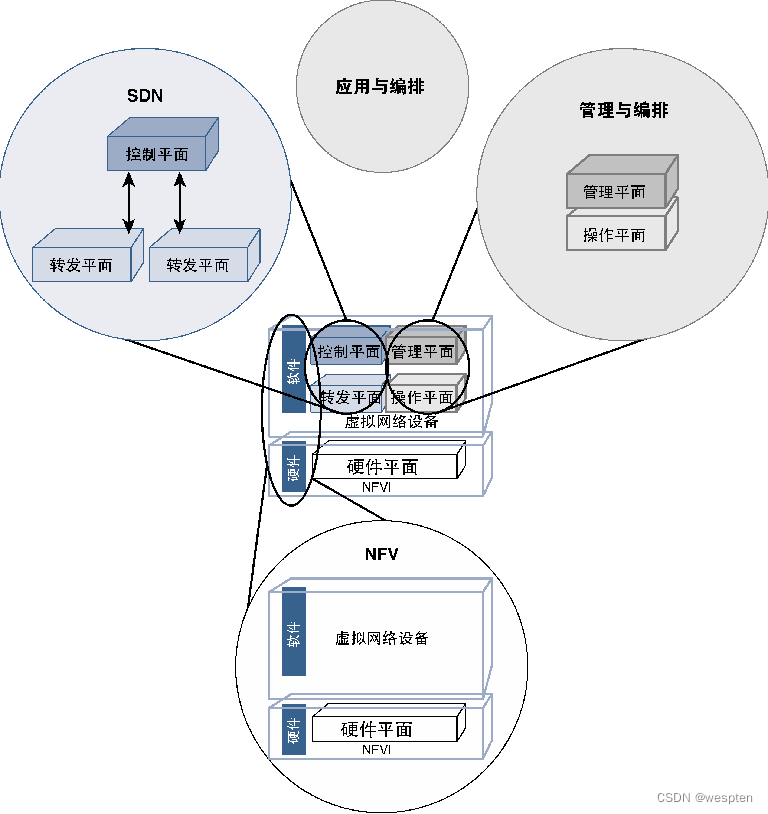

The abstract network structure of SDN consists of four abstract models: the control plane, the forwarding plane, the management plane, and the operation plane.

The interface between the control plane and the data plane is called the southbound interface, and the interface between the control plane and the application plane is called the northbound interface. The interfaces between SDN controllers within the control plane are called the eastbound and westbound interfaces.

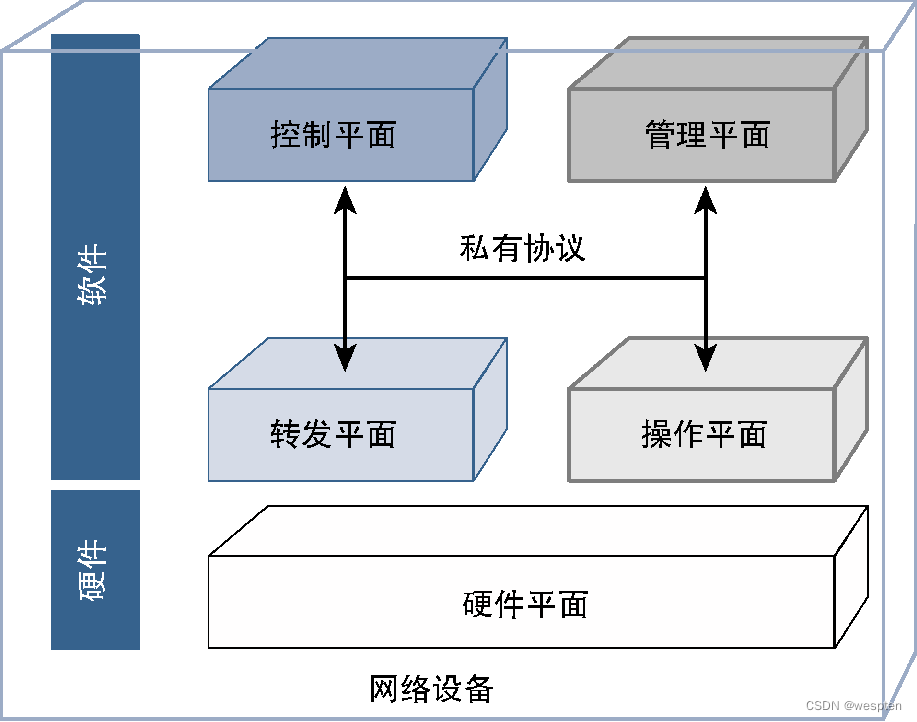

The software that traditional network devices use to implement network functions usually plays several roles. We can classify these roles into functional planes that work independently and interact through proprietary or open APIs (Application Programming Interfaces). At a high level, these roles fall into the following four categories.

- Control plane: determines the path that data takes through the device, whether data is allowed to pass through the device, how data is queued, and what other operations the data requires.

- Forwarding plane: forwards, queues, and processes data on the device according to the instructions of the control plane; this role is also called the data plane. In short, the control plane decides what to do with data entering the device, and the data plane carries out those decisions.

- Management plane: The control plane and forwarding plane are responsible for processing data traffic, while the management plane is responsible for network device configuration, fault monitoring, and resource management.

- Operation plane: monitors the operating status of the device and can directly view all device entities. The management plane works directly with the operation plane, using it to retrieve the device's health status, and is also responsible for pushing configuration updates to manage the device's running state.

For traditional network equipment, these planes are fully coupled together and communicate through proprietary interfaces and protocols, as shown in the figure below.

The SDN control plane abstract model lets users control the network by programming the control plane, without caring about how the data plane is implemented. By collecting and analyzing network status information, it provides a global, real-time view of the network state. Using this global view, the control plane can optimize route selection, improve the security of the network, and give the network stronger management and control capabilities.

To illustrate these concepts, take a router as an example. The management plane handles router configuration: it provides a mechanism to define parameters such as the hostname, interface IP addresses, routing protocol configuration, and QoS (Quality of Service) thresholds and classifications. The operation plane monitors interface status, CPU consumption, memory utilization, and so on, and passes this status information to the management plane for fault monitoring. The routing protocols running on the router (configured through the management plane) make up the control plane; they determine in advance where traffic should go, building a route lookup table called the RIB (Routing Information Base) that maps destinations to the router's outgoing interfaces. The forwarding plane then uses this lookup table to determine the path data takes through the router.
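The route lookup the forwarding plane performs is a longest-prefix match: of all table entries that contain the destination address, the most specific one wins. The sketch below uses Python's standard `ipaddress` module and a hypothetical three-entry table with made-up interface names; it scans the table linearly for clarity, whereas real forwarding planes use tries or TCAM hardware.

```python
import ipaddress
from typing import List, Optional, Tuple

# Hypothetical RIB entries: (destination prefix, outgoing interface).
RIB: List[Tuple[ipaddress.IPv4Network, str]] = [
    (ipaddress.ip_network("0.0.0.0/0"), "eth0"),      # default route
    (ipaddress.ip_network("10.0.0.0/8"), "eth1"),
    (ipaddress.ip_network("10.1.0.0/16"), "eth2"),
]

def lookup(rib: List[Tuple[ipaddress.IPv4Network, str]], destination: str) -> Optional[str]:
    """Return the outgoing interface of the longest matching prefix, or None if nothing matches."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, iface) for net, iface in rib if addr in net]
    if not matches:
        return None
    _, best_iface = max(matches, key=lambda m: m[0].prefixlen)
    return best_iface

for dst in ["10.1.2.3", "10.200.0.1", "192.0.2.1"]:
    print(dst, "->", lookup(RIB, dst))  # eth2, eth1, eth0 respectively
```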

Because the control plane is integrated into the device software, the network architecture has a distributed control plane: each node performs its own control plane computation, and the nodes exchange control information with each other. For example, the routing protocols running on each device exchange information to determine the topology of the entire network or to learn routes from one another. The management plane is likewise local to each device, but an NMS (Network Management System) adds a management layer on top of it, which centralizes management functions.

Monitoring is usually performed with protocols such as Syslog, SNMP (Simple Network Management Protocol), and NetFlow, while configuration is done through proprietary CLIs, APIs, SNMP, or scripts.

The following figure shows a schematic diagram of the deployment architecture of traditional network devices:

Programmability is at the heart of SDN. As long as programmers know how to program against the network controller's API, they can write programs to control all kinds of network devices (such as routers, switches, gateways, firewalls, servers, and wireless base stations) without knowing the syntax and semantics of each device's configuration commands. The controller converts these API programs into instructions that control the devices. New network applications can also be added easily through API programs. An open SDN architecture makes the network general-purpose, flexible, and secure, and supports innovation.

3. Introduction to SDN

SDN is not a protocol, but an open network architecture.

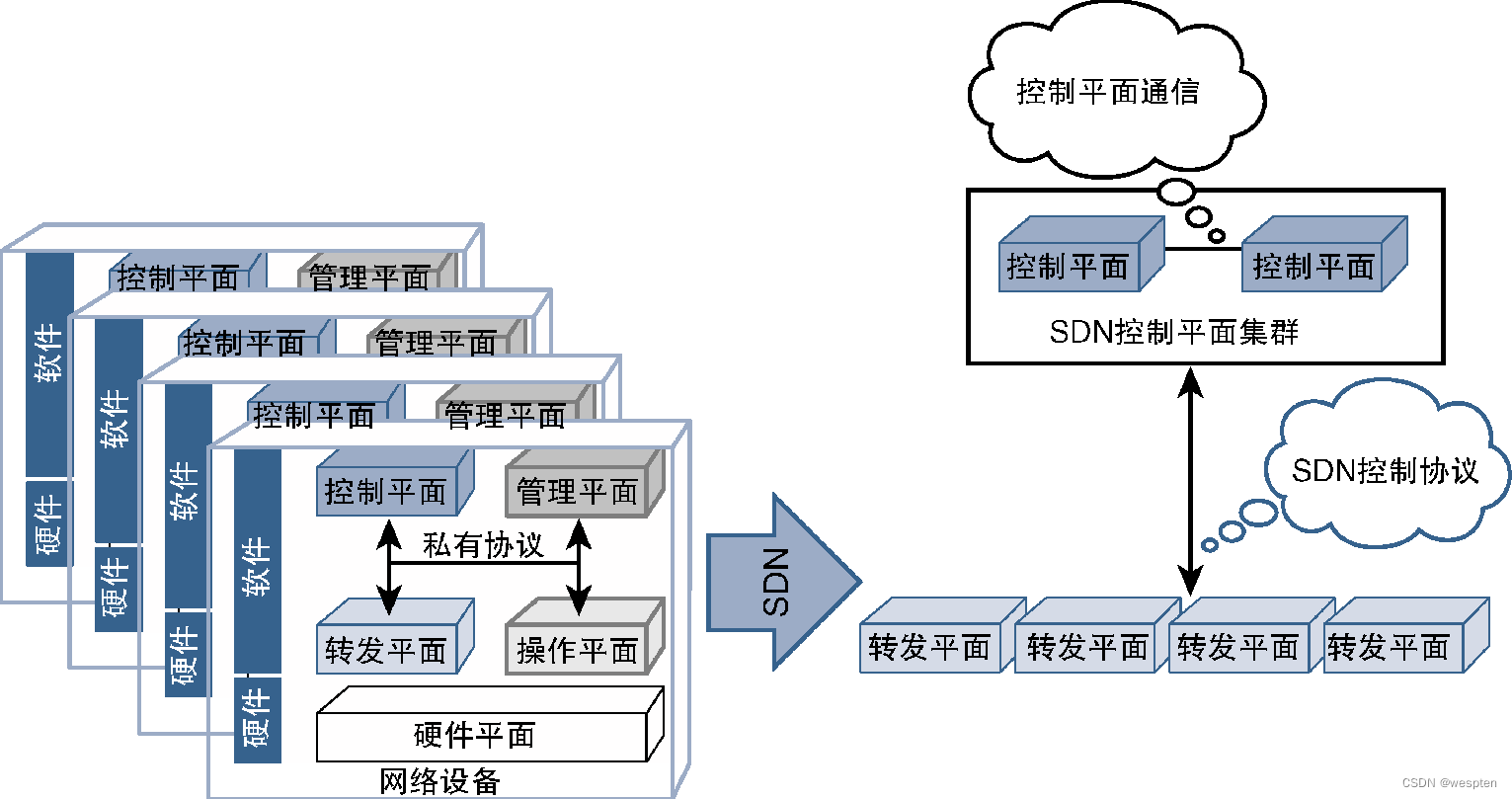

SDN changes the traditional structure, in which the data plane and control plane are tightly coupled, into one in which they are decoupled and separated, and it centralizes the control plane functions of routers in the SDN controller. SDN routers are essentially programmable switches: the SDN controller controls their data plane functions by issuing routing information and control commands.

SDN centrally controls network logic through standard protocols, realizes flexible control and management of network traffic, and provides a good platform for core network and application innovation.

In an SDN network, SDN is not intended to replace the control plane of routers and switches but to strengthen it with a view of the entire network. Based on dynamic traffic, delay, quality of service, and security status, SDN determines the routing and packet-forwarding policy of each node and pushes control commands to the control planes of the routers and switches, which in turn control packet forwarding in the data plane.

Although the goal of SDN is to separate the control plane from the forwarding plane, the centralized control plane does not have to run on a single node. For scalability and high availability, the control plane can be scaled out horizontally into a control plane cluster. Cluster members can communicate using protocols such as BGP (Border Gateway Protocol) or PCEP (Path Computation Element Communication Protocol) so that together they act as a single centralized control plane.

The following figure shows the basic concept of SDN and how it differs from the traditional network architecture. Note that because SDN focuses on the control plane and forwarding plane, the figure does not emphasize how these planes relate to the hardware plane, or how the operation plane and the management plane interact.

In an SDN implementation, the control plane can be managed by applications. An application can interact with both the control plane and the management plane: it extracts device information and configuration from the management plane, and network topology and traffic path information from the control plane. The application therefore has a complete, unified view of the network and uses this information to make processing decisions, which it can pass to the control plane or the management plane, as shown in the figure below.

The figure also shows the concepts of northbound and southbound protocols and APIs. The meaning of these terms depends on the context in which they are used. In the scenario shown in the figure, which covers the SDN control plane and management plane, a southbound protocol refers to communication from the control plane or management plane to the planes below them. The interfaces that the management plane and control plane provide to the planes above them (such as the application layer) are called northbound APIs or northbound protocols.

A typical example of this type of application is on-demand bandwidth. Applications can monitor traffic in the network and provide additional traffic paths during certain hours of the day or when predetermined thresholds are exceeded. The management plane must provide applications with information about network interfaces. The control plane provides real-time forwarding topology, and applications use this information to determine whether additional traffic paths need to be provided for specific traffic. User-defined policies can be used to preset thresholds for the application that trigger the appropriate action, which the application communicates by instructing the management plane to provide a new traffic path and telling the control plane to start using that traffic path.
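The decision logic of such an on-demand bandwidth application can be sketched as a small policy check. Everything here is hypothetical: the policy fields, the threshold values, and the action strings, which stand in for whatever northbound calls a real controller or management system would expose. In practice, the load figures would come from the interface statistics supplied by the management plane.

```python
from dataclasses import dataclass
from datetime import time
from typing import List

@dataclass
class BandwidthPolicy:
    """Hypothetical user-defined policy for on-demand bandwidth."""
    utilization_threshold: float  # fraction of link capacity, e.g. 0.8 = 80 %
    busy_start: time              # start of the daily busy window
    busy_end: time                # end of the daily busy window

def needs_extra_path(policy: BandwidthPolicy, load_bps: float,
                     capacity_bps: float, now: time) -> bool:
    """True if we are inside the busy window or utilization exceeds the threshold."""
    in_busy_window = policy.busy_start <= now < policy.busy_end
    over_threshold = load_bps / capacity_bps > policy.utilization_threshold
    return in_busy_window or over_threshold

def decide(policy: BandwidthPolicy, load_bps: float,
           capacity_bps: float, now: time) -> List[str]:
    """Return the actions the application would request (placeholders, not a real API)."""
    if not needs_extra_path(policy, load_bps, capacity_bps, now):
        return []
    return [
        "management plane: provision additional traffic path",
        "control plane: start forwarding over the new path",
    ]

policy = BandwidthPolicy(0.8, time(9, 0), time(18, 0))
print(decide(policy, load_bps=9e9, capacity_bps=10e9, now=time(3, 0)))  # over threshold
print(decide(policy, load_bps=5e9, capacity_bps=10e9, now=time(3, 0)))  # no action
```

Keeping the policy as data separate from the decision function mirrors the text's point that thresholds are user-defined while the application only evaluates them.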

4. Advantages of SDN

When SDN was first introduced, its benefits were not compelling enough for vendors or service providers to commit to it firmly. At that time, network expansion still relied on partially automated configuration and management mechanisms, and the tight coupling of the control plane and data plane had not yet become a major bottleneck for network expansion.

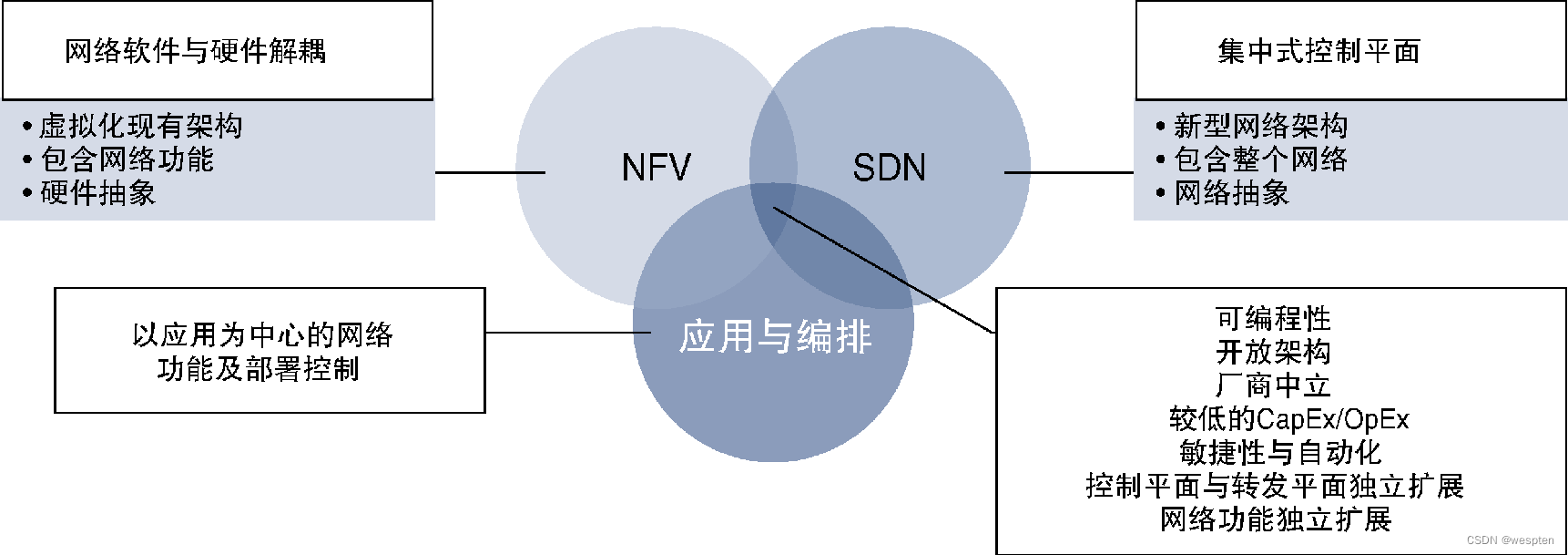

Later, networks faced exponential growth in scale, which exposed many limitations in the scaling mechanisms of traditional networks. NFV is one success story of the networking industry pioneering innovation and adopting new technologies to break free from vendor lock-in, and SDN is another. SDN moved from academia to real-world deployment relatively quickly, and because it enables flexible, scalable, open, and programmable networks, it has been deployed more and more widely.

Some of the important advantages of SDN are discussed in detail below, all of which are closely related to reducing the cost of network operation.

1. Programmability and automation

The ability to control the network through applications is an important advantage of SDN. Today's networks need stronger recovery capabilities, large-scale scalability, faster deployment mechanisms, and lower operating costs. Manual processes cannot respond quickly enough and slow down the operation of the entire network, so making maximum use of automation tools and applications has become a necessity. Automation and programmability are needed to support on-demand network configuration and the monitoring and analysis of device data, and the network must also change in real time in response to traffic load, outages, and other known and unknown events. Traditionally, vendors' solutions have targeted mainly their own devices or OS (Operating System), with limited support for other vendors' devices, if any, and make decisions according to their own logic and constraints.

SDN solutions couple applications to the network, solving the problems of manual control and management processes. Because SDN places intelligence in a centralized control device (the SDN controller), programs and scripts that respond automatically to expected and unexpected events can be built directly into the controller. Alternatively, applications can run on top of the controller and use the northbound API to pass their logic to the controller and, ultimately, to the forwarding devices. Applications can handle failures and growing management needs, enabling rapid resolution of and recovery from failures. This approach minimizes operating costs by significantly reducing service downtime, shortening configuration time, and increasing the ratio of devices to network operations staff.

2. Support centralized control

After the control plane is centralized, all important information can be obtained more easily, and the implementation of the control logic is also relatively simple. SDN can unify network views, simplify network control logic, and reduce operational complexity and maintenance costs.

3. Multi-vendor and open architecture

Because SDN uses standardized protocols, it breaks the dependency on vendor-specific control mechanisms. Traditional vendors provide proprietary methods for device access and configuration that are hard to program, which creates many obstacles for developing applications and scripts to automate configuration and management. This is especially true in multi-vendor (or even mixed-OS) environments, where applications must account for changes and differences in device interfaces. Furthermore, if vendors implement standard control plane protocols differently (for example, parse them differently), interoperability problems can result. These challenges have long existed in traditional networks, but SDN moves the control plane off the devices, leaving only the data plane, and so can potentially solve the problem of control plane interoperability in multi-vendor deployments.

4. Simplify network equipment

The control plane of a network device usually consumes significant resources, especially on devices running multiple protocols: it exchanges information (such as internal routes, external routes, and labels) between these protocols, stores this information locally, and runs other protocol logic that uses the data for path computation. All of this imposes unnecessary overhead on the device and limits its scalability and performance. Because SDN removes this overhead from the device and lets it focus on its main job, forwarding data, the device's processing and memory resources are freed. This greatly reduces equipment cost, simplifies the software implementation, improves scalability, and makes the best use of device resources.

3. Principles of SDN Implementation

As mentioned earlier, the core idea of SDN is to separate the control plane from the forwarding plane. A straightforward way to achieve this is to implement the control plane functions on an external device (called an SDN controller) and leave the forwarding plane functions on the devices in the data path.

However, as you learn more and more about the concept of SDN, you will find that there are many ways to achieve the basic goals of centralizing control and simplifying the data plane.

1. Introduction to SDN Controller

The SDN controller is an independent device that realizes the function of the SDN control plane, and is responsible for transmitting the decision information of the control plane to the network device. At the same time, the SDN controller can also retrieve information from network devices to make sound control plane decisions. The SDN controller communicates with network devices through the SDN control protocol.

From the perspective of geographical location, SDN controllers do not need to be deployed in the same geographical location as network devices, as long as they can communicate with the network devices they control. At present, the industry provides a variety of open source and commercial SDN controllers, and the relevant content of these SDN controllers will be discussed in detail later.

2. SDN implementation model

From a technical point of view, it is not always feasible for vendors to separate the control plane completely from their network devices and leave the devices with pure forwarding functions. Vendors have therefore implemented SDN in different ways, not all of which match the mechanism discussed so far. Service providers also face many practical difficulties in migrating their networks completely to SDN, so they may deploy SDN through an alternative solution. As long as such an alternative separates the control plane from the forwarding plane and delivers the benefits of SDN, it is an effective way to implement SDN.

Common SDN implementation methods fall into the following four types.

1. Open (Classic) SDN

This method is the classic way to separate the control plane from the forwarding plane. Because vendors' network devices cannot yet achieve this separation on their own, this method replaces the device's local control plane with an SDN Support Layer that provides SDN support.

The new SDN support layer can work with the SDN controller and the forwarding plane of the device, so that the network device has the ability to communicate with the SDN controller through the SDN protocol, and can directly control the forwarding plane, as shown in the following figure.

2. Hybrid SDN

Many vendors have adopted SDN implementations that modify the control plane of devices through the SDN support layer, and claim that their devices are already SDN-enabled. But this does not mean that the local control plane of the device no longer exists, local intelligence can still work with the control plane implemented by the external controller.

In this implementation, the device runs its own (distributed) local control plane, and the external SDN controller enhances the device's intelligence by modifying the routing parameters used by these protocols or by modifying the forwarding plane directly. This implementation is therefore called hybrid SDN, as shown in the figure below.

Note that the main difference in a hybrid SDN implementation compared to a classic SDN implementation is that the device still uses a local control plane.

3. Implementing SDN through APIs

Some vendors implement SDN by providing APIs for deploying, configuring, and managing devices. Applications can control the forwarding plane of the device through the API, which is equivalent to the southbound API used between the controller and the network device. However, since the API can be plugged directly into the application, such an SDN implementation may not require an SDN controller using standard southbound protocols.

Compared with the proprietary CLIs (Command-Line Interfaces) that vendors have long used, this implementation moves in a more collaborative and open direction, but true openness is hard to achieve: these APIs are unlikely to be compatible across vendors, so they do not really solve the problem of proprietary interfaces. An application that uses this API-based implementation of SDN must know which vendor's devices it is communicating with in order to use the correct API.

The argument in favor of this approach to SDN is that it allows applications to influence forwarding decisions, and that APIs are publicly available to anyone who wants to build applications and consume them, thus achieving the core goals of SDN. While this approach allows network programmability, it lacks flexibility (due to private southbound APIs). Some vendors address the flexibility issue by offering their own controllers that use a private southbound API (for network devices) and a standard northbound API.

The following figure shows the implementation of SDN through API:

4. Implementing SDN through overlays

Another way to separate the control plane from the network is to create a separate overlay network on top of the existing network, where the underlying network still has the control plane managed locally in the traditional way. However, for an overlay network, the underlying network essentially just provides connectivity and forwards data.

For network users, the underlying network, its topology, and its control plane are transparent; the overlay network is the network they interact with. In this model, users can manage the overlay network through an external controller, and the devices that make up the underlying network do not need to support any SDN functions. This implementation meets the basic requirements of SDN; the only constraint is that the devices must support the protocol used to build the overlay network. The virtual network concept discussed earlier is in fact an overlay network.

Technical solutions that use this SDN implementation mainly include VXLAN (Virtual Extensible LAN), supported by a large number of vendors, and NVGRE (Network Virtualization using Generic Routing Encapsulation), supported by Microsoft.
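To make the overlay idea concrete, the sketch below builds the 8-byte VXLAN header defined in RFC 7348: an 8-bit flags field whose I flag marks the VNI as valid, a 24-bit VXLAN Network Identifier (VNI), and reserved bits. The 24-bit VNI allows about 16 million overlay segments, compared with 4094 usable VLAN IDs. Only the VXLAN header is modeled; the outer UDP (destination port 4789), IP, and Ethernet headers that the tunnel endpoint adds are not shown, and the VNI 5000 is an arbitrary example.

```python
import struct
from typing import Tuple

VXLAN_UDP_PORT = 4789   # IANA-assigned UDP destination port (used in the outer header, not built here)
VXLAN_FLAG_I = 0x08     # "I" flag: the VNI field is valid

def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + 24 reserved bits; word 2: VNI (24 bits) + 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)

def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header to an inner Ethernet frame."""
    return vxlan_header(vni) + inner_frame

def decapsulate(payload: bytes) -> Tuple[int, bytes]:
    """Return (VNI, inner frame) from a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_I:
        raise ValueError("VXLAN I flag not set")
    return vni_word >> 8, payload[8:]

print(vxlan_header(5000).hex())  # 0800000000138800
```

The underlay only ever sees an ordinary UDP/IP packet, which is why its devices need no SDN support for this approach.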

3. SDN protocols

Regardless of the method used to implement SDN, some type of protocol must be used to complete the communication and information exchange between forwarding devices, applications, and controllers. From the perspective of the SDN controller, these protocols can be divided into northbound protocols and southbound protocols. As mentioned above, the southbound protocol is used for communication between control plane devices (such as SDN controllers or applications) and the forwarding plane, while the northbound protocol is used for communication between applications and SDN controllers.

1. Southbound protocols

Southbound protocols can be divided into two categories. One is that the control plane can directly communicate with the forwarding plane, and the other is that the control plane indirectly affects the forwarding plane by changing device parameters through the management plane. Protocols that directly interact with the forwarding plane are called SDN control plane protocols, and protocols that use the management plane to change the forwarding plane are simply called management plane protocols.

The following figure shows the schematic diagram of SDN protocol classification:

2. SDN control plane protocols

SDN control plane protocols run on network devices as low-level protocols that let the device hardware be programmed to control the data plane directly. Common SDN control plane protocols include OpenFlow, PCEP (Path Computation Element Communication Protocol), and BGP Flow-Spec.

Each of these protocols is briefly described below.

1)OpenFlow

In traditional network equipment, communication between the control plane and the forwarding plane takes place inside a single device, using the manufacturer's proprietary protocols and internal process calls. In an SDN environment, the control plane and the forwarding plane are separated, so a standard, multi-vendor protocol is needed for the communication between them. OpenFlow was created to fill this role. It is the industry's first open, standard control protocol for communication between SDN controllers and network devices and for programming the forwarding plane.

OpenFlow has matured from its initial laboratory version, and production-grade implementations are now available for version 1.3 and later.

OpenFlow maintains a structure called a flow table on each device, which holds the information that determines how data is forwarded. An SDN controller programs the forwarding plane of an OpenFlow-capable switch by using the OpenFlow protocol to change the flow table on that device.
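A flow table can be pictured as a list of entries, each with a priority, a set of match fields and a list of actions. The switch applies the highest-priority entry whose fields all match the packet. The Python sketch below models this under simplifying assumptions:

* packets and match fields are plain dictionaries;
* a missing match field acts as a wildcard;
* action strings such as `"output:2"` and `"CONTROLLER"` are illustrative labels, not OpenFlow wire encodings.

In OpenFlow 1.3, a table miss is handled by a priority-0, all-wildcard "table-miss" entry. That entry is commonly set to send the packet to the controller.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FlowEntry:
    priority: int
    match: dict            # e.g. {"eth_dst": "..."}; absent fields are wildcards
    actions: List[str]     # illustrative action labels, e.g. ["output:2"]
    packet_count: int = 0  # per-entry counter, like OpenFlow flow statistics


@dataclass
class FlowTable:
    entries: List[FlowEntry] = field(default_factory=list)

    def add(self, entry: FlowEntry) -> None:
        self.entries.append(entry)
        # Highest priority first, so lookup can stop at the first hit.
        self.entries.sort(key=lambda e: e.priority, reverse=True)

    def lookup(self, packet: dict) -> Optional[FlowEntry]:
        """Return the highest-priority matching entry, or None on a miss."""
        for entry in self.entries:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                entry.packet_count += 1
                return entry
        return None


if __name__ == "__main__":
    table = FlowTable()
    table.add(FlowEntry(0, {}, ["CONTROLLER"]))  # table-miss entry
    table.add(FlowEntry(100, {"eth_dst": "00:00:00:00:00:02"}, ["output:2"]))
    print(table.lookup({"in_port": 1, "eth_dst": "00:00:00:00:00:02"}).actions)
```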

To program forwarding information and set up paths through the network, OpenFlow supports two modes of operation: reactive (passive) and proactive (active). Reactive mode is the default way of implementing SDN with OpenFlow. It assumes that the network devices have no intelligence of their own and run no control plane functions.

In reactive mode, a forwarding node that receives the first packet of a flow matching no existing flow entry sends that packet to the SDN controller. The controller uses this information to compute the flow's path through the network. It then creates flow entries on all devices along that path, and those devices switch the rest of the flow's traffic accordingly. In proactive mode, the SDN controller pre-installs default flow entries, so forwarding is already programmed when the switch starts up.

When the SDN controller and the switch exchange information over the network, OpenFlow communication should run over a secure channel such as SSL (Secure Sockets Layer) or TLS (Transport Layer Security).

The following figure shows the architecture of OpenFlow:

OpenFlow focuses mainly on the relationship between the control plane and the data plane. If the management plane and operations plane of each device must still be managed in the traditional way, the programmability advantages that OpenFlow brings to SDN are weakened. The original OpenFlow was developed for switches and gave little consideration to management functions. To obtain the full benefits of programmability, the management plane must also expose interfaces that applications can use.

Two protocols can therefore be used to improve the management and configuration capabilities of OpenFlow: the OF-CONFIG (OpenFlow Configuration) management protocol and the OVSDB (Open vSwitch Database) management protocol.
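OVSDB, specified in RFC 7047, is a JSON-RPC protocol. A management application sends methods such as `list_dbs` or `transact` to the switch's database server. The Python sketch below only builds the request messages. Its example `transact` contains one `select` operation that reads the `name` column of the `Bridge` table in the `Open_vSwitch` database. It assumes the messages would then be written to an OVSDB connection (commonly TCP port 6640 or a local Unix socket), which is not shown. The helper names are hypothetical.

```python
import itertools
import json

_request_ids = itertools.count()  # JSON-RPC ids used to match replies to requests


def ovsdb_request(method: str, params: list) -> str:
    """Serialize an OVSDB JSON-RPC request (RFC 7047)."""
    return json.dumps({"method": method, "params": params, "id": next(_request_ids)})


def list_bridges_request(db: str = "Open_vSwitch") -> str:
    """A 'transact' request with one 'select' operation returning bridge names."""
    select_op = {
        "op": "select",
        "table": "Bridge",
        "where": [],          # no conditions: match every row
        "columns": ["name"],
    }
    return ovsdb_request("transact", [db, select_op])


if __name__ == "__main__":
    print(ovsdb_request("list_dbs", []))
    print(list_bridges_request())
```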

2)PCEP

PCEP (Path Computation Element Communication Protocol) works between two devices. One uses TE (Traffic Engineering) for forwarding, and the other performs all of the computation required to determine traffic-engineered paths. The PCE-based architecture is defined in RFC 4655, and the protocol itself is specified in RFC 5440. The architecture calls the device running the TE protocol the PCC (Path Computation Client) and the device that performs all computation the PCE (Path Computation Element). The protocol between the PCE and the PCC is PCEP.

A PCC can be any traditional routing device on which the ability to work with a PCE has been enabled. Traditionally, routers perform their own path computation and exchange information with one another. In the PCEP model, routers acting as PCCs only forward traffic and impose and process labels, leaving all computation and path decisions to the PCE. If multiple PCEs work together, PCEP can also serve as the communication protocol between them.

For the PCE to learn LSDB (Link State Database) information from the network, a passive IGP adjacency can be established between the PCE and a device in the network. However, this limits the PCE's view to the boundaries of the IGP area. An alternative called BGP-LS (BGP Link State) was therefore proposed: a BGP extension that can provide LSDB information to the PCE.

Because PCEP is designed around the SDN traffic engineering use case, it can work with RSVP-TE, GMPLS (Generalized MPLS)-based TE, and SR-TE (Segment Routing TE). In all of these scenarios, the roles of PCEP, the PCC, and the PCE are the same.

For example, the PCC can request the PCE to perform path computation operations under certain constraints, and the PCE can return possible paths satisfying the constraints.
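The computation a PCE performs for such a request can be illustrated with constrained shortest path first (CSPF). CSPF is a common technique, though the text does not say which algorithm any particular PCE uses. It works in two steps:

1. Prune every link that violates the constraint (here, minimum available bandwidth).
2. Run Dijkstra's algorithm on the remaining topology, using the TE metric as the cost.

This is a Python sketch only. The topology format and function name are hypothetical, and the PCEP request and reply messages (PCReq/PCRep) are not modeled.

```python
import heapq


def cspf(links, src, dst, min_bandwidth):
    """Constrained shortest path: prune links below the bandwidth constraint,
    then run Dijkstra on what remains, using the TE metric as the cost.

    links: dict mapping a directed link (a, b) -> {"metric": int, "bandwidth": float}.
    Returns (total_metric, path) or None if no path satisfies the constraint.
    """
    graph = {}
    for (a, b), attrs in links.items():
        if attrs["bandwidth"] >= min_bandwidth:      # constraint pruning
            graph.setdefault(a, []).append((b, attrs["metric"]))

    best = {src: 0}
    heap = [(0, src, [src])]
    while heap:
        cost, node, path = heapq.heappop(heap)
        if node == dst:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue                                 # stale heap entry
        for nxt, metric in graph.get(node, []):
            new_cost = cost + metric
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                heapq.heappush(heap, (new_cost, nxt, path + [nxt]))
    return None
```

With a low-metric path A→C→D whose C→D link has only 40 units of bandwidth, a request for 10 units gets A→C→D. A request for 50 units is steered onto the higher-metric path A→B→D instead.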

3)BGP-FS

BGP-FS (BGP Flow Specification) is an extension to BGP. It defines how BGP routers advertise traffic-filtering rules to upstream BGP peers, together with the specific actions to take on matching traffic, including dropping it.

BGP-FS is a standard defined in RFC 5575 and supported by many vendors. It defines a new BGP NLRI (Network Layer Reachability Information) type that carries flow specifications. Essentially, a flow specification is a set of match conditions, such as source address, destination port, QoS (DSCP) value, and packet length. For matching traffic, the system can apply actions such as rate limiting, QoS classification, dropping, and redirection to a VRF (Virtual Routing and Forwarding) instance.
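The match-then-act behavior of a flow specification can be sketched as below. This is a simplified Python model under stated assumptions:

* unset fields are wildcards;
* packets are dictionaries;
* rules are checked in list order.

The real protocol orders rules by a precedence defined in the RFC. It also carries actions as BGP extended communities; for example, a traffic-rate of 0 means drop. The class and field names here are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class FlowSpecRule:
    """Simplified flow specification; fields left as None are wildcards."""
    action: str                                    # "drop", "rate-limit" or "redirect"
    src_prefix: Optional[str] = None               # e.g. "203.0.113.0/24"
    dst_port: Optional[int] = None
    packet_len: Optional[Tuple[int, int]] = None   # inclusive (min, max) length
    rate_bps: Optional[int] = None                 # parameter for "rate-limit"
    redirect_vrf: Optional[str] = None             # parameter for "redirect"

    def matches(self, pkt: dict) -> bool:
        if self.src_prefix is not None:
            if ipaddress.ip_address(pkt["src"]) not in ipaddress.ip_network(self.src_prefix):
                return False
        if self.dst_port is not None and pkt["dst_port"] != self.dst_port:
            return False
        if self.packet_len is not None:
            low, high = self.packet_len
            if not low <= pkt["length"] <= high:
                return False
        return True


def apply_rules(rules, pkt):
    """Return the action of the first matching rule, or "forward" if none match."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return "forward"
```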

In an SDN scenario, the SDN controller can establish a BGP neighbor relationship with the forwarding devices. As long as all devices support BGP-FS, the controller can send them traffic-filtering rules through BGP-FS to control their forwarding behavior. In fact, BGP-FS was originally designed to redirect or drop DDoS (Distributed Denial of Service) attack traffic. After detecting an attack, the controller instructs the routers facing the attack traffic to drop the matching traffic or divert it to a traffic scrubbing device.

3. SDN management plane protocols

Management plane protocols handle device configuration operations, which affect the forwarding plane indirectly. These protocols assume a hybrid SDN implementation. The network devices still run their own control plane protocols, and external applications influence those protocols through the management plane.

Each of these protocols is briefly described below.

1)NETCONF

NETCONF (Network Configuration Protocol) is an IETF (Internet Engineering Task Force) standard protocol defined in RFC 6241, with its SSH transport defined in RFC 6242. Many network vendors already support it as a programming interface to their devices.

NETCONF uses a client-server model. An application acts as the client and either configures parameters on a device acting as the server or retrieves operational data from it. The configuration and operational data exchanged through NETCONF follow a predefined format described by YANG data models. SDN controllers such as Cisco NSO (Network Services Orchestrator, originally developed by Tail-f), ODL (OpenDaylight), Cisco OSC (Open SDN Controller), and Juniper Contrail all use NETCONF as a southbound protocol.
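As an example of the retrieval side, the Python sketch below builds a NETCONF `<get>` RPC. A subtree filter asks the device for one interface from the standard `ietf-interfaces` YANG model, and the device would answer with an `<rpc-reply>` containing the matching data. It only constructs the XML. Opening the session and exchanging `<hello>` messages is assumed to be handled elsewhere. The function name and interface name are hypothetical.

```python
import xml.etree.ElementTree as ET

NC_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"            # NETCONF base namespace
IF_NS = "urn:ietf:params:xml:ns:yang:ietf-interfaces"        # ietf-interfaces module

ET.register_namespace("", NC_NS)   # serialize NETCONF elements without a prefix
ET.register_namespace("if", IF_NS)


def get_interface_rpc(message_id: int, if_name: str) -> str:
    """Build a NETCONF <get> RPC whose subtree filter selects one interface."""
    rpc = ET.Element(f"{{{NC_NS}}}rpc", {"message-id": str(message_id)})
    get = ET.SubElement(rpc, f"{{{NC_NS}}}get")
    flt = ET.SubElement(get, f"{{{NC_NS}}}filter", {"type": "subtree"})
    interfaces = ET.SubElement(flt, f"{{{IF_NS}}}interfaces")
    interface = ET.SubElement(interfaces, f"{{{IF_NS}}}interface")
    # A leaf with a value in a subtree filter is a "content match" node.
    ET.SubElement(interface, f"{{{IF_NS}}}name").text = if_name
    return ET.tostring(rpc, encoding="unicode")


if __name__ == "__main__":
    print(get_interface_rpc(101, "GigabitEthernet0/0"))
```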

YANG stands for Yet Another Next Generation; it is the data modeling language discussed earlier. Although YANG was originally developed for use with NETCONF, in practice it is not limited to NETCONF.

2)RESTCONF

RESTCONF is an alternative to NETCONF. It also uses the YANG data modeling language to define the configuration and operational data exchanged between devices and applications. RESTCONF operates similarly to NETCONF, but not identically. It is derived from REST (Representational State Transfer) APIs, which CSPs (Cloud Service Providers) commonly use to program their own computing infrastructure.

RESTCONF applies the same principles and operations as REST APIs to communication with network devices. It provides an alternative to NETCONF for accessing YANG-modeled configuration and operational data. Service providers may already use REST APIs to manage their computing resources. For them, RESTCONF offers a convenient common interface across computing and network infrastructure, with operations such as OPTIONS, GET, PUT, POST, and DELETE.
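The REST style shows up directly in the request a RESTCONF client sends. The target is a URL path that mirrors the YANG data tree, and the HTTP method is the operation. The Python sketch below builds, but does not send, a PUT that writes one `ietf-interfaces` list entry. It uses the RFC 8040 conventions:

* data resources under `/restconf/data`;
* list keys written as `list=key`;
* the `application/yang-data+json` media type.

It assumes the device's RESTCONF root is `/restconf`, which a client would normally discover through `/.well-known/host-meta`. Authentication is omitted, and the function name is hypothetical.

```python
import json
import urllib.parse
import urllib.request

YANG_JSON = "application/yang-data+json"   # RESTCONF media type (RFC 8040)


def restconf_interface_request(host: str, if_name: str, description: str,
                               method: str = "PUT") -> urllib.request.Request:
    """Build (but do not send) a RESTCONF request that writes one interface entry."""
    # List keys are percent-encoded, so "Gi0/0" becomes "Gi0%2F0".
    key = urllib.parse.quote(if_name, safe="")
    url = f"https://{host}/restconf/data/ietf-interfaces:interfaces/interface={key}"
    body = {
        # JSON encoding (RFC 7951): the top-level member is module-qualified.
        "ietf-interfaces:interface": [{
            "name": if_name,
            "description": description,
            "type": "iana-if-type:ethernetCsmacd",
            "enabled": True,
        }]
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={"Content-Type": YANG_JSON, "Accept": YANG_JSON},
    )
```

Sending the request would be a matter of passing it to `urllib.request.urlopen`, together with whatever credentials the device requires. A GET on the same URL would read the entry back, and DELETE would remove it.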

REST stands for Representational State Transfer. The REST architecture defines a mechanism for stateless communication between two entities in a client-server relationship. APIs that conform to the REST architecture are called RESTful APIs, a term often shortened to REST APIs.

HTTP is the most common transport for REST communication. REST defines its operations through a set of actions called REST methods. The common ones are POST (create an entry), GET (retrieve entries or data), DELETE (delete an entry or data from the server), PUT (replace existing data or an entry), and PATCH (modify existing data on the server).

To encode these actions and their associated information, REST commonly uses JSON (JavaScript Object Notation). XML or other formats are also possible, as long as the server can decode the information and understand the requested operation.

3)OpenConfig

OpenConfig is a framework that enables network devices to implement vendor-neutral programming interfaces. It was initiated by a group of network operators, including Google, AT&T, and BT. Their aim was to move the industry toward practical, use-case-driven models for configuring and monitoring network devices programmatically.

OpenConfig uses YANG models as its standard for data modeling. Although it does not mandate any particular underlying protocol, some vendors have adopted NETCONF to support the OpenConfig framework. OpenConfig also supports network monitoring through streaming telemetry data from devices.

Compared with traditional network monitoring methods such as SNMP, Syslog, and the CLI, streaming telemetry is a new way to collect data from network devices. Traditional methods collect data mainly by polling or in response to events. Streaming telemetry instead uses a push model: the network device streams the required operational state and data to a central server. It can be configured to send data periodically or when specific events occur.
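The two push behaviors can be sketched with a toy model in which the device, not the collector, decides when to send. This is a Python illustration that assumes a subscription is evaluated as new readings arrive. It pushes either on a fixed sample interval or only when the value changes. The class, method and path names are hypothetical, and no real telemetry protocol is modeled.

```python
class Subscription:
    """A hypothetical telemetry subscription held by the device (push side)."""

    def __init__(self, path, mode, interval=10):
        self.path = path          # data path being streamed (illustrative)
        self.mode = mode          # "sample" = periodic push, "on_change" = event push
        self.interval = interval  # seconds between pushes in "sample" mode
        self.next_due = 0
        self.last_value = None

    def updates_at(self, now, value):
        """Return an update to push at time `now`, or None if nothing is due."""
        if self.mode == "sample":
            if now < self.next_due:
                return None
            self.next_due = now + self.interval
            return (now, self.path, value)
        if value != self.last_value:          # "on_change" mode
            self.last_value = value
            return (now, self.path, value)
        return None


def run(subscription, readings):
    """Feed (time, value) readings to the subscription; collect pushed updates."""
    pushed = []
    for now, value in readings:
        update = subscription.updates_at(now, value)
        if update is not None:
            pushed.append(update)
    return pushed
```

For an interface whose status changes from "up" to "down" at t=12, a 10-second sample subscription pushes at t=0, 10 and 20. An on-change subscription pushes only at t=0 (the initial value) and t=12.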

4)XMPP

Some SDN controller vendors (such as Juniper Contrail and Nuage Networks) have begun to use XMPP (eXtensible Messaging and Presence Protocol) for communication between centralized controllers and network devices. XMPP is an open, free, and extensible communication protocol that provides XML-based real-time data exchange.

XMPP was originally developed as an open alternative to the instant messaging systems built by individual vendors. Its main features are as follows:

- Open and free.

- Based on IETF standards.

- Secure: supports TLS (Transport Layer Security) and SASL (Simple Authentication and Security Layer).

- Decentralized: any organization can run its own XMPP system and enhance it to meet specific needs.

- Flexible and extensible: custom functionality can be created using XML.

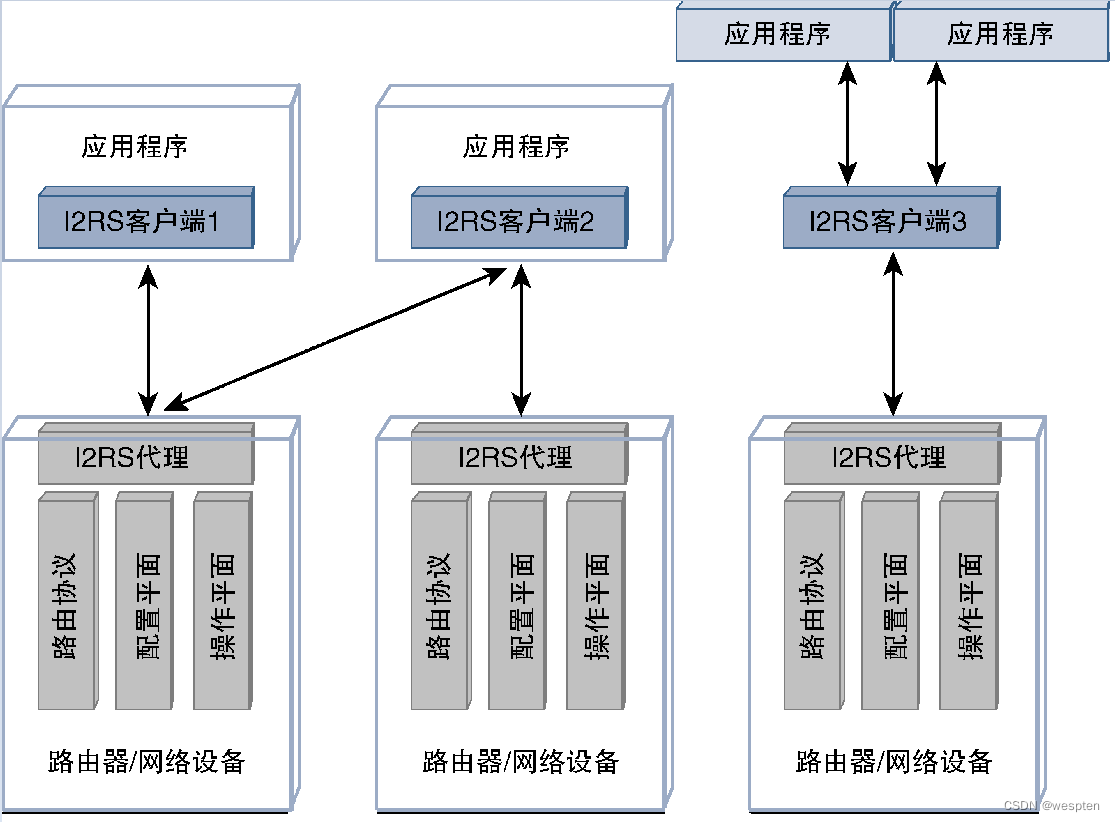

5)I2RS

I2RS (Interface to the Routing System) is an IETF working group that supports hybrid SDN implementations. Its purpose is to provide a way to programmatically access, query, and configure the routing infrastructure on network devices. In the I2RS view, the control plane does not need to be moved entirely out of the network devices, as the original SDN proposal required. Instead, I2RS proposes a way to influence distributed routing decisions, monitor devices, and push policies to them. This addresses problems such as limited device programmability, insufficient automation, and vendor lock-in.

I2RS defines an agent and a client. The I2RS agent runs on the network device. It interacts with routing components such as LDP, BGP (Border Gateway Protocol), OSPF (Open Shortest Path First), IS-IS (Intermediate System to Intermediate System) and the RIB manager, as well as with the device's operational and configuration planes.

The I2RS agent provides read and write access to I2RS clients running on separate devices. A client can control routing parameters or retrieve routing information by querying the agent. An I2RS client can also subscribe to event notifications from the agent, so the agent pushes changes in any subscribed routing component to the client.
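The agent/client relationship can be sketched as a small publish-subscribe model. The Python below is an illustrative stand-in, not the I2RS data models or protocol. It assumes the agent exposes a routing table as a simple prefix-to-next-hop map, and that clients register callbacks for the prefixes they care about. All class and method names are hypothetical. Several clients share one agent, reflecting the requirement that an agent serve multiple clients.

```python
class I2RSAgent:
    """Hypothetical stand-in for an I2RS agent on a router.

    It offers read/write access to a routing table and pushes change
    notifications to subscribed clients.
    """

    def __init__(self):
        self.rib = {}           # prefix -> next hop
        self.subscribers = {}   # prefix -> list of callbacks

    def read(self, prefix):
        return self.rib.get(prefix)

    def write(self, prefix, next_hop):
        self.rib[prefix] = next_hop
        for notify in self.subscribers.get(prefix, []):
            notify(prefix, next_hop)   # push model: the agent calls the client

    def subscribe(self, prefix, callback):
        self.subscribers.setdefault(prefix, []).append(callback)


class I2RSClient:
    """Hypothetical client, e.g. code embedded in an application."""

    def __init__(self, name, agent):
        self.name = name
        self.agent = agent
        self.events = []        # notifications received from the agent

    def set_route(self, prefix, next_hop):
        self.agent.write(prefix, next_hop)

    def get_route(self, prefix):
        return self.agent.read(prefix)

    def watch(self, prefix):
        self.agent.subscribe(prefix, lambda p, nh: self.events.append((p, nh)))
```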

The I2RS architecture requires the agent to support and process requests from multiple external clients. An I2RS client can either be code embedded in an application or sit between the routing device and the application, as shown in the figure below.

4. Northbound protocols

The northbound protocol is the interface between the SDN controller and upper-layer applications, as shown in the figure below.

Applications typically perform service orchestration or make and implement decisions based on application-defined logic or policies. Communication between an SDN controller and an application is no different from communication between any two software entities, so it needs no special new protocol. Many existing mechanisms can carry northbound communication, such as RESTful APIs or libraries in programming languages such as Python, Ruby, Go, Java, and C++.

5. NETCONF, RESTCONF, and YANG revisited

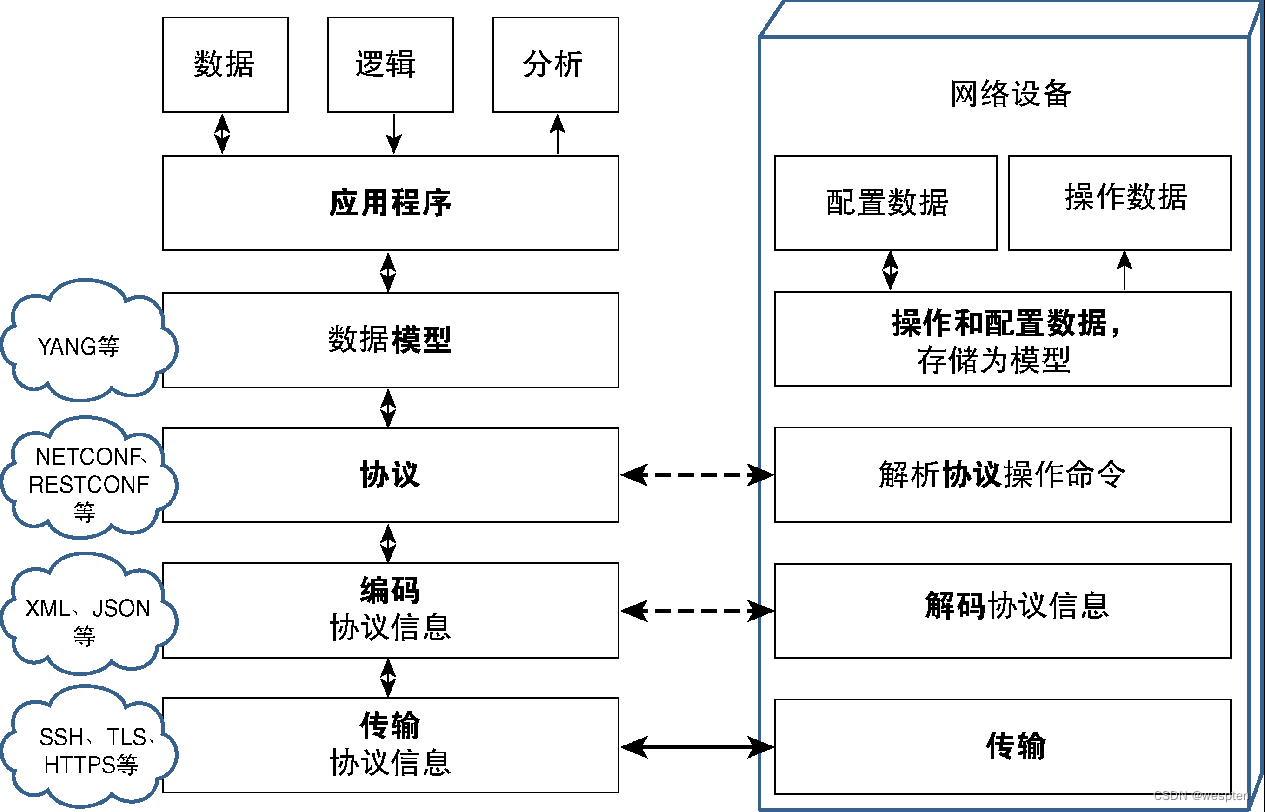

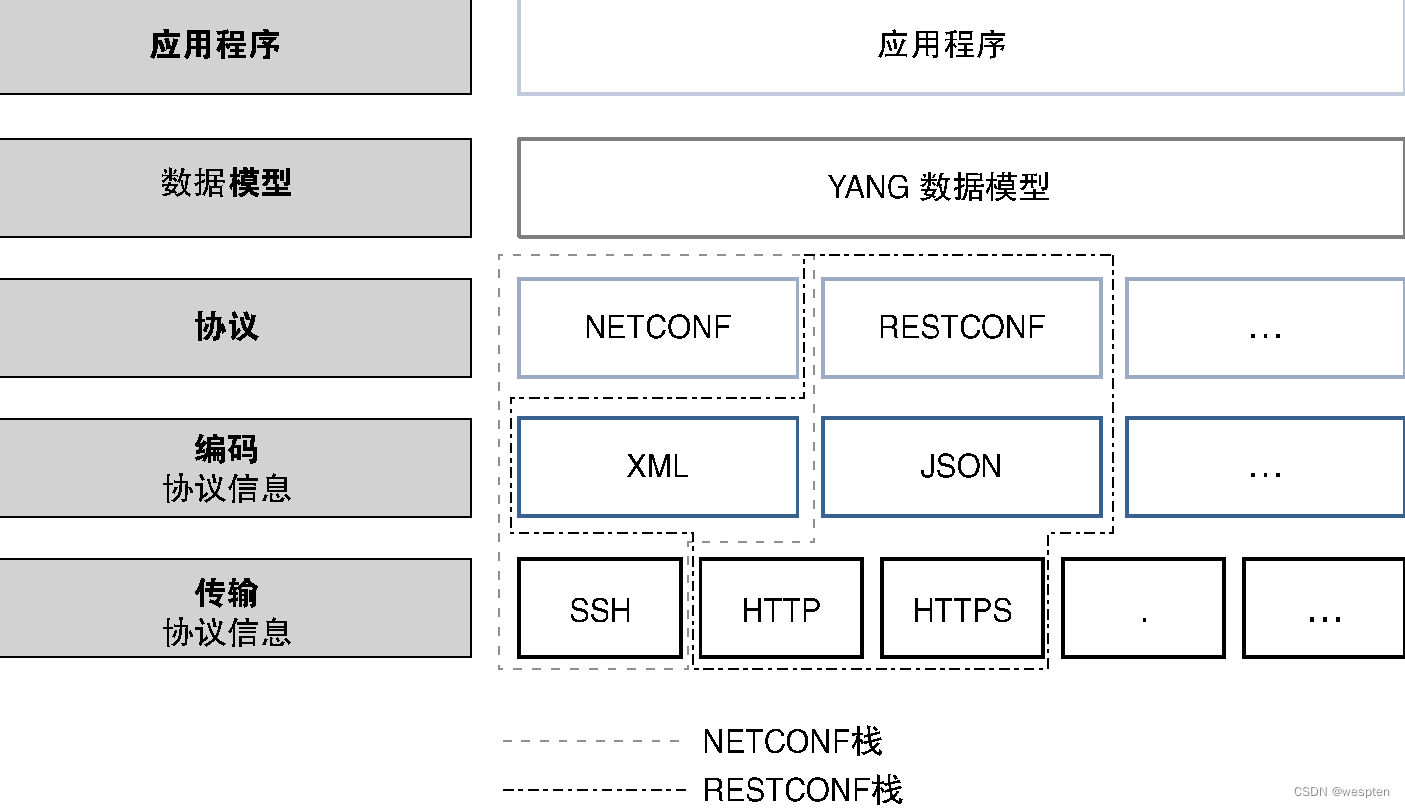

As mentioned earlier, both NETCONF and RESTCONF use the YANG data modeling language for information exchange. No discussion of these protocols would be complete without examining the encoding techniques and transport mechanisms they use. This section starts with the relationship between these components.

As the figure below shows, data (including operational data, configuration data, and user data), together with programming logic and analysis modules, makes up the recipe of an application. (A recipe file contains information about a given piece of software.) If the application needs to configure network devices, it can use a data modeling language such as YANG to structure the configuration information.

Once the configuration information has been structured, a configuration protocol such as NETCONF defines the type of operation to perform on the data. For example, NETCONF can deliver the configuration data with the edit-config operation. Next, the protocol operation and the modeled data must be encoded. Finally, a transport protocol such as SSH (Secure Shell), HTTPS, or TLS carries the information to the device.
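The layers in this pipeline can be traced in the small Python sketch below:

1. **Data model:** structure the data per the `ietf-interfaces` YANG model.
2. **Protocol operation:** wrap the data in a NETCONF `<edit-config>` RPC.
3. **Encoding:** serialize the RPC as XML.
4. **Transport framing:** apply the RFC 6242 chunked framing used in NETCONF 1.1 sessions, or the older `]]>]]>` end-of-message marker used in 1.0 sessions.

It assumes the device accepts writes to the running datastore, which depends on its advertised capabilities. The SSH session itself (the `netconf` SSH subsystem, normally on port 830) is not shown, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

NC_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"
IF_NS = "urn:ietf:params:xml:ns:yang:ietf-interfaces"
ET.register_namespace("", NC_NS)
ET.register_namespace("if", IF_NS)


def build_edit_config(message_id: int, if_name: str, description: str) -> bytes:
    # 1) Data, structured according to the ietf-interfaces YANG model.
    config = ET.Element(f"{{{NC_NS}}}config")
    interfaces = ET.SubElement(config, f"{{{IF_NS}}}interfaces")
    interface = ET.SubElement(interfaces, f"{{{IF_NS}}}interface")
    ET.SubElement(interface, f"{{{IF_NS}}}name").text = if_name
    ET.SubElement(interface, f"{{{IF_NS}}}description").text = description

    # 2) Protocol operation: wrap the data in an <edit-config> RPC.
    rpc = ET.Element(f"{{{NC_NS}}}rpc", {"message-id": str(message_id)})
    edit = ET.SubElement(rpc, f"{{{NC_NS}}}edit-config")
    target = ET.SubElement(edit, f"{{{NC_NS}}}target")
    ET.SubElement(target, f"{{{NC_NS}}}running")   # assumed writable datastore
    edit.append(config)

    # 3) Encoding: NETCONF messages are XML documents.
    return ET.tostring(rpc, encoding="unicode").encode("utf-8")


def frame_chunked(message: bytes) -> bytes:
    """4) NETCONF 1.1 chunked framing over SSH (RFC 6242), as a single chunk."""
    return b"\n#%d\n" % len(message) + message + b"\n##\n"


def frame_eom(message: bytes) -> bytes:
    """4) NETCONF 1.0 end-of-message framing (RFC 6242)."""
    return message + b"]]>]]>"
```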

Network devices need to have the ability to communicate using the same transport method. Similarly, network devices need to decode these protocol message data and pass them to the protocol code to determine the type of operation that must be performed. Network devices are required to understand the data modeling language used and recognize configuration and operational data within a predefined structure. Since the data model used by both the application and the device is the same, parameters and fields related to the data being exchanged can be easily parsed.

The differences between data models, protocols, and encodings can be confusing at first.

For example, although YANG-modeled data can be expressed in JSON, the data model should not be confused with the protocol's encoding. To understand these concepts better, consider an analogy with everyday human conversation:

- Transmission medium: air.

- Encoding: phonemes and sounds.

- Protocol: The language used (eg English).

- Data model: grammatical structure (words in a language have no meaning unless they are formed into correct sentences).

- Application: the tongue and ears, the human organs of speech and hearing.

- Data logic and analysis: the human brain.

With this in mind, NETCONF and RESTCONF can be examined further. Both are popular, widely used configuration protocols that let standards-based, cross-platform, cross-vendor applications configure network devices.

Both NETCONF and RESTCONF use YANG as their data modeling language, and both are standardized in RFCs. NETCONF uses XML encoding, while RESTCONF supports both JSON-based and XML-based encoding.
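The sketch below separates the data model from its encoding. It serializes the same `ietf-interfaces` entry twice:

* in the XML encoding used by NETCONF, where elements belong to the module's XML namespace;
* in the JSON encoding that RESTCONF also accepts, where RFC 7951 prefixes top-level member names with the module name.

The model and the values are identical; only the encoding differs. Only a few leaves are shown, and the function names are hypothetical.

```python
import json
import xml.etree.ElementTree as ET

IF_NS = "urn:ietf:params:xml:ns:yang:ietf-interfaces"
ET.register_namespace("", IF_NS)

# One interface entry, structured according to the ietf-interfaces YANG model.
interface = {"name": "eth0", "description": "uplink", "enabled": True}


def encode_xml(entry: dict) -> str:
    """XML encoding: elements live in the module's XML namespace."""
    interfaces = ET.Element(f"{{{IF_NS}}}interfaces")
    node = ET.SubElement(interfaces, f"{{{IF_NS}}}interface")
    for leaf, value in entry.items():
        # YANG booleans are written as the literals "true" / "false".
        text = str(value).lower() if isinstance(value, bool) else str(value)
        ET.SubElement(node, f"{{{IF_NS}}}{leaf}").text = text
    return ET.tostring(interfaces, encoding="unicode")


def encode_json(entry: dict) -> str:
    """JSON encoding (RFC 7951): top-level member names are module-qualified."""
    return json.dumps({"ietf-interfaces:interfaces": {"interface": [entry]}})


if __name__ == "__main__":
    print(encode_xml(interface))
    print(encode_json(interface))
```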

At the transport level, the NETCONF standard calls for a secure, authenticated transport that provides data integrity and confidentiality. Although some flexibility is allowed, SSH is mandatory to implement. RESTCONF runs over HTTP; in practice, it is used over HTTPS (HTTP secured with TLS).

The diagram below lists the relationship between these modules and common options for RESTCONF and NETCONF.

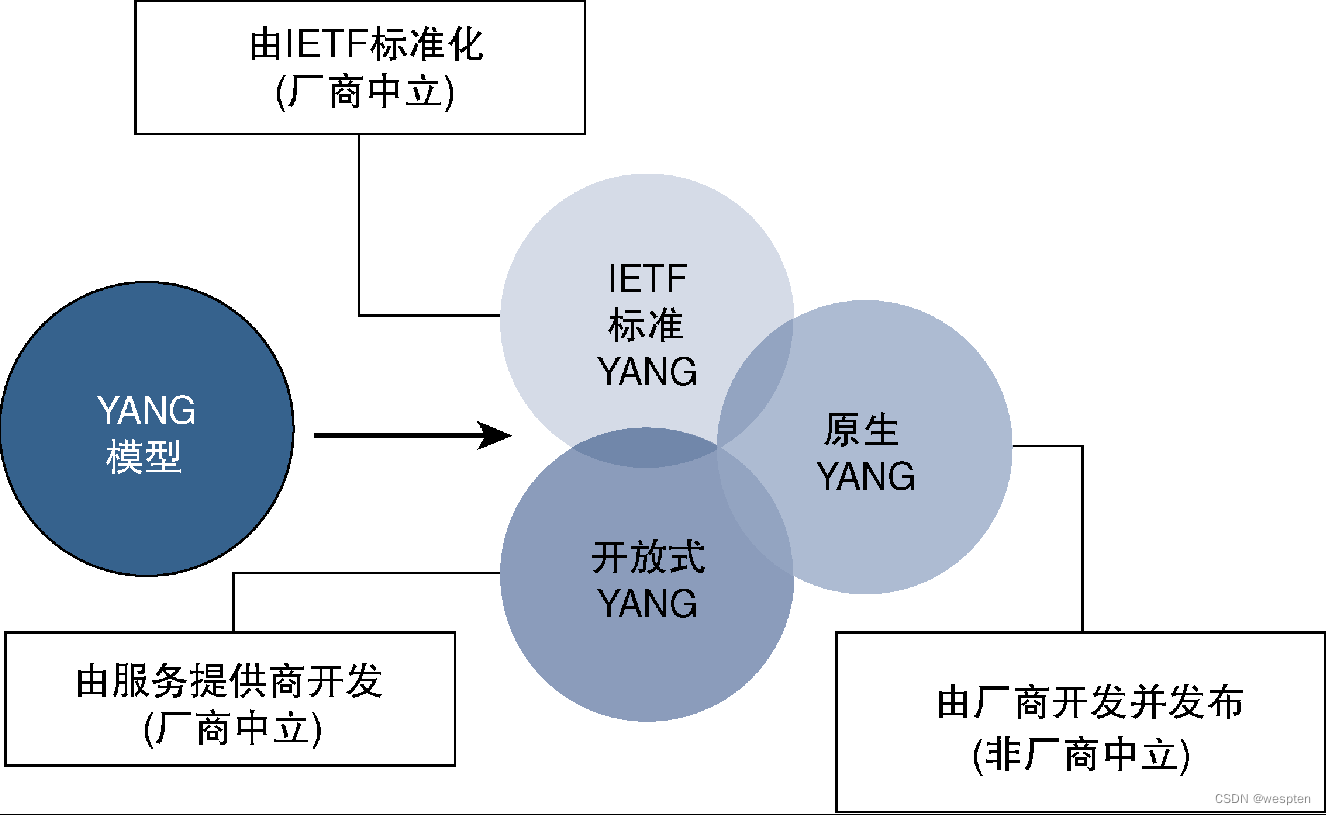

6. More about YANG models

Ideally, YANG models for all features and operational data would be fully standardized. Through the IETF's continuing efforts, common standard YANG models are now available for many features and configurable parameters. These IETF YANG models work seamlessly across vendors. Their drawback is that they cannot express vendor-specific configuration parameters or vendor enhancements for manipulating data.

As the figure below shows, many vendors have developed their own YANG models, either from scratch or by modifying the standard models. These are usually called native YANG models, and they fit the vendor's own implementation more closely. Vendors publish them to public repositories so that application developers can import and use them. Although this approach departs from the standards, it is pragmatic and preserves both flexibility and openness.

The third type of YANG model is promoted by service providers. Under the leadership of the OpenConfig working group, service providers concluded that the IETF standardization process was too slow for their needs and sometimes overly influenced by vendors. They therefore began developing and publishing their own YANG models to fill the gap.

The YANG models mentioned above are all network-element-level models. They describe the configuration of a feature or the structure of operational data (such as an interface's bit rate or a protocol's route scale) on an individual network element.

YANG models can also define a complete network service. A service-level YANG model describes the structure and parameters of an entire service, such as an L3VPN or VPLS service, and an orchestrator can use it to deploy the whole service. IETF drafts define many such models, but vendors and providers often develop their own to meet specific service deployment needs.

The following figure shows the relationship between these two types of YANG models:

4. SDN controllers in detail

The main role of the SDN controller and the various protocols used have been introduced above. Next, the common SDN controllers available at present will be discussed, including open source SDN controllers and commercial SDN controllers provided by network equipment suppliers.

All SDN controllers should have the following capabilities:

- Communicate with a variety of network devices, which can be achieved by supporting multiple SDN southbound protocols.

- Provide open and/or well-documented northbound APIs for developing applications that interact with the controller.

- Maintain a global view of the network.

- Monitor network events and allow response actions to be defined for them.

- Perform path computation and decision making for the network.

- Provide high availability.

- Provide modularity and flexibility, so that the network can be programmed and customized to meet changing requirements or emerging protocols. The controller must also be scalable, able to grow as demand grows.

Some of the common SDN controllers currently available are discussed in detail next.

1. Open source SDN controller

Many open source SDN controllers are available from vendors and the open source community. Like other open source software, they carry no license fees, and anyone can take the code and use it as is or modify it as needed. These benefits, however, depend on the support and development capacity of the open source community.

Some common open source SDN controllers are discussed below:

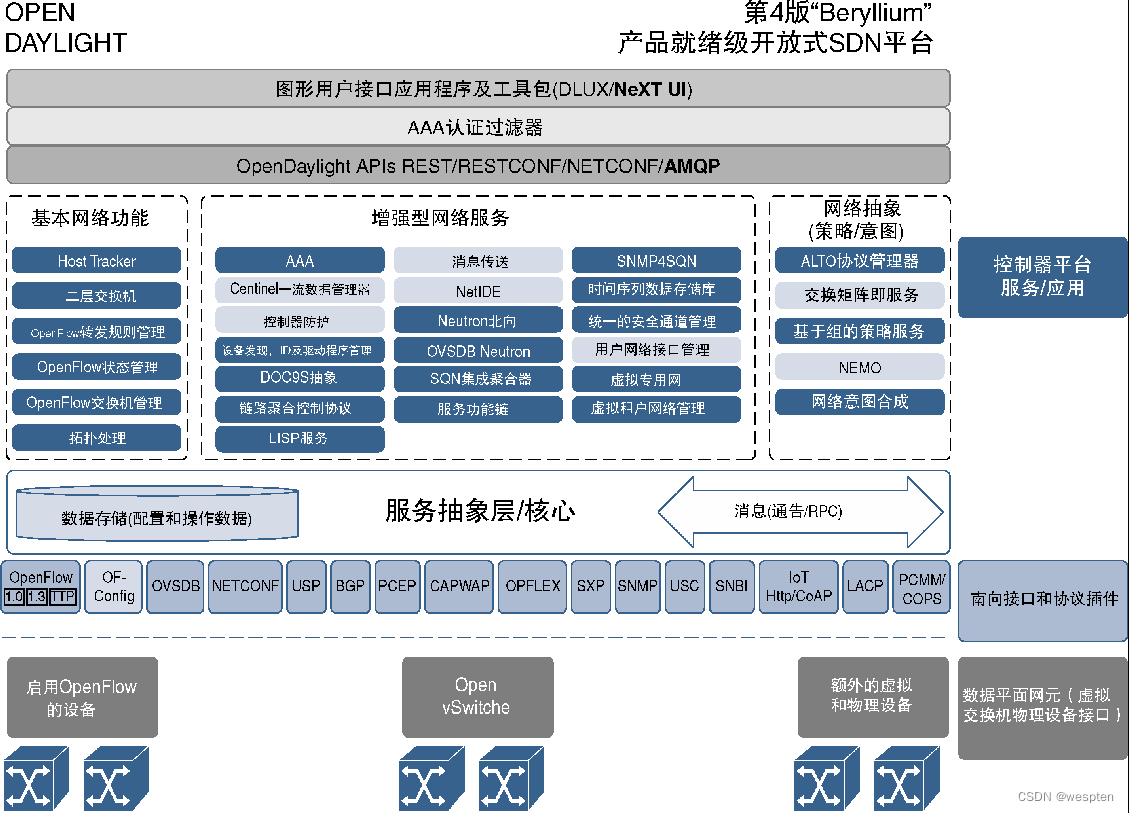

1. ODL (OpenDaylight)

The OpenDaylight Foundation was established mainly by network vendors to provide an open SDN platform that supports multi-vendor network environments. The foundation develops and maintains the OpenDaylight SDN controller, which has become the de facto standard open source SDN platform in the networking world.

ODL uses a modular, flexible microservice architecture, so only the required protocols and services need to be installed. ODL supports many common southbound protocols (such as OpenFlow, PCEP, BGP, NETCONF, SNMP, and LISP [Locator/ID Separation Protocol]) and northbound APIs (such as RESTCONF). This broad southbound support makes ODL well suited to semi-open deployments, which may require specific protocols. ODL is implemented purely in software and runs in a Java virtual machine (JVM).

The following figure shows the ODL architecture diagram:

Because ODL is open source, many vendors (such as HP, Cisco, and Oracle) contribute code to it, mainly to support interaction between ODL and their own devices. Some vendors also build their own products on top of open source ODL, adding functions and offering them with technical support as commercial products.

ODL releases are named after elements of the periodic table. The first release, Hydrogen, debuted in early 2014 and was followed by Helium, Lithium, and Beryllium. At the time of writing, the fifth release, Boron, was available.

2. Ryu

Ryu is an open source SDN controller supported by the open source community. It is written entirely in Python, uses a component-based design, and has a well-documented API, making it easy to develop applications that interact with it. Through its southbound libraries, Ryu supports major southbound protocols such as OpenFlow, OF-CONFIG, NETCONF, and BGP.

Ryu supports multi-vendor network equipment and has been deployed in the data centers of NTT (Nippon Telegraph and Telephone Corporation).

The following figure shows the deployment architecture based on the Ryu SDN controller:

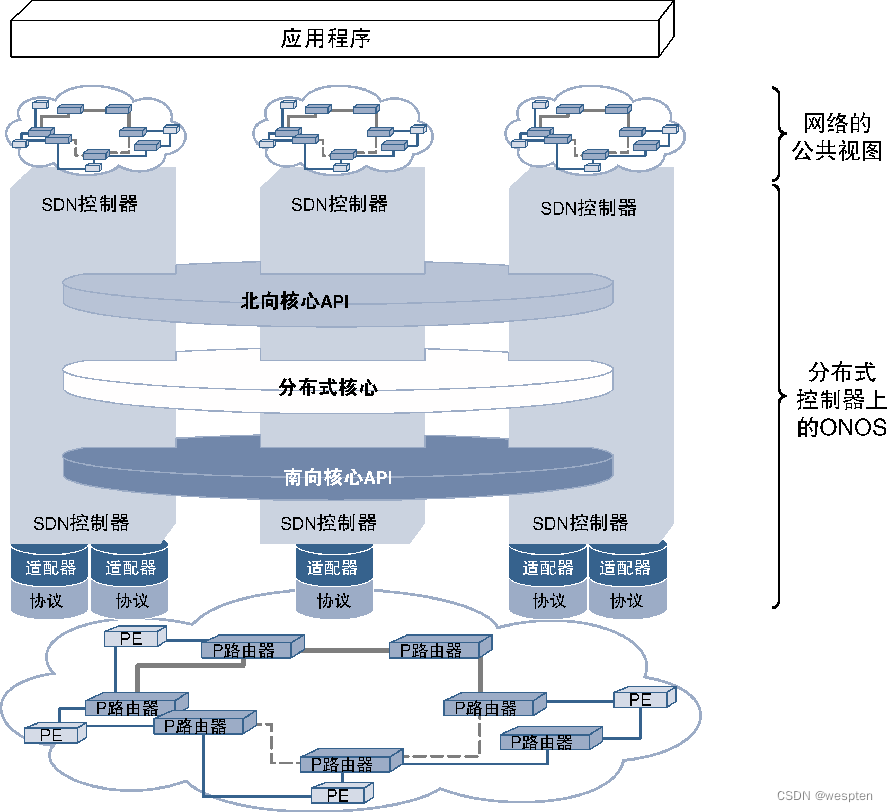

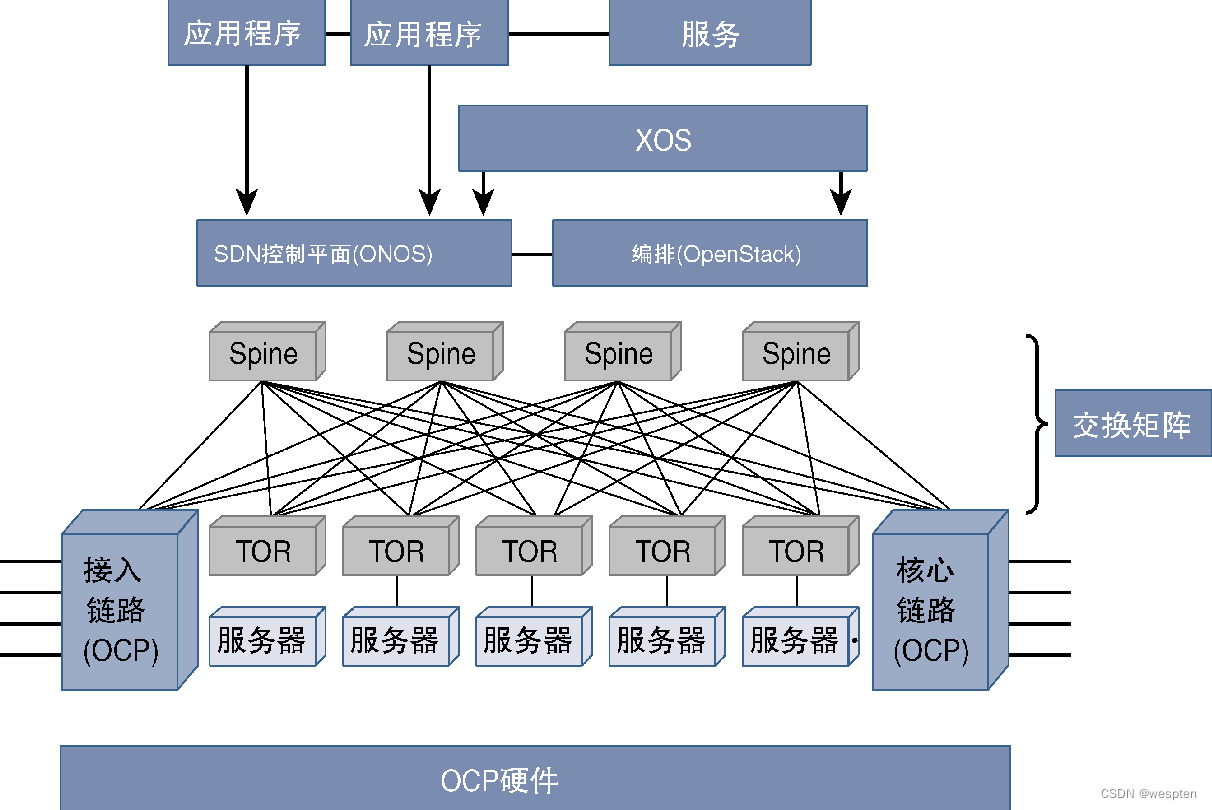

3. ONOS

ONOS (Open Network Operating System) is a distributed SDN operating system designed for high availability and carrier-grade SDN. ONOS was released as open source software in 2014, with the aim of giving service providers an open source platform for building software-defined networks. It is now supported by a large number of service providers, vendors, and other partners, and new members continue to join the ONOS community.

The figure below shows a schematic diagram of the ONOS architecture, which emphasizes the distributed core of ONOS, which is the fundamental reason why ONOS has high availability and flexibility to meet the carrier-level standards.

The distributed core layer sits between the northbound core API and the southbound core API, which give the distributed core protocol-independent interfaces in each direction. The distributed core coordinates the whole cluster. It handles the state management and data management operations initiated from the northbound and southbound cores, ensuring that the controller instances across the network work in concert. It maintains a shared view of the entire network rather than isolated views limited to each instance's own visibility, so every application that interacts with ONOS sees the same view of the network. This is the core advantage of the ONOS distributed architecture.

ONOS adopts pluggable adapters on the southbound core API and supports a variety of popular SDN southbound protocols, so it is extremely flexible. The northbound API allows applications to interact with and use ONOS without having to understand the deployment of distributed ONOS.

ONOS releases are named after birds, and the ONOS logo is also a bird.

At the time of writing, the latest ONOS release was Hummingbird. A common use of ONOS is in the CORD (Central Office Re-architected as a Datacenter) project, whose purpose is to accelerate the adoption of SDN and NFV technologies by service providers.

4. OpenContrail

OpenContrail is an open source SDN platform developed by Juniper that implements SDN through the overlay model. It uses network virtualization to decouple the overlay network, whose forwarding uses encapsulations such as MPLS or VXLAN, from the underlying physical forwarding. Control functions are handled by the SDN controller.

OpenContrail is released under the Apache 2.0 license and provides additional functions such as virtual routers and common northbound APIs.

2. Commercial SDN controller

Many SDN controllers have commercial versions. These come not only from network equipment vendors but also from many newcomers to the industry, who hope to win market share with better and more profitable SDN controllers. As mentioned above, many vendors base their own SDN controllers on ODL and add enhanced functions, roadmaps, and technical support. Others develop their own SDN controllers from scratch.

Common commercial SDN controllers are as follows:

1. VMware NSX

VMware NSX was the first SDN controller developed by a vendor. It was originally developed by a startup called Nicira, which VMware later acquired. NSX implements SDN with an overlay network: it can create VXLAN-based overlays and also provides routing, firewall, switching, and other network functions. The NSX platform can run on any hardware and hypervisor. It gives end users logical network functions such as logical load balancers and logical routers, along with a flexible, programmable network.

2. Cisco SDN Controller

Cisco has developed several SDN controllers for different market segments. It first developed the open Cisco XNC (eXtensible Network Controller), which supported southbound communication through Cisco's onePK, and later joined other vendors as a founding member of ODL. Cisco supports a commercial version of ODL called Cisco OSC (Open SDN Controller), which is built on ODL and supports standard southbound and northbound APIs and related protocols.

Cisco's SDN controller solution for data centers and enterprises is called APIC (Application Policy Infrastructure Controller), and the enterprise version is APIC-EM (APIC Enterprise Module).

The data center version of APIC is called APIC-DC (APIC Data Center) and is part of the Cisco ACI ecosystem, which is built on Cisco proprietary solutions. APIC-DC is the core component of ACI, supporting network programmability, management and deployment, policy enforcement, and network monitoring. It provides GUI and CLI interfaces for northbound interaction. For southbound communication, it supports the proprietary iVXLAN protocol (which implements SDN through a VXLAN overlay) and the OpFlex protocol (developed by Cisco and later released as an open protocol).

In addition, Cisco offers an open, standards-based SDN controller called Cisco VTS (Virtual Topology System) for managing and configuring overlay networks. VTS provides its SDN overlay function through MP-BGP EVPN (Multiprotocol BGP Ethernet Virtual Private Network). Cisco VTS supports a REST-based northbound API for integration with other OSS/BSS systems, as well as a rich set of southbound protocols such as RESTCONF/YANG and the Nexus NX-OS API.

In VXLAN-based deployments, Layer 2 addresses are carried over a Layer 3 transport. A VXLAN endpoint can learn the Layer 2 MAC addresses of end hosts in two ways: through flood-and-learn in the data path, or by exchanging MAC addresses through a control protocol. MP-BGP EVPN takes the control-protocol approach, with MP-BGP exchanging MAC addresses between the VXLAN endpoints.
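The two learning methods can be contrasted with a toy model of VXLAN tunnel endpoints (VTEPs), sketched in Python. Each VTEP keeps a MAC table that maps a host MAC address to the IP address of the remote VTEP behind it.

* **Known destination:** the frame is sent through a single tunnel.
* **Unknown destination:** the frame is flooded to every other VTEP (ingress replication).
* **Flood-and-learn:** the table is filled from received data traffic.
* **EVPN:** BGP distributes the entries in advance as MAC/IP Advertisement (route type 2) routes.

The class and function names are hypothetical, and BGP itself is reduced to a loop over peers.

```python
class Vtep:
    """Toy VXLAN tunnel endpoint with a MAC table: MAC -> remote VTEP IP."""

    def __init__(self, ip):
        self.ip = ip
        self.mac_table = {}

    def send(self, dst_mac, peers):
        """Return the VTEP IPs a frame for dst_mac is tunneled to."""
        if dst_mac in self.mac_table:
            return [self.mac_table[dst_mac]]            # known unicast: one tunnel
        return [p.ip for p in peers if p is not self]   # unknown: flood to all peers

    def learn_from_data(self, src_mac, remote_vtep_ip):
        """Flood-and-learn: learn from the outer source IP of a received frame."""
        self.mac_table[src_mac] = remote_vtep_ip

    def receive_evpn_route(self, mac, next_hop):
        """Control-plane learning: install a MAC/IP Advertisement (route type 2)."""
        self.mac_table[mac] = next_hop


def advertise_local_mac(origin, mac, peers):
    """Stand-in for MP-BGP distributing origin's local MAC to every other VTEP."""
    for peer in peers:
        if peer is not origin:
            peer.receive_evpn_route(mac, origin.ip)
```

Before any advertisement, a frame for an unknown MAC is flooded to all other VTEPs. Once the owning VTEP advertises the MAC through EVPN, every peer sends that traffic through a single tunnel without first flooding.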

3. Juniper Contrail

Juniper Contrail is the commercially supported version of Juniper's open source OpenContrail SDN platform, as shown in the figure below.

Like OpenContrail, the commercial version implements SDN with an overlay model: it works with the existing physical network and deploys a network virtualization layer on top of it. For southbound communication, Contrail uses XMPP to communicate with Juniper's virtual router (vRouter), and it also supports NETCONF and BGP.

Juniper exited the ODL project in 2016 and currently supports Juniper Contrail and OpenContrail as its SDN controllers.

4. Big Network Controller

Big Switch Networks is one of the few companies that entered the SDN controller market early and contributed 3 important projects to the SDN open source community.

- Floodlight: Open source SDN controller.

- Indigo: Supports OpenFlow in physical and virtual environments.

- OFTest: A framework for testing OpenFlow conformance on switches.

Commercially, Big Switch's SDN controller has evolved from the original Floodlight project into the market-oriented Big Network Controller. This controller supports standard southbound protocols (such as OpenFlow), follows the classic SDN implementation approach, and works with both physical and virtual devices.

5. Nokia Nuage VSP

Nokia provides SDN controller solutions through Nuage VSP (Virtual Service Platform). This product was developed by Nuage Networks, a venture launched by Alcatel-Lucent, and became part of Nokia when Nokia acquired Alcatel-Lucent.

VSP consists of three main components. The VSC (Virtual Services Controller) is the main SDN controller; it programs the data forwarding plane and supports the OpenFlow protocol. Northbound, the VSC communicates through XMPP with the VSD (Virtual Service Directory), which acts as the policy engine. Similar to Open vSwitch, Nuage also has a VRS (Virtual Routing and Switching) platform, which integrates with the hypervisor to provide network functions.

6. SD-WAN controller

As mentioned earlier, SDN is gradually penetrating every level of the network, and SD-WAN is one of its important application areas. Several vendors already offer usable SD-WAN controllers. Because these controllers are similar in architecture, they are described here in general terms.

At present, the enterprise WAN market has just begun to adopt SDN technology. In addition to traditional suppliers, there are many new entrants trying to occupy this market. The SD-WAN controllers provided by large companies on the market include Cisco’s APIC-EM, Riverbed’s SteelConnect and Viptela's vSmart Controller.

The main functions of these products are as follows:

- Cisco APIC-EM: this feature-rich SD-WAN solution is part of the Cisco IWAN (Intelligent WAN) solution.

  - Works with any WAN link technology.

  - Uses DMVPN for site-to-site communication.

- Riverbed SteelConnect:

  - Uses an application database to steer traffic across different WAN links, adding value for customers who use cloud applications such as Microsoft Office 365, Salesforce, and Box.

  - Uses Riverbed's SteelHead CX platform to deliver SaaS services, dynamically creating virtual machines closer to branches or end users to provide low latency, low jitter, and high-speed access.

- Viptela vSmart Controller:

  - The SD-WAN solution is part of the Viptela SEN (Secure Extensible Network) platform, which also includes the vManage application for network management and the vEdge routers.

  - Simplifies deployment and management operations and enables plug-and-play of access devices.

  - The control plane and data plane communicate through a proprietary protocol.

  - The controller and configuration management software are free; customers pay only for the edge hardware.

  - Uses L3VPN for inter-site communication.
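Application-aware steering across several WAN links is the common thread in these controllers. The Python sketch below is a hypothetical, vendor-neutral illustration, not the algorithm of any product named above: each application gets assumed SLA thresholds (latency, jitter, loss), the controller picks the cheapest link that meets them, and it falls back to the lowest-latency link that is up when none qualifies. The `WanLink` fields, cost values, and `APP_SLAS` table are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class WanLink:
    name: str
    latency_ms: float
    jitter_ms: float
    loss_pct: float
    cost: int          # hypothetical relative cost per unit of traffic
    up: bool = True


# Hypothetical per-application SLA thresholds: (max latency ms, max jitter ms, max loss %).
APP_SLAS = {
    "voice": (50, 5, 1.0),
    "office365": (100, 30, 1.0),
}
DEFAULT_SLA = (200, 100, 5.0)


def select_link(app: str, links: list[WanLink]):
    """Return the name of the cheapest SLA-compliant link that is up,
    else the lowest-latency link that is up, else None."""
    max_lat, max_jit, max_loss = APP_SLAS.get(app, DEFAULT_SLA)
    up_links = [l for l in links if l.up]
    compliant = [
        l for l in up_links
        if l.latency_ms <= max_lat and l.jitter_ms <= max_jit and l.loss_pct <= max_loss
    ]
    if compliant:
        # Prefer cheap links (e.g. Internet) whenever they meet the SLA.
        return min(compliant, key=lambda l: (l.cost, l.latency_ms)).name
    if up_links:
        return min(up_links, key=lambda l: l.latency_ms).name
    return None


links = [
    WanLink("mpls", latency_ms=30, jitter_ms=2, loss_pct=0.1, cost=10),
    WanLink("internet", latency_ms=45, jitter_ms=8, loss_pct=0.5, cost=1),
    WanLink("lte", latency_ms=90, jitter_ms=40, loss_pct=2.0, cost=5),
]
print(select_link("voice", links))      # mpls
print(select_link("office365", links))  # internet
```

Preferring the cheapest compliant link reflects the cost-reduction motive behind SD-WAN; a real controller would refresh the link measurements continuously and re-run the selection.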

5. SDN Application Cases

SDN was originally considered a solution dedicated to data center scalability and traffic-control problems. This new technology later spread to many other network fields and has found practical applications in them.

Because the protocols and technologies SDN uses differ from one network field to another, depending on the specific solution, this section analyzes the role of SDN in five different network fields, as shown in the figure below.

1. SDN in the data center (SDN DC)

Although data centers have existed since the mainframe era, they have grown exponentially in size and capacity over the past decade or so. The rise of the Internet and the cloud, together with the trend of service providers running online businesses to meet consumer demand, has driven explosive growth in data centers. The result is large-scale data centers housing thousands of servers, occupying tens of acres of land, and consuming several megawatts of power.

1. Issues and Challenges

The growth of data centers has been the main driving force behind server virtualization. Although virtualization has improved the space utilization, energy efficiency, and cost efficiency of data center facilities, it has also posed new and serious challenges for the network architecture that interconnects these virtual servers.

One challenge is that VLAN (Virtual LAN) scalability is limited to 4096 IDs. Virtual servers are usually located in the same Layer 2 domain, and VLANs are needed to isolate them to support multi-tenant applications. In addition, the proliferation of enterprise cloud-hosted services has created the need to stretch enterprise VLAN domains across multiple data centers, putting enormous pressure on the available VLAN space.

To solve this problem, the VXLAN (Virtual Extensible LAN) protocol was introduced. VXLAN provides Layer 2 adjacency for virtual servers across a Layer 3 network. Because segments are identified by a 24-bit VXLAN ID, a VXLAN overlay network can scale to about 16 million segments, which solves the scalability problem described above. However, VXLAN also brings new challenges: managing, monitoring, and programming the overlay network.
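The 4096 and 16 million figures follow directly from the width of the ID fields: 12 bits for the 802.1Q VLAN ID and 24 bits for the VXLAN Network Identifier (VNI). The Python sketch below computes both ID spaces and packs the 8-byte VXLAN header as laid out in RFC 7348 (a flags byte with the I bit set, reserved bits, the 24-bit VNI, and a reserved byte). The function names are illustrative, and only the VXLAN header is built, not the outer UDP/IP encapsulation.

```python
import struct

VLAN_ID_BITS = 12  # 802.1Q VLAN ID field (IDs 0 and 4095 are reserved)
VNI_BITS = 24      # VXLAN Network Identifier field
I_FLAG = 0x08      # "VNI is valid" flag in the first header byte


def id_space(bits: int) -> int:
    """Number of distinct identifiers a field of `bits` bits can hold."""
    return 2 ** bits


def vxlan_header(vni: int) -> bytes:
    """Pack the 8-byte VXLAN header: flags(8) reserved(24) VNI(24) reserved(8)."""
    if not 0 <= vni < id_space(VNI_BITS):
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", I_FLAG << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from an 8-byte VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header)
    if not flags_word & (I_FLAG << 24):
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8


print(id_space(VLAN_ID_BITS))    # 4096
print(id_space(VNI_BITS))        # 16777216
print(vxlan_header(5000).hex())  # 0800000000138800
```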

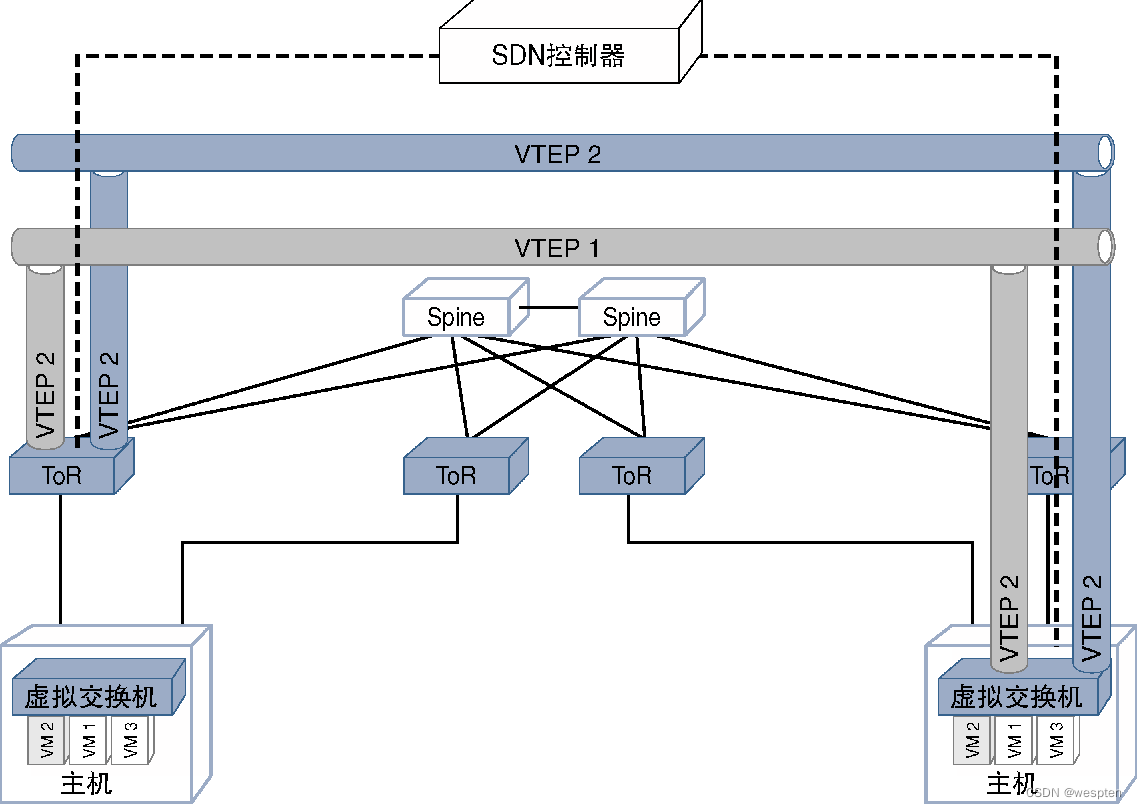

The following figure shows a VXLAN-based overlay network:

2. SDN solutions

The endpoints of a VXLAN overlay network, the VTEPs (VXLAN Tunnel End Points), are usually located on ToR (Top of Rack) switches or on the hosts' virtual switches. In both cases, each VTEP must be programmed and associated with the tenant virtual machines it serves.

Virtual machines in large-scale data centers are deployed with orchestration tools such as OpenStack, which deploy VMs automatically, so a virtual machine can end up on any physical server. However, connecting these VMs through VXLAN requires a mechanism that can see the entire network. This is where SDN comes in: the SDN controller has a complete view of the network, can coordinate with the virtualization orchestration tools, and programs VTEP and VXLAN information into the forwarding plane (located in the ToR or virtual switch).

As can be seen from the figure below, the SDN controller communicates with each switch and creates VTEP interfaces based on the virtual machines configured on the servers that the switch serves.

Because virtual machines may be moved between physical servers or removed, VTEP information may need to be reprogrammed or deleted; the SDN controller handles this as well.
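The controller's job here can be sketched as "derive VTEP state from VM placement, then reprogram whatever changed". The Python sketch below is a hypothetical model, not the API of OpenStack or any SDN controller: the orchestrator reports each VM's VNI and hosting VTEP, `desired_state` computes which VNIs each VTEP must carry and which remote VTEPs it must tunnel to, and `vteps_to_reprogram` lists the VTEPs whose state changed after a VM migration or removal.

```python
from collections import defaultdict


class OverlayController:
    """Hypothetical controller that derives VTEP state from VM placement.

    vm_location maps vm_id -> (vni, vtep_ip). A VTEP must carry a VNI if it
    hosts at least one VM in that VNI, with every other such VTEP as a
    remote tunnel endpoint.
    """

    def __init__(self):
        self.vm_location = {}

    def vm_attached(self, vm_id, vni, vtep_ip):
        # Reported by the orchestrator; re-attaching an existing vm_id to a
        # different VTEP models a VM migration.
        self.vm_location[vm_id] = (vni, vtep_ip)

    def vm_removed(self, vm_id):
        self.vm_location.pop(vm_id, None)

    def desired_state(self):
        """Return {vtep_ip: {vni: set of remote VTEP IPs}}."""
        members = defaultdict(set)
        for vni, vtep in self.vm_location.values():
            members[vni].add(vtep)
        state = {}
        for vni, vteps in members.items():
            for local in vteps:
                state.setdefault(local, {})[vni] = vteps - {local}
        return state


def vteps_to_reprogram(old, new):
    """Return {vtep_ip: new config} for every VTEP whose state changed;
    an empty dict means all overlay state on that VTEP should be deleted."""
    return {
        vtep: new.get(vtep, {})
        for vtep in old.keys() | new.keys()
        if old.get(vtep) != new.get(vtep)
    }


ctl = OverlayController()
ctl.vm_attached("vm1", 5000, "10.0.0.1")
ctl.vm_attached("vm2", 5000, "10.0.0.2")
before = ctl.desired_state()
ctl.vm_attached("vm2", 5000, "10.0.0.3")  # vm2 migrates to another server
after = ctl.desired_state()
changes = vteps_to_reprogram(before, after)
```

After the migration, 10.0.0.1 must point at 10.0.0.3 instead of 10.0.0.2, 10.0.0.3 gains the VNI, and 10.0.0.2's state is deleted. Computing a full desired state and diffing it keeps the controller logic simple, at the cost of recomputing state on every change.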

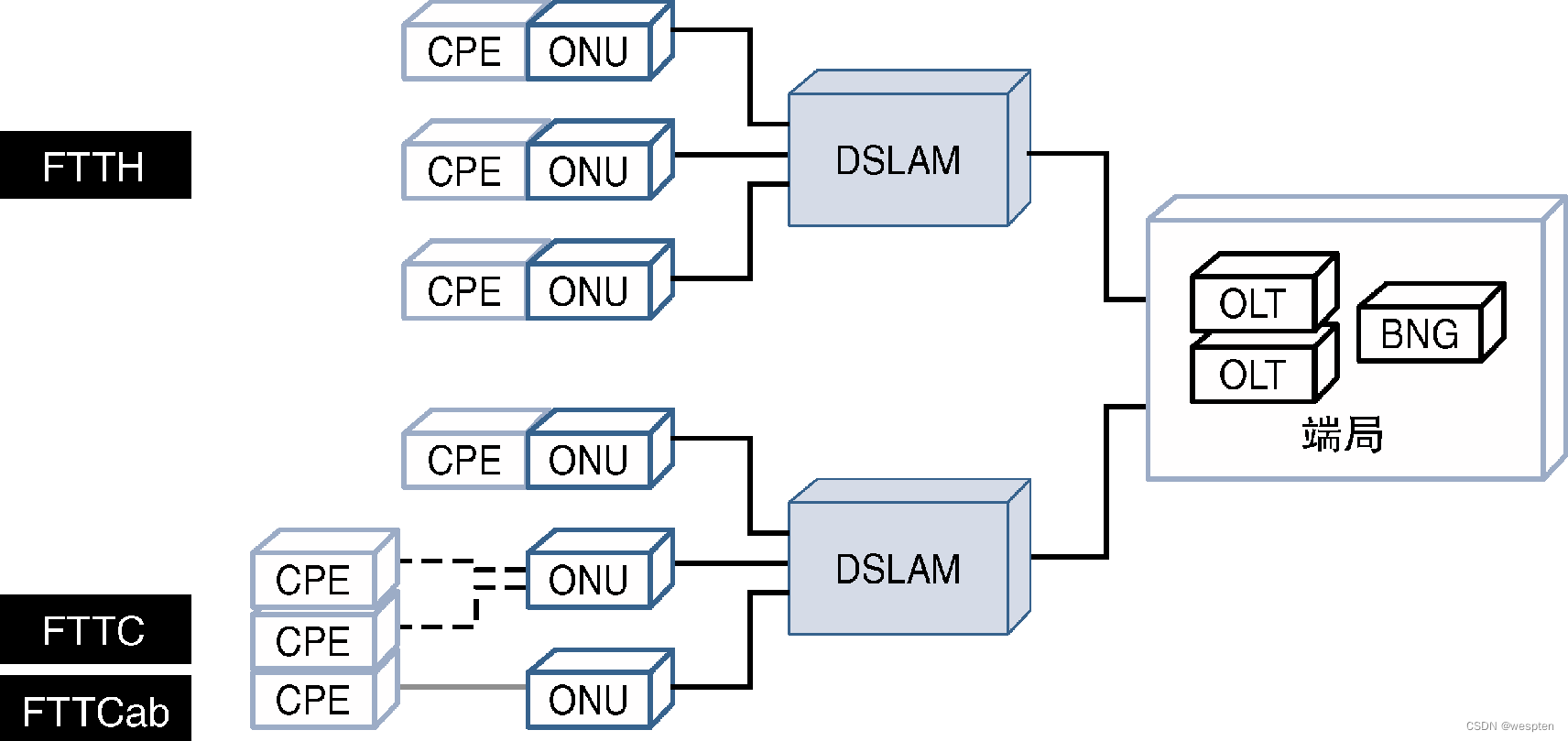

2. SDN in service provider network (SP SDN)

From a high-level perspective, the routing devices in an SP (Service Provider) network can be divided into PE (Provider Edge) routers and P (Provider) routers. PE routers connect directly to customer networks, so they have a large number of interfaces and perform various operations such as classification, QoS, access control, fault detection, and routing. These routers usually hold a large amount of customer routing information and ARP cache entries, and they form the border of the service provider network. PE routers usually aggregate traffic toward the P routers over high-bandwidth uplinks.

The functions of P routers are less complex and there are relatively few of them, but P routers must be interconnected by high-bandwidth links between geographically dispersed POPs (points of presence). In most SP networks, services such as voice, video, data, and Internet access share common core links and core routers, and these core links carry the aggregated traffic of a large number of the service provider's customers. Any interruption of a high-bandwidth link seriously affects many users, so to avoid failures and provide carrier-class availability, physical redundancy mechanisms are usually deployed for these core links and for the core routers they interconnect.

1. Issues and Challenges

Because SP networks contain a large number of redundant links, nodes, and paths, the shortest available path between two nodes is often not the path with the lowest cost per bit, and it may not have the capacity to carry all the traffic. A common practice for SPs is therefore to use traffic engineering to direct traffic onto specific paths based on factors such as importance, cost, delay, and network state, optimizing network cost and achieving better performance.

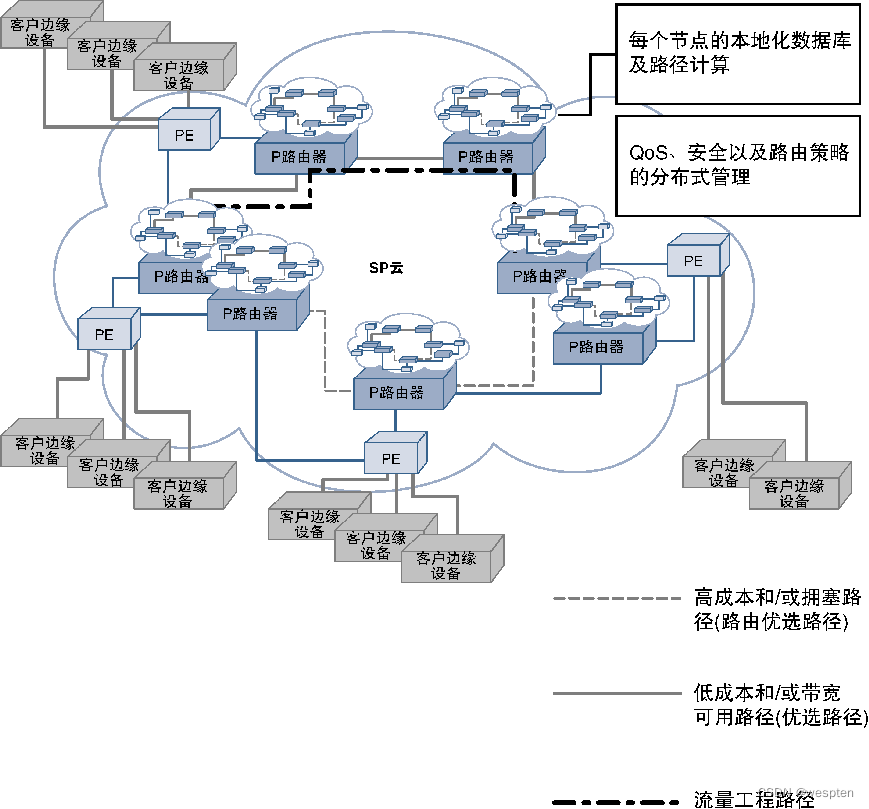

The figure below shows a generic view of an SP network and illustrates how traffic engineering tunnels can be used to direct traffic from the best path to the user's preferred path.

As mentioned above, the optimal routing path may not be the preferred traffic path (considering factors such as cost, delay, and bandwidth availability), so traffic engineering techniques are used to change the default behavior of the routing protocols to meet specific needs. MPLS-TE (MPLS Traffic Engineering) is a commonly used technology for this purpose, and SR-TE (Segment Routing Traffic Engineering) is also widely used.
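A typical way to compute such a path is constrained shortest path first (CSPF): remove every link that cannot satisfy the constraint, then run an ordinary shortest-path search on what remains. The Python sketch below applies only a bandwidth constraint; the `links` dictionary format is an assumption, and real CSPF implementations consider further constraints such as administrative groups and shared risk link groups.

```python
import heapq


def cspf(links, src, dst, bandwidth):
    """Constrained shortest path first: prune, then run Dijkstra.

    links: {(a, b): {"metric": int, "avail_bw": float}}, one entry per
    direction. Links with less available bandwidth than `bandwidth` are
    removed before the shortest-path run on the TE metric.
    Returns (path, cost), or None if no path satisfies the constraint.
    """
    graph = {}
    for (a, b), attrs in links.items():
        if attrs["avail_bw"] >= bandwidth:
            graph.setdefault(a, []).append((b, attrs["metric"]))

    dist = {src: 0}
    prev = {}
    heap = [(0, src)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        if node == dst:
            break
        for nbr, metric in graph.get(node, []):
            nd = d + metric
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))

    if dst not in dist:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1], dist[dst]


# A-B-D is shorter, but B-D has only 2 units of bandwidth available.
links = {
    ("A", "B"): {"metric": 1, "avail_bw": 10},
    ("B", "D"): {"metric": 1, "avail_bw": 2},
    ("A", "C"): {"metric": 2, "avail_bw": 10},
    ("C", "D"): {"metric": 2, "avail_bw": 10},
}
print(cspf(links, "A", "D", 1))   # (['A', 'B', 'D'], 2)
print(cspf(links, "A", "D", 5))   # (['A', 'C', 'D'], 4)
print(cspf(links, "A", "D", 20))  # None
```

The 5-unit request shows the point made above: the shortest path cannot carry the traffic, so the constrained path takes the longer route.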

With either of these technologies, individual nodes do not have an end-to-end view of information such as link bandwidth, link preferences, shared risk groups (for example, links that share the same transmission equipment), and switchover information for traffic engineering paths. Nodes must coordinate through dedicated protocols or protocol extensions to exchange this information and determine the complete traffic engineering path.

Each node performs path calculations and makes its own decisions, so the required data must be stored on every node. These operations consume a large amount of CPU and memory, and the distributed mechanism also requires end-to-end coordination, so together these overheads tie up a large share of device resources.

Another challenge for SP networks is limiting the impact of a potential failure on the network and its services. Although FRR (Fast Re-Route) and similar mechanisms can be deployed, once network capacity planning and QoS guarantee requirements are added, the overall network design becomes very complex and difficult to optimize.

2. SDN solutions

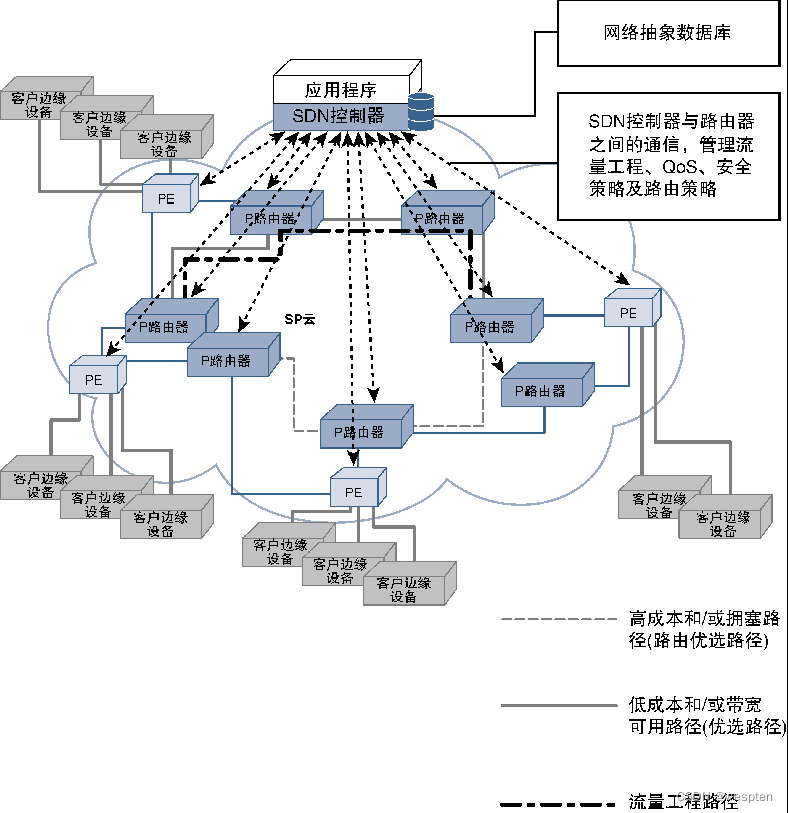

A centralized controller can effectively address the challenges of network-wide traffic engineering design and management: it has a network-wide link-state view, can track bandwidth allocation, and can take over the decision-making process.
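Tracking bandwidth allocation centrally means the controller keeps one ledger of reservations instead of every router holding its own copy. The Python sketch below is a hypothetical illustration of that bookkeeping: `BandwidthLedger`, the link IDs, and the flow names are assumptions, and admission is all-or-nothing across the links of a path.

```python
class BandwidthLedger:
    """Hypothetical controller-side record of reserved bandwidth per link."""

    def __init__(self, capacity):
        # capacity: {link_id: reservable bandwidth}, e.g. in Gbps
        self.capacity = dict(capacity)
        self.reserved = {link: 0 for link in self.capacity}
        self.flows = {}  # flow_id -> (list of link ids, bandwidth)

    def available(self, link):
        return self.capacity[link] - self.reserved[link]

    def admit(self, flow_id, path_links, bandwidth):
        """Reserve `bandwidth` on every link of the path, all or nothing."""
        if flow_id in self.flows:
            raise ValueError(f"flow {flow_id!r} already admitted")
        if any(self.available(link) < bandwidth for link in path_links):
            return False
        for link in path_links:
            self.reserved[link] += bandwidth
        self.flows[flow_id] = (list(path_links), bandwidth)
        return True

    def release(self, flow_id):
        """Return a flow's bandwidth to every link on its path."""
        path_links, bandwidth = self.flows.pop(flow_id)
        for link in path_links:
            self.reserved[link] -= bandwidth


ledger = BandwidthLedger({"A-B": 10, "B-D": 10, "A-C": 10, "C-D": 10})
print(ledger.admit("video-1", ["A-B", "B-D"], 6))  # True
print(ledger.admit("video-2", ["A-B", "B-D"], 6))  # False: only 4 left on A-B
print(ledger.admit("video-2", ["A-C", "C-D"], 6))  # True
```

Because the ledger is the single source of truth, rerouting a flow (for example ahead of a maintenance window) is a `release` followed by an `admit` on the new path.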

SDN is well suited to this scenario. In an SDN-based solution, routers do not need to make these decisions or keep the databases required for them, which greatly reduces their memory and CPU overhead. The centralized SDN controller can go well beyond basic decisions based on traffic and link utilization and interact with higher-level applications to implement policy-based traffic rerouting: for example, rerouting traffic in advance of a maintenance window, redirecting traffic at specific times of day or in response to specific network events, or dynamically changing the bandwidth allocation for specific traffic flows to meet temporary needs.

A centralized controller is also useful for managing feature-rich PE routers. When new customers are configured on the PE routers, they can be given a consistent QoS, security, scalability, and connectivity experience according to their SLA (Service Level Agreement) requirements. If a policy later changes (for example, specific data streams are given a higher preference), the configuration changes can be pushed easily and consistently to all edge routers in the SP network through the centralized SDN controller, as shown in the figure below.

Another major advantage of the SDN solution is improved security and high availability of the SP network. If the SP network comes under a high-volume DDoS (Distributed Denial-of-Service) attack (targeting the SP network itself or customers hosted on it), the centralized SDN controller can divert the attack traffic from its normal routing path to centralized or distributed traffic-scrubbing devices, effectively protecting the SP infrastructure.
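The controller's decision step can be sketched as threshold-based detection followed by a diversion rule per attacked prefix. The Python sketch below is hypothetical: the `Diversion` record, the sampled `(destination, bps)` input, the threshold, and the scrubber address are assumptions, and how the rules are actually installed on the routers is not shown. It uses IPv4 documentation addresses.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Diversion:
    """Hypothetical rule: send traffic for `prefix` to the scrubbing next hop."""
    prefix: ipaddress.IPv4Network
    next_hop: ipaddress.IPv4Address


def plan_diversions(samples, protected, threshold_bps, scrubber):
    """Aggregate sampled traffic per protected prefix and divert any prefix
    whose total rate exceeds threshold_bps.

    samples: iterable of (destination IP string, bits per second)
    protected: prefix strings, e.g. customer prefixes hosted by the SP
    """
    nets = [ipaddress.ip_network(p) for p in protected]
    totals = {net: 0 for net in nets}
    for dst, bps in samples:
        addr = ipaddress.ip_address(dst)
        for net in nets:
            if addr in net:
                totals[net] += bps
                break  # count each sample once (first matching prefix)
    hop = ipaddress.ip_address(scrubber)
    return {Diversion(net, hop) for net, total in totals.items() if total > threshold_bps}


samples = [
    ("198.51.100.10", 6e9),
    ("198.51.100.20", 5e9),
    ("203.0.113.5", 2e8),
]
rules = plan_diversions(
    samples, ["198.51.100.0/24", "203.0.113.0/24"], threshold_bps=10e9, scrubber="192.0.2.1"
)
for rule in rules:
    print(rule.prefix, "->", rule.next_hop)  # 198.51.100.0/24 -> 192.0.2.1
```

Only the prefix under attack is diverted; traffic to other customers keeps its normal path, which is what lets the controller protect the infrastructure without rerouting everything.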

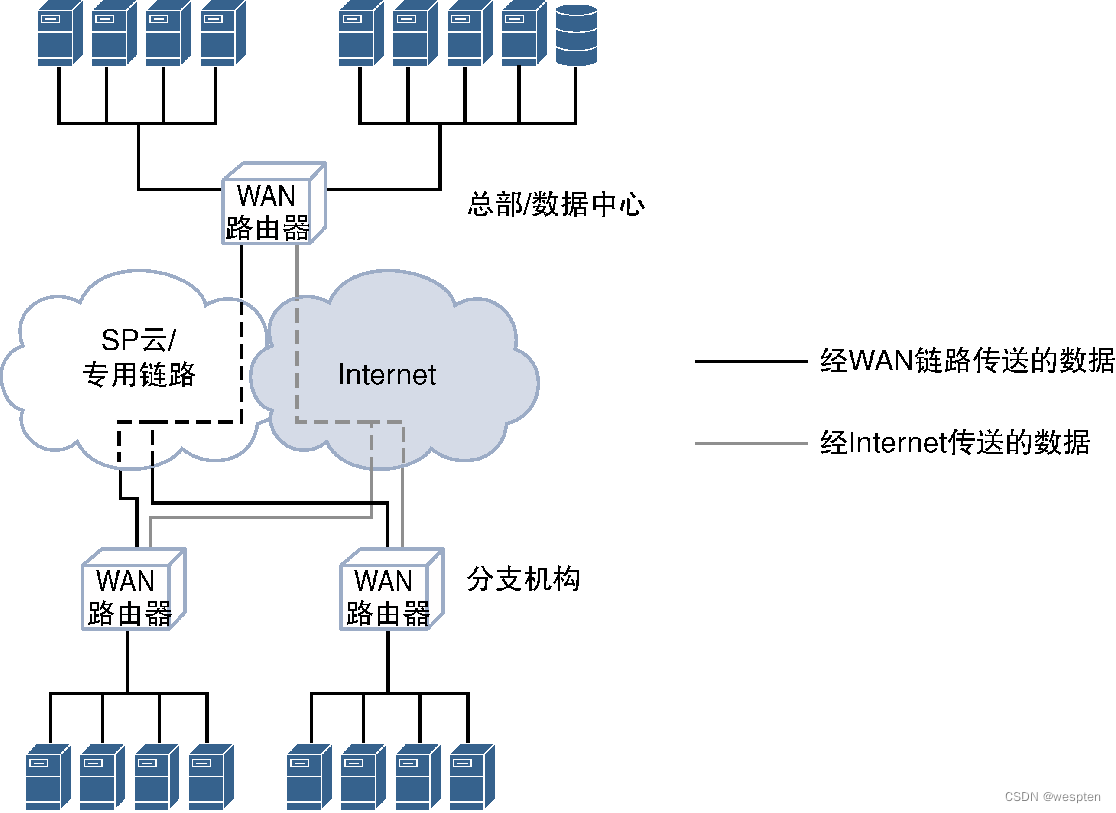

3. SDN in wide area network (SD WAN)

The networks of enterprises and their business customers are spread across different geographic locations, and many branches must connect to the headquarters network. These sites are connected to one another through dedicated WAN (Wide-Area Network) circuits (such as T1 or T3) or leased lines provided by VPN (Virtual Private Network) service providers. These links and services are very expensive and greatly increase the operating expenses of the enterprise network. To reduce costs, many enterprises use newer technologies such as DMVPN (Dynamic Multipoint VPN) or MPLS VPN to move these connections onto secure Internet links: once data encryption is added, a dynamically established overlay network can use shared Internet links to carry the enterprise's private traffic.