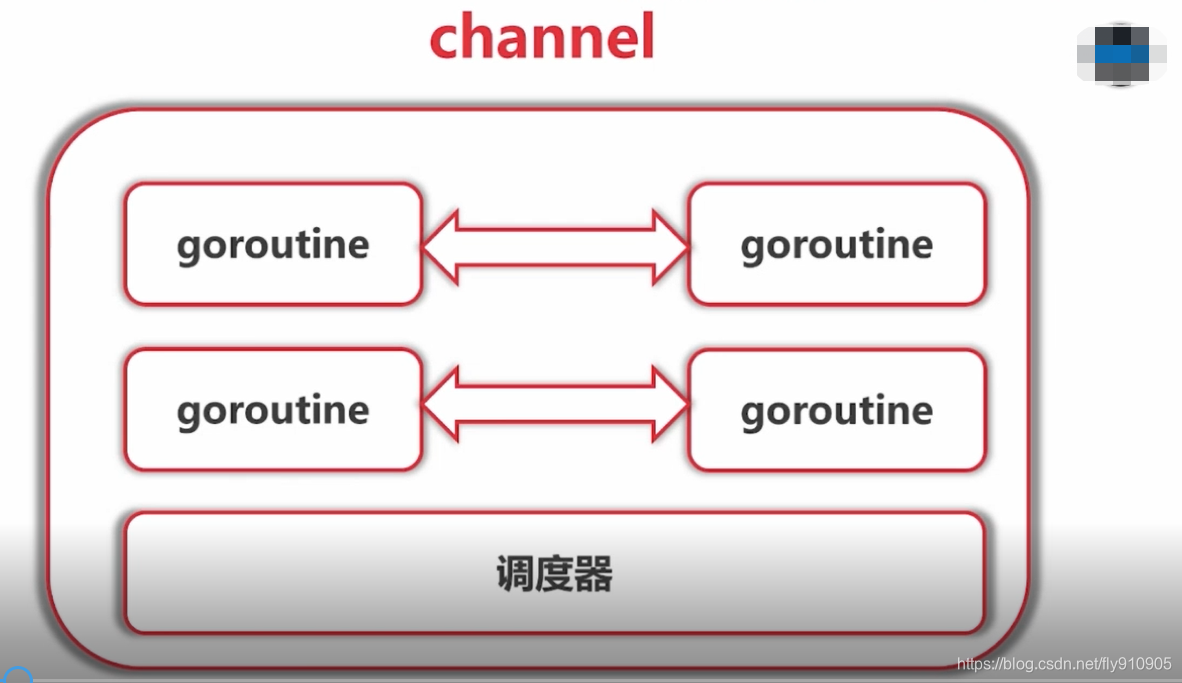

Channel

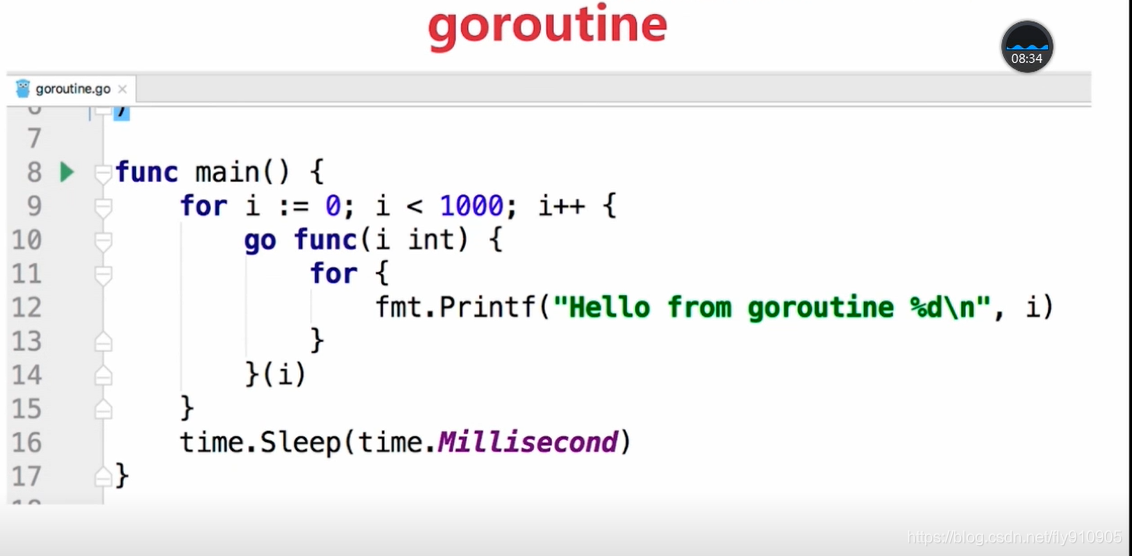

The function simply makes no sense to execute concurrently. Between function and function need to exchange data in order to reflect the significance of concurrent execution of the function .

Although the use of shared memory for data exchange, but the shared memory at different goroutine race condition occurs easily . To ensure the integrity of data exchange, you must use the mutex lock the memory is that this approach will inevitably cause performance problems.

Go concurrency model language is CSP (Communicating Sequential Processes), promoted by shared memory communication and communicate through shared memory instead.

If goroutine Go program is concurrent execution body, channel is the connection between them. channel is that it gives a specific value goroutine transmitted to another communication mechanism of goroutine.

Go language channel (channel) is a special type. A conveyor belt or like channel queue, always follow the FIFO (First In First Out) rules guarantee the order of the data transceiver. Each channel is a specific type of catheter, which is a statement channel when you need to specify the element type.

channel type

channel is a type of a reference type. Channel type declaration format:

var 变量 chan 元素类型

A few examples:

var ch1 chan int // 声明一个传递整型的通道

var ch2 chan bool // 声明一个传递布尔型的通道

var ch3 chan []int // 声明一个传递int切片的通道

Create a channel

Reference is null channel type, the channel type is nil.

var ch chan int

fmt.Println(ch) // <nil>

You need to make use of the function declaration in order to use the rear channels after initialization .

Create a channel format is as follows:

make(chan 元素类型, [缓冲大小])

- channel buffer size is optional.

A few examples:

ch4 := make(chan int)

ch5 := make(chan bool)

ch6 := make(chan []int)

channel operation

Channel has a transmission (send), receiving (the receive) and closing (Close) three operations.

Transmission and reception use <- symbol.

Now let's use the following statement to define a channel:

ch := make(chan int)

send

The value is sent to a channel.

ch <- 10 // 把10发送到ch中

receive

Receiving a value from the channel.

x := <- ch // 从ch中接收值并赋值给变量x

<-ch // 从ch中接收值,忽略结果

shut down

Let's close the channel by invoking the built-close function.

close(ch)

Thing about closing the channel needs to be noted that only a notification when the recipient goroutine all data have been sent only to the need to close the channel .

Channel can be recycled garbage collection mechanism , and it is not the same as closing the file, close the file after the end of the operation is to be done, but closing the channel is not required.

After closing the channel has the following characteristics:

1. a closed channel, and then sends the value will lead to panic .

2. a closed channel, receives will always get the value until the channel is empty .

3. a closed and there is no channel value, performs the reception operation will be a corresponding type of zero value .

4. Close a closed passage leads to panic .

Unbuffered channel

Unbuffered channel, also known as the blocked channels . We look at the following code:

func main() {

ch := make(chan int)

ch <- 10

fmt.Println("发送成功")

}

The code above can compile, but will be executed when the following error:

fatal error: all goroutines are asleep - deadlock!

goroutine 1 [chan send]:

main.main()

.../src/github.com/pprof/studygo/day06/channel02/main.go:8 +0x54

Why deadlock errors will happen?

Because we use ch: = make (chan int) is created unbuffered channel, unbuffered channel can only be sent when someone receives value in value. As you live in the district did not express counters and collection points, the courier to call you have to put this item to your hands, it simply is no buffer to receive the channel must be made in order to send .

The above code will be blocked in ch <- 10 this line of code to form a deadlock, then how to solve this problem?

A method goroutine is enabled to receive a value, for example:

func recv(c chan int) {

ret := <-c

fmt.Println("接收成功", ret)

}

func main() {

ch := make(chan int)

go recv(ch) // 启用goroutine从通道接收值

ch <- 10

fmt.Println("发送成功")

}

Will send operation on the buffer without obstruction, until another goroutine reception operation is performed on the channel, then the value to be sent successfully, two goroutine will continue. Conversely, if the receiving operation is performed first, the recipient goroutine blocks until a value other goroutine transmitted on that channel.

Unbuffered communication channel will result in transmission and reception goroutine synchronization. Accordingly, no buffer channel also called a Synchronization channel.

Channel buffer

Solution to the above problem is to use there there is a channel buffer.

We can specify the channel for their capacity in the use of make-channel initialization function, for example:

func main() {

ch := make(chan int, 1) // 创建一个容量为1的有缓冲区通道

ch <- 10

fmt.Println("发送成功")

}

As long as the capacity of the channel is greater than zero, then the channel is a channel buffer capacity represents the number of channels in the channel elements can be stored. As you only so cells express more than one cabinet plaid, plaid full to fit in it, it is blocked until someone removed a courier can fill it up a.

We can use the built-in len function to get the number of elements in the channel , using the cap function to get the capacity of the channel , although we rarely do so.

close()

Can be built close () function closes the channel ( the time if you are not inside the pipe stored value or values must remember to close the pipeline )

package main

import "fmt"

func main() {

c := make(chan int)

go func() {

for i := 0; i < 5; i++ {

c <- i

}

close(c)

}()

for {

if data, ok := <-c; ok {

fmt.Println(data)

} else {

break

}

}

fmt.Println("main结束")

}

//0

//1

//2

//3

//4

//main结束How elegant cycle values from channel

When transmitting data through the limited channel, we can close the passage by the close function to notify the channel from the received value goroutine stop waiting. When the channel is closed, the channel sends the value to cause panic, received from the channel is always zero value in the value type. How to determine whether a channel that was closed it?

Let's look at the following example:

package main

import "fmt"

// channel 练习

func main() {

ch1 := make(chan int)

ch2 := make(chan int)

// 开启goroutine将0~100的数发送到ch1中

go func() {

for i := 0; i < 100; i++ {

ch1 <- i

}

close(ch1)

}()

// 开启goroutine从ch1中接收值,并将该值的平方发送到ch2中

go func() {

for {

i, ok := <-ch1 // 通道关闭后再取值ok=false

if !ok {

break

}

ch2 <- i * i

}

close(ch2)

}()

// 在主goroutine中从ch2中接收值打印

for i := range ch2 { // 通道关闭后会退出for range循环

fmt.Println(i)

}

}

From the above example, we see two ways determines whether the channel is closed when the received value, we usually used are for range manner .

Unidirectional channel

Sometimes we will channel as an argument between multiple tasks function , many times we use channel will function in different tasks in its restrictions, such restrictions can only send or only receive channels in the function .

Go language unidirectional channel is provided to handle this situation. For example, we transform the above example as follows:

package main

import "fmt"

func counter(out chan<- int) {

for i := 0; i < 100; i++ {

out <- i

}

close(out)

}

func squarer(out chan<- int, in <-chan int) {

for i := range in {

out <- i * i

}

close(out)

}

func printer(in <-chan int) {

for i := range in {

fmt.Println(i)

}

}

func main() {

ch1 := make(chan int)

ch2 := make(chan int)

go counter(ch1)

go squarer(ch2, ch1)

printer(ch2)

}

among them,

1.chan <- int is only a transmission channel, may be transmitted but not received;

. 2 <- Chan int is only a reception channel can be received but not transmitted.

In the function and any parameter passing operation in the bidirectional channel assignment into a unidirectional channel it is possible, but the reverse is not possible.

Channel summary

channel common abnormalities summarized below:

Note: Closing the channel has been shut down can also cause panic.

Goroutine pond

- It is essentially a producer-consumer model

- It can effectively control the amount goroutine, preventing inflation

- demand:

- Calculating a respective number of bits and, for example number 123, the result is 1 + 2 + 3 = 6

- Generating a random number calculated

package main

import (

"fmt"

"math/rand"

)

type Job struct {

// id

Id int

// 需要计算的随机数

RandNum int

}

type Result struct {

// 这里必须传对象实例

job *Job

// 求和

sum int

}

func main() {

// 需要2个管道

// 1.job管道

jobChan := make(chan *Job, 128)

// 2.结果管道

resultChan := make(chan *Result, 128)

// 3.创建工作池

createPool(64, jobChan, resultChan)

// 4.开个打印的协程

go func(resultChan chan *Result) {

// 遍历结果管道打印

for result := range resultChan {

fmt.Printf("job id:%v randnum:%v result:%d\n", result.job.Id,

result.job.RandNum, result.sum)

}

}(resultChan)

var id int

// 循环创建job,输入到管道

for {

id++

// 生成随机数

r_num := rand.Int()

job := &Job{

Id: id,

RandNum: r_num,

}

jobChan <- job

}

}

// 创建工作池

// 参数1:开几个协程

func createPool(num int, jobChan chan *Job, resultChan chan *Result) {

// 根据开协程个数,去跑运行

for i := 0; i < num; i++ {

go func(jobChan chan *Job, resultChan chan *Result) {

// 执行运算

// 遍历job管道所有数据,进行相加

for job := range jobChan {

// 随机数接过来

r_num := job.RandNum

// 随机数每一位相加

// 定义返回值

var sum int

for r_num != 0 {

tmp := r_num % 10

sum += tmp

r_num /= 10

}

// 想要的结果是Result

r := &Result{

job: job,

sum: sum,

}

//运算结果扔到管道

resultChan <- r

}

}(jobChan, resultChan)

}

}Timer

Timer: time to perform only executed once

package main

import (

"fmt"

"time"

)

func main() {

// 1.timer基本使用

//timer1 := time.NewTimer(2 * time.Second)

//t1 := time.Now()

//fmt.Printf("t1:%v\n", t1)

//t2 := <-timer1.C

//fmt.Printf("t2:%v\n", t2)

// 2.验证timer只能响应1次

//timer2 := time.NewTimer(time.Second)

//for {

// <-timer2.C

// fmt.Println("时间到")

//}

// 3.timer实现延时的功能

//(1)

//time.Sleep(time.Second)

//(2)

//timer3 := time.NewTimer(2 * time.Second)

//<-timer3.C

//fmt.Println("2秒到")

//(3)

//<-time.After(2*time.Second)

//fmt.Println("2秒到")

// 4.停止定时器

//timer4 := time.NewTimer(2 * time.Second)

//go func() {

// <-timer4.C

// fmt.Println("定时器执行了")

//}()

//b := timer4.Stop()

//if b {

// fmt.Println("timer4已经关闭")

//}

// 5.重置定时器

timer5 := time.NewTimer(3 * time.Second)

timer5.Reset(1 * time.Second)

fmt.Println(time.Now())

fmt.Println(<-timer5.C)

for {

}

}

Ticker: time is up, performed multiple times

package main

import (

"fmt"

"time"

)

func main() {

// 1.获取ticker对象

ticker := time.NewTicker(1 * time.Second)

i := 0

// 子协程

go func() {

for {

//<-ticker.C

i++

fmt.Println(<-ticker.C)

if i == 5 {

//停止

ticker.Stop()

}

}

}()

for {

}

}select multiplexer

In some scenarios we need to receive data from a plurality of channels . Upon receiving the data channel, the data can be received if there is no blocking will occur. You might write the following code uses traversed ways to achieve:

for{

// 尝试从ch1接收值

data, ok := <-ch1

// 尝试从ch2接收值

data, ok := <-ch2

…

}

Although this method can achieve a plurality of channels received from the demand values, but much worse operating performance. To cope with this scenario, Go built select keyword, a plurality of channels can operate simultaneously in response.

The switch statement is similar to using a select, it has a series of case branches and a default branch. Each case will correspond to a communication channel (receiving or transmitting) process. select waits, until the communication operation when a case is completed, the case will be executed branch corresponding statement. The following format:

select {

case <-chan1:

// 如果chan1成功读到数据,则进行该case处理语句

case chan2 <- 1:

// 如果成功向chan2写入数据,则进行该case处理语句

default:

// 如果上面都没有成功,则进入default处理流程

}

select one or more simultaneously can listen channel, wherein a channel ready until

package main

import (

"fmt"

"time"

)

func test1(ch chan string) {

time.Sleep(time.Second * 5)

ch <- "test1"

}

func test2(ch chan string) {

time.Sleep(time.Second * 2)

ch <- "test2"

}

func main() {

// 2个管道

output1 := make(chan string)

output2 := make(chan string)

// 跑2个子协程,写数据

go test1(output1)

go test2(output2)

// 用select监控

select {

case s1 := <-output1:

fmt.Println("s1=", s1)

case s2 := <-output2:

fmt.Println("s2=", s2)

}

}

Meanwhile, if a plurality of channel ready, randomly selects an execution

package main

import (

"fmt"

)

func main() {

// 创建2个管道

int_chan := make(chan int, 1)

string_chan := make(chan string, 1)

go func() {

//time.Sleep(2 * time.Second)

int_chan <- 1

}()

go func() {

string_chan <- "hello"

}()

select {

case value := <-int_chan:

fmt.Println("int:", value)

case value := <-string_chan:

fmt.Println("string:", value)

}

fmt.Println("main结束")

}

Select may be used to judge whether the pipeline is full

package main

import (

"fmt"

"time"

)

// 判断管道有没有存满

func main() {

// 创建管道

output1 := make(chan string, 10)

// 子协程写数据

go write(output1)

// 取数据

for s := range output1 {

fmt.Println("res:", s)

time.Sleep(time.Second)

}

}

func write(ch chan string) {

for {

select {

// 写数据

case ch <- "hello":

fmt.Println("write hello")

default:

fmt.Println("channel full")

}

time.Sleep(time.Millisecond * 500)

}

}Concurrency and security lock

Sometimes the Go may be present in a plurality of simultaneously operating goroutine resources (critical), this race condition (data race) occurs . Examples of analogy in real life there is a crossroads of the car to compete in all directions; there is competition toilet on the train car were.

for example:

package main

import (

"fmt"

"sync"

)

var x int64

var wg sync.WaitGroup

func add() {

for i := 0; i < 5000; i++ {

x = x + 1

}

wg.Done()

}

func main() {

wg.Add(1)

go add()

go add()

wg.Wait()

fmt.Println(x)

}

// 6310 (想要的结果应该是10000)

The above code, we opened two goroutine to accumulate value of the variable x, these two goroutine competition will exist in data access and modify the x variable, leading to discrepancies with the final results expected.

Mutex

Mutex is a commonly used method for controlling access to shared resources, it is possible to ensure that only one goroutine can access the shared resources. Go language used in sync Mutex type of package to implement the mutex.

Mutex lock to fix the problem using the above code:

package main

import (

"fmt"

"sync"

)

var x int64

var wg sync.WaitGroup

var lock sync.Mutex

func add() {

for i := 0; i < 5000; i++ {

lock.Lock() // 加锁

x = x + 1

lock.Unlock() // 解锁

}

wg.Done()

}

func main() {

wg.Add(2)

go add()

go add()

wg.Wait()

fmt.Println(x)

}

// 10000

Use a mutex to ensure the same time and only one goroutine enter the critical section, other goroutine are waiting for a lock;

When the mutex released pending the goroutine before they can acquire the lock to enter the critical section, multiple goroutine while waiting for a lock, Wake strategy is random.

Write mutex

Mutex is entirely mutually exclusive, but there are many practical scenarios are reading and writing less, when we read a resource concurrently to resources that do not involve modification is not necessary when locked, use this scenario read-write lock be a better choice. Using the sync RWMutex write lock type package, in Go.

Read-write locks are divided into two types: read and write locks.

When goroutine get a read lock, other goroutine if it is to acquire a read lock will continue to get the lock, if it is to obtain a write lock will wait;

When goroutine get a write lock, the other goroutine either acquire a read lock or a write lock will wait.

Read-write lock Example:

package main

import (

"fmt"

"sync"

"time"

)

var (

x int64

wg sync.WaitGroup

lock sync.Mutex

rwlock sync.RWMutex

)

func write() {

// lock.Lock() // 加互斥锁

rwlock.Lock() // 加写锁

x = x + 1

time.Sleep(10 * time.Millisecond) // 假设读操作耗时10毫秒

rwlock.Unlock() // 解写锁

// lock.Unlock() // 解互斥锁

wg.Done()

}

func read() {

// lock.Lock() // 加互斥锁

rwlock.RLock() // 加读锁

time.Sleep(time.Millisecond) // 假设读操作耗时1毫秒

rwlock.RUnlock() // 解读锁

// lock.Unlock() // 解互斥锁

wg.Done()

}

func main() {

start := time.Now()

for i := 0; i < 10; i++ {

wg.Add(1)

go write()

}

for i := 0; i < 1000; i++ {

wg.Add(1)

go read()

}

wg.Wait()

end := time.Now()

fmt.Println(end.Sub(start))

}

Note that the read-write lock is ideal for reading and writing little scenes, little difference if the operation read and write, read-write locks advantage play out.

Sync

sync.WaitGroup

Synchronization blunt use time.Sleep in code is certainly not appropriate, Go sync.WaitGroup language can be used to implement concurrent tasks . sync.WaitGroup has the following methods:

| Method name | Features |

|---|---|

| (wg * WaitGroup) Add(delta int) | Counter + delta |

| (wg *WaitGroup) Done() | -1 counters |

| (wg *WaitGroup) Wait() | Blocks until the counter becomes 0 |

Internal sync.WaitGroup maintains a counter, the counter value can increase and decrease. For example, when we launched the N concurrent tasks, it will increment the counter N. The counter is decremented by calling the Done () method of the completion of each task. To wait for concurrent tasks by calling the Wait () executed, when the counter value is 0, indicating that all concurrent tasks have been completed.

We use sync.WaitGroup the above code to optimize it:

package main

import (

"fmt"

"sync"

)

var wg sync.WaitGroup

func hello() {

defer wg.Done()

fmt.Println("Hello Goroutine!")

}

func main() {

wg.Add(1)

go hello() // 启动另外一个goroutine去执行hello函数

fmt.Println("main goroutine done!")

wg.Wait()

}

//main goroutine done!

//Hello Goroutine!

Note sync.WaitGroup is a structure, when passing a pointer to pass.

sync.Once

He said in front of the words: This is an advanced knowledge points.

In the programming of many scenarios we need to make sure certain operations in a highly concurrent scenarios is performed only once, for example configuration file is loaded only once, only one channel and so close .

Go language in sync package provides a solution for -sync.Once executed only once scene.

Do sync.Once only a method signature as follows:

func (o *Once) Do(f func()) {}

Note: If you need to perform the function f you will need to pass parameters to use with closures.

Load Profile example

A delay spending a lot of time to actually use the initialization operation to re-execute it is a good practice. Because pre-initialize a variable (such as done in the init function to initialize) will increase the startup time-consuming process, and it is possible that the actual implementation process variable no access, then the initialization is not to be done. Let's look at an example:

var icons map[string]image.Image

func loadIcons() {

icons = map[string]image.Image{

"left": loadIcon("left.png"),

"up": loadIcon("up.png"),

"right": loadIcon("right.png"),

"down": loadIcon("down.png"),

}

}

// Icon 被多个goroutine调用时不是并发安全的

func Icon(name string) image.Image {

if icons == nil {

loadIcons()

}

return icons[name]

}

Goroutine multiple concurrent calls Icon function is not concurrency-safe, modern compiler and CPU may be in order to ensure that each goroutine are met on a consistent basis serial freely rearranged access memory. loadIcons function may be rearranged to the following results:

func loadIcons() {

icons = make(map[string]image.Image)

icons["left"] = loadIcon("left.png")

icons["up"] = loadIcon("up.png")

icons["right"] = loadIcon("right.png")

icons["down"] = loadIcon("down.png")

}

In this case the icons will appear even if the judgment is not nil does not mean variable initialization is complete. Given this situation, we could think of is to add mutex to ensure that the time will not be initialized icons goroutine other operations, but doing so will lead to performance problems.

Sample code sync.Once modified as follows:

var icons map[string]image.Image

var loadIconsOnce sync.Once

func loadIcons() {

icons = map[string]image.Image{

"left": loadIcon("left.png"),

"up": loadIcon("up.png"),

"right": loadIcon("right.png"),

"down": loadIcon("down.png"),

}

}

// Icon 是并发安全的

func Icon(name string) image.Image {

loadIconsOnce.Do(loadIcons)

return icons[name]

}

sync.Once actually inside a mutex and a Boolean value, mutex to ensure data security and Boolean values, and Boolean value that records initialization is complete. In this way we can guarantee the design is complicated by the initialization operation when initialization operation safe and will not be executed multiple times.

sync.Map

Go languages built-in map is not concurrent safe. Consider the following example:

var m = make(map[string]int)

func get(key string) int {

return m[key]

}

func set(key string, value int) {

m[key] = value

}

func main() {

wg := sync.WaitGroup{}

for i := 0; i < 20; i++ {

wg.Add(1)

go func(n int) {

key := strconv.Itoa(n)

set(key, n)

fmt.Printf("k=:%v,v:=%v\n", key, get(key))

wg.Done()

}(i)

}

wg.Wait()

}

The code above to open a small amount of time may be a few goroutine no problem, after more than a concurrent implementation of the code above will be reported fatal error: concurrent map writes error.

Like this scenario it is necessary to map lock to ensure the safety of the concurrent, sync Go language package provides a safe-box concurrent version map-sync.Map. Out of the box represents do not like the built-in map function to initialize make use of the same can be used directly. Meanwhile sync.Map operating method such as a built-Store, Load, LoadOrStore, Delete, Range like.

var m = sync.Map{}

func main() {

wg := sync.WaitGroup{}

for i := 0; i < 20; i++ {

wg.Add(1)

go func(n int) {

key := strconv.Itoa(n)

m.Store(key, n)

value, _ := m.Load(key)

fmt.Printf("k=:%v,v:=%v\n", key, value)

wg.Done()

}(i)

}

wg.Wait()

}Atomic operation (Atomic packet)

Atomic operation

Because the lock operation code relates to kernel mode context switches would be more time-consuming, the cost is relatively high. For basic data types we can use atomic operations to ensure concurrency-safe, since the atomic operation is provided a method of Go it can be done in user mode, thus better performance than the locking operation. Go language by a built-in atomic operation provides standard library sync / atomic.

atomic package

| method | Explanation |

|---|---|

| func LoadInt32(addr int32) (val int32) func LoadInt64(addr `int64 ) (val int64)<br>func LoadUint32(addruint32) (val uint32)<br>func LoadUint64(addruint64) (val uint64)<br>func LoadUintptr(addruintptr) (val uintptr)<br>func LoadPointer(addrunsafe.Pointer`) (val unsafe.Pointer) |

Read operation |

func StoreInt32(addr *int32, val int32)func StoreInt64(addr *int64, val int64)func StoreUint32(addr *uint32, val uint32)func StoreUint64(addr *uint64, val uint64)func StoreUintptr(addr *uintptr, val uintptr)func StorePointer(addr *unsafe.Pointer, val unsafe.Pointer) |

Write |

func AddInt32(addr *int32, delta int32) (new int32)func AddInt64(addr *int64, delta int64) (new int64)func AddUint32(addr *uint32, delta uint32) (new uint32)func AddUint64(addr *uint64, delta uint64) (new uint64)func AddUintptr(addr *uintptr, delta uintptr) (new uintptr) |

Modification operations |

func SwapInt32(addr *int32, new int32) (old int32)func SwapInt64(addr *int64, new int64) (old int64)func SwapUint32(addr *uint32, new uint32) (old uint32)func SwapUint64(addr *uint64, new uint64) (old uint64)func SwapUintptr(addr *uintptr, new uintptr) (old uintptr)func SwapPointer(addr *unsafe.Pointer, new unsafe.Pointer) (old unsafe.Pointer) |

Swap operation |

func CompareAndSwapInt32(addr *int32, old, new int32) (swapped bool)func CompareAndSwapInt64(addr *int64, old, new int64) (swapped bool)func CompareAndSwapUint32(addr *uint32, old, new uint32) (swapped bool)func CompareAndSwapUint64(addr *uint64, old, new uint64) (swapped bool)func CompareAndSwapUintptr(addr *uintptr, old, new uintptr) (swapped bool)func CompareAndSwapPointer(addr *unsafe.Pointer, old, new unsafe.Pointer) (swapped bool) |

Compare and swap operation |

atomic examples

We fill a sample and to compare the performance of the mutex atomic operation.

var x int64

var l sync.Mutex

var wg sync.WaitGroup

// 普通版加函数

func add() {

// x = x + 1

x++ // 等价于上面的操作

wg.Done()

}

// 互斥锁版加函数

func mutexAdd() {

l.Lock()

x++

l.Unlock()

wg.Done()

}

// 原子操作版加函数

func atomicAdd() {

atomic.AddInt64(&x, 1)

wg.Done()

}

func main() {

start := time.Now()

for i := 0; i < 10000; i++ {

wg.Add(1)

// go add() // 普通版add函数 不是并发安全的

// go mutexAdd() // 加锁版add函数 是并发安全的,但是加锁性能开销大

go atomicAdd() // 原子操作版add函数 是并发安全,性能优于加锁版

}

wg.Wait()

end := time.Now()

fmt.Println(x)

fmt.Println(end.Sub(start))

}

atomic atomic level package provides the underlying memory operations for the synchronization algorithm to achieve useful. These functions must be careful to ensure proper use. Except in special underlying application, the use of channels or packet sync function / better synchronization type.

Reference Links: http://www.topgoer.com/%E5%B9%B6%E5%8F%91%E7%BC%96%E7%A8%8B/channel.html