Cross-host communication between Docker containers: the overlay network type is recommended.

What is an overlay network

The overlay network driver creates a distributed network among multiple Docker daemon hosts. This network sits on top of the host-specific networks and lets the containers connected to it (including swarm service containers) communicate securely. Docker transparently routes each packet to and from the correct Docker daemon host and the correct destination container.

When you initialize a swarm or join a Docker host to an existing swarm, two new networks are created on that Docker host:

- An overlay network called ingress, which handles the control and data traffic related to swarm services. When you create a swarm service and do not connect it to a user-defined overlay network, it connects to the ingress network by default.

- A bridge network called docker_gwbridge, which connects the individual Docker daemon to the other daemons participating in the swarm.

You can create user-defined overlay networks with docker network create. A service or container can be connected to more than one network at a time, and only containers on the same network can communicate with each other. Both swarm services and standalone containers can be connected to an overlay network.

Environment:

China East 2 (Shanghai): two servers, 11.11.11.11 and 11.11.11.22

China East 1 (Hangzhou): one server, 11.11.11.33

Before trying to build cross-host Docker communication on Alibaba Cloud, I had already used swarm + overlay on a cluster of physical machines and solved cross-host access successfully. I assumed that doing the same directly on Alibaba Cloud would take some time but would not be a problem. It turned out otherwise: in the end I could not get cross-host Docker communication working on the Alibaba Cloud servers.

I then went through all kinds of material and tried consul + overlay, but the containers still could not communicate.

Finally found the answer:

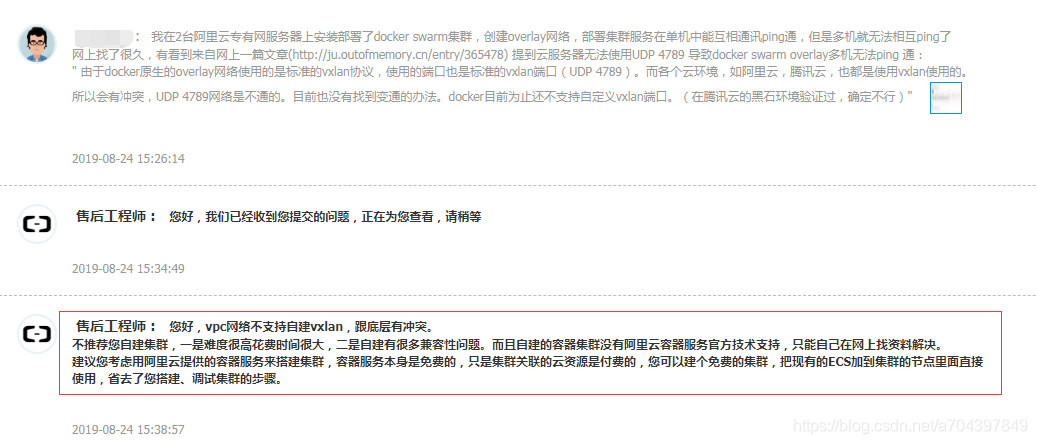

Alibaba Cloud sales engineer: Hello, the VPC network does not support self-built VXLAN; it conflicts with the underlying layer.

We do not recommend building your own cluster. First, it is difficult and takes a lot of time; second, self-built clusters have many compatibility problems. Alibaba Cloud also provides no official technical support for self-built container service clusters, so you would have to find solutions online yourself.

We recommend that you consider the container service provided by Alibaba Cloud for building clusters. The container service itself is free; only the cloud resources associated with the cluster are billed. You can create a cluster at no charge and add your existing ECS instances to it directly as nodes, which saves you the work of building and debugging the cluster.

Screenshot:

The Alibaba Cloud engineer's answer is clear: the VPC network does not support self-built VXLAN, and docker overlay uses VXLAN!

Other major cloud providers probably do not support it either. Some people report that it does not work on Tencent Cloud servers (I did not verify this!).

For a docker swarm cluster, you can either use the container service provided by Alibaba Cloud (it offers both k8s and docker swarm clusters) or build your own machine room!

Whether self-built k8s works is unclear for now.

At this point I thought a self-built setup was hopeless.

However, I happened to come across Alibaba Cloud's explanation of its internal network:

If two ECS instances in the same region need to transfer data, an internal network connection is recommended. ECS instances can also connect to cloud databases, load balancers, and object storage over the internal network.

On the ECS internal network, non-I/O-optimized instances share gigabit bandwidth, and I/O-optimized instances share 10-gigabit or 25-gigabit bandwidth. Because the network is shared, the bandwidth is not guaranteed to be constant.

For ECS instances on the internal network:

- Internal network communication between ECS instances depends on their network type, account, region, and security group. The details are shown in the following table.

| Network type | Account | Region | Security group | How to achieve internal network interoperability |
| --- | --- | --- | --- | --- |
| VPC (the same VPC) | The same account or different accounts | The same region | The same security group | Interconnected by default; network isolation within a security group is also possible. See Network isolation within a security group. |
| VPC (the same VPC) | The same account or different accounts | The same region | Different security groups | Authorize the security groups to achieve internal network interoperability. See Security group use cases. |
| VPC (different VPCs) | The same account or different accounts | The same region | Different security groups | Interconnect through Express Connect. See Usage scenarios. |
| VPC (different VPCs) | The same account or different accounts | Different regions | Different security groups | Interconnect through Express Connect. See Usage scenarios. |
| Classic network | The same account | The same region | The same security group | Interconnected by default. |
| Classic network | Different accounts | The same region | The same security group or different security groups | Authorize the security groups to achieve internal network interoperability. See Security group use cases. |

- The private IP address of a VPC instance can be modified. For details, see Modify a private IP address. The private IP address of a classic-network ECS instance cannot be modified or replaced.

- You can use the ClassicLink feature of VPC to let classic-network ECS instances access cloud resources inside a VPC over the private network. For details, see ClassicLink.

The explanation above is very clear: within the same VPC, the same account, the same region, and the same security group, the internal network is interconnected by default.

In other words, at least two of my servers (the ones in China East 2) are already interconnected over the internal network. So I tried consul + overlay on those two servers to test whether their containers could ping each other. Fortunately, the ping worked. The server in China East 1, however, certainly cannot take part. So I bought another server in China East 2 and stopped renewing the one in China East 1.

Next, a detailed walkthrough of how to build the consul + overlay environment.

First, build the consul cluster environment

1, pull the consul image and run the container

[root@master /]# docker pull consul

Using default tag: latest

latest: Pulling from library/consul

Digest: sha256:a167e7222c84687c3e7f392f13b23d9f391cac80b6b839052e58617dab714805

Status: Image is up to date for consul:latest

docker.io/library/consul:latest

[root@master /]# docker run -dit \

--network host \

--privileged=true \

--restart=always \

--hostname=master_consul \

--name=master-consul \

-v /etc/localtime:/etc/localtime \

-e CONSUL_BIND_INTERFACE=eth0 \

-e TZ='Asia/Shanghai' \

-e LANG="en_US.UTF-8" \

-e 'CONSUL_LOCAL_CONFIG={"skip_leave_on_interrupt": true}' \

consul:latest \

agent -server \

-bind=172.18.18.11 \

-advertise=11.11.11.11 \

-bootstrap-expect=3 -ui -node=master-consul \

-data-dir=/tmp/consul -client 0.0.0.0

Description:

-e CONSUL_BIND_INTERFACE=eth0: use the eth0 network card.

-bind=172.18.18.11: the internal IP address this server binds to, i.e. the address of the eth0 network card.

-advertise=11.11.11.11: the address advertised to the other nodes; the public IP is used here.

2, enter the container and join the cluster

a. Enter the container

docker exec -it master-consul sh

b. Join the consul cluster

consul join 11.11.11.11

3, repeat the same steps on the remaining two servers.

Note: 11.11.11.22 and 11.11.11.33 both run consul join 11.11.11.11.
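The -bootstrap-expect=3 flag above makes the servers wait until three of them have joined before electing a leader. Consul servers use Raft consensus, where a majority of servers must agree, so the arithmetic below may help when deciding how many servers to run. It is a minimal sketch; the function names raft_quorum and raft_failures_tolerated are made up for illustration and are not part of consul.

```shell
#!/bin/sh
# Majority (quorum) size for a Raft cluster of N servers: floor(N/2) + 1.
raft_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of servers that can fail while a quorum is still reachable.
raft_failures_tolerated() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 1 3 5; do
    echo "servers=$n quorum=$(raft_quorum "$n") tolerated=$(raft_failures_tolerated "$n")"
done
```

With the three servers used in this article, the cluster keeps working if one server is lost, but not if two are.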

Second, create the overlay network

# Create the overlay network used by the cluster services (create it on the master node only; the other nodes pick it up automatically)

docker network create --driver overlay --subnet=20.0.0.0/24 --gateway=20.0.0.254 --attachable cluster-overlay-sub

Third, use nginx to load-balance consul

1, run the nginx container

docker run -dit \

--net cluster-overlay-sub \

--ip 20.0.0.247 \

--privileged=true \

--restart=always \

--name=keda-master-nginx \

--hostname=master_nginx \

-p 80:80 \

-p 443:443 \

-p 18510:8510 \

-v /usr/docker/software/nginx/html/:/usr/share/nginx/html/ \

-v /usr/docker/software/nginx/conf/:/etc/nginx/ \

-v /usr/docker/software/nginx/home/:/home/ \

-v /usr/docker/software/nginx/data/:/data/ \

-v /usr/docker/software/nginx/logs/:/var/log/nginx/ \

-v /etc/localtime:/etc/localtime \

-e TZ='Asia/Shanghai' \

-e LANG="en_US.UTF-8" \

nginx:latest

2, configure the nginx upstream

# consul cluster load balancing

upstream consul {

server 11.11.11.11:8500;

server 11.11.11.22:8500;

server 11.11.11.33:8500;

}

# Listen on local port 8510 and forward to the consul upstream

server {

listen 8510;

location / {

include /etc/nginx/conf.d/proxy.conf;

proxy_pass http://consul;

}

}

Fourth, modify the docker.service configuration

[root@master /]# vim /lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

BindsTo=containerd.service

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=docker.socket

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

#ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:12375 -H unix://var/run/docker.sock -H fd:// --containerd=/run/containerd/containerd.sock

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --cluster-store=consul://11.11.11.11:18510 --cluster-advertise=172.18.18.11:12375 -H tcp://0.0.0.0:12375 -H unix:///var/run/docker.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.

# Both the old, and new location are accepted by systemd 229 and up, so using the old location

# to make them work for either version of systemd.

StartLimitBurst=3

# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.

# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make

# this option work for either version of systemd.

StartLimitInterval=60s

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not support it.

# Only systemd 226 and above support this option.

TasksMax=infinity

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

[Install]

WantedBy=multi-user.target

The key line:

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --cluster-store=consul://11.11.11.11:18510 --cluster-advertise=172.18.18.11:12375 -H tcp://0.0.0.0:12375 -H unix:///var/run/docker.sock

--cluster-store=consul://11.11.11.11:18510

This is the nginx address; nginx load-balances requests across the consul cluster.

--cluster-advertise=172.18.18.11:12375

This is the host's internal IP plus the Docker daemon's communication port, 12375 (the default is 2375; change it to another port, because the default is a likely target for attacks).

Repeat the same steps on the remaining two servers, using each server's own internal IP in --cluster-advertise.
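Editing /lib/systemd/system/docker.service directly works, but a package upgrade can overwrite the file. One possible alternative is a systemd drop-in that overrides only ExecStart. The sketch below assumes the same dockerd command line as above; the function name write_docker_dropin and the file name cluster-store.conf are made up for illustration. The --cluster-store and --cluster-advertise flags belong to older Docker releases (they were deprecated in Docker 20.10), so this only applies to daemons that still accept them.

```shell
#!/bin/sh
# Sketch: write a systemd drop-in that overrides dockerd's ExecStart.
# write_docker_dropin and cluster-store.conf are hypothetical names.
# Usage: write_docker_dropin <dropin-dir> <cluster-store-url> <advertise-addr>
write_docker_dropin() {
    dropin_dir=$1
    store_url=$2
    advertise_addr=$3

    mkdir -p "$dropin_dir" || return 1
    # The empty ExecStart= clears the packaged command line first; systemd
    # allows more than one ExecStart only for Type=oneshot services.
    cat > "$dropin_dir/cluster-store.conf" <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --cluster-store=$store_url --cluster-advertise=$advertise_addr -H tcp://0.0.0.0:12375 -H unix:///var/run/docker.sock
EOF
}

# On a real host (as root) the call would look something like:
#   write_docker_dropin /etc/systemd/system/docker.service.d \
#       consul://11.11.11.11:18510 172.18.18.11:12375
```

Because the advertise address is a parameter, the same function can be reused on each server with that server's own internal IP.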

Fifth, restart docker

sudo systemctl daemon-reload && sudo systemctl restart docker

Sixth, on each of the three servers, create a container attached to the overlay network created earlier ("cluster-overlay-sub")

1. Create a container

master:

docker run -dit \

--net cluster-overlay-sub \

--ip 20.0.0.3 \

--privileged=true \

--restart=always \

--name=springCloud-master-eureka \

--hostname=master_eureka \

-v /usr/docker/springCloud/eureka-dockerfile/eureka/:/var/local/eureka/ \

-v /data/springCloud/eureka/:/data/springCloud/ \

-v /etc/localtime:/etc/localtime \

-e TZ='Asia/Shanghai' \

-e LANG="en_US.UTF-8" \

-p 20000:9800 \

seowen/eureka:latest

slave1:

docker run -dit \

--net cluster-overlay-sub \

--ip 20.0.0.4 \

--privileged=true \

--restart=always \

--name=springCloud-slave1-eureka \

--hostname=slave1_eureka \

-v /usr/docker/springCloud/eureka-dockerfile/eureka/:/var/local/eureka/ \

-v /data/springCloud/eureka/:/data/springCloud/ \

-v /etc/localtime:/etc/localtime \

-e TZ='Asia/Shanghai' \

-e LANG="en_US.UTF-8" \

-p 20000:9800 \

seowen/eureka:latest

slave2:

docker run -dit \

--net cluster-overlay-sub \

--ip 20.0.0.5 \

--privileged=true \

--restart=always \

--name=springCloud-slave2-eureka \

--hostname=slave2_eureka \

-v /usr/docker/springCloud/eureka-dockerfile/eureka/:/var/local/eureka/ \

-v /data/springCloud/eureka/:/data/springCloud/ \

-v /etc/localtime:/etc/localtime \

-e TZ='Asia/Shanghai' \

-e LANG="en_US.UTF-8" \

-p 20000:9800 \

seowen/eureka:latest

2, enter a container and ping another one

[root@master opt]# clear

[root@master opt]# docker exec -it e03b31f2c8b6 bash

[root@master_eureka eureka]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 20.0.0.2 netmask 255.255.255.0 broadcast 20.0.0.255

ether 02:42:14:00:00:02 txqueuelen 0 (Ethernet)

RX packets 1110 bytes 81148 (79.2 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.18.0.2 netmask 255.255.0.0 broadcast 172.18.255.255

ether 02:42:ac:12:00:02 txqueuelen 0 (Ethernet)

RX packets 3181102 bytes 810628239 (773.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2869065 bytes 791404615 (754.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 31 bytes 2392 (2.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 31 bytes 2392 (2.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@master_eureka eureka]# ping 20.0.0.3

PING 20.0.0.3 (20.0.0.3) 56(84) bytes of data.

64 bytes from 20.0.0.3: icmp_seq=1 ttl=64 time=1003 ms

64 bytes from 20.0.0.3: icmp_seq=2 ttl=64 time=3.22 ms

64 bytes from 20.0.0.3: icmp_seq=3 ttl=64 time=1.79 ms

64 bytes from 20.0.0.3: icmp_seq=4 ttl=64 time=1.79 ms

64 bytes from 20.0.0.3: icmp_seq=5 ttl=64 time=1.77 ms

64 bytes from 20.0.0.3: icmp_seq=6 ttl=64 time=1.77 ms
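In the ifconfig output above, the overlay interface (eth0) reports mtu 1450 while the bridge interface (eth1) reports mtu 1500. The 50-byte difference matches the VXLAN encapsulation overhead. The arithmetic can be sketched as follows, assuming an IPv4 underlay with no extra options or encryption; the function name vxlan_overlay_mtu is made up for illustration.

```shell
#!/bin/sh
# VXLAN wraps each inner Ethernet frame in outer IPv4 + UDP + VXLAN headers.
# Overhead counted against the underlay MTU (IPv4 underlay assumed):
#   inner Ethernet header 14 + VXLAN header 8 + UDP header 8 + outer IPv4 header 20
vxlan_overlay_mtu() {
    underlay_mtu=$1
    overhead=$((14 + 8 + 8 + 20))
    echo $((underlay_mtu - overhead))
}

vxlan_overlay_mtu 1500   # prints 1450
```

If the underlay network has an MTU below 1500, the overlay MTU would need to shrink by the same 50 bytes.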

3, view the network

[root@slave2 ~]# docker network inspect cluster-overlay-sub

[

{

"Name": "cluster-overlay-sub",

"Id": "26db25278114e2cxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxca1f2eddba1cf38add952b0",

"Created": "2019-12-25T18:29:51.325915414+08:00",

"Scope": "global",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "20.0.0.0/24",

"Gateway": "20.0.0.254"

}

]

},

"Internal": false,

"Attachable": true,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"48f2da4eaa2c693939d6xxxxxxxxxxxxxxxxxxxxxxxxxxxx7c58669a4b464ad493f73": {

"Name": "springCloud-slave2-eureka",

"EndpointID": "2d2c68a17e0b27915e52xxxxxxxxxxxxxxxxxxxxxxxxxxx467f0eb1f7bda9",

"MacAddress": "02:42:14:00:00:04",

"IPv4Address": "20.0.0.4/24",

"IPv6Address": ""

},

"ep-2b407f01d38b6cb1dc247xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxa068040be6ded8": {

"Name": "keda-master-nginx",

"EndpointID": "2b407f01d38b6cxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx68040be6ded8",

"MacAddress": "02:42:14:00:00:f7",

"IPv4Address": "20.0.0.247/24",

"IPv6Address": ""

},

"ep-732b41808e90ce0xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxe149c0eec41": {

"Name": "springCloud-slave1-eureka",

"EndpointID": "732b41808e90ce0xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx149c0eec41",

"MacAddress": "02:42:14:00:00:03",

"IPv4Address": "20.0.0.3/24",

"IPv6Address": ""

},

"ep-7609e871f68fe5bc6xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx5e0573fc30d73f": {

"Name": "springCloud-master-eureka",

"EndpointID": "7609e871f68fe5bxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx573fc30d73f",

"MacAddress": "02:42:14:00:00:02",

"IPv4Address": "20.0.0.2/24",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@slave2 ~]#
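The inspect output above is long; when only the attached containers and their overlay addresses matter, it can be reduced to one line per container. The filter below is a rough sketch that assumes the pretty-printed layout shown above (one key per line, with "Name" appearing before "IPv4Address" in each entry); the function name list_overlay_endpoints is made up for illustration.

```shell
#!/bin/sh
# Read `docker network inspect` JSON on stdin and print "name address"
# for every attached container. Relies on one key per line, as docker prints it.
list_overlay_endpoints() {
    awk -F'"' '
        /"Name":/        { name = $4 }          # remember the most recent Name
        /"IPv4Address":/ { print name, $4 }     # emit it with its address
    '
}

# On a host that is part of the overlay:
#   docker network inspect cluster-overlay-sub | list_overlay_endpoints
```

The network's own "Name" line is read as well but never printed, because no "IPv4Address" line follows it before the first container entry. Docker's --format option with a Go template, for example '{{range .Containers}}{{.Name}} {{.IPv4Address}}{{println}}{{end}}', should give a similar listing without awk.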