Single Evaluation Metric in Machine Learning

In machine learning we often face many candidate models and many performance metrics, and it is not obvious how to choose among the models. This article shows how to use a single evaluation metric to select a model. The content is mainly drawn from the deeplearning.ai video tutorials.

Single evaluation metric

Applying machine learning is an iterative process with three stages: idea, code, and experiment (looking at the results). When we first face a problem, we usually start with an idea, implement it in code, and then use the observed results to generate new ideas, modify the code, and repeat. The faster we can judge whether a change helped, the faster this loop runs, which is why a single evaluation metric is useful.

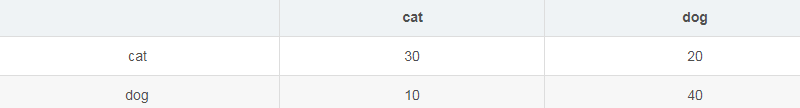

The following example illustrates precision and recall. Suppose we have a cat-versus-dog classifier and 100 images, 50 of cats and 50 of dogs, with the predictions summarized in a table.

The table is in fact a confusion matrix: the entries on its diagonal are the cases where the predicted label agrees with the true label. Here cats are the positive class and dogs are the negative class. Of the 50 cat images, 30 are correctly predicted as cats, which are true positives (TP), and 20 are predicted as dogs, which are false negatives (FN). Of the 50 dog images, 40 are correctly predicted as dogs, which are true negatives (TN), and 10 are predicted as cats, which are false positives (FP).
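The four counts can be reproduced from raw labels. The following is a minimal sketch, assuming the labels are plain strings; the helper name `confusion_counts` and the label lists are hypothetical and only rebuild the 100-image example described above.

```python
from collections import Counter


def confusion_counts(y_true, y_pred, positive="cat"):
    """Count TP, FN, FP and TN for a two-class problem."""
    counts = Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            # A real cat: predicted cat is a TP, predicted dog is an FN.
            counts["TP" if pred == positive else "FN"] += 1
        else:
            # A real dog: predicted cat is an FP, predicted dog is a TN.
            counts["FP" if pred == positive else "TN"] += 1
    return counts


# 50 cats (30 predicted cat, 20 predicted dog) followed by
# 50 dogs (40 predicted dog, 10 predicted cat).
y_true = ["cat"] * 50 + ["dog"] * 50
y_pred = ["cat"] * 30 + ["dog"] * 20 + ["dog"] * 40 + ["cat"] * 10

counts = confusion_counts(y_true, y_pred)
print(counts["TP"], counts["FN"], counts["FP"], counts["TN"])  # 30 20 10 40
```

Which class is treated as positive is a choice; swapping it to `"dog"` would swap the roles of TP and TN and of FP and FN.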

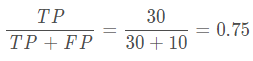

Precision: the fraction of images predicted as positive that are actually positive, calculated as

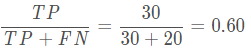

Recall: the fraction of actually positive images that the classifier finds, calculated as

$$\text{Recall} = \frac{TP}{TP + FN}$$

In other words, precision looks at everything we predicted as a given class and asks what proportion of those predictions are correct, so it measures how accurate the predictions are. Recall looks at everything that truly belongs to a given class and asks what proportion of it we found, so it measures how complete the predictions are. In the example above, precision is 30 / (30 + 10) = 75% and recall is 30 / (30 + 20) = 60%.
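The two definitions can be checked against the cat-and-dog counts. This is a minimal sketch; the function names are hypothetical, and returning 0.0 when the denominator is zero is an assumed convention rather than something the article specifies.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are truly positive."""
    denom = tp + fp
    return tp / denom if denom else 0.0  # assumed convention for an empty denominator


def recall(tp, fn):
    """Fraction of actual positives that were predicted positive."""
    denom = tp + fn
    return tp / denom if denom else 0.0  # assumed convention for an empty denominator


# Counts from the 100-image example (cat is the positive class).
tp, fn, fp, tn = 30, 20, 10, 40

p = precision(tp, fp)  # 30 / (30 + 10)
r = recall(tp, fn)     # 30 / (30 + 20)
print(f"precision = {p:.2f}, recall = {r:.2f}")  # precision = 0.75, recall = 0.60
```

Note that TN does not appear in either formula: both metrics only describe how the positive class is handled.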

Suppose our first cat-and-dog classifier, A, has a precision of 95% and a recall of 90%. After tuning the parameters and improving the algorithm and model, we obtain classifier B, which has a precision of 98% and a recall of 85%.

Now we face a problem: which classifier should we choose? Classifier B has higher precision than A, but classifier A has higher recall than B, so the two metrics disagree and it is unclear which classifier is better.

Single evaluation metric: in a situation like this, we want one metric on which to base the choice. Depending on the needs of your own system, you can pick either precision or recall as that metric. If you want to take both precision and recall into account, you can use the F1 score. Comparing the F1 scores of the two classifiers (about 92.4% for A and 91.0% for B), we can quickly see that classifier A is better than classifier B.

The F1 score is calculated as follows:

$$F_1 = \frac{2PR}{P + R}$$

where P is precision and R is recall. The F1 score balances precision and recall in a single number; it is the harmonic mean of the two.
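To make the comparison concrete, the sketch below applies the F1 formula to the precision and recall values quoted for classifiers A and B. The function name `f1` and the dictionary layout are hypothetical choices for illustration.

```python
def f1(p, r):
    """Harmonic mean of precision p and recall r."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# (precision, recall) for the two classifiers discussed in the article.
classifiers = {"A": (0.95, 0.90), "B": (0.98, 0.85)}

scores = {name: f1(p, r) for name, (p, r) in classifiers.items()}
best = max(scores, key=scores.get)

for name, score in scores.items():
    print(f"{name}: F1 = {score:.3f}")  # A: F1 = 0.924, then B: F1 = 0.910
print("best:", best)                    # best: A
```

Because the harmonic mean is pulled toward the smaller of its two inputs, B's lower recall costs it more than its higher precision gains.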

Sometimes a model has to be judged on several different metrics at once. In that case we can use the average of those metrics as the single score. If some metrics matter more than others, we can use a weighted average and increase the weights of those metrics to reflect their importance.
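A weighted average of this kind might look like the sketch below. The helper `weighted_score` and the weight values are assumptions chosen for illustration; the metric values are classifier A's precision and recall from earlier in the article.

```python
def weighted_score(metrics, weights):
    """Weighted average of the metric values, normalised by the total weight."""
    total_weight = sum(weights[name] for name in metrics)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(metrics[name] * weights[name] for name in metrics) / total_weight


metrics = {"precision": 0.95, "recall": 0.90}

# Equal weights give the plain average.
equal = weighted_score(metrics, {"precision": 1, "recall": 1})

# Hypothetical weighting that treats recall as three times as important.
recall_heavy = weighted_score(metrics, {"precision": 1, "recall": 3})

print(f"equal weights: {equal:.4f}")         # equal weights: 0.9250
print(f"recall-heavy:  {recall_heavy:.4f}")  # recall-heavy:  0.9125
```

Dividing by the total weight keeps the score on the same 0 to 1 scale as the individual metrics, so scores computed with different weightings stay comparable.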