This article walks through example code for an object-oriented, multi-threaded Python crawler that crawls Sohu pages. Readers who need something similar may find it a useful reference.

First, we need several packages: requests, lxml, bs4 (BeautifulSoup), pymongo and redis.

- Create a crawler class with four behaviors: fetching a page, parsing a page, extracting data from a page, and storing the data:

```python
class Spider(object):

    def __init__(self):
        # Status (whether the spider is currently working)
        self.status = SpiderStatus.IDLE

    # Fetch a page
    def fetch(self, current_url):
        pass

    # Parse a page
    def parse(self, html_page):
        pass

    # Extract data from a page
    def extract(self, html_page):
        pass

    # Store the extracted data
    def store(self, data_dict):
        pass
```

- Give the spider a status attribute that records whether it is currently crawling. The two states are wrapped in an enumeration. The `@unique` decorator ensures that no two members share a value. Both `unique` and `Enum` are imported from the `enum` module:

```python
from enum import Enum, unique


@unique
class SpiderStatus(Enum):
    IDLE = 0
    WORKING = 1
```
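The following check uses only the standard `enum` module to show what `@unique` adds. With the decorator in place, giving two members the same value raises a `ValueError` when the class is defined. Without it, the second member would silently become an alias of the first. `BrokenStatus` is a hypothetical example class.

```python
from enum import Enum, unique


@unique
class SpiderStatus(Enum):
    IDLE = 0
    WORKING = 1


def define_duplicate_enum():
    """Try to define an enum whose members share a value (hypothetical)."""
    try:
        @unique
        class BrokenStatus(Enum):
            IDLE = 0
            WORKING = 0  # duplicate value: @unique rejects the class
    except ValueError as error:
        return str(error)
    return None


# Members can be looked up by value
print(SpiderStatus(1) is SpiderStatus.WORKING)
# The error message produced by @unique for the duplicate definition
print(define_duplicate_enum())
```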

- Subclass `Thread` to create the crawler's worker thread:

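```python
from threading import Thread


class SpiderThread(Thread):

    def __init__(self, spider, tasks_queue):
        # daemon=True: these threads will not keep the program alive
        # once the main thread finishes
        super().__init__(daemon=True)
        self.spider = spider
        self.tasks_queue = tasks_queue

    def run(self):
        while True:
            pass
```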
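Subclassing `Thread` works because `start()` launches a new thread, and that thread calls the overridden `run()`. Here is a minimal, self-contained illustration. The `SquareThread` class is hypothetical and unrelated to crawling.

```python
from threading import Thread


class SquareThread(Thread):
    """Hypothetical worker: squares one number in its own thread."""

    def __init__(self, n):
        # daemon=True: the thread will not keep the interpreter alive on exit
        super().__init__(daemon=True)
        self.n = n
        self.result = None

    def run(self):
        # start() causes this method to run in the new thread
        self.result = self.n * self.n


threads = [SquareThread(n) for n in range(5)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for each worker to finish
results = [t.result for t in threads]
print(results)
```

`SpiderThread.run()` differs from this example because it loops forever. The main thread therefore cannot simply `join()` the workers. Instead, `main()` watches the queue and the spiders' status.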

- The basic structure of the crawler is now in place. Create the tasks in the `main()` function. `Queue` is imported from the `queue` module:

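```python
from queue import Queue
from time import sleep


def main():
    # A plain list (task_queue = []) could also be used, but it has no lock,
    # so the thread-safe Queue is the safer choice. Queue is first-in,
    # first-out (FIFO).
    task_queue = Queue()
    # Put the seed URL, the Sohu mobile site, into the queue
    task_queue.put('http://m.sohu.com/')
    # Choose how many threads to start
    spider_threads = [SpiderThread(Spider(), task_queue) for _ in range(10)]
    for spider_thread in spider_threads:
        spider_thread.start()
    # Keep the main thread running while the queue still has items
    # or any spider is still working
    while not task_queue.empty() or is_any_alive(spider_threads):
        sleep(1)  # poll once a second instead of spinning the CPU
    print('Over')
```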
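The polling loop in `main()` has a small race. A thread may take a URL off the queue and not yet have marked its spider as `WORKING`. During that gap the queue is empty and no spider is working, so the main thread can stop early.

A different approach, offered here as a swap-in rather than the article's method, is to rely on the queue's own bookkeeping. Each `get()` is matched by a `task_done()`, and `Queue.join()` blocks until every item that was ever put has been marked done. The sketch below replaces the HTTP crawl with a hypothetical `expand()` function that "discovers" child tasks, so it runs without a network.

```python
from queue import Queue
from threading import Lock, Thread


def expand(n):
    """Hypothetical stand-in for fetch + parse: task n yields 2n and 2n+1."""
    return [2 * n, 2 * n + 1] if n < 8 else []


def worker(tasks, done, lock):
    while True:
        n = tasks.get()
        try:
            with lock:
                done.append(n)
            for child in expand(n):
                # New work is put() before task_done(), so join() cannot
                # return while children are still pending
                tasks.put(child)
        finally:
            tasks.task_done()


def crawl(num_threads=4):
    tasks, done, lock = Queue(), [], Lock()
    tasks.put(1)  # seed task
    for _ in range(num_threads):
        Thread(target=worker, args=(tasks, done, lock), daemon=True).start()
    tasks.join()  # returns once every put() has a matching task_done()
    return sorted(done)


print(crawl())
```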

4-1. `is_any_alive()` checks whether any spider thread is still working, so we wrap that check in a function:

```python
def is_any_alive(spider_threads):
    # True if at least one spider is currently in the WORKING state
    return any(spider_thread.spider.status == SpiderStatus.WORKING
               for spider_thread in spider_threads)
```
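You can check `is_any_alive()` without starting any threads. Pass it stand-in objects that carry only a `spider.status` attribute. `types.SimpleNamespace` and the `fake_thread()` helper are used here purely for illustration.

```python
from enum import Enum, unique
from types import SimpleNamespace


@unique
class SpiderStatus(Enum):
    IDLE = 0
    WORKING = 1


def is_any_alive(spider_threads):
    # True if at least one spider is currently in the WORKING state
    return any(spider_thread.spider.status == SpiderStatus.WORKING
               for spider_thread in spider_threads)


def fake_thread(status):
    """Hypothetical stand-in for a SpiderThread: only spider.status is used."""
    return SimpleNamespace(spider=SimpleNamespace(status=status))


all_idle = [fake_thread(SpiderStatus.IDLE) for _ in range(3)]
one_working = all_idle + [fake_thread(SpiderStatus.WORKING)]
print(is_any_alive(all_idle), is_any_alive(one_working))
```

The check looks at each spider's status rather than at `Thread.is_alive()`. The daemon threads never leave their `while True` loop, so they would always count as alive.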

- With the skeleton complete, the next step is to fill in the crawler's code. Start with the `run()` method of `SpiderThread(Thread)`, which defines what each thread does once it has started:

```python
    def run(self):
        while True:
            # Get a URL from the queue (blocks until one is available)
            current_url = self.tasks_queue.get()
            visited_urls.add(current_url)
            # Mark the spider as WORKING
            self.spider.status = SpiderStatus.WORKING
            # Fetch the page
            html_page = self.spider.fetch(current_url)
            # Only continue if the page is not empty
            if html_page not in [None, '']:
                # Parse the page to get a list of links
                url_links = self.spider.parse(html_page)
                # Add the parsed links to self.tasks_queue. Queue has no
                # method for adding many items at once, so use a loop.
                for url_link in url_links:
                    self.tasks_queue.put(url_link)
            # Task finished: set the status back to IDLE
            self.spider.status = SpiderStatus.IDLE
```
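The `run()` loop repeats one cycle: get a URL, fetch it, parse it, and put the new links back. The single-threaded sketch below runs that cycle on its own. Instead of making HTTP requests, it crawls a hypothetical in-memory site, `FAKE_SITE`, a dict that maps each URL to the links on that page. `FakeSpider` and the site data are illustrative assumptions, and this loop stops when the queue is empty rather than running forever.

```python
from queue import Queue

# Hypothetical site: each URL maps to the links found on that page
FAKE_SITE = {
    'http://m.sohu.com/': ['http://m.sohu.com/a', 'http://m.sohu.com/b'],
    'http://m.sohu.com/a': ['http://m.sohu.com/b', 'http://m.sohu.com/c'],
    'http://m.sohu.com/b': ['http://m.sohu.com/'],
    'http://m.sohu.com/c': [],
}


class FakeSpider:
    """Hypothetical stand-in for Spider: reads FAKE_SITE, not the network."""

    def fetch(self, url):
        return url if url in FAKE_SITE else None  # the "page" is its URL

    def parse(self, html_page, visited_urls):
        return [link for link in FAKE_SITE[html_page]
                if link not in visited_urls]


def crawl(seed):
    spider, tasks, visited_urls, order = FakeSpider(), Queue(), set(), []
    tasks.put(seed)
    while not tasks.empty():
        current_url = tasks.get()
        if current_url in visited_urls:
            continue  # the same URL can be queued twice; skip repeats
        visited_urls.add(current_url)
        order.append(current_url)
        html_page = spider.fetch(current_url)
        if html_page:
            for url_link in spider.parse(html_page, visited_urls):
                tasks.put(url_link)
    return order


print(crawl('http://m.sohu.com/'))
```

The `if current_url in visited_urls` check is an addition to the original loop. Like the original `parse()`, this sketch filters links against pages already visited, not pages already queued. As a result, `/b` is queued twice here, and the second copy is skipped.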

- Now we can start filling in the four methods of the `Spider` class. First, write `fetch()`, which downloads a page:

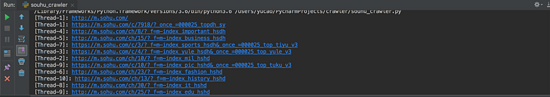

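```python
import requests
from threading import current_thread

# Inside class Spider:
    @Retry()
    def fetch(self, current_url, *, charsets=('utf-8', ),
              user_agent=None, proxies=None):
        thread_name = current_thread().name
        print(f'[{thread_name}]: {current_url}')
        headers = {'user-agent': user_agent} if user_agent else {}
        # timeout is an addition: without it a stalled server could block
        # this thread forever, and Retry would never get a chance to retry
        resp = requests.get(current_url, headers=headers,
                            proxies=proxies, timeout=10)
        # Only keep pages that returned status code 200
        return decode_page(resp.content, charsets) \
            if resp.status_code == 200 else None
```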

6-1. `decode_page()` is a decoding helper that we define outside the class:

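```python
import logging


def decode_page(page_bytes, charsets=('utf-8',)):
    page_html = None
    # Try each charset in order and stop at the first one that works
    for charset in charsets:
        try:
            page_html = page_bytes.decode(charset)
            break
        except UnicodeDecodeError as error:
            # This charset failed; fall through to the next one
            # logging.error('Decode: %s', error)
            pass
    return page_html
```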
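`charsets` is tried in order, so passing a fallback encoding lets the same helper handle pages that are not UTF-8. The check below uses a GBK-encoded string, chosen only as an example of a common Chinese encoding. It shows the fallback working, and it shows the `None` result when every charset fails.

```python
def decode_page(page_bytes, charsets=('utf-8',)):
    page_html = None
    # Try each charset in order and stop at the first one that works
    for charset in charsets:
        try:
            page_html = page_bytes.decode(charset)
            break
        except UnicodeDecodeError:
            pass  # this charset failed; try the next one
    return page_html


gbk_bytes = '搜狐'.encode('gbk')
print(decode_page(gbk_bytes))                    # UTF-8 alone fails
print(decode_page(gbk_bytes, ('utf-8', 'gbk')))  # falls back to GBK
```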

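6-2. `@Retry()` is a retry decorator. Because it needs to accept parameters, it is implemented as a class, which is why it is applied with parentheses as `@Retry()`. Define it before the `Spider` class, since the decorator is evaluated when the class body runs: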

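```python
import logging
from functools import wraps
from random import random
from time import sleep


# Retry decorator as a class: 3 attempts and a 5-second base wait by default
# (so no arguments are needed when decorating), plus the exception types
# that trigger a retry
class Retry(object):

    def __init__(self, *, retry_times=3, wait_secs=5, errors=(Exception, )):
        self.retry_times = retry_times
        self.wait_secs = wait_secs
        self.errors = errors

    # __call__ receives the function being decorated
    def __call__(self, fn):

        @wraps(fn)
        def wrapper(*args, **kwargs):
            for _ in range(self.retry_times):
                try:
                    return fn(*args, **kwargs)
                except self.errors as e:
                    # Log the error
                    logging.error(e)
                    # Wait at least self.wait_secs before retrying: a random
                    # delay between wait_secs and 2 * wait_secs
                    sleep((random() + 1) * self.wait_secs)
            return None

        # Return the wrapper itself, not the result of calling it
        return wrapper
```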
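You can check the decorator's behavior without waiting for real network errors. The block below is a self-contained copy of `Retry`, including the `return wrapper` fix. It is applied to a hypothetical `flaky()` function that fails twice before succeeding, and to a hypothetical `broken()` function that always fails. `wait_secs=0` is passed only so the demo does not sleep.

```python
import logging
from functools import wraps
from random import random
from time import sleep


class Retry(object):

    def __init__(self, *, retry_times=3, wait_secs=5, errors=(Exception, )):
        self.retry_times = retry_times
        self.wait_secs = wait_secs
        self.errors = errors

    def __call__(self, fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            for _ in range(self.retry_times):
                try:
                    return fn(*args, **kwargs)
                except self.errors as e:
                    logging.error(e)
                    # Random back-off between wait_secs and 2 * wait_secs
                    sleep((random() + 1) * self.wait_secs)
            return None  # every attempt failed
        return wrapper


calls = {'flaky': 0, 'broken': 0}


@Retry(wait_secs=0)
def flaky():
    """Hypothetical function: raises on the first two calls, then succeeds."""
    calls['flaky'] += 1
    if calls['flaky'] < 3:
        raise ConnectionError('temporary failure')
    return 'ok'


@Retry(retry_times=2, wait_secs=0)
def broken():
    """Hypothetical function that always fails."""
    calls['broken'] += 1
    raise ConnectionError('still down')


print(flaky(), calls['flaky'])    # succeeds on the third attempt
print(broken(), calls['broken'])  # gives up after two attempts
```

`errors` decides which exceptions are retried. With the default `(Exception, )`, a programming bug inside `fetch()` is retried just like a network error.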

- Next, write the page-parsing method, `parse()`:

```python
from urllib.parse import urlparse

from bs4 import BeautifulSoup

# Inside class Spider:
    # Parse a page and return the new links found on it
    def parse(self, html_page, *, domain='m.sohu.com'):
        soup = BeautifulSoup(html_page, 'lxml')
        url_links = []
        # Find every <a> tag inside <body> that has an href attribute
        for a_tag in soup.body.select('a[href]'):
            # Split the href value into its URL components
            parser = urlparse(a_tag.attrs['href'])
            # Relative links have no scheme or netloc; fill in defaults
            scheme = parser.scheme or 'http'
            netloc = parser.netloc or domain
            # Only crawl links under domain
            if scheme != 'javascript' and netloc == domain:
                path = parser.path
                query = '?' + parser.query if parser.query else ''
                full_url = f'{scheme}://{netloc}{path}{query}'
                if full_url not in visited_urls:
                    url_links.append(full_url)
        return url_links
```
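The link-filtering rule inside `parse()` can be tested on its own, without BeautifulSoup or a live page. The hypothetical `normalize_link()` below applies the same `urlparse`-based logic to a single `href` string. It returns the absolute URL for a link on `domain` and `None` for anything else.

```python
from urllib.parse import urlparse


def normalize_link(href, *, domain='m.sohu.com'):
    """Hypothetical helper: the filtering logic from parse() for one href."""
    parser = urlparse(href)
    # Relative links have no scheme or netloc, so fall back to defaults
    scheme = parser.scheme or 'http'
    netloc = parser.netloc or domain
    # Skip javascript: pseudo-links and links to other hosts
    if scheme == 'javascript' or netloc != domain:
        return None
    query = '?' + parser.query if parser.query else ''
    return f'{scheme}://{netloc}{parser.path}{query}'


for href in ['/a/123?x=1', '//m.sohu.com/n/1', 'https://m.sohu.com/ch/8',
             'https://www.sohu.com/', 'javascript:void(0)']:
    print(href, '->', normalize_link(href))
```

This rule has one weakness. A relative path without a leading slash, such as `news/1`, becomes `http://m.sohu.comnews/1` because no separator is inserted. `urllib.parse.urljoin(current_url, href)` resolves such links correctly.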

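7-1. `parse()` checks links against a `visited_urls` set so the same page is not crawled twice. In the `run()` method of `SpiderThread`, right after

```python
current_url = self.tasks_queue.get()
```

add the following line:

```python
visited_urls.add(current_url)
```

Then define the set once at module level, outside the classes:

```python
visited_urls = set()  # URLs already crawled, used for de-duplication
```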
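All ten threads share `visited_urls`. The "check" step (`full_url not in visited_urls` in `parse()`) and the "add" step (`visited_urls.add()` in `run()`) are separate, so they can interleave between threads. As a result, the same URL may be queued and fetched more than once. One possible remedy, sketched here as an assumption rather than as part of the original code, is to perform the check and the add together under a `threading.Lock`. `mark_visited()` is a hypothetical helper.

```python
from threading import Lock, Thread

visited_urls = set()
visited_lock = Lock()


def mark_visited(url):
    """Hypothetical helper: add url atomically; True only for the first caller."""
    with visited_lock:
        if url in visited_urls:
            return False
        visited_urls.add(url)
        return True


first_claims = []  # URLs that some thread claimed first


def claim_all(urls):
    for url in urls:
        if mark_visited(url):
            first_claims.append(url)  # list.append is thread-safe in CPython


urls = [f'http://m.sohu.com/n/{i}' for i in range(100)]
threads = [Thread(target=claim_all, args=(urls,)) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
# Each URL is claimed by exactly one thread
print(len(first_claims), len(set(first_claims)))
```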

- Now we can start crawling by calling `main()`.

Summary

The above is the example code for an object-oriented, multi-threaded Python crawler that crawls Sohu pages. We hope it helps; if you have any questions, please leave a message.