This post builds on the example from (08), a Java program that connects to Kafka, with a few changes.

1. Import the jar packages that Hadoop requires

Hadoop is installed on the server. After unpacking the Hadoop package, the required jars are in the following directories:

/usr/local/hadoop-2.7.3/share/hadoop/common

/usr/local/hadoop-2.7.3/share/hadoop/hdfs
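
If the jars are added outside an IDE, one possible approach is to collect them into a classpath string. The following is a minimal sketch rather than part of the original setup: `HadoopClasspath` and `build` are hypothetical names, and it only picks up jars directly inside each directory. Whether jars in subdirectories are also needed is not stated above.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper, not part of the original project.
public class HadoopClasspath {

    // Joins every *.jar found directly inside the given directories
    // into a single classpath string (subdirectories are not scanned).
    public static String build(String... dirs) throws IOException {
        List<String> jars = new ArrayList<>();
        for (String dir : dirs) {
            try (Stream<Path> files = Files.list(Paths.get(dir))) {
                jars.addAll(files
                        .filter(p -> p.getFileName().toString().endsWith(".jar"))
                        .map(Path::toString)
                        .sorted()
                        .collect(Collectors.toList()));
            }
        }
        return String.join(File.pathSeparator, jars);
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.out.println("usage: java HadoopClasspath <dir> [<dir> ...]");
            return;
        }
        System.out.println(build(args));
    }
}
```

Passing the two directories listed above as arguments would print a string that could be given to `java -cp`.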

2. Create the class that connects to Hadoop

    package demo;

    import java.io.OutputStream;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class HDFSUtils {

        private static FileSystem fs;

        // Initialize fs
        static {
            Configuration conf = new Configuration();
            conf.set("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER");
            conf.set("dfs.client.block.write.replace-datanode-on-failure.enable", "true");
            try {
                fs = FileSystem.get(new URI("hdfs://192.168.7.151:9000"), conf);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        /**
         * Writes the data that the consumer obtained from the topic to HDFS.
         * @param filename file on HDFS
         * @param data data to write
         */
        public static void sendToHDFS(String filename, String data) throws Exception {
            OutputStream out = null;
            if (!fs.exists(new Path(filename))) {
                // Create the file
                out = fs.create(new Path(filename));
            } else {
                // Append the new content
                out = fs.append(new Path(filename));
            }
            // Write the data to HDFS
            out.write(data.getBytes());
            out.close();
        }
    }
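
Note that `sendToHDFS` writes `data.getBytes()` as is, so the bytes depend on the platform default charset and consecutive messages are stored with no separator between them. If one record per line is preferred, a small encoding helper could be used. This is only a sketch under that assumption: `RecordEncoder` and `toRecord` are hypothetical names that do not appear in the original code.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the original project.
public class RecordEncoder {

    // Encodes one message as a UTF-8, newline-terminated record.
    public static byte[] toRecord(String data) {
        return (data + "\n").getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] record = toRecord("Message1");
        // Print the record back, decoded with the same charset.
        System.out.print(new String(record, StandardCharsets.UTF_8));
    }
}
```

In `sendToHDFS`, the call `out.write(data.getBytes())` could then become `out.write(RecordEncoder.toRecord(data))`.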

3. Create the producer class; it is the same as in (08), with no changes

    package demo;

    import java.util.Properties;
    import java.util.concurrent.TimeUnit;

    import kafka.javaapi.producer.Producer;
    import kafka.producer.KeyedMessage;
    import kafka.producer.ProducerConfig;
    import kafka.serializer.StringEncoder;

    public class ProducerDemo extends Thread {

        // The topic to send to
        private String topic;

        public ProducerDemo(String topic) {
            this.topic = topic;
        }

        // Send one message every 5 seconds
        public void run() {
            // Create a producer object
            Producer<String, String> producer = createProducer();
            // Send messages
            int i = 1;
            while (true) {
                String data = "Message" + i++;
                // Use the producer to send the message
                producer.send(new KeyedMessage<String, String>(this.topic, data));
                // Print what was sent
                System.out.println("sending data:" + data);
                try {
                    TimeUnit.SECONDS.sleep(5);
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }

        // Create the Producer instance
        private Producer<String, String> createProducer() {
            Properties prop = new Properties();
            // ZooKeeper addresses
            prop.put("zookeeper.connect", "192.168.7.151:2181,192.168.7.152:2181,192.168.7.153:2181");
            prop.put("serializer.class", StringEncoder.class.getName());
            // Broker addresses
            prop.put("metadata.broker.list", "192.168.7.151:9092,192.168.7.151:9093");
            return new Producer<String, String>(new ProducerConfig(prop));
        }

        public static void main(String[] args) {
            // Start the thread that sends messages
            new ProducerDemo("mydemo1").start();
        }
    }

4. Create the consumer class; lines 43-47 are new and write the consumed messages to Hadoop

     1 package demo;
     2
     3 import java.util.HashMap;
     4 import java.util.List;
     5 import java.util.Map;
     6 import java.util.Properties;
     7
     8
     9 import kafka.consumer.Consumer;
    10 import kafka.consumer.ConsumerConfig;
    11 import kafka.consumer.ConsumerIterator;
    12 import kafka.consumer.KafkaStream;
    13 import kafka.javaapi.consumer.ConsumerConnector;
    14
    15 public class ConsumerDemo extends Thread {
    16
    17     // The topic to consume from
    18     private String topic;
    19
    20     public ConsumerDemo(String topic) {
    21         this.topic = topic;
    22     }
    23
    24     public void run() {
    25         // Construct a consumer object
    26         ConsumerConnector consumer = createConsumer();
    27         // Construct a Map describing the topic
    28         // String: topic name; Integer: number of streams to create for the topic
    29         Map<String, Integer> topicCountMap = new HashMap<String, Integer>();
    30         // Request a single stream for this topic
    31         topicCountMap.put(this.topic, 1);
    32         // Construct the message streams (input streams)
    33         // String: topic name; List: the streams for that topic
    34         Map<String, List<KafkaStream<byte[], byte[]>>> messageStreams = consumer.createMessageStreams(topicCountMap);
    35         // Get the stream that delivers each received message
    36         KafkaStream<byte[], byte[]> stream = messageStreams.get(this.topic).get(0);
    37         ConsumerIterator<byte[], byte[]> iterator = stream.iterator();
    38         while (iterator.hasNext()) {
    39             String message = new String(iterator.next().message());
    40             System.out.println("Received data: " + message);
    41
    42             // Write the data to HDFS
    43             try {
    44                 HDFSUtils.sendToHDFS("/kafka/data.txt", message);
    45             } catch (Exception e) {
    46                 e.printStackTrace();
    47             }
    48
    49         }
    50     }
    51
    52     // Create the Consumer
    53     private ConsumerConnector createConsumer() {
    54         Properties prop = new Properties();
    55         // ZooKeeper addresses
    56         prop.put("zookeeper.connect", "192.168.7.151:2181,192.168.7.152:2181,192.168.7.153:2181");
    57         // The consumer group this consumer belongs to
    58         prop.put("group.id", "group1");
    59         // If this value is too small, the connection may time out
    60         prop.put("zookeeper.connection.timeout.ms", "60000");
    61         return Consumer.createJavaConsumerConnector(new ConsumerConfig(prop));
    62     }
    63
    64     public static void main(String[] args) {
    65         new ConsumerDemo("mydemo1").start();
    66     }
    67
    68 }
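
The consumer loop calls `HDFSUtils.sendToHDFS` once per message, so every message opens and closes its own HDFS output stream. If that becomes a bottleneck, messages could be buffered and written in batches. The following is a minimal sketch of that idea, not the original author's approach: `MessageBatcher`, `batchSize`, and `sink` are hypothetical names, and the sink is left abstract so the example runs without Hadoop.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical helper, not part of the original project.
public class MessageBatcher {

    private final int batchSize;
    private final Consumer<String> sink;
    private final List<String> buffer = new ArrayList<>();

    public MessageBatcher(int batchSize, Consumer<String> sink) {
        if (batchSize < 1) {
            throw new IllegalArgumentException("batchSize must be >= 1");
        }
        this.batchSize = batchSize;
        this.sink = sink;
    }

    // Buffers one message and flushes once batchSize messages are held.
    public void add(String message) {
        buffer.add(message);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    // Hands all buffered messages to the sink as newline-terminated lines.
    public void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        sink.accept(String.join("\n", buffer) + "\n");
        buffer.clear();
    }

    public static void main(String[] args) {
        // Stand-in sink that prints each batch instead of writing to HDFS.
        MessageBatcher batcher = new MessageBatcher(2, batch -> System.out.print(batch));
        batcher.add("Message1");
        batcher.add("Message2");
        batcher.add("Message3");
        batcher.flush();
    }
}
```

In `ConsumerDemo`, the sink would wrap the `sendToHDFS` call in its own try/catch, because that method throws a checked exception. `java.util.function.Consumer` would also have to be referenced by its full name there, since `kafka.consumer.Consumer` is already imported. The trade-off is that messages still in the buffer are lost if the process stops before `flush()` runs.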

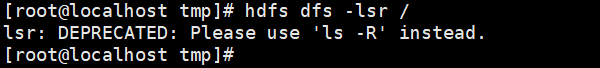

The code is now complete. Before the programs are started, Hadoop contains no data.

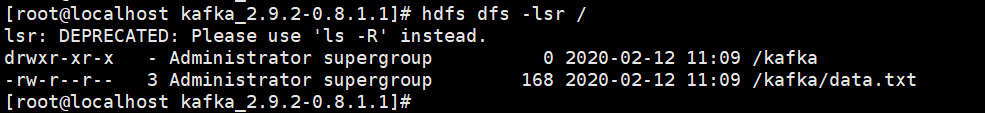

After starting the producer and consumer programs and checking again, the data has been written.

Run hdfs dfs -cat /kafka/data.txt to view the data; the file contains the messages that the consumer received.