Editor's Note: Netty is a well-known open-source Java networking library, characterized by high performance and scalability. Many popular frameworks are built on it, such as Dubbo, RocketMQ and Hadoop. This article discusses and analyzes Netty's threading model :)

I/O models

- BIO: the synchronous blocking I/O model.

- NIO: a "synchronous non-blocking" I/O model built on I/O multiplexing. In short, the kernel notifies the application that a read or write can be performed, and the application then performs the read or write itself.

- AIO: the asynchronous non-blocking I/O model. In short, for reads the kernel performs the read and notifies the application only when it has completed, so the application just works with the data; for writes the application submits the write and returns immediately, and the kernel queues and performs the write.

The difference between NIO and AIO is who performs the actual read and write operations: with NIO it is the application, with AIO it is the kernel.

Reactor and Proactor models

- Reactor: based on NIO; the application is notified when a read or write can be performed.

- Proactor: based on AIO; the application is notified when a read has completed, and for writes the application tells the kernel what to write.
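To make the Proactor side concrete, here is a minimal sketch using Java's NIO.2 asynchronous channels (`AsynchronousServerSocketChannel`, `CompletionHandler`). The class `ProactorSketch` and its `roundTrip` helper are hypothetical, and the demo talks to itself over loopback. It is Proactor at the API level: the read handler is only called once the data is already in the buffer. On Linux, the JDK implements these channels on top of epoll rather than kernel AIO.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousServerSocketChannel;
import java.nio.channels.AsynchronousSocketChannel;
import java.nio.channels.CompletionHandler;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

class ProactorSketch {

    /** Sends msg over loopback and returns what the server's read-completion handler received. */
    static String roundTrip(String msg) throws Exception {
        CompletableFuture<String> received = new CompletableFuture<>();
        try (AsynchronousServerSocketChannel server =
                     AsynchronousServerSocketChannel.open().bind(new InetSocketAddress("127.0.0.1", 0));
             AsynchronousSocketChannel client = AsynchronousSocketChannel.open()) {

            // Ask for an accept; the handler is invoked once the connection exists.
            server.accept(null, new CompletionHandler<AsynchronousSocketChannel, Void>() {
                @Override
                public void completed(AsynchronousSocketChannel conn, Void unused) {
                    ByteBuffer buf = ByteBuffer.allocate(1024);
                    // Proactor style: hand over the buffer; the handler runs only after
                    // the data has already been read into it.
                    conn.read(buf, buf, new CompletionHandler<Integer, ByteBuffer>() {
                        @Override
                        public void completed(Integer n, ByteBuffer b) {
                            b.flip();
                            received.complete(StandardCharsets.UTF_8.decode(b).toString());
                            closeQuietly(conn);
                        }

                        @Override
                        public void failed(Throwable t, ByteBuffer b) {
                            received.completeExceptionally(t);
                            closeQuietly(conn);
                        }
                    });
                }

                @Override
                public void failed(Throwable t, Void unused) {
                    received.completeExceptionally(t);
                }
            });

            client.connect(server.getLocalAddress()).get(5, TimeUnit.SECONDS);
            // The write is submitted too; get() only waits here to keep the demo sequential.
            client.write(ByteBuffer.wrap(msg.getBytes(StandardCharsets.UTF_8))).get(5, TimeUnit.SECONDS);
            return received.get(5, TimeUnit.SECONDS);
        }
    }

    private static void closeQuietly(AsynchronousSocketChannel ch) {
        try {
            ch.close();
        } catch (IOException ignored) {
            // nothing useful to do in a demo
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(roundTrip("hello proactor"));
    }
}
```

With a Reactor, by contrast, the application would be told "the socket is readable" and would then call `read` itself.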

Netty threading model

Netty's threading model is based on the Reactor model.

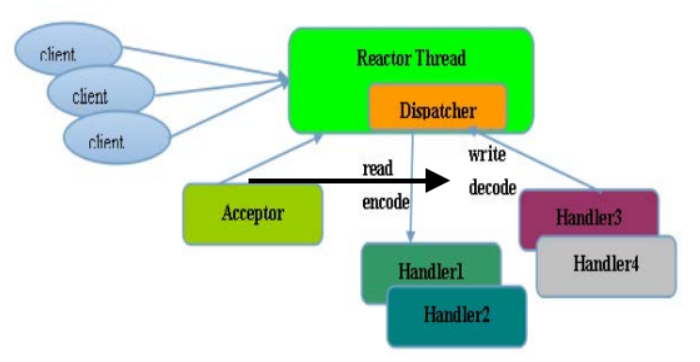

Reactor single-threaded model

The Reactor single-threaded model means that all I/O operations are completed on the same NIO thread. In this case the NIO thread is responsible for accepting new connection requests as well as performing read and write operations.

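In some low-load scenarios, the single-threaded model can be used (note that Redis also handles requests with a single-threaded model. Why is Redis so fast, then? Because its reads and writes are almost entirely in-memory operations, and the Redis protocol is simple, so serialization/deserialization costs little). However, it is unsuitable for high-load, high-concurrency scenarios, mainly for the following reasons: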

- Performance: a single NIO thread handling hundreds of connections cannot keep up. Even at 100% CPU load, it cannot encode, decode, read and send massive volumes of messages.

- When the NIO thread is overloaded, processing slows down, which causes many client connections to time out. Clients then tend to retransmit, which adds even more load to the NIO thread, eventually leading to a large message backlog and processing timeouts; the NIO thread becomes the system's performance bottleneck.

- Reliability: if the NIO thread dies unexpectedly or enters an infinite loop, the entire communication module becomes unavailable and can no longer receive or process external messages, causing the node to fail.
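A minimal sketch of the single-threaded Reactor in plain JDK NIO, assuming a trivial echo protocol and loopback testing (`SingleThreadReactor` and `echoOnce` are hypothetical names). The same thread accepts, reads, runs the "business" step and writes for every connection, which is exactly where the three problems above come from.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

/** One thread does everything: accept, read, "business" and write for every connection. */
class SingleThreadReactor implements Runnable {
    private final Selector selector = Selector.open();
    private final ServerSocketChannel server = ServerSocketChannel.open();

    SingleThreadReactor() throws IOException {
        server.bind(new InetSocketAddress("127.0.0.1", 0));
        server.configureBlocking(false);
        server.register(selector, SelectionKey.OP_ACCEPT);
    }

    int port() throws IOException {
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }

    @Override
    public void run() {
        try {
            while (!Thread.currentThread().isInterrupted()) {
                selector.select();
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    if (key.isAcceptable()) {
                        SocketChannel ch = server.accept(); // may be null for a non-blocking accept
                        if (ch != null) {
                            ch.configureBlocking(false);
                            ch.register(selector, SelectionKey.OP_READ, ByteBuffer.allocate(1024));
                        }
                    } else if (key.isReadable()) {
                        SocketChannel ch = (SocketChannel) key.channel();
                        ByteBuffer buf = (ByteBuffer) key.attachment();
                        buf.clear();
                        if (ch.read(buf) < 0) { // peer closed the connection
                            ch.close();
                            continue;
                        }
                        buf.flip();
                        ch.write(buf); // echo; any slow work here would stall every other client
                    }
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                selector.close();
                server.close();
            } catch (IOException ignored) {
                // shutting down anyway
            }
        }
    }

    /** Starts the reactor on a daemon thread, sends one message and returns the echo. */
    static String echoOnce(String msg) throws Exception {
        SingleThreadReactor reactor = new SingleThreadReactor();
        Thread loop = new Thread(reactor, "reactor");
        loop.setDaemon(true);
        loop.start();
        try (SocketChannel client = SocketChannel.open(new InetSocketAddress("127.0.0.1", reactor.port()))) {
            client.write(ByteBuffer.wrap(msg.getBytes(StandardCharsets.UTF_8)));
            ByteBuffer in = ByteBuffer.allocate(1024);
            client.read(in);
            in.flip();
            return StandardCharsets.UTF_8.decode(in).toString();
        } finally {
            loop.interrupt();
        }
    }
}
```

In Netty terms, this roughly corresponds to using one single-threaded event loop group for both accepting and I/O.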

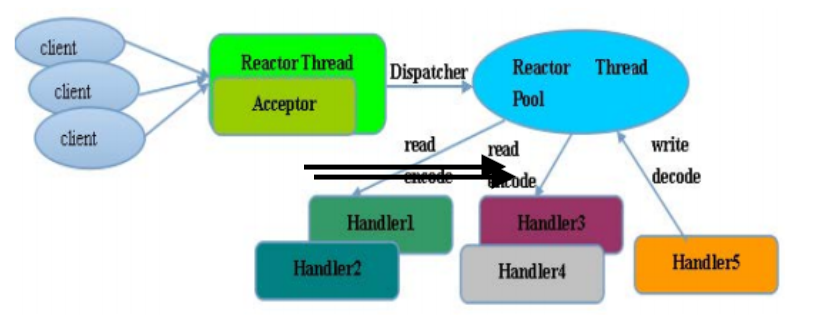

Reactor multi-threaded model

The biggest difference between the Reactor multi-threaded model and the single-threaded model is that a pool of NIO threads handles the read and write operations on connections, while a dedicated NIO thread handles accept. One NIO thread can handle the events of many connections, but the events of a given connection belong to exactly one NIO thread.
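The sketch below illustrates the multi-threaded model with plain JDK NIO: a dedicated acceptor thread plus a pool of I/O threads, each owning its own `Selector`. New connections are handed out round-robin and never move afterwards; replying with the worker's thread name makes that affinity visible. Handing a channel over through a queue plus `Selector.wakeup()`, so that `register()` runs on the owning thread, is one common way to do this and is an assumption here, as are all class and method names.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.*;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

/** One acceptor thread plus a pool of I/O threads; a connection never leaves its I/O thread. */
class MultiThreadReactor {

    /** One I/O thread: owns a Selector and every channel registered with it. */
    static final class Worker implements Runnable {
        final Selector selector;
        final Queue<SocketChannel> pending = new ConcurrentLinkedQueue<>();

        Worker() throws IOException {
            selector = Selector.open();
        }

        // Called by the acceptor: queue the channel and wake the selector, so that
        // register() itself runs on this worker's own thread.
        void assign(SocketChannel ch) {
            pending.add(ch);
            selector.wakeup();
        }

        @Override
        public void run() {
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    selector.select();
                    for (SocketChannel fresh; (fresh = pending.poll()) != null; ) {
                        fresh.configureBlocking(false);
                        fresh.register(selector, SelectionKey.OP_READ, ByteBuffer.allocate(1024));
                    }
                    Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                    while (it.hasNext()) {
                        SelectionKey key = it.next();
                        it.remove();
                        if (!key.isValid() || !key.isReadable()) continue;
                        SocketChannel ch = (SocketChannel) key.channel();
                        ByteBuffer buf = (ByteBuffer) key.attachment();
                        buf.clear();
                        int n = ch.read(buf);
                        if (n < 0) { ch.close(); continue; }
                        if (n == 0) continue;
                        // Reply with this thread's name to make the connection affinity visible.
                        String me = Thread.currentThread().getName();
                        ch.write(ByteBuffer.wrap(me.getBytes(StandardCharsets.UTF_8)));
                    }
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    private final ServerSocketChannel server;
    private final Worker[] workers;
    private int next; // only touched by the acceptor thread

    MultiThreadReactor(int nWorkers) throws IOException {
        server = ServerSocketChannel.open().bind(new InetSocketAddress("127.0.0.1", 0));
        workers = new Worker[nWorkers];
        for (int i = 0; i < nWorkers; i++) {
            workers[i] = new Worker();
            startDaemon(workers[i], "io-" + i);
        }
        startDaemon(this::acceptLoop, "acceptor");
    }

    private static void startDaemon(Runnable r, String name) {
        Thread t = new Thread(r, name);
        t.setDaemon(true);
        t.start();
    }

    // The acceptor only accepts (blocking is fine on a dedicated thread) and hands
    // each new connection to the next I/O thread in round-robin order.
    private void acceptLoop() {
        try {
            while (true) {
                SocketChannel ch = server.accept();
                workers[next].assign(ch);
                next = (next + 1) % workers.length;
            }
        } catch (IOException e) {
            // server socket closed: stop accepting
        }
    }

    int port() throws IOException {
        return ((InetSocketAddress) server.getLocalAddress()).getPort();
    }

    /** Client-side helper: sends one message and returns the reply (an I/O thread name). */
    static String ask(SocketChannel ch) throws IOException {
        ch.write(ByteBuffer.wrap("hi".getBytes(StandardCharsets.UTF_8)));
        ByteBuffer in = ByteBuffer.allocate(64);
        ch.read(in);
        in.flip();
        return StandardCharsets.UTF_8.decode(in).toString();
    }
}
```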

In most scenarios, the Reactor multi-threaded model meets the performance requirements. In some special scenarios, however, having a single NIO thread listen for and accept all client connections can become a performance problem. Examples include a million concurrent client connections, or a server that must perform security authentication during the client handshake, where the authentication itself is expensive. In such scenarios a single Acceptor thread may not keep up. To solve this, a third Reactor threading model was introduced: the master-slave Reactor multi-threaded model.

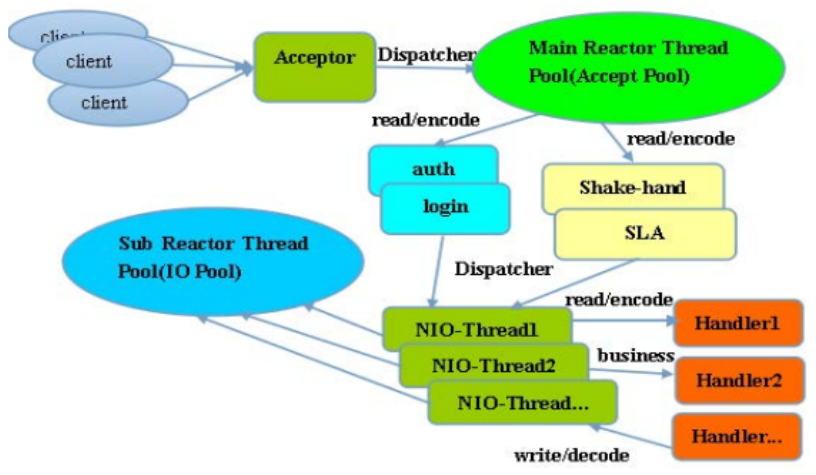

Reactor master-slave multi-threaded model

The defining feature of the master-slave Reactor model is that the server no longer uses a single NIO thread to accept client connections, but an independent pool of NIO threads. After the Acceptor receives a client's TCP connection request and finishes processing it (which may include access authentication), it registers the newly created SocketChannel with one I/O thread in the I/O thread pool (the sub-reactor pool), and that I/O thread is responsible for reading, writing, encoding and decoding on the SocketChannel. The Acceptor thread pool is used only for client login, handshake and security authentication; once the link is established, it is re-registered with an I/O thread in the sub-reactor pool, which then handles all subsequent I/O operations.
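Stripped of real sockets, the division of labour in the master-slave model can be sketched as follows. Assumptions: the handshake is simulated by a hypothetical `authenticate` method that sleeps and compares a token, the "main reactor" pool is an ordinary fixed thread pool, and each sub-reactor is a single-threaded executor; none of these are Netty classes.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.atomic.AtomicInteger;

/**
 * Main-reactor pool does the expensive handshake; the connection is then handed to
 * one single-threaded sub-reactor that would own all of its later I/O.
 */
class MasterSlaveSketch {
    private final ExecutorService mainReactors = Executors.newFixedThreadPool(2, named("main"));
    private final ExecutorService[] subReactors;
    private final AtomicInteger next = new AtomicInteger();

    MasterSlaveSketch(int nSub) {
        subReactors = new ExecutorService[nSub];
        for (int i = 0; i < nSub; i++) {
            subReactors[i] = Executors.newSingleThreadExecutor(named("sub" + i));
        }
    }

    /** Hypothetical stand-in for a costly login / security handshake check. */
    private static boolean authenticate(String token) {
        try {
            Thread.sleep(20); // pretend the check is expensive
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return "secret".equals(token);
    }

    /** Completes with the name of the sub-reactor thread that now owns the connection, or "rejected". */
    CompletableFuture<String> connect(String token) {
        return CompletableFuture
                .supplyAsync(() -> authenticate(token), mainReactors)
                .thenCompose(ok -> {
                    if (!ok) {
                        return CompletableFuture.completedFuture("rejected");
                    }
                    ExecutorService sub = subReactors[Math.floorMod(next.getAndIncrement(), subReactors.length)];
                    // From here on, every read/write for this connection would be submitted to 'sub'.
                    return CompletableFuture.supplyAsync(() -> Thread.currentThread().getName(), sub);
                });
    }

    private static ThreadFactory named(String prefix) {
        AtomicInteger n = new AtomicInteger();
        return r -> {
            Thread t = new Thread(r, prefix + "-" + n.getAndIncrement());
            t.setDaemon(true);
            return t;
        };
    }
}
```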

Thoughts on the Netty threading model

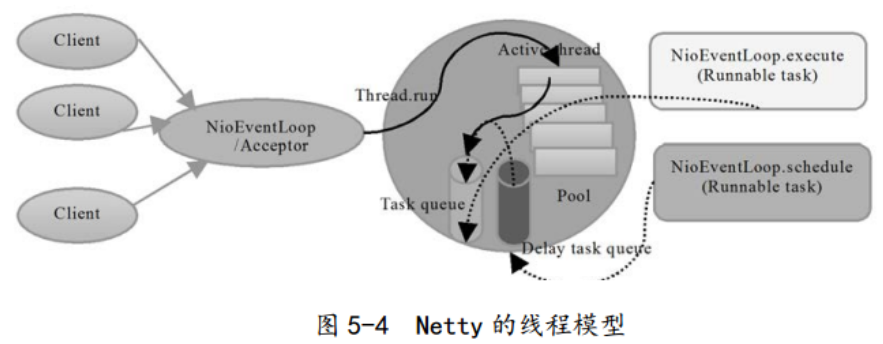

Netty's threading model is not fixed; it depends on the user's startup parameters. By setting different startup parameters, Netty can support both the Reactor single-threaded model and the multi-threaded model.

To maximize performance, Netty uses lock-free design in many places, for example executing serially inside the I/O thread to avoid the performance loss caused by multi-thread contention. On the surface, a serial design seems to underuse the CPU and offer too little concurrency. However, by tuning the NIO thread pool's parameters, several serialized threads can be started and run in parallel. This locally lock-free, serial thread design performs better than a model where one queue is shared by multiple worker threads. (Readers can use this approach as a reference when designing their own multi-threaded concurrent workflows.)

After Netty's NioEventLoop reads a message, it directly calls ChannelPipeline's fireChannelRead(Object msg). As long as the user does not explicitly switch threads, the NioEventLoop keeps calling into the user's ChannelHandlers without any thread switch. This serial processing avoids the lock contention caused by multi-threaded operation and is optimal from a performance standpoint.
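The "several serialized threads running in parallel" idea can be sketched without Netty: several single-threaded loops, each owning plain unsynchronized state, with every event for a given key routed to the same loop. `SerialShards` and its methods are hypothetical; the key plays the role of a channel bound to its event loop.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/**
 * N single-threaded loops, each owning plain (unsynchronized) state. Work for a key
 * always goes to the same loop, so the state is never shared and needs no lock.
 */
class SerialShards {
    private static final class Shard {
        final ExecutorService loop = Executors.newSingleThreadExecutor(r -> {
            Thread t = new Thread(r);
            t.setDaemon(true);
            return t;
        });
        final Map<String, Long> counts = new HashMap<>(); // touched only by 'loop'
    }

    private final Shard[] shards;

    SerialShards(int n) {
        shards = new Shard[n];
        for (int i = 0; i < n; i++) {
            shards[i] = new Shard();
        }
    }

    private Shard shardFor(String key) {
        return shards[Math.floorMod(key.hashCode(), shards.length)];
    }

    /** Safe to call from any thread: the update itself runs on the key's own loop. */
    void onEvent(String key) {
        Shard s = shardFor(key);
        s.loop.execute(() -> s.counts.merge(key, 1L, Long::sum));
    }

    /** Reads also run on the owning loop instead of taking a lock. */
    long count(String key) throws Exception {
        Shard s = shardFor(key);
        return s.loop.submit(() -> s.counts.getOrDefault(key, 0L)).get();
    }

    void shutdown() {
        for (Shard s : shards) {
            s.loop.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        SerialShards shards = new SerialShards(4);
        for (int i = 0; i < 1000; i++) {
            shards.onEvent("conn-" + (i % 10));
        }
        System.out.println(shards.count("conn-3")); // 100
        shards.shutdown();
    }
}
```

Parallelism comes from the number of shards, not from several threads sharing one structure.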

Netty has two NIO thread pools, bossGroup and workerGroup. The former handles new connection requests and then assigns each newly established connection, round-robin, to one NioEventLoop in workerGroup; all subsequent reads and writes on that connection are handled by that same NioEventLoop. Note that although bossGroup can be given multiple NioEventLoops (one thread per NioEventLoop), normally only one thread is actually used, because an application usually listens on only one external port.
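How a new connection is assigned round-robin to one NioEventLoop can be sketched as a small chooser. This is a simplified, hypothetical helper (`RoundRobinChooser`), not Netty's class, though Netty's default chooser follows the same idea and switches to a bit mask when the number of loops is a power of two.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Hands out event loops in round-robin order (illustrative helper, not Netty code). */
class RoundRobinChooser<T> {
    private final T[] loops;
    private final boolean powerOfTwo;
    private final AtomicLong idx = new AtomicLong();

    @SafeVarargs
    RoundRobinChooser(T... loops) {
        if (loops.length == 0) {
            throw new IllegalArgumentException("need at least one loop");
        }
        this.loops = loops.clone();
        this.powerOfTwo = (loops.length & (loops.length - 1)) == 0;
    }

    /** Called once per new connection; the connection then stays on the returned loop. */
    T next() {
        long i = idx.getAndIncrement();
        // For a power-of-two count, a bit mask gives the same result as the modulo, more cheaply.
        int slot = powerOfTwo ? (int) (i & (loops.length - 1)) : (int) Math.floorMod(i, (long) loops.length);
        return loops[slot];
    }

    public static void main(String[] args) {
        RoundRobinChooser<String> chooser = new RoundRobinChooser<>("loop-0", "loop-1", "loop-2");
        for (int conn = 0; conn < 5; conn++) {
            System.out.println("conn " + conn + " -> " + chooser.next());
        }
    }
}
```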

Now consider: why not use multiple threads to listen on the same external port, that is, have multiple threads call epoll_wait on the same epoll instance?

The two main epoll functions involved are epoll_wait and epoll_ctl. Before operating on one epoll instance from multiple threads, we first need to confirm that these functions are thread-safe. In short, epoll relies on locks for thread safety: the finest-grained lock, the spinlock ep->lock, protects the ready list, and the mutex ep->mtx protects epoll's core red-black tree data structure.

At this point some readers may think of how Nginx lets multiple processes listen on one port: Nginx relies on its accept_mutex mechanism. accept_mutex is Nginx's load-balancing lock for new connections; it lets worker processes take turns handling new client connections. When the number of connections held by a worker process reaches 7/8 of worker_connections (the configured maximum number of connections per worker process), that worker's chance of acquiring the accept lock drops sharply, which balances connections across worker processes. The accept lock was enabled by default before nginx 1.11.3 (newer versions default to off). With it turned off, new connections are handled with less delay, but connections are no longer balanced across worker processes and the "thundering herd" problem appears. Only when accept_mutex is enabled and the system does not support atomic locks is the accept lock implemented as a file lock. Note that failing to acquire accept_mutex does not block the current thread; it behaves like tryLock.
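The 7/8 rule can be made concrete with a small Java paraphrase. It assumes the back-off works the way nginx's event module computes it, roughly `ngx_accept_disabled = connection_n / 8 - free_connection_n`, where a positive value makes the worker skip competing for the lock for that many event-loop rounds. The class, its fields and the `ReentrantLock` standing in for the accept mutex are illustrative assumptions, not nginx code.

```java
import java.util.concurrent.locks.ReentrantLock;

/** Java paraphrase of the accept_mutex back-off; one instance models one worker process. */
class AcceptMutexSketch {
    static final ReentrantLock ACCEPT_MUTEX = new ReentrantLock(); // shared by all workers

    private final int workerConnections; // configured worker_connections
    private int free;                    // connection slots this worker still has free
    private int acceptDisabled;          // > 0: skip the accept lock for this many rounds

    AcceptMutexSketch(int workerConnections) {
        this.workerConnections = workerConnections;
        this.free = workerConnections;
    }

    /** After each accepted connection: positive once more than 7/8 of the slots are in use. */
    void onAccepted() {
        free--;
        acceptDisabled = workerConnections / 8 - free;
    }

    /** Start of one event-loop round: true if this worker may accept new connections now. */
    boolean tryAcceptTurn() {
        if (acceptDisabled > 0) {
            acceptDisabled--; // a busy worker sits this round out, letting others accept
            return false;
        }
        return ACCEPT_MUTEX.tryLock(); // never blocks, as the text notes
    }

    /** End of the round: give the lock back so another worker can take it. */
    void endRound() {
        if (ACCEPT_MUTEX.isHeldByCurrentThread()) {
            ACCEPT_MUTEX.unlock();
        }
    }

    int acceptDisabled() {
        return acceptDisabled;
    }
}
```

For example, with `worker_connections` = 80, the threshold is 80 / 8 = 10 free slots: after 70 connections the worker still competes, after 71 it skips one round.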

On modern Linux, several sockets can also listen on the same port at once; nginx supports this since 1.9.1. Linux 3.9 added the SO_REUSEPORT option, which allows multiple sockets to bind/listen on the same port. Each process can then open its own socket on that port; when a connection arrives, the kernel load-balances by waking only one of the listening processes to handle it. The reuseport mechanism thus effectively solves epoll's thundering herd problem.
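Java exposes this option as `StandardSocketOptions.SO_REUSEPORT` (JDK 9 and later). The sketch below, with a hypothetical `ReusePortSketch.bindTwice` helper, binds two listening sockets to one loopback port. The option must be set on every socket before `bind`, and the helper returns false on platforms that do not offer the option.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.StandardSocketOptions;
import java.nio.channels.ServerSocketChannel;

class ReusePortSketch {

    /** Binds two listening sockets to the same port; false if SO_REUSEPORT is unavailable. */
    static boolean bindTwice() throws IOException {
        try (ServerSocketChannel a = ServerSocketChannel.open();
             ServerSocketChannel b = ServerSocketChannel.open()) {
            if (!a.supportedOptions().contains(StandardSocketOptions.SO_REUSEPORT)) {
                return false;
            }
            // Every socket sharing the port must opt in, and it must do so before bind().
            a.setOption(StandardSocketOptions.SO_REUSEPORT, true);
            b.setOption(StandardSocketOptions.SO_REUSEPORT, true);
            a.bind(new InetSocketAddress("127.0.0.1", 0));
            int port = ((InetSocketAddress) a.getLocalAddress()).getPort();
            // Without SO_REUSEPORT this second bind would fail with a BindException.
            b.bind(new InetSocketAddress("127.0.0.1", port));
            return true;
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("two listeners on one port: " + bindTwice());
    }
}
```

In a real server, each listening socket would be owned by a different process or thread running its own accept loop.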

Back to the question raised above: can Java use multiple threads to listen on the same external port? Since the epoll functions are thread-safe, multiple threads can indeed call epoll_wait, but this is not recommended. Besides epoll's thundering herd problem, there is another issue: epoll is generally used in LT mode (level-triggered; the alternative is ET, edge-triggered. In LT mode, epoll_wait keeps reporting a connection event for as long as it has not been handled; in ET mode, epoll_wait reports it only once, when the event actually occurs). In LT mode, with multiple threads in epoll_wait, if the first thread has returned from epoll_wait but not yet finished handling the event, the second thread's epoll_wait also returns for the same event, which is clearly not what we want. A Java NIO test demo follows:

import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.Charset;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;

public class NioDemo {

    private static AtomicBoolean flag = new AtomicBoolean(true);

    public static void main(String[] args) throws Exception {
        ServerSocketChannel serverChannel = ServerSocketChannel.open();
        serverChannel.socket().bind(new InetSocketAddress(8080));
        // non-blocking I/O
        serverChannel.configureBlocking(false);
        Selector selector = Selector.open();
        serverChannel.register(selector, SelectionKey.OP_ACCEPT);

        // the same select loop is run by several threads on one Selector
        Runnable task = () -> {
            try {
                while (true) {
                    if (selector.select(0) == 0) {
                        System.out.println("selector.select loop... " + Thread.currentThread().getName());
                        Thread.sleep(1);
                        continue;
                    }
                    if (flag.compareAndSet(true, false)) {
                        System.out.println(Thread.currentThread().getName() + " over");
                        return;
                    }
                    Iterator<SelectionKey> iter = selector.selectedKeys().iterator();
                    while (iter.hasNext()) {
                        SelectionKey key = iter.next();
                        // accept event
                        if (key.isAcceptable()) {
                            handlerAccept(selector, key);
                        }
                        // socket (read) event
                        if (key.isReadable()) {
                            handlerRead(key);
                        }
                        // The Selector does not remove a SelectionKey from the selected-key set by itself;
                        // it must be removed manually once the channel has been handled.
                        // The next time the channel becomes ready, the Selector adds it to the set again.
                        iter.remove();
                    }
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        };

        List<Thread> threadList = new ArrayList<>();
        for (int i = 0; i < 2; i++) {
            Thread thread = new Thread(task);
            threadList.add(thread);
            thread.start();
        }
        for (Thread thread : threadList) {
            thread.join();
        }
        System.out.println("main end");
    }

    static void handlerAccept(Selector selector, SelectionKey key) throws Exception {
        System.out.println("coming a new client... " + Thread.currentThread().getName());
        // simulate slow handling: the connection is not accepted for 10 seconds
        Thread.sleep(10000);
        SocketChannel channel = ((ServerSocketChannel) key.channel()).accept();
        channel.configureBlocking(false);
        channel.register(selector, SelectionKey.OP_READ, ByteBuffer.allocate(1024));
    }

    static void handlerRead(SelectionKey key) throws Exception {
        SocketChannel channel = (SocketChannel) key.channel();
        ByteBuffer buffer = (ByteBuffer) key.attachment();
        buffer.clear();
        int num = channel.read(buffer);
        if (num <= 0) {
            // error or FIN
            System.out.println("close " + channel.getRemoteAddress());
            channel.close();
        } else {
            buffer.flip();
            String recv = Charset.forName("UTF-8").newDecoder().decode(buffer).toString();
            System.out.println("recv: " + recv);
            buffer = ByteBuffer.wrap(("server: " + recv).getBytes());
            channel.write(buffer);
        }
    }
}

Netty threading model in practice

(1) Handle simple business logic with controllable execution time directly on the I/O thread

If the business logic is very simple and takes very little time, needs no network interaction, disk access or database access, and does not wait on any other resource, it is recommended to execute it directly in the business ChannelHandler, without starting a business thread or thread pool. This avoids thread context switches, and there are no thread concurrency issues.

(2) Hand complex business logic with unpredictable execution time to a unified back-end business thread pool

Business logic that is complex or whose execution time is unpredictable should be handed to a unified back-end business thread pool. For this kind of business, do not start threads or thread pools directly inside the business ChannelHandler; instead, wrap the different kinds of business into a unified Task and post it to the back-end business thread pool. Putting too much business logic into ChannelHandlers hurts development efficiency and maintainability. Don't treat Netty as a business container: most complex business products still need to integrate or develop their own business container and keep a clean layered architecture on top of Netty.
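A minimal sketch of this advice, assuming a single-threaded executor stands in for the I/O thread and a fixed pool for the back-end business pool (`BusinessOffload`, `Task`, `onRead` and `slowBusinessLogic` are hypothetical). The I/O thread only wraps the request into a uniform `Task` and returns; the response is handed back to the I/O thread for writing.

```java
import java.util.Locale;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

/** I/O thread posts uniform Tasks to a business pool; responses go back to the I/O thread. */
class BusinessOffload {
    static final ExecutorService IO_LOOP = Executors.newSingleThreadExecutor(r -> daemon(r, "io-loop"));
    static final ExecutorService BIZ_POOL = Executors.newFixedThreadPool(4, r -> daemon(r, "biz"));

    private static Thread daemon(Runnable r, String name) {
        Thread t = new Thread(r, name);
        t.setDaemon(true);
        return t;
    }

    /** Uniform unit of work: the request plus how to write the response back. */
    static final class Task implements Runnable {
        private final String request;
        private final Consumer<String> writeBack;

        Task(String request, Consumer<String> writeBack) {
            this.request = request;
            this.writeBack = writeBack;
        }

        @Override
        public void run() {
            String response = slowBusinessLogic(request);      // runs on a biz thread
            IO_LOOP.execute(() -> writeBack.accept(response)); // reply goes back to the I/O thread
        }
    }

    /** Hypothetical business call, e.g. a database query or remote call. */
    static String slowBusinessLogic(String request) {
        try {
            Thread.sleep(50);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return request.toUpperCase(Locale.ROOT);
    }

    /** What a handler's read callback would do on the I/O thread: post and return at once. */
    static void onRead(String request, Consumer<String> writeBack) {
        BIZ_POOL.execute(new Task(request, writeBack));
    }
}
```

In a real Netty handler, the write-back would typically go through the channel, and Netty itself routes a write issued from a non-event-loop thread back to the channel's event loop.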

(3) Business threads should avoid operating on ChannelHandlers directly

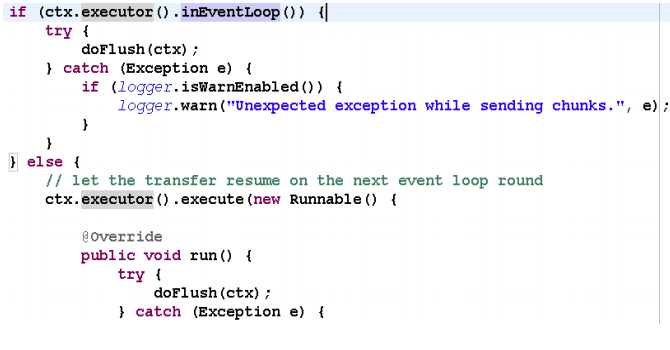

Business threads should avoid operating on a ChannelHandler directly. A ChannelHandler can be accessed by both the I/O thread and business threads, and since business code is usually multi-threaded, several threads may end up operating on the same ChannelHandler. To avoid multi-threading problems, follow the practice Netty itself uses: wrap the operation into a separate Task and let the NioEventLoop execute it, rather than operating on the handler directly from the business thread.

If you have confirmed that concurrent access to the data, or concurrent operation, is safe, there is no need for this; judge case by case based on the specific business scenario.
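The "wrap it as a Task for the event loop" pattern can be sketched with a tiny event loop of our own. Netty's real counterparts are `inEventLoop()` and `execute(Runnable)` on a channel's event loop; `MiniEventLoop` and `CountingHandler` below are hypothetical stand-ins. Because every mutation of the handler's counter runs on the loop thread, the field needs no lock, no `volatile` and no atomic.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Minimal single-threaded event loop with an inEventLoop() check (illustrative only). */
class MiniEventLoop implements Executor {
    private final ExecutorService delegate;
    private volatile Thread thread;

    MiniEventLoop() {
        delegate = Executors.newSingleThreadExecutor(r -> {
            Thread t = new Thread(r, "event-loop");
            t.setDaemon(true);
            thread = t; // remember which thread is "the loop"
            return t;
        });
    }

    boolean inEventLoop() {
        return Thread.currentThread() == thread;
    }

    @Override
    public void execute(Runnable task) {
        if (inEventLoop()) {
            task.run();             // already on the loop: run inline
        } else {
            delegate.execute(task); // any other thread: enqueue; the loop runs tasks one by one
        }
    }
}

/** Handler state that is only ever touched on its event loop, so it needs no lock. */
class CountingHandler {
    private final MiniEventLoop loop;
    private long messages; // deliberately plain: confined to the loop thread

    CountingHandler(MiniEventLoop loop) {
        this.loop = loop;
    }

    /** Safe to call from business threads: the mutation is posted as a task. */
    void onBusinessEvent() {
        loop.execute(() -> messages++);
    }

    long messages() throws Exception {
        CompletableFuture<Long> result = new CompletableFuture<>();
        loop.execute(() -> result.complete(messages));
        return result.get();
    }
}
```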

Follow [TopCoder] for more great articles.